Overview of Deep Bidirectional Language-Knowledge Graph Pretraining

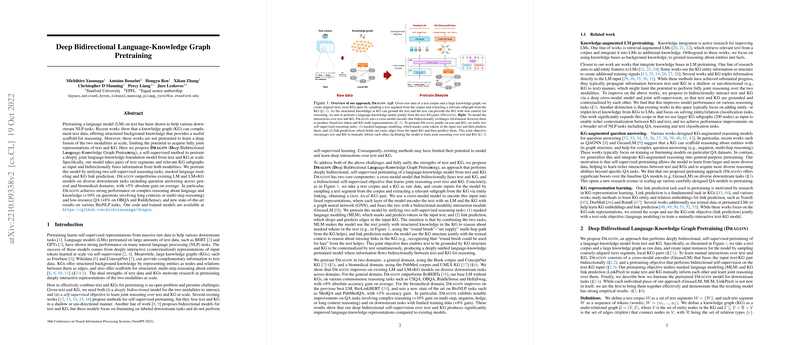

The paper presents an approach for pretraining models that unify language models (LMs) and knowledge graphs (KGs). It addresses two limitations of existing methods: they either treat the KG as a supplementary source, or they lack deep bidirectional fusion at large scale. The method, referred to as Dragon, uses bidirectional self-supervised pretraining that takes both text and a KG as input and produces joint representations, aiming to combine the structured knowledge of KGs with the contextual richness of text.

Methodology

Dragon introduces a self-supervised pretraining technique characterized by two core components: a cross-modal encoder and a bidirectional training objective.

- Cross-Modal Encoder: This component uses the GreaseLM architecture, which combines Transformers and graph neural networks (GNNs) to process text and KG data. Its stacked layers fuse text and graph representations, so information flows in both directions and the encoder outputs representations that reflect both modalities.

- Pretraining Objective: The model is trained on two self-supervised tasks: masked language modeling (MLM) on the text and link prediction on the KG. Optimizing both tasks jointly requires the model to ground text in KG structure and to enrich the KG with textual context, which supports joint reasoning. Using the KG's structure as a training signal during pretraining, rather than only as an input, is what sets Dragon apart from previous efforts.
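The two objectives above can be sketched as a single joint loss. The following is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a DistMult-style triple score, a log-sigmoid negative-sampling loss for link prediction, and a simple weighted sum of the two losses. All function names and the `link_weight` parameter are hypothetical, and the toy vectors stand in for outputs of the fused encoder.

```python
import math


def cross_entropy(logits, target):
    """Stable softmax cross-entropy for one position over the vocabulary."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_norm - logits[target]


def mlm_loss(logits_per_token, target_ids, masked_positions):
    """Mean cross-entropy over the masked token positions only."""
    losses = [cross_entropy(logits_per_token[i], target_ids[i])
              for i in masked_positions]
    return sum(losses) / len(losses)


def distmult_score(head, relation, tail):
    """Assumed DistMult-style score: sum_k head_k * relation_k * tail_k."""
    return sum(h * r * t for h, r, t in zip(head, relation, tail))


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def link_prediction_loss(positive, negatives):
    """Score a held-out edge high and corrupted triples low."""
    pos_term = -log_sigmoid(distmult_score(*positive))
    neg_terms = [-log_sigmoid(-distmult_score(*neg)) for neg in negatives]
    return pos_term + sum(neg_terms) / len(neg_terms)


def joint_loss(mlm, link, link_weight=1.0):
    """Combine both objectives; the weighting scheme is an assumption."""
    return mlm + link_weight * link


# Toy usage: two token positions over a 3-word vocabulary, position 1 masked.
logits = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]
targets = [0, 2]
text_loss = mlm_loss(logits, targets, masked_positions=[1])

# Toy entity and relation vectors (hypothetical stand-ins for encoder outputs).
head, rel, tail = [1.0, 0.5], [0.5, 1.0], [1.0, 1.0]
corrupted_tail = [-1.0, -1.0]
graph_loss = link_prediction_loss((head, rel, tail),
                                  [(head, rel, corrupted_tail)])

total = joint_loss(text_loss, graph_loss)
print(f"MLM {text_loss:.3f} + link prediction {graph_loss:.3f} = {total:.3f}")
```

Because both losses are computed from the same fused representations, gradients from the link prediction term also shape the text side and vice versa, which is the intuition behind the bidirectional objective described above.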

Results and Evaluation

The authors evaluated Dragon on general-domain tasks and on biomedical tasks. The model consistently outperformed existing baselines, including RoBERTa and state-of-the-art KG-augmented models (e.g., GreaseLM), across a suite of downstream tasks including question answering and commonsense reasoning benchmarks. Some specific highlights include:

- Dragon achieved a +5% average accuracy gain on general domain question-answering tasks compared to previous fused models.

- Significant improvements were noted in complex reasoning tasks involving extensive reasoning steps or indirect conclusions, suggesting superior integration of textual context with factual knowledge.

Dragon's capability also shone in low-resource environments, indicating promising data efficiency, and it proved scalable with increased model capacity, unlike its counterparts.

Implications and Future Directions

Practically, Dragon's approach is a meaningful step towards language models capable of complex reasoning, achieved by structurally combining text with entities and their relationships from KGs. Theoretically, it highlights the potential of bidirectional learning in models designed to process multimodal data. The method opens the way to more diverse uses of structured knowledge, with potential benefits in fields that require sophisticated reasoning, such as legal technology, scientific research, and educational tools.

Future research directions could include extending Dragon's methodology to language generation tasks, which would bridge the gap between understanding grounded by structured knowledge and the production of coherent, contextually rich language outputs. Another avenue is enhancing the pretraining architecture to handle more varied and dynamic forms of both text and graph data, potentially expanding Dragon's application scope even further. Such advancements would further align AI systems with human-like interpretative and reasoning abilities.

This paper substantiates the value of unifying deep symbolic reasoning through KGs with the representational power of LMs, illustrated by Dragon's marked benefits in reasoning-intensive applications.