Overview of "QA-GNN: Reasoning with Language Models and Knowledge Graphs for Question Answering"

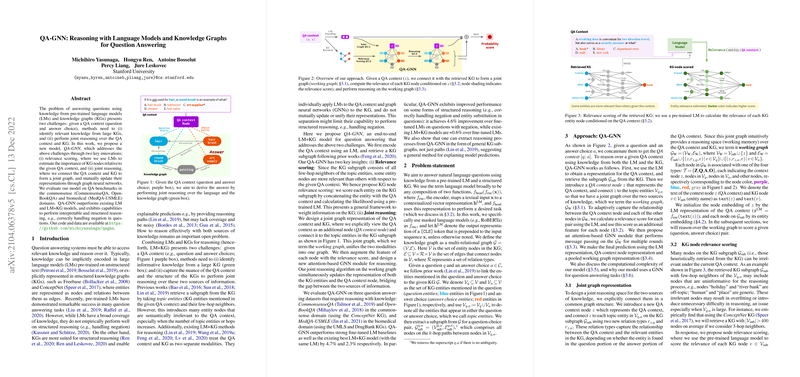

The paper introduces QA-GNN, a model for question answering (QA) that combines pre-trained language models (LMs) with knowledge graphs (KGs). Combining the two raises two challenges: identifying the relevant knowledge within a large KG, and reasoning jointly over the QA context and the KG. QA-GNN addresses them with two techniques, relevance scoring and joint reasoning, which are intended to improve both the interpretability and the structure of reasoning in QA systems.

Key Contributions

- Relevance Scoring: The model uses an LM to estimate how relevant each KG node is to the given QA context. These scores are used to trim superfluous nodes from the KG, so that reasoning focuses on information pertinent to the question.

- Joint Reasoning: QA-GNN connects a QA context node to the KG to form a unified graph, then updates the representations of both together with a graph neural network (GNN). This makes the reasoning more structured, particularly for questions that hinge on language nuances such as negation.
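The relevance-scoring step can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: `toy_relevance` is a hypothetical token-overlap scorer standing in for the LM, and `prune_by_relevance`, the `keep` cutoff, and the example nodes and relations are invented for this sketch rather than taken from the paper.

```python
from typing import Callable, Dict, List, Set, Tuple

Edge = Tuple[str, str, str]  # (head, relation, tail)


def toy_relevance(qa_context: str, node_text: str) -> float:
    """Hypothetical stand-in for an LM scorer: share of node tokens found in the QA context."""
    context_tokens = set(qa_context.lower().split())
    node_tokens = node_text.lower().split()
    if not node_tokens:
        return 0.0
    return sum(tok in context_tokens for tok in node_tokens) / len(node_tokens)


def prune_by_relevance(
    qa_context: str,
    nodes: List[str],
    edges: List[Edge],
    scorer: Callable[[str, str], float],
    keep: int,
) -> Tuple[Dict[str, float], Set[str], List[Edge]]:
    """Score every node against the QA context, keep the top `keep` nodes,
    and drop any edge that touches a removed node."""
    scores = {node: scorer(qa_context, node) for node in nodes}
    ranked = sorted(nodes, key=lambda node: scores[node], reverse=True)
    kept = set(ranked[:keep])
    kept_edges = [(h, r, t) for (h, r, t) in edges if h in kept and t in kept]
    return scores, kept, kept_edges


# Illustrative data only (not from the paper).
qa_context = "a revolving door is convenient for travel and serves as a security measure at a bank"
nodes = ["revolving door", "bank", "travel", "river", "holiday"]
edges = [
    ("revolving door", "at_location", "bank"),
    ("bank", "related_to", "river"),
    ("travel", "related_to", "holiday"),
]

scores, kept, kept_edges = prune_by_relevance(qa_context, nodes, edges, toy_relevance, keep=3)
print(sorted(kept))
print(kept_edges)
```

In a real system the scorer would be replaced by a call to a pre-trained LM that scores the QA context together with each node's text; the pruning logic around it would stay the same.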

Evaluation and Performance

QA-GNN is evaluated across multiple domains, on CommonsenseQA, OpenBookQA, and MedQA-USMLE, where it consistently outperforms existing LM and LM+KG models. For instance, on CommonsenseQA it improves on standard fine-tuned LMs by 4.7% and on the best existing LM+KG model by 2.3%. In the biomedical domain, it surpasses baseline LMs such as SapBERT, which supports its applicability across domains.

Technical Insights

- Joint Graph Representation: QA-GNN creates a joint 'working graph' encompassing both QA context and KG nodes. This graph structure allows the model to apply attention mechanisms and relevance scoring, integrating both sources into a coherent reasoning process.

- GNN Architecture: The GNN module conditions its attention on node type, relation type, and relevance score, which lets it process the joint graph and combine semantic information from the textual and structured sources.
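The two points above can be sketched in plain Python as a toy working graph plus one round of attention-weighted message passing. This is a sketch under stated assumptions, not the paper's parameterization: the function names, the `ctx_q` and `ctx_a` relation labels, the hand-set bias tables, and the 50/50 residual mix are all hypothetical, whereas a trained model would learn these quantities.

```python
import math
from typing import Dict, List, Tuple

Edge = Tuple[str, str, str]  # (source, relation, target)


def build_working_graph(kg_edges: List[Edge], question_entities: List[str],
                        answer_entities: List[str], context_node: str = "z") -> List[Edge]:
    """Add a QA context node and link it (both directions) to the topic entities."""
    edges = list(kg_edges)
    for relation, entities in (("ctx_q", question_entities), ("ctx_a", answer_entities)):
        for entity in entities:
            edges.append((context_node, relation, entity))
            edges.append((entity, relation, context_node))
    return edges


def softmax(logits: List[float]) -> List[float]:
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def message_passing_step(features: Dict[str, List[float]], edges: List[Edge],
                         node_type: Dict[str, str], relevance: Dict[str, float],
                         type_bias: Dict[str, float],
                         relation_bias: Dict[str, float]) -> Dict[str, List[float]]:
    """One round: each node mixes its own features with an attention-weighted
    average of its incoming neighbours. The attention logit combines feature
    similarity with the sender's node type, the edge relation, and the
    sender's relevance score (hand-set numbers here, learned in practice)."""
    incoming: Dict[str, List[Tuple[str, str]]] = {}
    for source, relation, target in edges:
        incoming.setdefault(target, []).append((source, relation))

    updated = {}
    for node, h in features.items():
        neighbours = incoming.get(node, [])
        if not neighbours:
            updated[node] = list(h)  # nothing to aggregate
            continue
        logits = [
            dot(h, features[s]) + type_bias.get(node_type[s], 0.0)
            + relation_bias.get(r, 0.0) + relevance[s]
            for s, r in neighbours
        ]
        weights = softmax(logits)
        aggregated = [
            sum(w * features[s][i] for w, (s, _) in zip(weights, neighbours))
            for i in range(len(h))
        ]
        updated[node] = [0.5 * own + 0.5 * agg for own, agg in zip(h, aggregated)]
    return updated


# Illustrative data only (not from the paper).
edges = build_working_graph([("door", "at_location", "bank")], ["door"], ["bank"])
features = {"z": [0.0, 0.0], "door": [1.0, 0.0], "bank": [0.0, 1.0]}
node_type = {"z": "context", "door": "question_entity", "bank": "answer_entity"}
relevance = {"z": 1.0, "door": 0.9, "bank": 0.4}
new_features = message_passing_step(features, edges, node_type, relevance, {}, {})
print(new_features["z"])
```

Because the context node `z` sits in the same graph as the KG entities, its representation is updated by the same step as theirs, which is the sense in which the reasoning is joint.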

Implications and Future Directions

QA-GNN has implications for building more nuanced and interpretable QA systems. By combining the strengths of LMs with structured reasoning over KGs, it opens avenues for tackling more complex reasoning tasks. Future research could extend the architecture to other NLP tasks or further refine how diverse sources of knowledge are integrated.

Conclusion

QA-GNN integrates LMs and KGs within a unified framework and achieves improved performance on tasks that require structured reasoning. Its relevance scoring and joint reasoning components highlight the potential for more integrated and interpretable QA systems.