Medical Knowledge-Augmented LLMs for Question Answering

The paper "MEG: Medical Knowledge-Augmented LLMs for Question Answering" proposes a way to enhance large language models (LLMs) by integrating structured, domain-specific knowledge into them. The integration relies on knowledge graph embeddings (KGEs) and targets a known weakness of LLMs: handling specialized fields such as medicine. The central contribution is MEG, a framework that incorporates medical knowledge graphs into LLMs to improve their performance on medical question answering (QA).

Key Contributions

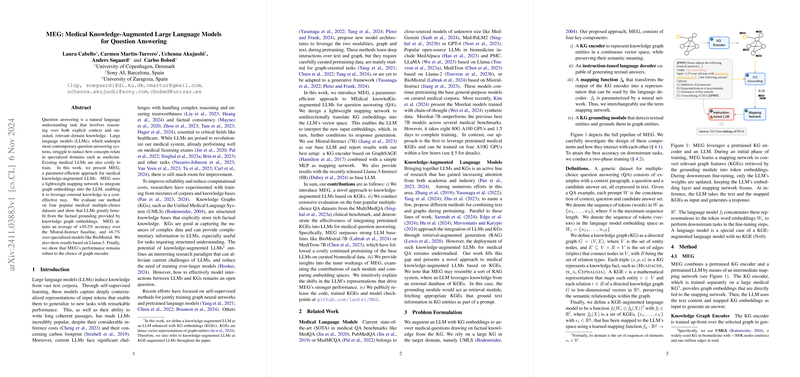

- Integration with Knowledge Graphs: The authors introduce a parameter-efficient method that maps knowledge graph embeddings into the LLM's embedding space. A lightweight mapping network performs this projection, allowing the LLM to interpret structured information from knowledge graphs such as the Unified Medical Language System (UMLS).

- Improved Performance on Medical QA: MEG improves substantially over baseline models on several challenging medical QA benchmarks, including MedQA, PubMedQA, and MedMCQA. For instance, it achieves an average of +10.2% accuracy over the Mistral-Instruct baseline across these benchmarks, showing the benefit of grounding LLMs in factual medical knowledge.

- Efficiency in Training: Unlike existing medical LLMs, which require resource-intensive domain-specific fine-tuning, MEG achieves substantial accuracy gains at lower computational cost. Training updates only a small fraction of the LLM's parameters, in part because the embedding layer stays frozen during fine-tuning.

- Evaluation with Graph Encoders: The work compares several types of graph encoders to determine which is most effective in this setup. GraphSAGE emerged as the preferred choice, suggesting that message passing matters for capturing the semantic richness of medical knowledge.

- Public Availability: To facilitate further research and reproducibility, the authors have open-sourced the code and pretrained models, making their findings accessible to the research community.
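The pipeline described in the bullets above (a graph encoder producing concept embeddings, then a lightweight network projecting them into the LLM's input space) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not MEG's implementation: the GraphSAGE layer uses the mean aggregator from the original GraphSAGE formulation, while `MappingNetwork`, `prepend_soft_token`, `mapping_param_count`, the two-layer MLP shape, the toy concept graph, the dimensions, and the choice to prepend the mapped vector as a single soft token are all hypothetical. Random weights stand in for trained parameters.

```python
import math
import random


def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def rand_matrix(rows, cols, rng):
    """Random weights standing in for trained parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def graphsage_mean_layer(feats, adj, w_self, w_neigh):
    """One GraphSAGE layer with a mean aggregator:
    h_v' = normalize(ReLU(W_self h_v + W_neigh * mean_{u in N(v)} h_u))."""
    out = {}
    for v, h_v in feats.items():
        nbrs = adj.get(v, [])
        if nbrs:
            mean = [sum(feats[u][i] for u in nbrs) / len(nbrs) for i in range(len(h_v))]
        else:
            mean = [0.0] * len(h_v)
        z = [max(0.0, a + b) for a, b in zip(matvec(w_self, h_v), matvec(w_neigh, mean))]
        norm = math.sqrt(sum(x * x for x in z)) or 1.0  # avoid dividing by zero
        out[v] = [x / norm for x in z]
    return out


def mapping_param_count(kge_dim, hidden_dim, llm_dim):
    """Weights plus biases of the hypothetical two-layer mapping MLP."""
    return kge_dim * hidden_dim + hidden_dim + hidden_dim * llm_dim + llm_dim


class MappingNetwork:
    """Hypothetical MLP projecting a KG embedding into the LLM embedding space."""

    def __init__(self, kge_dim, hidden_dim, llm_dim, rng):
        self.dims = (kge_dim, hidden_dim, llm_dim)
        self.w1, self.b1 = rand_matrix(hidden_dim, kge_dim, rng), [0.0] * hidden_dim
        self.w2, self.b2 = rand_matrix(llm_dim, hidden_dim, rng), [0.0] * llm_dim

    def __call__(self, kge):
        h = [max(0.0, a + b) for a, b in zip(matvec(self.w1, kge), self.b1)]  # ReLU
        return [a + b for a, b in zip(matvec(self.w2, h), self.b2)]

    def num_params(self):
        return mapping_param_count(*self.dims)


def prepend_soft_token(token_embeddings, kg_vector):
    """Place the mapped KG vector in front of the question's token embeddings."""
    return [kg_vector] + token_embeddings


rng = random.Random(0)
KGE_DIM, HIDDEN_DIM, LLM_DIM = 4, 6, 8  # toy sizes, not MEG's
concepts = ["aspirin", "fever", "ibuprofen", "inflammation"]  # toy graph
feats = {c: [rng.uniform(-1, 1) for _ in range(KGE_DIM)] for c in concepts}
adj = {
    "aspirin": ["fever", "inflammation"],
    "ibuprofen": ["fever", "inflammation"],
    "fever": ["aspirin", "ibuprofen"],
    "inflammation": ["aspirin", "ibuprofen"],
}
kge = graphsage_mean_layer(
    feats, adj, rand_matrix(KGE_DIM, KGE_DIM, rng), rand_matrix(KGE_DIM, KGE_DIM, rng)
)
mapper = MappingNetwork(KGE_DIM, HIDDEN_DIM, LLM_DIM, rng)
question = [[0.0] * LLM_DIM for _ in range(5)]  # stand-in for 5 token embeddings
seq = prepend_soft_token(question, mapper(kge["aspirin"]))
print(len(seq), len(seq[0]), mapper.num_params())
```

With the toy sizes the script prints `6 8 86`: five question tokens plus one knowledge token, each of dimension 8, and 86 mapping-network parameters. At larger but still hypothetical sizes, `mapping_param_count(256, 1024, 4096)` gives 4,461,568 weights, well under 0.1% of a 7B-parameter LLM, which illustrates why training only a small mapping network is cheap compared with full domain-specific fine-tuning.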

Implications and Future Directions

Integrating KGEs into LLMs has significant implications for AI, particularly in domains that require an understanding of highly specialized, structured knowledge. The approach improves accuracy while reducing the need for costly domain-specific pretraining. In practice, grounding models in domain-specific knowledge could make LLMs considerably more useful in fields like healthcare, where accuracy and trustworthiness are paramount.

Future work could apply MEG to other specialized domains by using different knowledge graphs. Chain-of-thought (CoT) reasoning, explored in parallel work on language modeling, could further strengthen the model's decisions by encouraging step-wise reasoning. Researchers might also explore deeper interactions between LLMs and KGs, beyond embedding mappings, through more complex architectures or alternative training objectives.

In conclusion, the paper is a significant step toward integrating structured domain knowledge with LLMs, paving the way for more specialized, efficient, and effective NLP systems in critical fields. MEG's results point to further advances and applications across other specialized domains.