Insightful Overview of "Re2G: Retrieve, Rerank, Generate"

The paper "Re2G: Retrieve, Rerank, Generate" advances retrieval-augmented generation in NLP. It builds on earlier models such as REALM and RAG, which integrate retrieval into conditional generation, and introduces a method called Re2G. The method draws on non-parametric knowledge bases to expand the information a model can access without the linear growth in computational resources that large parametric models such as GPT-3 or T5 typically require.

Methodology and Innovations

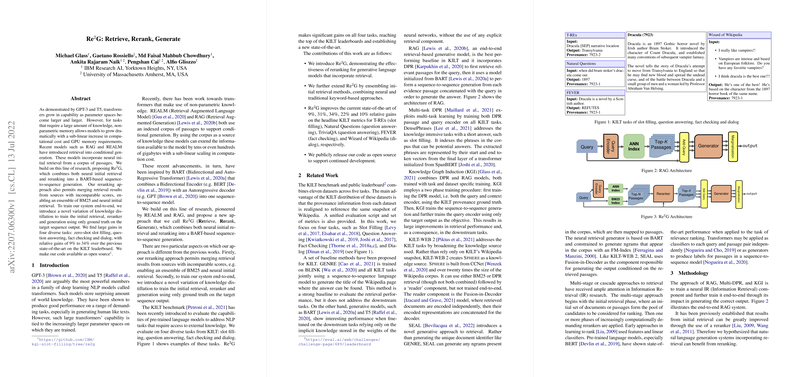

The approach extends the retrieval-based generation pipeline with two additions: neural reranking and ensembling of retrieval methods. In particular, Re2G consists of the following elements:

- Neural Initial Retrieval and Reranking: Re2G combines neural initial retrieval with an additional reranking stage and passes the reranked passages to a BART-based sequence-to-sequence generator. The reranker is an interaction model based on BERT. Because the reranker rescores every candidate itself, it can merge results from sources whose scores are not directly comparable, which makes an ensemble of BM25 and neural retrieval possible.

- Innovative Training Regimen: Re2G introduces a novel variant of knowledge distillation. It is used to train the retrieval components and the generative model end to end using only ground truth on the output sequence, a marked departure from approaches that need ground-truth provenance at the intermediate stages.

- Empirical Rigour and Results: Re2G shows substantial improvements on four diverse tasks from the KILT leaderboard (zero-shot slot filling, question answering, fact verification, and dialog generation), with relative gains of 9% to 34% over the previous state of the art.
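The merge-then-rerank idea from the first bullet can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: `Passage`, `merge_candidates`, `toy_interaction_score`, and `rerank` are hypothetical names, and the token-overlap scorer merely stands in for the BERT-based interaction model. The point it shows is that the retrievers' own scores are discarded and a single scorer ranks the pooled candidates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    pid: str
    text: str


def merge_candidates(*ranked_lists):
    """Pool candidates from several retrievers, dropping duplicates by id.

    The retrievers' own scores are ignored on purpose: BM25 scores and
    dense-retrieval scores live on different scales and are not comparable.
    """
    seen = set()
    merged = []
    for ranked in ranked_lists:
        for passage in ranked:
            if passage.pid not in seen:
                seen.add(passage.pid)
                merged.append(passage)
    return merged


def toy_interaction_score(query, passage):
    """Placeholder scorer (assumption): fraction of query tokens in the passage.

    A real system would score the (query, passage) pair with a trained model.
    """
    q = set(query.lower().split())
    p = set(passage.text.lower().split())
    return len(q & p) / len(q) if q else 0.0


def rerank(query, candidates, score_fn, top_k):
    """Score every pooled candidate with one model and keep the best top_k."""
    scored = [(score_fn(query, p), p) for p in candidates]
    # sort() is stable, so ties keep their order from the merged pool.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:top_k]]


# Toy data standing in for the output of a BM25 index and a dense retriever.
bm25_hits = [
    Passage("p1", "Paris is the capital of France"),
    Passage("p2", "France borders Spain"),
]
dense_hits = [
    Passage("p3", "The capital city of France is Paris"),
    Passage("p1", "Paris is the capital of France"),
]

query = "capital of France"
merged = merge_candidates(bm25_hits, dense_hits)
top = rerank(query, merged, toy_interaction_score, top_k=2)
print([p.pid for p in top])
```

In a full pipeline the passages in `top` would be handed to the generator; swapping `toy_interaction_score` for a learned scorer leaves the rest of the sketch unchanged.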

Implications and Future Directions

The implications of Re2G are both practical and theoretical. On the practical side, the substantial gains in performance suggest applications in domains that require precise synthesis of information from large corpora, such as automated knowledge extraction or advanced chatbots. On the theoretical side, the reranking stage opens avenues for studying how models should balance evidence from multiple retrieval strategies, which could lead to a new line of inquiry into ensemble learning within LLMs.

One direction for future work is scaling this paradigm to real-world applications by evaluating the Re2G framework on broader datasets outside the controlled environment of KILT. Furthermore, given the significant improvement observed with the ensemble approach, there is scope to examine its adaptability beyond NLP, possibly influencing retrieval strategies in multi-modal AI systems.

Conclusion

In Re2G, the combination of retrieval, reranking, and generation yields a notable advance in the effectiveness of retrieval-augmented language models. By applying neural reranking and ensembling different retrieval strategies, this research mitigates common bottlenecks associated with heavy parameter growth and presents a scalable solution that makes Re2G promising for both current and emerging AI applications. The open-source release of the code should further support continued exploration and innovation in this area.