Overview of MVP: Multi-task Supervised Pre-training for Natural Language Generation

The paper presents MVP, a multi-task supervised pre-training model for natural language generation (NLG). Most pre-trained language models rely on unsupervised pre-training over large-scale corpora; MVP instead builds on the growing success of supervised pre-training, which trains on labeled data.

MVP Structure and Methodology

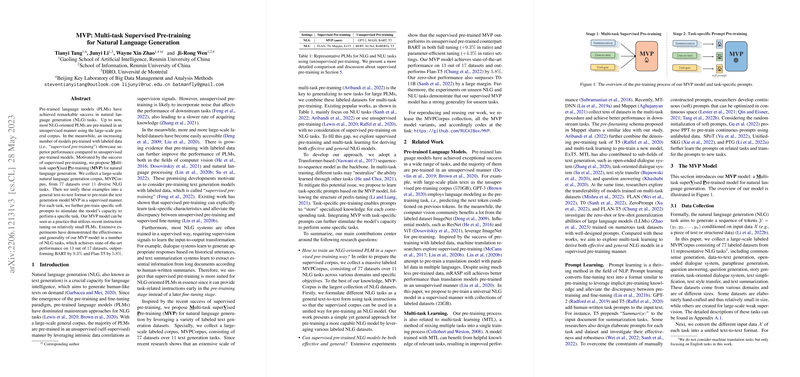

MVP rests on the premise that supervised pre-training can provide task-specific knowledge more effectively than unsupervised methods. The authors assemble a large labeled corpus, MVPCorpus, from 77 datasets covering 11 diverse NLG tasks, and convert every example into a unified text-to-text format for pre-training.
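To make the idea of a unified text-to-text format concrete, here is a minimal sketch of how examples from different tasks might be mapped to plain source and target strings. The instruction strings, the `TextToTextExample` type, and the `to_text_to_text` helper are all hypothetical names chosen for illustration; they are not taken from the paper or from the released MVPCorpus.

```python
from dataclasses import dataclass
from typing import Mapping, Union

# Hypothetical instruction strings, for illustration only.
TASK_INSTRUCTIONS = {
    "summarization": "Summarize:",
    "question_generation": "Generate the question:",
    "data_to_text": "Describe the following data:",
}


@dataclass
class TextToTextExample:
    task: str
    source: str  # what the encoder reads
    target: str  # what the decoder should produce


def to_text_to_text(
    task: str, fields: Union[str, Mapping[str, str]], target: str
) -> TextToTextExample:
    """Flatten one labeled example into a (source, target) string pair."""
    if task not in TASK_INSTRUCTIONS:
        raise KeyError(f"no instruction defined for task {task!r}")
    if isinstance(fields, str):
        body = fields
    else:
        # Structured inputs are linearized as "key: value" pairs.
        body = " | ".join(f"{key}: {value}" for key, value in fields.items())
    return TextToTextExample(task, f"{TASK_INSTRUCTIONS[task]} {body}", target)


if __name__ == "__main__":
    print(to_text_to_text("summarization", "A long article.", "Short."))
    print(
        to_text_to_text(
            "data_to_text",
            {"name": "Aromi", "food": "Chinese"},
            "Aromi serves Chinese food.",
        )
    )
```

Once every task is reduced to string pairs like these, a single sequence-to-sequence model can be trained on the mixture without task-specific input or output layers.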

The model uses a Transformer-based architecture extended with task-specific prompts, which are learned with techniques such as prefix-tuning and store knowledge specific to each task. This design is intended to mitigate neutralization across tasks, an issue that often arises in multi-task learning.
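The prompt mechanism can be illustrated with a small sketch of prefix-style attention: each task owns a short list of extra key and value vectors that are prepended to the regular keys and values, so the shared attention computation is steered differently per task. This is a simplified, single-head, pure-Python illustration under assumed names (`TaskPrefixes`, `attend_with_prefix`) and random prefix values; it is not the authors' implementation, and in practice the prefixes would be trained rather than sampled.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


class TaskPrefixes:
    """Hypothetical container: one set of prefix key/value vectors per task."""

    def __init__(self, tasks, prefix_len, dim, seed=0):
        rng = random.Random(seed)

        def rand_matrix():
            # Stand-in for learned parameters; real prefixes would be trained.
            return [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(prefix_len)]

        self.prefixes = {
            task: {"keys": rand_matrix(), "values": rand_matrix()} for task in tasks
        }

    def attend_with_prefix(self, task, query, keys, values):
        """Prepend the task's prefix vectors, then run ordinary attention."""
        prefix = self.prefixes[task]
        return attend(query, prefix["keys"] + keys, prefix["values"] + values)


if __name__ == "__main__":
    store = TaskPrefixes(["summarization", "dialogue"], prefix_len=2, dim=4)
    query = [0.1, 0.2, 0.3, 0.4]
    keys = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
    values = [[1.0, 1.0, 1.0, 1.0], [2.0, 2.0, 2.0, 2.0]]
    for task in ("summarization", "dialogue"):
        print(task, store.attend_with_prefix(task, query, keys, values))
```

The same query, keys, and values yield different outputs depending on which task's prefix is attached, which is how per-task knowledge can be kept apart while the backbone parameters are shared.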

Experimental Results

MVP performs strongly across multiple benchmarks. It achieves state-of-the-art results on 13 out of 17 datasets, outperforming existing models such as BART by 9.3% and Flan-T5 by 5.8%. These gains suggest that supervised pre-training captures knowledge that is useful across a variety of NLG tasks.

Implications and Future Directions

These results support the applicability of supervised pre-training to NLG and potentially challenge the current dominance of unsupervised pre-training. The authors have open-sourced MVPCorpus and their models, so other researchers can explore and extend the approach.

Future work may extend this methodology to multilingual tasks and explore how well task knowledge transfers across languages. Investigating how tasks relate to and interact with one another in a multi-task setting could also provide deeper insight into the dynamics of supervised pre-training.

In conclusion, MVP advances NLG by making effective use of labeled datasets to achieve strong results, and it opens promising avenues for further research.