Cross-Lingual Natural Language Generation via Pre-Training

The paper "Cross-Lingual Natural Language Generation via Pre-Training," by Zewen Chi et al., addresses the problem of transferring the supervision signals of natural language generation (NLG) tasks across languages. Specifically, it asks how an NLG model trained with supervision in a high-resource language (e.g., English) can be extended to lower-resource languages without direct supervision in those languages.

Methodology

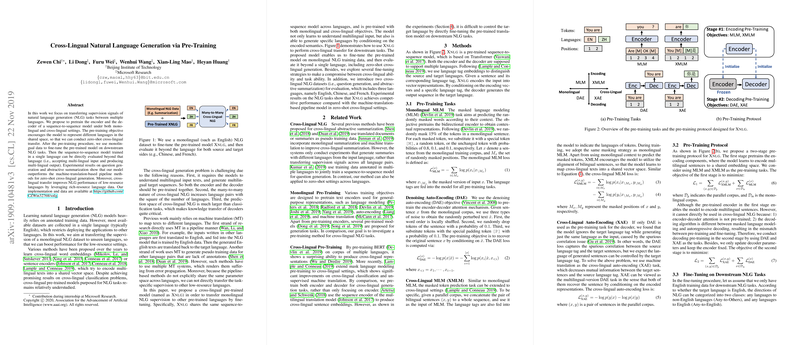

The authors propose Xnlg, a cross-lingual pre-trained model with both an encoder and a decoder. Xnlg is pre-trained with monolingual and cross-lingual objectives and then fine-tuned on downstream NLG tasks. Pre-training uses four objectives:

- Monolingual Masked Language Modeling (MLM): Similar to BERT's pre-training objective, MLM predicts masked tokens in monolingual text and helps the model learn rich contextual representations.

- Denoising Auto-Encoding (DAE): This objective assists in pre-training the encoder-decoder attention by reconstructing sentences from perturbed inputs.

- Cross-Lingual MLM (XMLM): XMLM extends MLM to parallel corpora by masking tokens in a concatenated translation pair, training the model to align the semantics of both languages in a shared representation space.

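- Cross-Lingual Auto-Encoding (XAE): Essentially a machine translation objective, XAE trains the decoder to generate a sentence in a language different from that of the input. This supports language transfer and removes the spurious correlation, which same-language objectives such as DAE can induce, between the source language and the language of the generated sentence.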
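The four objectives differ mainly in how each training example is built from monolingual or parallel text. The Python sketch below illustrates that difference on toy tokenized sentences; it is not the authors' implementation. Assumptions: whitespace tokens, a simplified masking rule without BERT's 80/10/10 replacement split, a hypothetical `add_noise` function (random word dropping plus local shuffling) standing in for the paper's actual perturbation, and a plain `[SEP]` separator for XMLM pairs in place of whatever position or language embeddings the real model uses.

```python
import random
from typing import List, Optional, Tuple

MASK, SEP = "[MASK]", "[SEP]"
Tokens = List[str]


def mask_tokens(tokens: Tokens, rng: random.Random,
                mask_prob: float = 0.15) -> Tuple[Tokens, List[Optional[str]]]:
    """Simplified BERT-style masking (no 80/10/10 split).

    Each non-separator token is replaced by [MASK] with probability
    mask_prob. Labels hold the original token at masked positions and
    None elsewhere (no loss is computed there).
    """
    inputs: Tokens = []
    labels: List[Optional[str]] = []
    for tok in tokens:
        if tok != SEP and rng.random() < mask_prob:
            inputs.append(MASK)
            labels.append(tok)
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels


def mlm_example(sent: Tokens, rng: random.Random, mask_prob: float = 0.15):
    # MLM: mask tokens within a single monolingual sentence.
    return mask_tokens(sent, rng, mask_prob)


def xmlm_example(src: Tokens, tgt: Tokens, rng: random.Random,
                 mask_prob: float = 0.15):
    # XMLM: mask a concatenated translation pair, so a masked word in one
    # language can be predicted from context in the other language.
    return mask_tokens(src + [SEP] + tgt, rng, mask_prob)


def add_noise(sent: Tokens, rng: random.Random,
              drop_prob: float = 0.1, window: float = 3.0) -> Tokens:
    # Hypothetical perturbation (an assumption, not the paper's exact noise):
    # drop words at random, then shuffle locally so each word moves only a
    # few positions. Falls back to the full sentence if every word is dropped.
    kept = [t for t in sent if rng.random() >= drop_prob] or list(sent)
    keys = [i + rng.uniform(0, window) for i in range(len(kept))]
    return [tok for _, tok in sorted(zip(keys, kept))]


def dae_example(sent: Tokens, rng: random.Random) -> Tuple[Tokens, Tokens]:
    # DAE: the encoder reads a perturbed sentence; the decoder must
    # reconstruct the original sentence in the same language.
    return add_noise(sent, rng), list(sent)


def xae_example(src: Tokens, tgt: Tokens) -> Tuple[Tokens, Tokens]:
    # XAE: the encoder reads a sentence in one language; the decoder must
    # generate its translation, so the output language need not match the input.
    return list(src), list(tgt)


if __name__ == "__main__":
    rng = random.Random(0)
    en = "the cat sat on the mat".split()
    fr = "le chat était assis sur le tapis".split()
    print("MLM :", mlm_example(en, rng))
    print("XMLM:", xmlm_example(en, fr, rng))
    print("DAE :", dae_example(en, rng))
    print("XAE :", xae_example(en, fr))
```

MLM and XMLM produce (masked input, labels) pairs for the encoder, while DAE and XAE produce (encoder input, decoder target) pairs; only XAE has a target in a different language from its input, which is what lets it counter the same-language bias described above.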

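Because these objectives map different languages into a shared semantic space, the model can be fine-tuned on NLG data in one language (e.g., English) and then applied zero-shot to other languages, supporting multilingual input and output without task-specific supervision in the target languages. Parallel corpora are needed only for the cross-lingual pre-training objectives (XMLM and XAE).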

Experimental Results

In evaluating Xnlg, the paper focuses on two cross-lingual NLG tasks: question generation (QG) and abstractive summarization (AS). The model achieves superior performance compared to machine-translation-based pipeline methods across different evaluation metrics and settings.

- Question Generation: The model is tested on English-Chinese and Chinese-English language pairs for QG tasks, delivering significant improvements in BLEU-4, METEOR, and ROUGE scores over baselines like XLM and pipeline methods relying on translation systems.

- Abstractive Summarization: In zero-shot summarization for French and Chinese, Xnlg likewise achieves higher ROUGE scores than the baselines, indicating that the cross-lingual transfer carries over to a second generation task.
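ROUGE, reported for both tasks, measures n-gram or longest-common-subsequence (LCS) overlap between a generated text and a reference. The following minimal Python sketch computes ROUGE-N and ROUGE-L as F1 scores on pre-tokenized input. It omits the stemming, language-specific tokenization (which matters for Chinese), and multi-reference handling of standard toolkits, and the classic ROUGE-L definition uses a recall-weighted F-measure rather than plain F1, so its numbers are not directly comparable to those reported in the paper.

```python
from collections import Counter
from typing import List

Tokens = List[str]


def ngram_counts(tokens: Tokens, n: int) -> Counter:
    # Multiset of all contiguous n-grams in the token sequence.
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def f1(matches: int, cand_total: int, ref_total: int) -> float:
    # Harmonic mean of precision (vs. candidate) and recall (vs. reference).
    if matches == 0:
        return 0.0
    p, r = matches / cand_total, matches / ref_total
    return 2 * p * r / (p + r)


def rouge_n(candidate: Tokens, reference: Tokens, n: int = 1) -> float:
    cand, ref = ngram_counts(candidate, n), ngram_counts(reference, n)
    overlap = sum((cand & ref).values())  # clipped n-gram matches
    return f1(overlap, sum(cand.values()), sum(ref.values()))


def lcs_length(a: Tokens, b: Tokens) -> int:
    # Dynamic programming over prefixes, keeping one row at a time.
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b):
            cur.append(prev[j] + 1 if x == y else max(prev[j + 1], cur[j]))
        prev = cur
    return prev[-1]


def rouge_l(candidate: Tokens, reference: Tokens) -> float:
    return f1(lcs_length(candidate, reference), len(candidate), len(reference))


if __name__ == "__main__":
    cand = "the cat sat on the mat".split()
    ref = "the cat is on the mat".split()
    print(rouge_n(cand, ref, 1), rouge_n(cand, ref, 2), rouge_l(cand, ref))
```

In this toy pair, ROUGE-1 and ROUGE-L both reward the shared words "the cat ... on the mat", while ROUGE-2 is lower because the substituted word breaks two bigrams.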

The research shows that cross-lingual pre-training can improve NLG performance in low-resource languages by leveraging supervision available in high-resource languages. Because the model generates directly in the target language, it also avoids the error propagation of pipeline methods, in which errors from the machine translation step carry over into the generated output.

Implications and Future Work

The proposed cross-lingual NLG framework opens avenues for sharing linguistic resources across languages. The authors suggest that the approach could eventually be applied in entirely unsupervised settings, and that future work could improve pre-training toward fully unsupervised NLG. Further extensions could cover more languages and more challenging language pairs, possibly with deeper models or alternative training objectives for cross-lingual alignment.

In conclusion, this work is a notable contribution to multilingual NLP: it provides a pre-training approach that transfers NLG supervision across language pairs and resource levels. As NLG applications expand globally, such methods can help extend language technologies beyond high-resource languages.