An Analytical Overview of the Unified Language Model (UniLM) for Natural Language Understanding and Generation

The paper "Unified Language Model Pre-training for Natural Language Understanding and Generation" presents an approach to pre-training a single, versatile language model called UniLM. The model aims to bridge the gap between two fundamental areas in NLP: Natural Language Understanding (NLU) and Natural Language Generation (NLG). The paper documents its methodology and experiments in detail and reports gains on several benchmarks, most notably for generation tasks.

Methodology

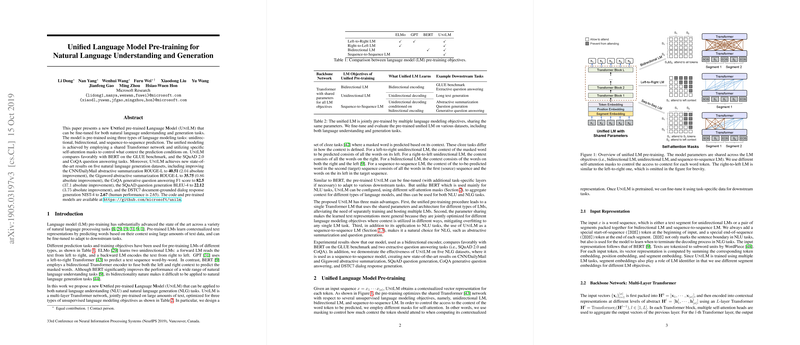

The core innovation of this work is the unification of several language modeling objectives in a single framework. UniLM is a multi-layer Transformer network pre-trained using three types of language modeling objectives: unidirectional, bidirectional, and sequence-to-sequence prediction. The unification is achieved through self-attention masks that control which context tokens each prediction is allowed to condition on.
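The masking idea can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `build_attention_mask`, the mode strings, and the 0/1 list-of-lists representation are assumptions made for illustration. Entry `[i][j]` is 1 when token `i` may attend to token `j`.

```python
from typing import List

Mask = List[List[int]]


def build_attention_mask(mode: str, src_len: int, tgt_len: int = 0) -> Mask:
    """Build a 0/1 self-attention mask (hypothetical helper, for illustration).

    mask[i][j] == 1 means position i may attend to position j.
    The sequence is the source segment followed by the target segment.
    """
    n = src_len + tgt_len

    if mode == "bidirectional":
        # Every token sees every other token.
        return [[1] * n for _ in range(n)]

    if mode == "unidirectional":
        # Left-to-right: a token sees itself and everything before it.
        return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

    if mode == "seq2seq":
        def allowed(i: int, j: int) -> bool:
            if j < src_len:
                # The source segment is visible to all positions.
                return True
            # Target columns are visible only to target rows, left-to-right.
            return i >= src_len and j <= i

        return [[int(allowed(i, j)) for j in range(n)] for i in range(n)]

    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    for row in build_attention_mask("seq2seq", src_len=2, tgt_len=2):
        print(row)
```

In the sequence-to-sequence case, source tokens attend to each other in both directions but never to the target, while each target token attends to the full source plus the target tokens up to and including itself. Because only the mask changes between modes, the same Transformer weights can serve all three objectives.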

Key Features:

- Shared Transformer Network: UniLM employs a single Transformer network shared across the different language modeling tasks. This simplifies the model architecture and reuses the same parameters for every objective instead of duplicating them.

- Self-attention Masks: These masks control the context conditioning during the prediction, enabling UniLM to effectively switch between unidirectional, bidirectional, and sequence-to-sequence modes.

- Cloze Tasks: To train the model, the authors use cloze tasks that mask some input tokens and require the model to predict these masked tokens. This technique is applied across all pre-training objectives, ensuring a uniform training procedure.
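The cloze setup described in the list above can be sketched as follows. This is a simplified illustration under stated assumptions: the helper name `make_cloze_example`, the `[MASK]` placeholder string, and the default masking probability are illustrative choices, not values taken from this overview.

```python
import random
from typing import Dict, List, Optional, Tuple

MASK_TOKEN = "[MASK]"  # assumed placeholder symbol


def make_cloze_example(
    tokens: List[str],
    mask_prob: float = 0.15,  # illustrative default, not from the overview
    seed: Optional[int] = None,
) -> Tuple[List[str], Dict[int, str]]:
    """Randomly replace tokens with MASK_TOKEN.

    Returns the corrupted sequence and a dict mapping each masked
    position to the original token the model should predict.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    targets: Dict[int, str] = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            corrupted[i] = MASK_TOKEN
            targets[i] = tok
    return corrupted, targets


if __name__ == "__main__":
    sentence = "the model predicts the masked tokens from context".split()
    corrupted, targets = make_cloze_example(sentence, mask_prob=0.3, seed=0)
    print(corrupted)
    print(targets)
```

The same corruption step can be reused for every objective; what differs between objectives is the attention mask, which determines the context available when predicting each masked position.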

Experimental Results

The experiments evaluate UniLM on multiple NLU and NLG benchmarks and datasets.

- GLUE Benchmark: UniLM competes favorably with BERT, achieving comparable performance across various NLU tasks measured by the GLUE benchmark.

- Question Answering: The model shows a notable improvement on the SQuAD 2.0 and CoQA datasets for extractive QA tasks. For example, on CoQA, UniLM achieves an F1 score of 84.9, surpassing BERT's score of 82.7.

- Natural Language Generation: UniLM sets new state-of-the-art results on five NLG datasets. Specific improvements include:

  - Abstractive Summarization (CNN/DailyMail): UniLM improves the ROUGE-L score to 40.51 (an absolute improvement of 2.04).

  - Abstractive Summarization (Gigaword): UniLM achieves a ROUGE-L score of 35.75 (an absolute improvement of 0.86).

  - Generative Question Answering: On the CoQA dataset, UniLM obtains an F1 score of 82.5 (an absolute improvement of 37.1).

  - Question Generation: The model improves the BLEU-4 score to 22.12 on SQuAD, an absolute improvement of 3.75.
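To make the "absolute improvement" figures concrete, the short sketch below subtracts each reported gain from the corresponding UniLM score. It assumes each gain is measured against the previous best result on the same metric; the implied baselines are derived by arithmetic from the numbers above, not quoted from the paper.

```python
# (UniLM score, absolute improvement) as reported in the list above.
reported = {
    "CNN/DailyMail ROUGE-L": (40.51, 2.04),
    "Gigaword ROUGE-L": (35.75, 0.86),
    "CoQA generative F1": (82.5, 37.1),
    "SQuAD question generation BLEU-4": (22.12, 3.75),
}


def implied_baseline(score: float, gain: float) -> float:
    """Previous result implied by an absolute gain: score minus gain."""
    return round(score - gain, 2)


baselines = {
    name: implied_baseline(score, gain)
    for name, (score, gain) in reported.items()
}

if __name__ == "__main__":
    for name, base in baselines.items():
        score, gain = reported[name]
        print(f"{name}: {base} -> {score} (+{gain})")
```

Read this way, the generative QA gain stands apart from the others: a 37.1-point jump implies a far weaker prior baseline than in the summarization and question generation tasks, where the gains are a few points or less.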

The results affirm that UniLM is not only competitive but also superior to many existing models in specific tasks. Its capability to perform both NLU and NLG tasks efficiently makes it a versatile tool in the NLP toolkit.

Implications and Future Work

The implications of this work are significant for both theoretical research and practical applications.

Theoretical Implications:

- Unified Framework: Unifying multiple language modeling objectives in a single framework reduces the complexity of managing different models for different tasks. This can streamline research efforts and facilitate the development of more integrated NLP systems.

- Generalized Representations: Sharing one Transformer network across objectives leads to more general text representations. Because the parameters are optimized jointly for objectives that use context in different ways, the model is less prone to overfitting to any single objective and adapts more readily to diverse NLP tasks.

Practical Implications:

- Efficiency in Deployment: A single, unified model is easier to deploy and maintain, especially in production environments where resource constraints are a concern.

- Enhanced Performance: Strong performance on both NLU and NLG tasks can drive advancements in areas such as conversational AI, automated summarization, and intelligent QA systems.

Future Directions:

- Scaling and Robustness: Future research could explore training UniLM on larger datasets and with more parameters to push performance further.

- Cross-lingual Capabilities: Expanding UniLM to support cross-lingual tasks could make it a powerful tool in multilingual NLP applications.

- Multi-task Fine-tuning: Fine-tuning jointly on both NLU and NLG tasks could harness the model's full potential, enabling a single fine-tuned model to address multiple applications at once.

Conclusion

The paper presents a unified language model that handles both NLU and NLG tasks with a single set of pre-trained parameters. UniLM's design and empirical results, particularly the new state-of-the-art scores on generation tasks, offer valuable insights and a solid framework for future NLP research and applications.