Analysis of CyCLIP: Cyclic Contrastive Language-Image Pretraining

The paper "CyCLIP: Cyclic Contrastive Language-Image Pretraining" addresses a limitation of contrastive language-image models such as CLIP. These models have become a dominant approach in multimodal AI because of their strong zero-shot classification performance and their robustness to distribution shifts. The paper introduces CyCLIP, a framework that makes paired image and text representations geometrically consistent with each other, with the goal of making downstream predictions consistent across the two modalities.

Initial Motivations and Problem Statement

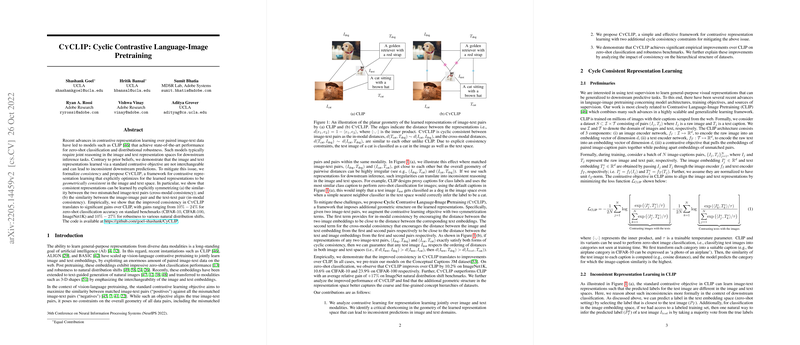

The motivation for this work is the observation that contrastive models like CLIP can make inconsistent predictions depending on whether the learned image or text representations are used. A downstream classifier can operate in text space (for example, zero-shot classification by comparing an image embedding with embeddings of class-name prompts) or in image space (for example, comparing an image embedding with other image embeddings). When these two routes give different answers, the representations are inconsistent, which can degrade zero-shot classification. The standard contrastive objective pulls matched image-text pairs together and pushes mismatched pairs apart, but it does not constrain the overall geometry of the two embedding spaces, such as whether image-image similarities mirror the corresponding text-text similarities. This gap can introduce errors when a model is applied to tasks that require reasoning jointly across both modalities.
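For reference, the standard CLIP objective can be sketched as a symmetric cross-entropy (InfoNCE) loss over a batch of matched pairs. This is a minimal NumPy sketch, not the authors' code: the function names are hypothetical, and the fixed `temperature=0.07` is an assumption (CLIP learns its temperature during training). Note that only image-text similarities enter the loss; image-image and text-text similarities never appear.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def log_softmax(logits: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def clip_loss(image_emb: np.ndarray, text_emb: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE loss over a batch of N matched (image, text) pairs.

    Row j of image_emb and row j of text_emb form the matched pair; every
    other row in the batch acts as a negative. The fixed temperature is an
    assumption made for illustration.
    """
    img = l2_normalize(image_emb)
    txt = l2_normalize(text_emb)
    logits = img @ txt.T / temperature  # logits[j, k] = <I_j, T_k> / tau
    diag = np.arange(logits.shape[0])
    # Image-to-text: each row is a classification over the N captions.
    loss_i2t = -log_softmax(logits, axis=1)[diag, diag].mean()
    # Text-to-image: each column is a classification over the N images.
    loss_t2i = -log_softmax(logits, axis=0)[diag, diag].mean()
    return float((loss_i2t + loss_t2i) / 2)
```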

CyCLIP Framework and Methodology

To address these issues, the authors propose CyCLIP, which adds two geometric consistency regularizers to the contrastive learning objective:

- Cross-Modal Consistency: For any two image-text pairs in a batch, this regularizer symmetrizes the two mismatched similarities: the similarity of image j with text k should equal the similarity of image k with text j. In effect, it pushes the image-text similarity matrix to be symmetric.

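- In-Modal Consistency: This regularizer symmetrizes the similarity between an image-image pair and the corresponding text-text pair: the similarity of images j and k should equal the similarity of their captions j and k. This makes the within-modality geometry of the image space mirror that of the text space.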
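The two regularizers can be sketched as squared differences between similarity matrices computed on a batch of N pairs with unit-normalized embeddings. This NumPy sketch follows the description above; the function names are hypothetical, and dividing the double sum by the batch size N is an assumed scaling.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def cross_modal_consistency(image_emb: np.ndarray, text_emb: np.ndarray) -> float:
    """Penalize asymmetry of the image-text similarity matrix.

    Sums (<I_j, T_k> - <I_k, T_j>)^2 over all pairs j, k and divides by
    the batch size N (the 1/N scaling is an assumption).
    """
    img, txt = l2_normalize(image_emb), l2_normalize(text_emb)
    sim = img @ txt.T  # sim[j, k] = <I_j, T_k>
    return float(((sim - sim.T) ** 2).sum() / sim.shape[0])


def in_modal_consistency(image_emb: np.ndarray, text_emb: np.ndarray) -> float:
    """Penalize differences between image-image and text-text similarities.

    Sums (<I_j, I_k> - <T_j, T_k>)^2 over all pairs j, k and divides by N.
    """
    img, txt = l2_normalize(image_emb), l2_normalize(text_emb)
    sim_ii = img @ img.T  # sim_ii[j, k] = <I_j, I_k>
    sim_tt = txt @ txt.T  # sim_tt[j, k] = <T_j, T_k>
    return float(((sim_ii - sim_tt) ** 2).sum() / sim_ii.shape[0])
```

Because the in-modal term compares only within-modality similarity matrices, rotating one modality's embeddings leaves it unchanged; the contrastive loss is what ties the two spaces to each other.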

These regularizers are added to the standard contrastive loss, augmenting the CLIP objective with cyclic symmetry constraints. The intended result is that image and text embeddings are better aligned and can be used more interchangeably in downstream tasks.
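Written out, the combined objective takes the following form, in our own notation: I_j and T_j are the unit-normalized image and text embeddings of the j-th pair in a batch of size N, ⟨·,·⟩ is the dot product, and λ_1, λ_2 ≥ 0 are weighting hyperparameters whose values are not given here. The 1/N scaling is an assumption.

```latex
\mathcal{L}_{\text{CyCLIP}}
  = \mathcal{L}_{\text{CLIP}}
  + \lambda_{1}\,\mathcal{L}_{\text{in}}
  + \lambda_{2}\,\mathcal{L}_{\text{cross}},
\qquad
\mathcal{L}_{\text{in}}
  = \frac{1}{N}\sum_{j=1}^{N}\sum_{k=1}^{N}
    \big(\langle I_j, I_k\rangle - \langle T_j, T_k\rangle\big)^{2},
\qquad
\mathcal{L}_{\text{cross}}
  = \frac{1}{N}\sum_{j=1}^{N}\sum_{k=1}^{N}
    \big(\langle I_j, T_k\rangle - \langle I_k, T_j\rangle\big)^{2}
```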

Empirical Validation

Empirical evaluations support the approach. Compared with a CLIP baseline trained under the same setup, CyCLIP improves zero-shot classification accuracy by 10% to 24% on standard image classification datasets (CIFAR-10, CIFAR-100, and ImageNet1K). CyCLIP is also more robust to natural distribution shifts, achieving average relative gains of 10% to 27% over CLIP on benchmarks such as ImageNetV2 and ImageNet-R. The authors attribute these improvements to the more consistent geometry of the learned representation space, as measured by their consistency metrics.
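One illustrative way to quantify cross-modal prediction consistency is to measure how often a text-space classifier and an image-space classifier agree on the same test images. The sketch below is hypothetical and need not match the paper's exact metric: it assumes zero-shot prediction against class-prompt embeddings for the text space, and 1-nearest-neighbor prediction against labeled reference image embeddings for the image space.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def consistency_score(test_img: np.ndarray, class_txt: np.ndarray,
                      ref_img: np.ndarray, ref_labels) -> float:
    """Fraction of test images on which two classifiers agree (illustrative).

    Text space: zero-shot, pick the class whose prompt embedding is most
    similar to the test image embedding.
    Image space: 1-nearest-neighbor over labeled reference image embeddings.
    Both choices are assumptions made for this sketch.
    """
    test_img = l2_normalize(test_img)
    text_pred = np.argmax(test_img @ l2_normalize(class_txt).T, axis=1)
    nn_index = np.argmax(test_img @ l2_normalize(ref_img).T, axis=1)
    image_pred = np.asarray(ref_labels)[nn_index]
    return float(np.mean(text_pred == image_pred))
```

A perfectly consistent model scores 1.0; lower values mean the two modalities lead to different predictions for the same image.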

Theoretical and Practical Implications

On the theoretical side, the results suggest that enforcing geometric consistency during contrastive pretraining helps models capture more coherent high-level concept hierarchies. On the practical side, CyCLIP may help AI systems make accurate and consistent inferences across modalities, a property that matters in real-world applications such as image retrieval and multimodal content generation.

Prospects for Future Research

Future work could scale CyCLIP to larger datasets, comparable to the extensive data used to pretrain CLIP. Other promising directions include evaluating CyCLIP on additional multimodal tasks and investigating societal biases or security vulnerabilities that the framework may inherit or introduce. Combining cyclic constraints with other forms of supervision could also yield new insights into building holistic and robust AI systems.

In summary, the CyCLIP framework represents a significant step forward in contrastive multimodal representation learning. By embedding geometric cycle-consistency constraints into pretraining, the proposed methodology addresses critical challenges of inconsistency and robustness, thereby paving the way for future advancements in AI's ability to comprehend and integrate diverse data modalities.