Explaining and Mitigating the Modality Gap in Contrastive Multimodal Learning

This paper examines the modality gap in contrastive multimodal models, that is, the tendency of embeddings from different modalities to occupy separate regions of the shared representation space. It studies how the gap arises, how it affects model performance, and how it can be mitigated. The question matters because models such as CLIP, which map text and images into a shared space for cross-modal understanding, are now central to modern machine learning.

Key Findings and Theoretical Contributions

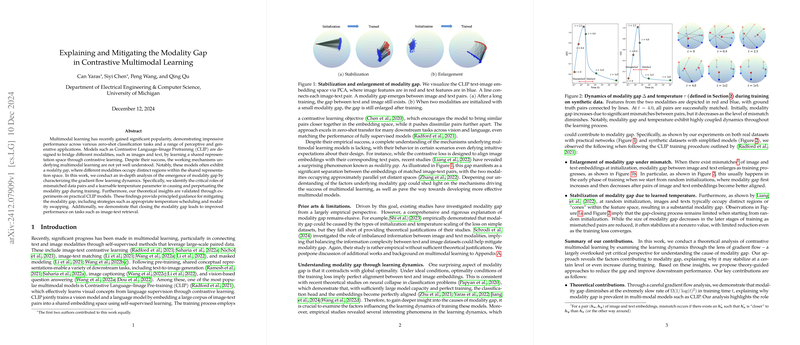

The authors analyze how the modality gap develops using a gradient flow analysis, which reveals the conditions under which the gap emerges and stabilizes. They identify two main contributors: mismatched data pairs and a learnable temperature parameter. Theoretically, they show that the gap closes only slowly over the course of training. This explains why the modality gap persists in models like CLIP even after extensive training.
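To make the role of the temperature concrete, here is a minimal sketch of a CLIP-style symmetric contrastive loss. It is not taken from the paper: the function names, the log-inverse-temperature parameterization, and the toy data are assumptions chosen for illustration.

```python
import numpy as np

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def clip_style_loss(image_emb, text_emb, log_inv_temp):
    """Symmetric contrastive loss; row i of each input is treated as a matched pair.

    log_inv_temp is the log of the inverse temperature (an assumed
    parameterization), so the logit scale exp(log_inv_temp) stays positive.
    """
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = np.exp(log_inv_temp) * (img @ txt.T)  # (N, N) scaled cosine similarities
    idx = np.arange(logits.shape[0])
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # image -> text direction
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # text -> image direction
    return float(0.5 * (loss_i2t + loss_t2i))

# Toy data (illustrative only): images are noisy copies of their texts.
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(8, 4))
image_emb = text_emb + 0.1 * rng.normal(size=(8, 4))
mismatched_emb = image_emb[::-1]  # reverse the row order so every pair is wrong

loss_matched = clip_style_loss(image_emb, text_emb, np.log(10.0))
loss_mismatched = clip_style_loss(mismatched_emb, text_emb, np.log(10.0))
```

In this toy setting the mismatched pairing yields a higher loss than the matched one. In an actual training loop, `log_inv_temp` would be a parameter updated by gradient descent alongside the encoders.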

The paper also shows that mismatched pairs widen the modality gap in the early stages of training, which makes alignment in the shared representation space harder to achieve. These mismatches, often a result of random initialization, mean that image-text pairs start out poorly aligned in the embedding space.
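This summary does not state how the gap is quantified. One common proxy, assumed here rather than taken from the paper, is the Euclidean distance between the centroids of the normalized image and text embeddings. The sketch below uses hypothetical function names and synthetic data.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale each row to unit Euclidean norm."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, eps)

def centroid_gap(image_emb, text_emb):
    """Distance between the mean normalized image and text embeddings."""
    img = l2_normalize(np.asarray(image_emb, dtype=float))
    txt = l2_normalize(np.asarray(text_emb, dtype=float))
    return float(np.linalg.norm(img.mean(axis=0) - txt.mean(axis=0)))

# Synthetic example: both modalities share content but are pushed apart
# along one coordinate, mimicking two separated clusters.
rng = np.random.default_rng(0)
shared = rng.normal(size=(256, 16))
offset = np.zeros(16)
offset[0] = 3.0

gap_separated = centroid_gap(shared + offset, shared - offset)
gap_aligned = centroid_gap(shared, shared)  # identical clouds, so no gap
```

A value near zero indicates that the two modalities occupy the same region on average. It does not by itself show that individual pairs are aligned.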

Practical Contributions and Methods for Mitigation

On the practical side, the authors propose several methods for reducing the modality gap, which in turn improve downstream performance, particularly in image-text retrieval. The proposed solutions include:

- Temperature Control: Controlling the temperature during training, for example through temperature scheduling or reparameterization, speeds up the closing of the modality gap.

- Modality Swapping: This technique swaps embedding features between the image and text modalities, breaking the parallel arrangement of their feature spaces. Both hard and soft swapping strategies are shown to reduce the modality gap significantly.

Together, these strategies show that both the temperature and the way embeddings are represented shape how the two modalities align.
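The two ideas above can be sketched as follows. This is one possible reading, not the paper's implementation: the linear schedule, its endpoint values, the per-pair swap rule, and all function names are assumptions made for illustration.

```python
import numpy as np

def linear_temperature(step, total_steps, temp_start, temp_end):
    """Linearly interpolate the temperature between two assumed endpoints."""
    frac = min(max(step / max(total_steps, 1), 0.0), 1.0)
    return (1.0 - frac) * temp_start + frac * temp_end

def hard_swap(image_emb, text_emb, swap_prob, rng):
    """Exchange the image and text embedding of each pair with probability swap_prob."""
    swap = rng.random(image_emb.shape[0]) < swap_prob  # one decision per pair
    new_img = np.where(swap[:, None], text_emb, image_emb)
    new_txt = np.where(swap[:, None], image_emb, text_emb)
    return new_img, new_txt

def soft_swap(image_emb, text_emb, mix):
    """Blend each pair; mix=0 leaves the inputs unchanged, mix=1 is a full exchange."""
    new_img = (1.0 - mix) * image_emb + mix * text_emb
    new_txt = (1.0 - mix) * text_emb + mix * image_emb
    return new_img, new_txt

# Illustrative usage with made-up values.
rng = np.random.default_rng(0)
image_emb = rng.normal(size=(4, 8))
text_emb = rng.normal(size=(4, 8))

temp_midway = linear_temperature(step=50, total_steps=100, temp_start=0.01, temp_end=0.05)
swapped_img, swapped_txt = hard_swap(image_emb, text_emb, swap_prob=0.5, rng=rng)
mixed_img, mixed_txt = soft_swap(image_emb, text_emb, mix=0.25)
```

In a training loop, the scheduled temperature would replace a learned one in the contrastive loss, and the swapped or mixed embeddings would be fed to the loss in place of the originals. The direction and range of the schedule are left as arguments because this summary does not specify them.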

Experimental Outcomes and Implications

The authors verify their theoretical claims empirically with CLIP models trained from scratch on the MSCOCO dataset. The experiments confirm that reducing the modality gap improves image-text retrieval accuracy, although the effect is smaller on other tasks such as visual classification. This difference suggests that closing the modality gap is beneficial but not sufficient: other factors, such as the uniformity of the feature space, also play a critical role in performance across tasks.
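Retrieval accuracy of this kind is commonly reported as recall@K. The sketch below is a simplified illustration, not the paper's evaluation code: it assumes exactly one matching text per image (the same row index), whereas datasets such as MSCOCO provide several captions per image. The function name is hypothetical.

```python
import numpy as np

def recall_at_k(image_emb, text_emb, k):
    """Fraction of images whose matched text (same row index) ranks in the top k."""
    img = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    txt = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    sims = img @ txt.T                          # (N, N) cosine similarities
    topk = np.argsort(-sims, axis=1)[:, :k]     # indices of the k most similar texts
    targets = np.arange(sims.shape[0])[:, None]
    return float((topk == targets).any(axis=1).mean())

# Toy check: perfectly aligned pairs versus pairs shifted by one position.
aligned = np.eye(5)
shifted = np.roll(aligned, 1, axis=0)

recall_aligned = recall_at_k(aligned, aligned, k=1)
recall_shifted = recall_at_k(aligned, shifted, k=1)
```

Text-to-image recall follows by swapping the two arguments.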

Discussion and Future Directions

The paper opens several directions for further investigation, particularly into how modality gap mitigation interacts with different downstream applications. Future research could extend the gradient flow analysis to account for varying levels of shared information between modalities and for the effects of the data distribution. Understanding fine-tuning when the pretraining and fine-tuning datasets come from different domains is another promising avenue.

In conclusion, the paper offers both theoretical insight into the modality gap and practical methods for reducing it in multimodal learning systems. It also motivates further work on refining multimodal training protocols to improve both alignment and performance across diverse applications.