- The paper introduces a novel non-contact rPPG measurement method using temporal difference transformers to capture both local and global spatio-temporal features.

- The model achieves superior accuracy in estimating heart rate and respiratory frequency, outperforming traditional and CNN-based approaches.

- The approach employs dynamic supervision and label distribution learning to robustly handle noise and variations in real-world facial videos.

The paper "PhysFormer: Facial Video-based Physiological Measurement with Temporal Difference Transformer" presents a novel approach to measuring physiological signals, particularly heart rate, from facial videos using remote photoplethysmography (rPPG). The PhysFormer model uses temporal difference transformers to exploit both local and global spatio-temporal features, improving the reliability and accuracy of rPPG signal extraction.

Introduction

PhysFormer addresses the limitations of contact-based ECG and PPG sensors by offering a non-contact way to measure physiological signals. Previous methods relied either on classical signal processing or on non-end-to-end learning approaches that required pre-processing steps, making them less adaptable to real-world scenarios with motion and variable lighting. Recent CNN-based solutions lacked the long-range temporal awareness needed to capture the quasi-periodic nature of rPPG signals. PhysFormer uses transformers to learn the long-range spatio-temporal interactions that are critical for rPPG measurement.

Model Architecture

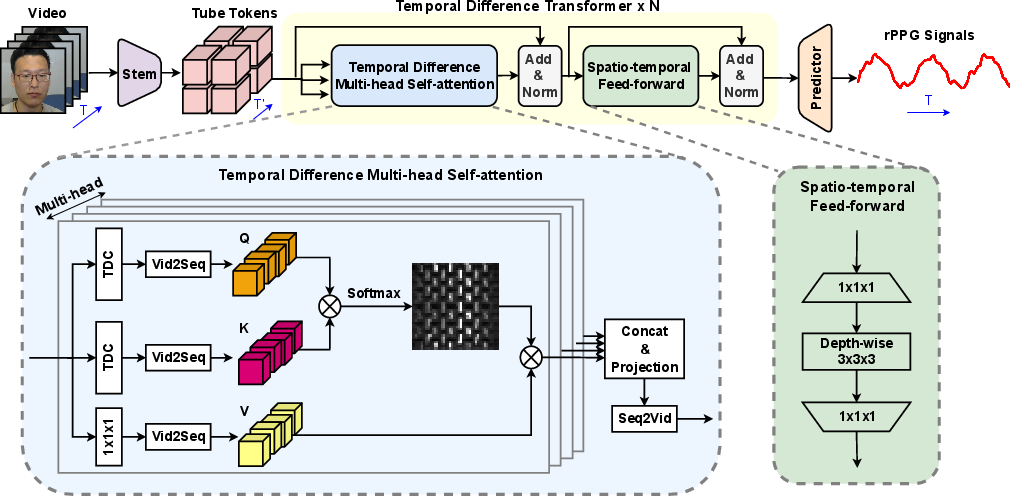

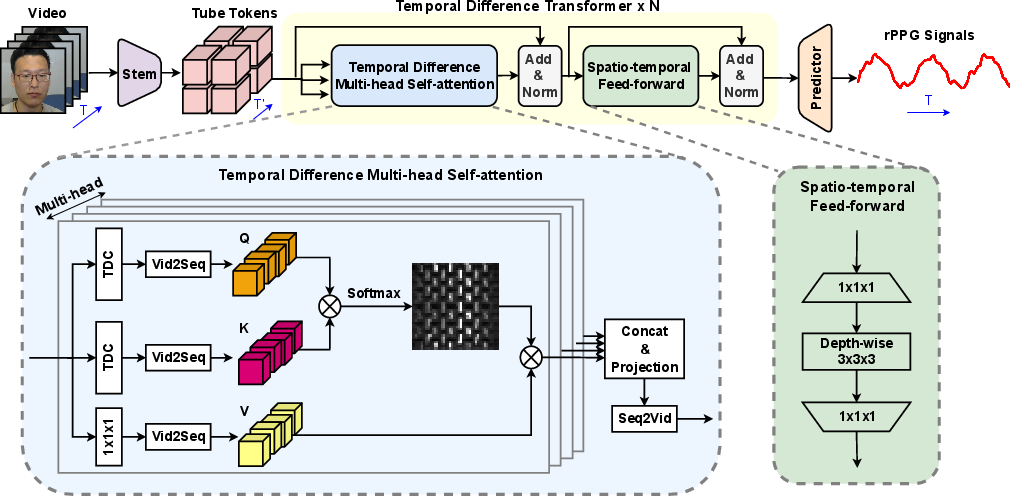

PhysFormer integrates several novel components:

- Temporal Difference Transformer Blocks: These enhance rPPG feature representation by computing global attention based on temporal differences.

- Tube Tokenization: The approach segments spatio-temporal data into tube tokens, aiding in computational efficiency and feature representation.

- Label Distribution Learning: This method treats rPPG estimation as a multi-label classification task over potential heart rate classes for robustness against variations in data.

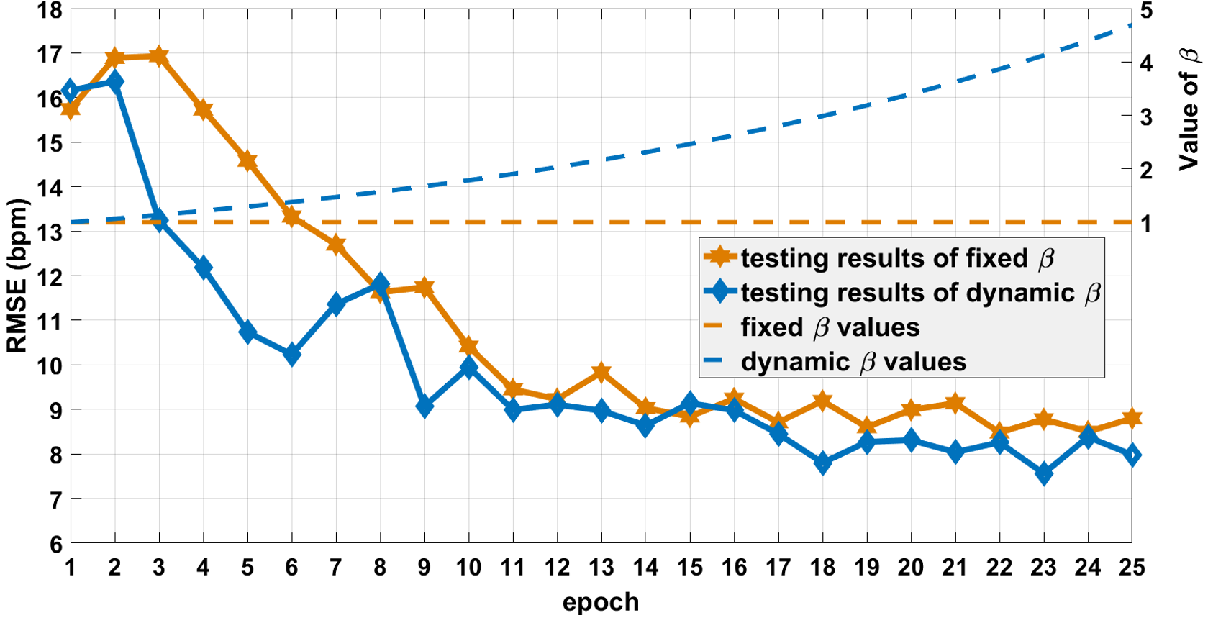

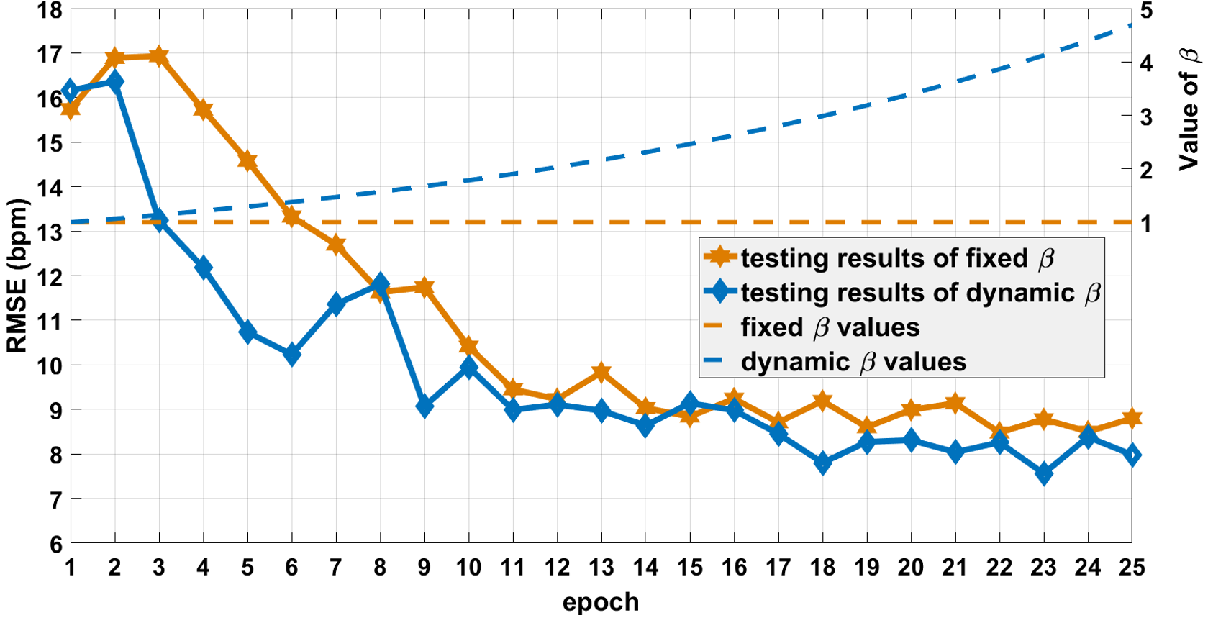

- Dynamic Supervision in Frequency Domain: Curriculum learning techniques dynamically adjust constraints, balancing between temporal and frequency domain supervision to mitigate overfitting and noise effects.

Figure 1: Framework of the PhysFormer. It consists of a shallow stem, a tube tokenizer, several temporal difference transformers, and an rPPG predictor head. TDC is short for temporal difference convolution.
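The tube tokenization step listed above can be illustrated with a minimal sketch. It assumes a single-channel video stored as nested lists and non-overlapping tubes; the function name `tube_tokenize` and the tube size are hypothetical, and the learned projection that a real tokenizer would apply to each tube is omitted.

```python
from itertools import product

def tube_tokenize(video, tube=(4, 2, 2)):
    """Split a T x H x W nested-list video into non-overlapping tubes.

    Each tube of size (ts, hs, ws) is flattened into one token.
    Illustrative only: no channels and no learned projection.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    ts, hs, ws = tube
    if T % ts or H % hs or W % ws:
        raise ValueError("video dimensions must be divisible by the tube size")
    tokens = []
    # Walk over the top-left-front corner of every tube.
    for t0, h0, w0 in product(range(0, T, ts), range(0, H, hs), range(0, W, ws)):
        token = [video[t][h][w]
                 for t in range(t0, t0 + ts)
                 for h in range(h0, h0 + hs)
                 for w in range(w0, w0 + ws)]
        tokens.append(token)
    return tokens

# Toy video: 8 frames of 4 x 4 pixels, each value encodes its own position.
video = [[[t * 100 + h * 10 + w for w in range(4)] for h in range(4)]
         for t in range(8)]
tokens = tube_tokenize(video, tube=(4, 2, 2))
print(len(tokens), len(tokens[0]))  # 8 tokens, 16 values each
```

Larger tubes yield fewer tokens, which is where the computational saving comes from: self-attention cost grows quadratically with the number of tokens.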

Methodology

Temporal Difference Multi-head Self-attention (TD-MHSA)

TD-MHSA incorporates temporal difference convolution (TDC) into self-attention, which captures transient skin color variations more effectively than standard self-attention. The method also adjusts the attention temperature to produce sparser, more focused attention maps, which is critical for detecting quasi-periodic rPPG signatures amid temporal noise.
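The effect of the attention temperature can be seen in a small sketch of a temperature-scaled softmax. This is not the TD-MHSA implementation: the scores, the temperature values, and the helper names are assumptions chosen for illustration, and entropy is used only as a rough proxy for how spread out the attention weights are.

```python
import math

def softmax_with_temperature(scores, tau):
    """Softmax over attention scores divided by a temperature tau."""
    scaled = [s / tau for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(p):
    """Shannon entropy; lower values mean more concentrated weights."""
    return -sum(x * math.log(x) for x in p if x > 0)

scores = [2.0, 1.0, 0.5, 0.1]  # hypothetical query-key similarities
sharp = softmax_with_temperature(scores, tau=0.5)
soft = softmax_with_temperature(scores, tau=4.0)
print(entropy(sharp) < entropy(soft))  # True: a lower temperature is sparser
```

Dividing the scores by a smaller temperature widens the gaps between them before normalization, so the weight concentrates on the few best-matching tokens.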

Label Distribution Learning

By placing a Gaussian distribution around the true heart rate label, PhysFormer learns across neighboring heart rate classes, which smooths the impact of label noise and makes better use of small-scale datasets.
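A minimal sketch of the idea follows, assuming one class per integer heart rate value. The bpm range, the standard deviation `sigma`, and the function names are illustrative assumptions rather than settings confirmed by this summary; the KL divergence shows one common way to compare a predicted distribution against the target.

```python
import math

def gaussian_label_distribution(hr_bpm, classes, sigma=1.0):
    """Turn a scalar heart rate label into a normalized Gaussian over classes."""
    weights = [math.exp(-((k - hr_bpm) ** 2) / (2 * sigma ** 2)) for k in classes]
    total = sum(weights)
    return [w / total for w in weights]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) between a target distribution p and a prediction q."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

classes = list(range(42, 181))  # assumed: one class per integer bpm value
target = gaussian_label_distribution(72, classes, sigma=1.0)
near_miss = gaussian_label_distribution(73, classes, sigma=1.0)
far_miss = gaussian_label_distribution(90, classes, sigma=1.0)

# A prediction one bpm off is penalized far less than one 18 bpm off.
print(kl_divergence(target, near_miss) < kl_divergence(target, far_miss))  # True
```

Unlike a one-hot target, the soft target gives partial credit to adjacent classes, so a label that is off by a beat or two does not push the model toward an entirely wrong class.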

Dynamic Loss Function

PhysFormer uses an adaptive training schedule in which the contribution of the frequency-domain losses grows exponentially during training. The schedule first relies on temporal-domain supervision to reach initial convergence, then progressively intensifies frequency-domain supervision.
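One way such a schedule could look is sketched below. The exponential form, the constants `beta0`, `eta`, and `alpha`, and the function names are assumptions made for illustration; this summary does not state the exact values or formula used in the paper.

```python
def frequency_weight(epoch, total_epochs, beta0=1.0, eta=5.0):
    """Weight of the frequency-domain loss, growing exponentially with epoch.

    Starts at beta0 for epoch 1 and approaches beta0 * eta late in training.
    """
    return beta0 * eta ** ((epoch - 1) / total_epochs)

def total_loss(time_loss, freq_loss, epoch, total_epochs, alpha=0.1):
    """Combine a fixed-weight temporal loss with a scheduled frequency loss."""
    return alpha * time_loss + frequency_weight(epoch, total_epochs) * freq_loss

# The frequency weight rises monotonically over a hypothetical 25-epoch run.
for epoch in (1, 10, 25):
    print(epoch, round(frequency_weight(epoch, 25), 3))
```

Keeping the temporal weight fixed while the frequency weight grows means early updates are driven mostly by signal shape, and the stricter frequency constraints take over only once the predicted waveform is already reasonable.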

Experimental Evaluation

Extensive experiments on four datasets (VIPL-HR, MAHNOB-HCI, MMSE-HR, and OBF) demonstrate PhysFormer’s superior performance over traditional, non-end-to-end, and end-to-end competitors. Notably, the model excels at average heart rate estimation and also estimates respiratory frequency and heart rate variability with high fidelity.

Figure 2: Testing results of fixed and dynamic frequency supervisions on the Fold-1 of VIPL-HR.

Notably, PhysFormer requires no large-scale pretraining and still matches or outperforms state-of-the-art results in both intra- and cross-dataset evaluations.

Conclusion

PhysFormer introduces an innovative framework for non-contact physiological measurement, pushing the boundaries of current video-based methods. Its success stems from the long-range spatio-temporal modeling capacity of its transformer backbone. Future work could explore optimizing its deployment for mobile computing, focusing on architectural efficiency to handle long and diverse video sequences. The implications for healthcare, where contactless monitoring is paramount, are significant and point to a promising avenue for further work on vital signs monitoring.

The research successfully illustrates a path for exploiting transformer architectures in rPPG tasks, presenting a model that balances complexity with practical application.