Cross-Task Generalization via Natural Language Crowdsourcing Instructions

Natural language processing (NLP) has seen significant advancements through the fine-tuning of pre-trained LLMs (LMs). However, the ability of these models to generalize to unseen tasks remains inadequately explored. The paper "Cross-Task Generalization via Natural Language Crowdsourcing Instructions" aims to bridge this gap by introducing the concept of learning from instructions, a facet not prominently investigated in previous research.

Overview of the Research

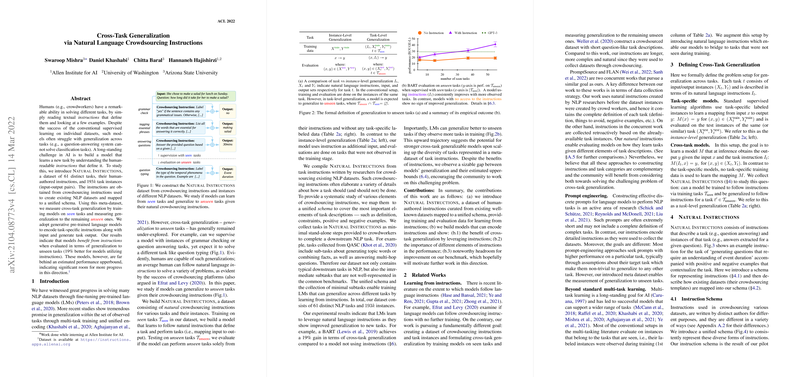

The central premise of this paper is the introduction of the "Natural Instructions," a meta-dataset comprising 61 distinct tasks, complete with human-authored instructions and 193,000 task instances. These instructions are sourced from crowdsourcing templates used in existing NLP datasets and are harmonized into a unified schema to facilitate task representation.

The paper primarily investigates cross-task generalization, examining whether LMs can learn a new task by understanding human-readable instructions without being exposed to task-specific data during training. This is achieved by training models on seen tasks and evaluating their performance on unseen ones, drawing parallels to human capabilities demonstrated in crowdsourcing environments.

Methodology and Dataset Construction

The research methodology involves several strategic steps. Initially, the authors collected crowdsourcing instructions from widely accepted NLP benchmarks. Each dataset is parsed to create task-specific subtasks that represent minimal units of work, allowing the model to learn granularly. Instructions were then mapped to a schema defining task title, prompt, definition, things to avoid, and examples, among other elements. This provides a robust and rich dataset for model training and evaluation.

BART, a generative pre-trained transformer model, is employed to see if it can generalize to new tasks using these instructions. The evaluations are conducted under various task splits, such as random split and leave-one-out splits that include leave-one-category, leave-one-dataset, and leave-one-task methodologies.

Results and Observations

The experimental results demonstrate that the inclusion of instructions significantly benefits task generalization. For example, BART achieved a 19% improvement in generalization performance when instructions were included. It is noteworthy that BART, despite being significantly smaller in scale compared to GPT3, displayed superior performance when fine-tuned with task instructions.

Interestingly, as the number of observed tasks (seen tasks) increased, the performance on unseen tasks correspondingly improved. This indicates the potential for models like BART to evolve into more robust cross-task systems given a wider array of instructions and tasks for training.

However, the research also identifies a clear gap between the generalization capability of current models and the upperbound estimated by task-specific models. This gap highlights significant opportunities for future research focused on enhancing the instruction-following ability of LLMs.

Implications and Future Directions

The implications of this research are manifold. Practically, it suggests a path towards developing LMs capable of fluid task adaptation without requiring extensive re-training, closely mirroring human adaptability. Theoretically, it lays the groundwork for more profound inquiries into the nature of task understanding within artificial intelligence.

For future developments, expanding the diversity of the instruction-based dataset is recommended, which could yield stronger generalization capabilities. Furthermore, more sophisticated methods for instruction encoding and integration within models should be explored to reduce the gap between current capabilities and estimated upperbounds. This work encourages continued exploration into instruction-driven learning paradigms, promising advancements in creating versatile and autonomous AI systems.