Overview of "NaturalInstructions 2.0: Generalization via Declarative Instructions on 1600+ NLP Tasks"

The paper presents "NaturalInstructions 2.0," a benchmark of 1,616 diverse NLP tasks, each paired with natural language instructions. The goal is to evaluate how well NLP models generalize to unseen tasks when given explicit instructions. The work substantially extends previous benchmarks in scope and diversity, providing a platform for analyzing cross-task generalization from instructions.

Key Contributions

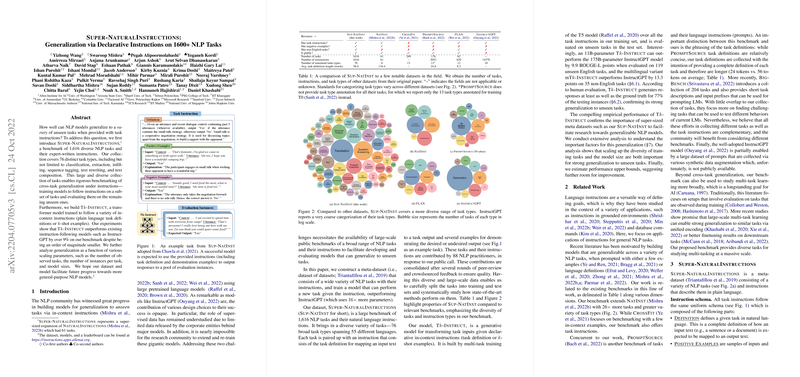

- Dataset Construction: The authors introduce a large-scale meta-dataset covering 76 task types, including classification, extraction, infilling, and text rewriting, across 55 languages. This diversity enables broad testing of models' ability to generalize from instructional prompts.

- Tk-Instruct Model: The paper introduces Tk-Instruct, a transformer-based model trained to follow a variety of instructions. Tk-Instruct exceeds the performance of InstructGPT by over 9% on the benchmark despite being significantly smaller.

- Methodological Insights: A detailed analysis examines the factors that affect generalization, including the number of training tasks, the number of instances per task, and model size. The results clarify how performance scales for instruction-following models.
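To make the idea of "tasks paired with natural language instructions" concrete, the sketch below shows one way a task definition, a few demonstrations, and a new input could be assembled into a single prompt. The `Task` and `Example` classes, their field names, and the prompt layout are illustrative assumptions, not the benchmark's actual schema or the authors' encoding.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Example:
    input: str
    output: str


@dataclass
class Task:
    # Hypothetical field names, chosen for illustration only.
    definition: str
    demonstrations: List[Example] = field(default_factory=list)


def build_prompt(task: Task, instance_input: str, k: int = 2) -> str:
    """Join the task definition, up to k demonstrations, and the new input."""
    parts = [f"Definition: {task.definition}"]
    for i, ex in enumerate(task.demonstrations[:k], start=1):
        parts.append(f"Example {i}\nInput: {ex.input}\nOutput: {ex.output}")
    parts.append(f"Now complete the following.\nInput: {instance_input}\nOutput:")
    return "\n\n".join(parts)


task = Task(
    definition="Classify the sentiment of the review as positive or negative.",
    demonstrations=[
        Example("Great battery life.", "positive"),
        Example("Stopped working after a week.", "negative"),
    ],
)
prompt = build_prompt(task, "The screen is too dim.", k=1)
print(prompt)
```

Setting `k=0` yields a definition-only prompt, which is a simple way to compare instruction-only and few-shot settings under this assumed layout.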

Numerical Results and Comparative Analysis

- Performance Metrics: ROUGE-L is the primary evaluation metric and is applied across all tasks. Tk-Instruct shows substantial improvements, particularly on unseen tasks, indicating that the model is robust to unfamiliar instructions.

- Model Scaling Analysis: The paper reports that performance improves roughly linearly as the number of training tasks and the model size grow exponentially, that is, a log-linear trend. This highlights the value of more diverse tasks and larger models for improving generalization.

- Human Evaluation: Complementary human assessments corroborate the automatic metrics, indicating that the automatic scores track human judgments of how well the outputs follow the instructions.
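The scaling trend described above, where the score rises by a roughly constant amount each time the number of training tasks is multiplied by a fixed factor, can be checked by fitting a line against the logarithm of the task count. The following is a minimal sketch using ordinary least squares; the function name `fit_log_linear` is hypothetical and the data points are made up for illustration, not taken from the paper.

```python
import math


def fit_log_linear(xs, ys):
    """Least-squares fit of y = a + b * log10(x); returns (a, b)."""
    lx = [math.log10(x) for x in xs]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in lx)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(lx, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


# Made-up numbers for illustration only; NOT results from the paper.
num_tasks = [10, 100, 1000]
scores = [40.0, 45.0, 50.0]

a, b = fit_log_linear(num_tasks, scores)
print(f"score ~ {a:.1f} + {b:.1f} * log10(num_tasks)")
```

In this form, the slope `b` reads directly as the number of points gained per tenfold increase in training tasks; the same fit can be applied with model size on the x-axis.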

Implications

This benchmark and model offer significant theoretical and practical value:

- Theoretical Progression: As a tool for the study of cross-task generalization, NaturalInstructions 2.0 facilitates exploration of how well instruction-following transfers across tasks in LLMs.

- Practical Applications: Models like Tk-Instruct demonstrate the potential for improved task generalization, which matters in real-world scenarios where new tasks are encountered dynamically.

Future Directions

The work opens several avenues for future research:

- Language Diversity: Enhancements in non-English task representation could broaden the model's applicability across different linguistic landscapes.

- Instruction Construction: Further research into effective instruction schemas and training strategies could improve model performance on complex tasks.

- Evaluation Metrics: Developing more refined metrics tailored to specific task types, beyond general-purpose ones like ROUGE-L, could provide deeper insights into model efficacy.
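To illustrate why a general-purpose metric such as ROUGE-L may miss task-specific quality, here is a minimal sketch of the metric as an F1 score over the longest common subsequence (LCS) of tokens. It assumes lowercased whitespace tokenization and equal weighting of precision and recall; established implementations differ in tokenization, stemming, and weighting, so this is not the paper's exact scorer.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token sequences."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(prediction: str, reference: str) -> float:
    """LCS-based F1 between two strings (simplified ROUGE-L)."""
    pred = prediction.lower().split()
    ref = reference.lower().split()
    if not pred or not ref:
        return 0.0
    lcs = lcs_length(pred, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(pred)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
exact = rouge_l_f1("the cat sat on the mat", reference)
paraphrase = rouge_l_f1("a feline rested on the rug", reference)
print(exact, paraphrase)
```

Under these assumptions the exact match scores 1.0, while the paraphrase shares only the subsequence "on the" with the reference and scores about 0.33 despite similar meaning, which is the kind of gap that task-specific metrics would aim to close.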

In conclusion, "NaturalInstructions 2.0" establishes a broad foundation for developing and evaluating NLP models on cross-task generalization from instructions. It offers not only a benchmark but also a stepping stone towards more adaptable and responsive AI systems.