An Academic Essay on "Transformers in Vision: A Survey"

The paper "Transformers in Vision: A Survey" by Khan et al. comprehensively examines the application of Transformer models to computer vision. Although Transformers were introduced for natural language processing, they have shown significant promise across vision tasks because they can model long-range dependencies and process input elements in parallel. The survey reviews the adaptations and innovations that have made Transformers usable across a wide range of computer vision applications.

Fundamental Concepts and Design Variants

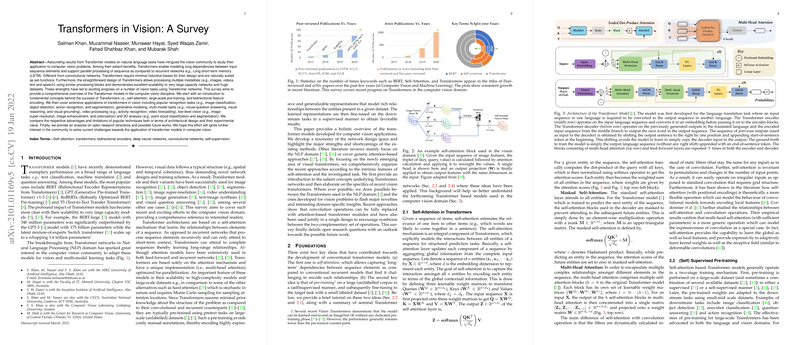

The survey begins by outlining two ideas central to conventional Transformer models: self-attention and pre-training. Self-attention, the cornerstone of Transformer architectures, captures long-range dependencies by learning relationships between all pairs of elements in a sequence. This contrasts with recurrent networks, which process a sequence step by step and therefore struggle to retain long-range relationships.
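To make the "all pairs of elements" idea concrete, here is a minimal pure-Python sketch of single-head scaled dot-product self-attention, softmax(QK^T / sqrt(d_k)) V, as defined in the original Transformer. The helper names and the toy identity projection matrices are illustrative assumptions, not code from the survey.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    # Subtract the row maximum before exponentiating for numerical stability.
    mx = max(row)
    exps = [math.exp(v - mx) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence x (n tokens x d features)."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(w_k[0])
    # Every query is scored against every key, so each token can see the whole sequence.
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    weights = [softmax(row) for row in scores]
    # Each output token is an attention-weighted mix of all value vectors.
    return matmul(weights, v), weights

# Toy example: 3 tokens with 2 features each; identity projections keep it readable.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
out, attn = self_attention(x, eye, eye, eye)
```

Because the score matrix is n x n, the cost of this step grows quadratically with sequence length, which is the root of the computational challenge discussed later.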

The discussion then turns to pre-training, in which a model is first trained on a large corpus in a self-supervised manner and then fine-tuned for specific downstream tasks. This two-stage approach has been pivotal for large-scale models such as BERT and GPT, allowing them to achieve state-of-the-art performance across a range of applications.
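As one example of how self-supervision creates labels for free, the sketch below builds a training pair for a BERT-style masked-token pretext task: about 15% of positions become prediction targets, and of those, 80% are replaced by a mask token, 10% by a random token, and 10% are left unchanged, following the recipe published for BERT. The function name, the `[MASK]` string, and the word-level toy vocabulary are assumptions for illustration; GPT instead pre-trains by predicting the next token.

```python
import random

MASK = "[MASK]"  # hypothetical mask-token string for this sketch

def make_masked_example(tokens, vocab, mask_prob=0.15, seed=0):
    """Build an (inputs, labels) pair for a BERT-style masked-token pretext task.

    labels[i] is None where the model is not asked to predict anything.
    """
    rng = random.Random(seed)
    n_targets = max(1, round(mask_prob * len(tokens)))
    targets = set(rng.sample(range(len(tokens)), n_targets))
    inputs, labels = [], []
    for i, tok in enumerate(tokens):
        if i not in targets:
            inputs.append(tok)
            labels.append(None)
            continue
        labels.append(tok)  # the original token is what the model must recover
        r = rng.random()
        if r < 0.8:
            inputs.append(MASK)               # 80%: hide the token
        elif r < 0.9:
            inputs.append(rng.choice(vocab))  # 10%: substitute a random token
        else:
            inputs.append(tok)                # 10%: keep the token unchanged
    return inputs, labels

sentence = "the cat sat on the mat because it was warm".split()
inputs, labels = make_masked_example(sentence, vocab=sorted(set(sentence)))
```

No human annotation is involved: the targets come from the text itself, which is what lets pre-training scale to very large corpora.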

Vision Transformers: Unified Taxonomy and Architectures

The paper organizes vision models that use self-attention into two principal groups: single-head self-attention, applied within CNNs or as a standalone primitive, and multi-head self-attention in Transformer designs. Single-head models such as CCNet and Axial Attention reduce computation while retaining wide context by restricting attention to sparse structures, such as the criss-cross path through a position's row and column (CCNet) or a single axis at a time (axial attention), rather than the entire feature map.
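The saving from sparse attention paths can be checked by counting query-key pairs. The sketch below compares full attention over an H x W feature map, where each query sees all H·W positions, with a criss-cross neighborhood, where each query sees only the H + W - 1 positions on its row and column. Function names are hypothetical; as far as I know, CCNet recovers full-image context by applying its criss-cross module twice in succession, which this count does not model.

```python
def full_attention_keys(h, w, row, col):
    """Full attention: every position of the h x w map is a key for every query."""
    return [(r, c) for r in range(h) for c in range(w)]

def criss_cross_keys(h, w, row, col):
    """Criss-cross attention: only keys on the query's own row and column."""
    same_row = [(row, c) for c in range(w)]
    same_col = [(r, col) for r in range(h) if r != row]  # skip the cell shared with the row
    return same_row + same_col

def attention_pairs(h, w, keys_fn):
    """Total number of query-key pairs over the whole feature map."""
    return sum(len(keys_fn(h, w, r, c)) for r in range(h) for c in range(w))

h, w = 32, 32
full = attention_pairs(h, w, full_attention_keys)  # (h*w)^2 pairs
cc = attention_pairs(h, w, criss_cross_keys)       # h*w*(h+w-1) pairs
ratio = full / cc
```

On a 32 x 32 map the criss-cross path needs roughly 16 times fewer pairs, and the gap widens as the map grows, since full attention scales with (HW)^2 and criss-cross attention with HW(H+W).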

In contrast, multi-head self-attention models, including the Vision Transformer (ViT) and the Swin Transformer, replace standard convolutions almost entirely with attention. ViT, the pioneering model, demonstrated that a pure Transformer applied to a sequence of image patches is viable for image classification, with the caveat that it requires very large datasets for pre-training. Subsequent models such as DeiT addressed this limitation through distillation, making it possible to train on mid-sized datasets such as ImageNet. Hierarchical designs such as the Swin Transformer in turn made Transformers better suited to dense prediction, bringing them to a level comparable with traditional CNNs in object detection and segmentation.
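The step that lets ViT treat an image as a sequence is patching: the image is cut into non-overlapping P x P tiles, each tile is flattened into one token, and (in the real model) each token is linearly projected and given a position embedding. The sketch below shows only the tiling and the resulting sequence length on a single-channel toy image; the projection, position embeddings, and color channels are omitted, and the function names are hypothetical.

```python
def patchify(image, patch):
    """Split an H x W single-channel image (list of rows) into flattened patch x patch tiles.

    Tiles are emitted in raster order, i.e. the order in which a ViT-style
    model would receive them as a token sequence.
    """
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tokens.append([image[top + i][left + j]
                           for i in range(patch) for j in range(patch)])
    return tokens

def vit_sequence_length(h, w, patch, cls_token=True):
    """Number of tokens after patching, plus one if a class token is prepended."""
    return (h // patch) * (w // patch) + (1 if cls_token else 0)

# A 4 x 4 toy image whose pixel values are 0..15 in raster order.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
tokens = patchify(image, 2)
```

For a 224 x 224 input with 16 x 16 patches this gives 196 patch tokens, or 197 with a class token. Because every token attends to every other, halving the patch size quadruples the token count, which is one reason hierarchical, window-based designs such as Swin are attractive for high-resolution dense prediction.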

Self-Supervision and Multi-Modal Learning

The paper details self-supervised learning approaches that have been adapted for training vision Transformers, including masked image modeling and contrastive learning. These approaches derive their supervisory signal from the images themselves, reducing the dependence of data-hungry Transformers on large labeled datasets.
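Contrastive learning can be summarized by a single loss. The sketch below is a generic InfoNCE-style loss for one anchor embedding: the anchor should be more similar (by cosine similarity, scaled by a temperature) to its positive, typically another augmented view of the same image, than to negatives drawn from other images. The embeddings, temperature value, and function names are assumptions for illustration; this is not the exact objective of any particular method covered by the survey.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for one anchor: cross-entropy of picking the positive among all candidates."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    mx = max(logits)
    log_sum = mx + math.log(sum(math.exp(l - mx) for l in logits))
    return log_sum - logits[0]  # equals -log softmax(logits)[0]

anchor = [1.0, 0.0]
# Positive view is close to the anchor: low loss.
good = info_nce(anchor, positive=[0.9, 0.1], negatives=[[0.0, 1.0], [-1.0, 0.0]])
# Positive view is orthogonal while a negative is close: high loss.
bad = info_nce(anchor, positive=[0.0, 1.0], negatives=[[0.9, 0.1], [-1.0, 0.0]])
```

Minimizing this loss pulls views of the same image together and pushes different images apart in embedding space, all without class labels.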

The section on multi-modal tasks underscores the versatility of Transformers in integrating vision and language. Models such as ViLBERT and LXMERT use two-stream architectures, processing each modality in its own stream and linking the streams through cross-modal attention layers, whereas single-stream designs such as UNITER process visual and linguistic inputs jointly in a single Transformer. These models achieved state-of-the-art results on tasks such as visual question answering and visual reasoning.
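The difference between the two fusion styles can be sketched in a few lines. In a two-stream model, a cross-attention step lets queries from one modality (for example, words) attend over keys and values from the other (for example, image regions); in a single-stream model, both sets of tokens are concatenated into one sequence, tagged with a modality id, and fed to one Transformer. Learned projections, layer stacks, and real features are omitted, and all names and toy values here are hypothetical.

```python
import math

def softmax(row):
    mx = max(row)
    exps = [math.exp(v - mx) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attention(queries, keys, values):
    """Two-stream fusion: each query from one modality attends over the other modality."""
    d = len(keys[0])
    out = []
    for q in queries:
        w = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        out.append([sum(wi * v[j] for wi, v in zip(w, values)) for j in range(len(values[0]))])
    return out

def single_stream_input(text_tokens, image_tokens):
    """Single-stream fusion: one joint sequence tagged with a modality id (0 = text, 1 = image)."""
    seq = text_tokens + image_tokens
    modality = [0] * len(text_tokens) + [1] * len(image_tokens)
    return seq, modality

text = [[1.0, 0.0], [0.0, 1.0]]                 # two toy word embeddings
regions = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # three toy region features
fused_text = cross_attention(text, regions, regions)
seq, modality = single_stream_input(text, regions)
```

The single-stream form lets ordinary self-attention mix the modalities at every layer, while the two-stream form keeps modality-specific processing separate and exchanges information only in the cross-attention layers.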

Applications Across Computer Vision Tasks

Transformers have been deployed successfully in many vision tasks beyond classification. In object detection, DETR formulates detection as end-to-end set prediction, removing hand-crafted components such as anchor generation and non-maximum suppression. In segmentation, models such as SegFormer use hierarchical Transformer designs to capture fine spatial detail.
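The key ingredient of DETR's set prediction is a one-to-one matching between predicted and ground-truth boxes, which DETR computes with the Hungarian algorithm. The sketch below finds the same kind of minimum-cost assignment by exhaustive search, which is only practical for tiny inputs, and uses a simplified cost (L1 distance between boxes) instead of DETR's combination of class probability, L1, and generalized IoU terms. Box values and function names are hypothetical.

```python
from itertools import permutations

def l1_box_cost(pred, target):
    """L1 distance between two boxes given as (cx, cy, w, h); a simplification of DETR's cost."""
    return sum(abs(p - t) for p, t in zip(pred, target))

def match_predictions(preds, targets):
    """Return the lowest-cost one-to-one assignment {target index: prediction index}.

    Brute force over all injective assignments; DETR solves the same
    problem efficiently with the Hungarian algorithm.
    """
    best_cost, best = float("inf"), None
    for chosen in permutations(range(len(preds)), len(targets)):
        cost = sum(l1_box_cost(preds[p], targets[t]) for t, p in enumerate(chosen))
        if cost < best_cost:
            best_cost, best = cost, dict(enumerate(chosen))
    return best, best_cost

preds = [(0.2, 0.2, 0.1, 0.1), (0.7, 0.7, 0.2, 0.2), (0.5, 0.5, 0.9, 0.9)]
targets = [(0.7, 0.68, 0.2, 0.2), (0.21, 0.2, 0.1, 0.1)]
assignment, cost = match_predictions(preds, targets)
```

Here target 0 is matched to prediction 1 and target 1 to prediction 0; the leftover prediction would be trained to output "no object". Because each ground-truth object claims exactly one prediction, duplicate detections are penalized during training, which is why a separate non-maximum suppression step is unnecessary.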

In video understanding, Transformers help model long-range temporal dependencies, improving performance on tasks such as action recognition and video object detection. The survey also highlights applications in low-shot learning, where Transformers adapt embeddings to the task at hand, and in 3D analysis, where they model spatial relationships among the points of a point cloud.
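One reason self-attention suits point clouds is that a point cloud is an unordered set, and self-attention without positional encodings is permutation-equivariant: shuffling the input points shuffles the outputs in exactly the same way. The sketch below checks this property numerically with identity projections; real point-cloud Transformers also encode 3D coordinates or relative positions, which is omitted here, and the function name is hypothetical.

```python
import math

def softmax(row):
    mx = max(row)
    exps = [math.exp(v - mx) for v in row]
    s = sum(exps)
    return [e / s for e in exps]

def attend(points):
    """Plain self-attention over a point set (identity projections, no positional encoding)."""
    d = len(points[0])
    out = []
    for q in points:
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in points])
        out.append([sum(wi * p[j] for wi, p in zip(w, points)) for j in range(d)])
    return out

cloud = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.5], [0.2, 0.3, 0.9]]
order = [2, 0, 3, 1]                  # an arbitrary reordering of the points
shuffled = [cloud[i] for i in order]
out = attend(cloud)
out_shuffled = attend(shuffled)
# The k-th shuffled output must equal the output of the original point order[k].
same = all(
    math.isclose(a, b, abs_tol=1e-12)
    for i, row in zip(order, out_shuffled)
    for a, b in zip(out[i], row)
)
```

Since the result does not depend on how the points happen to be stored, the model needs no canonical ordering of the cloud.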

Challenges and Future Directions

Despite the transformative impact and impressive capabilities of Transformers in vision tasks, several challenges remain:

- High Computational Costs: The cost of self-attention grows quadratically with the number of tokens, which calls for efficient architectural adaptations. Recent efforts reduce this cost through sparse attention mechanisms or low-rank approximations.

- Large Data Requirements: The data-hungry nature of Transformers poses a significant hurdle. Strategies such as knowledge distillation during training have shown potential, yet further research is needed to enable data-efficient training.

- Interpretability: As these models grow more complex, so does the need for tools that adequately explain their decision-making processes.

- Hardware Efficiency: Deploying Transformer models in resource-constrained environments such as IoT devices remains an open challenge that requires optimized architecture search and efficient implementations.
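The quadratic cost in the first challenge is easy to quantify. The sketch below estimates the memory taken by the attention-weight matrix of a single head in a single layer of a ViT-style model with 16 x 16 patches, assuming 4-byte (float32) values and ignoring the class token and all other activations; these are simplifying assumptions, and the function name is hypothetical.

```python
def attention_matrix_bytes(image_size, patch, heads=1, bytes_per_value=4):
    """Memory for one layer's attention weights: heads x N x N values, N = number of patch tokens."""
    n = (image_size // patch) ** 2
    return heads * n * n * bytes_per_value

for size in (224, 448, 896):
    n = (size // 16) ** 2
    mib = attention_matrix_bytes(size, 16) / 2**20
    print(f"{size}px -> {n} tokens, {mib:.1f} MiB per head per layer")
```

Doubling the image side length quadruples the number of tokens N and therefore multiplies the N x N attention matrix by 16. This growth is what sparse attention patterns and low-rank approximations aim to curb, and it also bears directly on memory-limited hardware.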

Conclusion

This survey highlights the rapid progress in applying Transformer models to diverse computer vision problems. By providing a comprehensive overview and identifying critical areas for future research, it serves as a valuable resource for researchers and practitioners working at the intersection of deep learning and computer vision. Adapting the Transformer architecture to the demands of vision, while addressing its inherent challenges, offers a promising path toward more efficient and effective vision systems.