A Survey of Visual Transformers

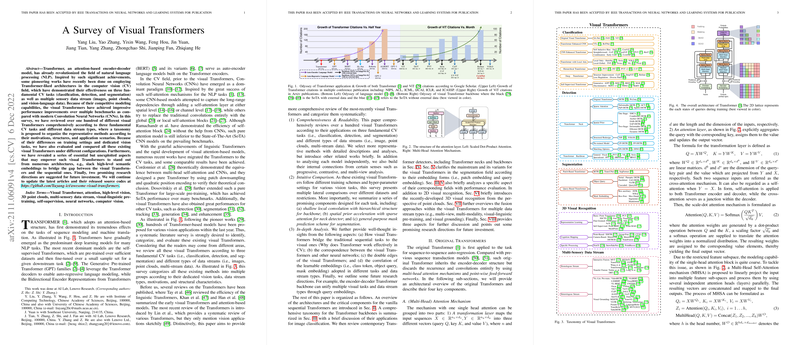

Transformer architectures, originally developed for natural language processing, are now widely applied to computer vision. The paper surveys visual Transformers across the fundamental computer vision (CV) tasks of classification, detection, and segmentation, and covers their application to diverse data types such as images, point clouds, and vision-language data.

Overview of Visual Transformers

Visual Transformers have gained traction by incorporating attention mechanisms that enable them to capture long-range dependencies inherent in CV tasks. The paper organizes more than one hundred models into a clear taxonomy based on their applications, comparing architectures, motivations, and their effectiveness across different vision tasks. The impact on these tasks is measured against state-of-the-art convolutional neural networks (CNNs), highlighting significant improvements in performance and modeling capability.
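To make the idea of long-range dependencies concrete, the following is a minimal sketch of single-head scaled dot-product self-attention over a sequence of patch tokens. It is an illustration, not code from the survey: the function names are hypothetical, the query, key, and value projections are assumed to be the identity (real models learn them), and plain Python lists stand in for tensors. The point it shows is that every token's output is a weighted mix of all tokens, regardless of how far apart the corresponding patches are in the image.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head self-attention with identity projections (assumption).

    tokens: list of N token vectors, each a list of d floats.
    Returns (outputs, weights), where weights[i][j] is how strongly
    token i attends to token j.
    """
    d = len(tokens[0])
    scale = 1.0 / math.sqrt(d)
    outputs, weights = [], []
    for q in tokens:
        # Compare this token against every token, near or far.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all token vectors.
        outputs.append(
            [sum(w[j] * tokens[j][c] for j in range(len(tokens))) for c in range(d)]
        )
    return outputs, weights


if __name__ == "__main__":
    patch_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    outputs, weights = self_attention(patch_tokens)
    for row in weights:
        print([round(x, 3) for x in row])
```

Each row of the printed weight matrix sums to one, and no entry is forced to zero by distance, which is the property that distinguishes global attention from a fixed-size convolution kernel.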

Key Contributions

- Comprehensive Review: The survey maps the broad landscape of visual Transformers, focusing on their structure and design rationale across different CV tasks. It discusses architectural variants such as hierarchical, deep, and self-supervised visual Transformers, providing insights into their distinct contributions and reported performance.

- Taxonomy of Visual Transformers: The paper classifies visual Transformers by task and data type, which helps in understanding the diverse approaches and their applications in CV. For segmentation, for instance, the models are organized into patch-based and query-based Transformers, with the query-based group further divided into object queries and mask embeddings.

- Performance Analysis: Detailed performance analysis is presented, showing how visual Transformers achieve substantial accuracy gains over CNNs, especially with large datasets in classification tasks. The paper provides visual and tabular comparisons on tasks such as ImageNet classification and COCO detection, elucidating their efficiency and scalability.

- Future Directions: The paper highlights potential research directions, advocating for further exploration into set prediction in classification, self-supervised learning paradigms, and more efficient training strategies to mitigate limitations such as longer convergence times and computational complexity.
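The computational complexity mentioned above can be illustrated with simple arithmetic. The sketch below assumes a plain patch-based model with global self-attention, where an image is cut into non-overlapping square patches and each patch becomes one token. The 16-pixel patch size and the 224 and 448 pixel image sizes are illustrative assumptions, not figures taken from the survey, and the function names are hypothetical.

```python
def num_patch_tokens(height, width, patch_size):
    """Number of tokens when an image is split into non-overlapping patches."""
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch size")
    return (height // patch_size) * (width // patch_size)


def attention_matrix_entries(num_tokens):
    """Global self-attention compares every token with every token."""
    return num_tokens * num_tokens


if __name__ == "__main__":
    for side in (224, 448):
        n = num_patch_tokens(side, side, 16)
        # Prints: "224 196 38416" then "448 784 614656"
        print(side, n, attention_matrix_entries(n))
```

Doubling the image side length quadruples the token count and multiplies the attention matrix size by sixteen, which is one reason hierarchical and otherwise more efficient designs are of interest.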

Implications and Speculation on Future Developments

Visual Transformers hold promise for unifying multiple CV tasks within a coherent architectural framework, drawing on their ability to learn across different data modalities and task requirements. This unification could enable end-to-end training without handcrafted components such as anchor boxes in object detection or bounding box proposals in segmentation. More broadly, wider adoption of visual Transformers could lead to more generalizable and robust models that perform many tasks with less data-specific tuning.
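One way end-to-end detectors avoid anchor boxes is to treat detection as set prediction: the model emits a fixed-size set of boxes, and training pairs each ground-truth box with exactly one prediction through a one-to-one matching. The sketch below illustrates that matching step under simplifying assumptions. The cost is only an L1 distance between box coordinates (practical systems typically also include class and overlap terms), the search is brute force over permutations rather than the Hungarian algorithm, and all names are hypothetical.

```python
from itertools import permutations


def l1_cost(pred_box, gt_box):
    """L1 distance between two boxes given as (cx, cy, w, h)."""
    return sum(abs(p - g) for p, g in zip(pred_box, gt_box))


def match_predictions(pred_boxes, gt_boxes):
    """Find the lowest-cost one-to-one assignment of predictions to targets.

    Returns (assignment, total_cost), where assignment[i] is the index of
    the prediction matched to ground-truth box i. Brute force, so only
    suitable for very small sets.
    """
    if len(pred_boxes) < len(gt_boxes):
        raise ValueError("need at least as many predictions as targets")
    best_cost, best = float("inf"), ()
    for perm in permutations(range(len(pred_boxes)), len(gt_boxes)):
        cost = sum(l1_cost(pred_boxes[p], gt_boxes[g]) for g, p in enumerate(perm))
        if cost < best_cost:
            best_cost, best = cost, perm
    return list(best), best_cost


if __name__ == "__main__":
    preds = [[0.1, 0.1, 0.2, 0.2], [0.5, 0.5, 0.3, 0.3], [0.9, 0.9, 0.1, 0.1]]
    targets = [[0.5, 0.5, 0.3, 0.3], [0.1, 0.1, 0.2, 0.2]]
    print(match_predictions(preds, targets))
```

Predictions left unmatched are treated as "no object" during training, so no hand-designed anchors or duplicate-suppression step is needed to decide which output is responsible for which target.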

The survey underscores the importance of visual Transformers in advancing CV tasks and suggests that with continuous research, they could become a cornerstone technology for future AI systems, akin to what CNNs achieved in the earlier deep learning wave.