A Survey on Visual Transformer

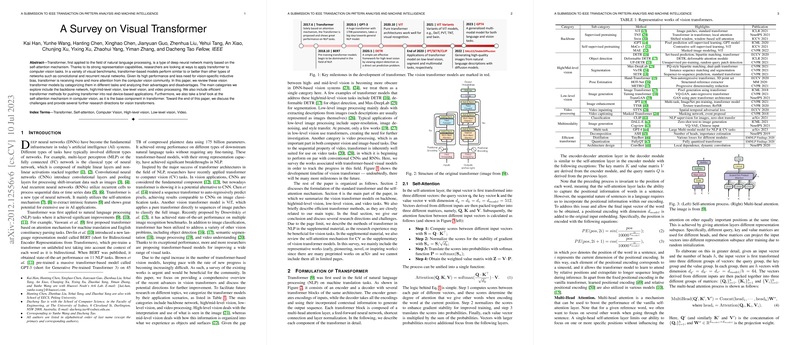

The paper "A Survey on Visual Transformer" by Han et al. provides a thorough and comprehensive review of the application of transformer models in the domain of computer vision (CV). Transformer models, well-known for their success in NLP, have recently been adapted for visual tasks, leading to significant advancements. This survey meticulously categorizes and analyzes the state-of-the-art vision transformers, covering their implementations across various tasks such as backbone networks, high/mid-level vision, low-level vision, and video processing.

Vision Transformers in Backbone Networks

One of the primary applications of transformers in CV is as backbone networks for image representation. Unlike traditional convolutional neural networks (CNNs), vision transformers leverage the self-attention mechanism to capture global dependencies in images. The paper reviews several notable models, including Vision Transformer (ViT), Data-efficient image transformer (DeiT), Twin Transformer (TNT), and Swin Transformer. ViT employs a straightforward approach by treating an image as a sequence of patches and applying a transformer encoder to this sequence. It achieves impressive results when pre-trained on large datasets. DeiT introduces data-efficient training strategies that allow competitive performance using only the ImageNet dataset. The Swin Transformer uses a hierarchical structure with shifted windows to balance local and global context efficiently.

High/Mid-Level Vision Tasks

Transformers have been effectively applied to high-level vision tasks such as object detection, segmentation, and pose estimation. In object detection, the Detection Transformer (DETR) reformulates the task as a set prediction problem, eliminating the need for conventional components like anchor generation and non-maximum suppression. Deformable DETR enhances detection performance by employing a deformable attention mechanism, leading to faster convergence and improved accuracy, particularly on small objects.

For segmentation tasks, the paper reviews models like SETR and Max-DeepLab, which leverage transformer architectures for pixel-level predictions. SETR uses standard transformer encoders to extract image features, which are then used for semantic segmentation. Max-DeepLab, on the other hand, introduces a dual-path network combining CNN and transformer features for end-to-end panoptic segmentation.

In pose estimation, transformers have been utilized to capture the complex relationships in human and hand poses. Models like METRO (Mesh Transformer) and Hand-Transformer demonstrate the application of transformers in predicting 3D poses from 2D images or point clouds, showcasing their ability to handle intricate spatial dependencies.

Low-Level Vision Tasks

While traditionally dominated by CNNs, low-level vision tasks such as image generation and enhancement have also benefited from transformer models. The Image Processing Transformer (IPT) utilizes pre-training on large datasets with various degradation processes, achieving state-of-the-art results in super-resolution, denoising, and deraining tasks. IPT's architecture, featuring a multi-head and multi-tail structure, exemplifies the adaptiveness of transformers to different image processing tasks.

In image generation, models like TransGAN and Taming Transformer employ the transformer architecture to generate high-resolution images, leveraging the global context captured by self-attention mechanisms. These models outperform conventional GANs, particularly in terms of generating coherent and contextually accurate images.

Video Processing

Transformers have also demonstrated promising results in the video domain. For video action recognition, models such as the action transformer use self-attention to model the relationship between subjects and context in a video frame. In video object detection, architectures like the spatiotemporal transformer integrate spatial and temporal information to improve detection performance effectively.

Video inpainting is another area where transformers excel. The Spatial-Temporal Transformer Network (STTN), for instance, employs self-attention to fill in missing regions in video frames by capturing and replicating contextual information both spatially and temporally.

Efficient Transformer Methods

Transformers inherently require significant computational resources, which is a bottleneck for their deployment in resource-limited environments. The survey discusses several methods to improve the efficiency of transformers, including network pruning, low-rank decomposition, knowledge distillation, and quantization. These techniques aim to reduce the computational burden while maintaining model performance, making transformers more viable for practical applications.

Implications and Future Directions

The advancements in vision transformers represent substantial progress in CV, demonstrating their potential to outperform traditional CNNs across various tasks. From a theoretical standpoint, the ability of transformers to capture long-range dependencies and integrate global context is fundamentally transformative for CV models.

Future directions include the development of more efficient transformers, understanding the interpretability of their decisions, and leveraging their pre-training capabilities for robust generalization across domains. Research must also focus on unifying models for multitask learning, potentially leading to grand unified models capable of addressing a wide array of visual and possibly multimodal tasks.

In conclusion, while transformers have already reshaped the trajectory of computer vision research, continued exploration into efficient architectures and broader applications promises even greater advancements and more versatile AI systems.