Overview of ERNIE-Gram: Pre-Training with Explicitly N-Gram Masked Language Modeling for Natural Language Understanding

Introduction

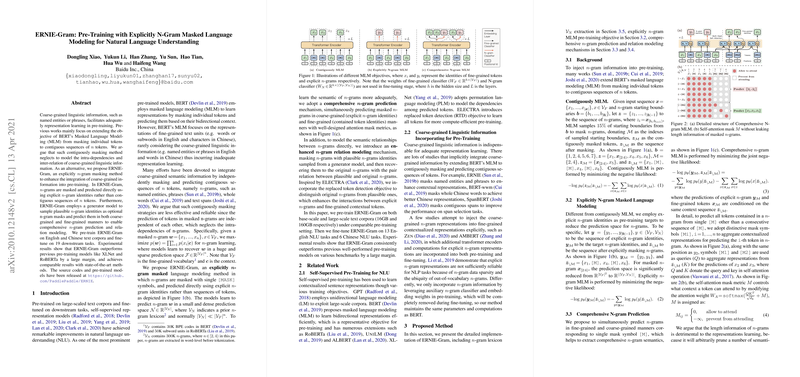

The paper introduces ERNIE-Gram, an approach to language model pre-training that uses explicitly masked n-grams to improve natural language understanding (NLU). It targets a limitation of traditional masked language models (MLMs) such as BERT, which mask and predict individual tokens. ERNIE-Gram instead integrates coarse-grained linguistic information, such as phrases and named entities, into the pre-training process.

Methodology

ERNIE-Gram's methodology combines three components:

- Explicitly N-Gram Masking: Conventional contiguous MLMs mask a span of n tokens and predict each token separately. ERNIE-Gram instead masks n-grams and predicts them directly as explicit n-gram identities drawn from an n-gram lexicon. Predicting one identity from the lexicon, rather than n tokens from the full vocabulary, reduces the prediction space and makes the task more focused.

- Comprehensive N-Gram Prediction: The model predicts masked n-grams in both a coarse-grained manner (the n-gram identity as a whole) and a fine-grained manner (the individual tokens inside the n-gram), giving it a more robust understanding of n-gram semantics.

- Enhanced N-Gram Relation Modeling: A generator model samples plausible n-gram identities to serve as optional n-gram masks, which lets the main model learn subtle semantic relationships between n-grams.
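The three components above can be illustrated with a small Python sketch. This is a hypothetical toy, not ERNIE-Gram's implementation: the lexicon `NGRAM_LEXICON`, all function names, the greedy longest-match n-gram finder, the convention that a masked n-gram collapses to a single `[MASK]` position, and the fixed probability list standing in for the generator model are assumptions made for illustration. It shows how explicit masking yields one coarse-grained target (an n-gram ID) plus fine-grained token targets per masked span, and why picking from a lexicon shrinks the output space compared with predicting n tokens independently.

```python
import random
from typing import Dict, List, Tuple

MASK = "[MASK]"

# Hypothetical toy lexicon mapping n-grams (2 <= n <= 3) to identity IDs.
# ERNIE-Gram builds its lexicon from the pre-training corpus; this one is made up.
NGRAM_LEXICON: Dict[Tuple[str, ...], int] = {
    ("new", "york"): 0,
    ("new", "york", "city"): 1,
    ("machine", "learning"): 2,
    ("deep", "learning"): 3,
}


def find_ngrams(tokens: List[str], max_n: int = 3) -> List[Tuple[int, int]]:
    """Greedy longest-match scan returning (start, end) spans of lexicon n-grams."""
    spans: List[Tuple[int, int]] = []
    i = 0
    while i < len(tokens):
        for n in range(max_n, 1, -1):  # try the longest n-gram first
            if i + n <= len(tokens) and tuple(tokens[i:i + n]) in NGRAM_LEXICON:
                spans.append((i, i + n))
                i += n
                break
        else:  # no lexicon n-gram starts at position i
            i += 1
    return spans


def explicit_ngram_mask(
    tokens: List[str], mask_prob: float, rng: random.Random
) -> Tuple[List[str], List[Tuple[int, int, List[str]]]]:
    """Mask whole n-grams; each target keeps a coarse label (n-gram ID) and fine labels (tokens).

    Assumption: a masked n-gram collapses to one [MASK] position, so the model
    predicts a single lexicon identity there instead of n separate tokens.
    """
    starts = dict(find_ngrams(tokens))  # start index -> end index
    masked: List[str] = []
    targets: List[Tuple[int, int, List[str]]] = []
    i = 0
    while i < len(tokens):
        if i in starts and rng.random() < mask_prob:
            ngram = tokens[i:starts[i]]
            # (position in masked sequence, coarse target ID, fine-grained token targets)
            targets.append((len(masked), NGRAM_LEXICON[tuple(ngram)], ngram))
            masked.append(MASK)
            i = starts[i]
        else:
            masked.append(tokens[i])
            i += 1
    return masked, targets


def sample_plausible_ngram(generator_probs: List[float], rng: random.Random) -> int:
    """Hypothetical stand-in for the generator: draw an n-gram ID from a given distribution."""
    return rng.choices(range(len(generator_probs)), weights=generator_probs, k=1)[0]


def prediction_space(vocab_size: int, n: int, lexicon_size: int) -> Tuple[int, int]:
    """Token-by-token combinations for an n-token span vs. one pick from the lexicon."""
    return vocab_size ** n, lexicon_size


if __name__ == "__main__":
    rng = random.Random(0)
    tokens = "i moved to new york city to study machine learning".split()
    # mask_prob=1.0 masks every lexicon n-gram, which keeps the output deterministic
    masked, targets = explicit_ngram_mask(tokens, mask_prob=1.0, rng=rng)
    print(masked)   # ['i', 'moved', 'to', '[MASK]', 'to', 'study', '[MASK]']
    print(targets)  # [(3, 1, ['new', 'york', 'city']), (6, 2, ['machine', 'learning'])]
    # All probability mass on ID 3, so the "generator" always proposes ("deep", "learning")
    print(sample_plausible_ngram([0.0, 0.0, 0.0, 1.0], rng))  # 3
    # Hypothetical sizes: 30,000-token vocabulary, bigrams, 500,000-entry lexicon
    print(prediction_space(30000, 2, 500000))  # (900000000, 500000)
```

In a trained system the generator would be a model whose output distribution over the lexicon replaces the fixed `generator_probs` list, and its sampled n-gram would occupy the masked position while the main model still predicts the original identity; both of those details are simplified here.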

The model is pre-trained on English and Chinese corpora and fine-tuned across 19 downstream tasks.

Results

Empirical evaluations show that ERNIE-Gram outperforms previous pre-training models such as XLNet and RoBERTa by a large margin on NLU benchmarks. Highlights include strong results on the GLUE benchmark and on SQuAD, where ERNIE-Gram improves notably over the baseline methods.

Analysis

Explicit n-gram masking lets ERNIE-Gram maintain tighter dependencies within coarse-grained text units, capturing semantic details that token-level models might overlook. Relation modeling with plausible n-gram identities sampled by the generator strengthens this advantage by teaching the model how pairs of n-grams relate semantically.

Implications

From a theoretical standpoint, ERNIE-Gram shows that richer, coarse-grained semantic information can be integrated into language model pre-training without increasing model complexity during fine-tuning. Practically, this suits tasks that require a nuanced understanding of complex linguistic structures, such as named entity recognition and question answering.

Future Directions

The success of ERNIE-Gram suggests several avenues for further research:

- Exploring larger and more comprehensive n-gram lexicons, including n-grams longer than trigrams.

- Applying ERNIE-Gram in multilingual settings to assess how well it adapts across diverse languages and datasets.

- Scaling to larger model sizes to study its scaling behavior and its impact on more resource-intensive tasks.

In conclusion, ERNIE-Gram makes a significant contribution to integrating n-gram semantics into language model pre-training, offering both theoretical insights and practical gains on NLU tasks.