Analysis of the ELECTRA Pre-training Framework

The paper "ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators" introduces an innovative approach to self-supervised learning for language representation, diverging from traditional masked LLMing (MLM) techniques like those employed in BERT. Instead of training models to generate masked tokens, ELECTRA optimizes them to distinguish between real input tokens and plausible replacements sampled from a small generator network. This methodological shift positions the model as a discriminator, enhancing its sample efficiency and computational performance.

Methodological Advancements

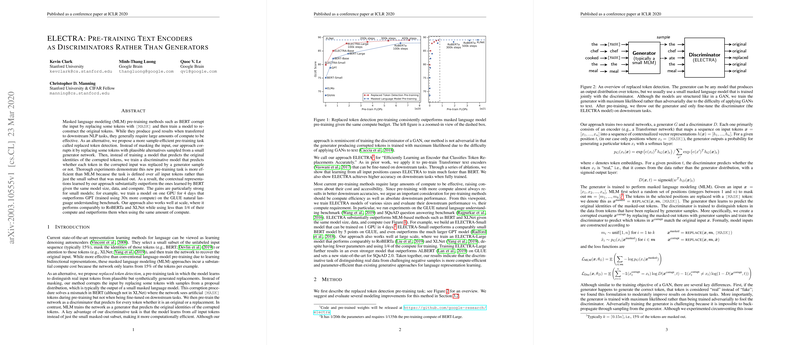

ELECTRA introduces a pre-training task known as replaced token detection. Unlike MLM, where a subset of tokens is masked out, ELECTRA replaces some tokens with samples from a generator, compelling the model to predict whether each token in the input is authentic or synthesized. This approach utilizes all input tokens for training rather than just a subset, which significantly reduces computational overhead and enhances efficiency.

The framework comprises a generator, which performs masked LLMing, and a discriminator, which identifies whether tokens have been replaced. Notably, the generator is trained via maximum likelihood, rather than in an adversarial manner, due to practical concerns with adversarial training on text data. Furthermore, to improve efficiency, ELECTRA employs a smaller generator model, sharing weights where feasible, thus retaining effectiveness even at reduced computational scales.

Empirical Evaluation

ELECTRA's efficacy is substantiated through extensive experiments on benchmarks like the GLUE and SQuAD datasets. Results consistently indicate that ELECTRA outperforms contemporary approaches such as BERT, GPT, RoBERTa, and XLNet across various configurations, particularly with smaller and more computationally efficient models. For instance, ELECTRA-Small, trained on modest hardware, can surpass larger models like GPT on GLUE tasks despite significant differences in parameters and computational investments.

When scaled to larger models, ELECTRA retains competitiveness, achieving comparable performance to state-of-the-art models while requiring less pre-training compute. ELECTRA-400K, when trained with less than a quarter of the compute used by RoBERTa or XLNet, maintains performance, and the more extensively trained ELECTRA-1.75M sets new benchmarks on SQuAD 2.0, highlighting its robustness at larger scales.

Theoretical and Practical Implications

Practically, ELECTRA provides a more compute-efficient alternative to existing pre-training methods, reducing the cost and time barriers often associated with developing state-of-the-art LLMs. The model's ability to derive meaningful representations from all input tokens could democratize access to NLP models, enabling broader research and application by institutions with limited computational resources.

Theoretically, the results contribute insights into the potential for discriminative pre-training tasks to outperform generative ones in certain contexts. By circumventing the necessity for tokens, ELECTRA mitigates discrepancies between pre-training and fine-tuning phases, addressing a prominent limitation in previous models such as BERT.

Future Prospects

Given ELECTRA's promising performance, several avenues for future development arise. Refinements could explore different configurations of generator-discriminator architecture, possibly integrating adversarial strategies more effectively without the pitfalls observed in current experimentation. Additionally, extending ELECTRA to multilingual domains could vastly broaden its applicability, capitalizing on its sample efficiency in diverse linguistic settings.

This paper's contributions are technical and notable, emphasizing a shift from generative to discriminative pre-training without inflating computational costs. As the landscape of NLP continues to evolve, methodologies like ELECTRA offer significant enhancements in both efficiency and accuracy, pushing the boundaries of what's achievable with LLMs.