ERNIE: Enhanced Representation through Knowledge Integration

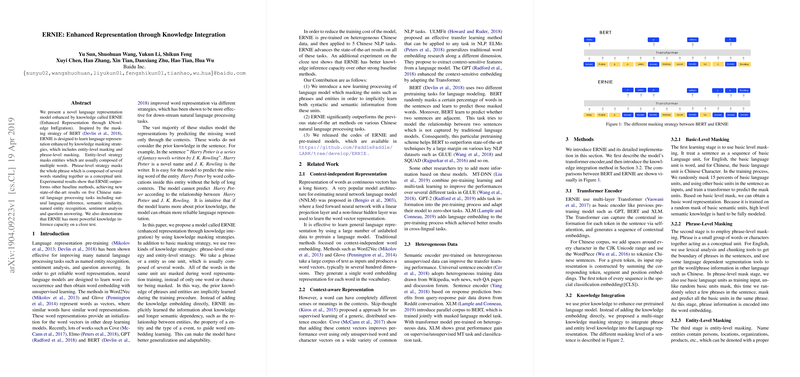

The paper "ERNIE: Enhanced Representation through Knowledge Integration" by Yu Sun et al. presents a pre-trained language representation model that integrates knowledge during the pre-training phase. The approach builds on models such as BERT, adding new masking strategies and heterogeneous data sources so that the model learns semantically richer representations.

At its core, ERNIE (Enhanced Representation through Knowledge Integration) introduces two novel masking strategies: entity-level masking and phrase-level masking, supplementing the basic word-level masking employed by BERT. Entity-level masking involves hiding entities, which are typically composed of multiple words, whereas phrase-level masking involves concealing entire phrases that function as conceptual units within sentences. By masking these larger units, the model is compelled to learn more comprehensive semantic information, going beyond the capacity of traditional models that only focus on word co-occurrence within contexts.
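The difference between word-level and span-level masking can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `mask_spans`, the `mask_prob` parameter, and the hand-written span boundaries are assumptions made for illustration, and BERT-style random or unchanged token replacement is omitted for brevity.

```python
import random

MASK = "[MASK]"

def mask_spans(tokens, spans, mask_prob=0.15, rng=None):
    """Mask whole spans (entities or phrases) rather than single tokens.

    tokens: list of str
    spans:  list of (start, end) half-open index pairs, one per unit.
            Hypothetical input: in practice these would come from an
            entity recognizer or a phrase chunker.
    Returns (masked_tokens, labels), where labels[i] holds the original
    token at each masked position and None elsewhere.
    """
    rng = rng or random.Random()
    masked = list(tokens)
    labels = [None] * len(tokens)
    for start, end in spans:
        # One random draw per span: either every token in the unit is
        # hidden or none is, so the model cannot recover part of an
        # entity from its neighbouring tokens.
        if rng.random() < mask_prob:
            for i in range(start, end):
                labels[i] = tokens[i]
                masked[i] = MASK
    return masked, labels
```

For example, with `tokens = ["Harry", "Potter", "is", "a", "series", "of", "fantasy", "novels"]`, passing `spans=[(0, 2)]` treats "Harry Potter" as one entity, while passing one single-token span per position reduces the function to basic word-level masking.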

Key Contributions and Findings

- Novel Masking Strategies: ERNIE introduces phrase-level and entity-level masking alongside basic word-level masking. This multi-stage masking strategy enables the model to capture richer syntactic and semantic information during pre-training. The entity-level masking allows the model to learn relationships among entities like people, places, and organizations, while phrase-level masking captures more complex syntactic constructs.

- State-of-the-Art Performance: The experimental results show that ERNIE surpasses existing models on multiple Chinese NLP tasks, setting new state-of-the-art results in natural language inference, semantic similarity, named entity recognition, sentiment analysis, and question answering. For instance, on the XNLI dataset, ERNIE achieves a test set accuracy of 78.4%, compared with 77.2% for BERT.

- Heterogeneous Data Integration: The corpus used for pre-training ERNIE includes a heterogeneous mix of data sourced from Chinese Wikipedia, Baidu Baike, Baidu News, and Baidu Tieba. This diverse data selection ensures that the model is exposed to various linguistic styles and domains, enhancing its generalizability and robustness across different NLP tasks.

- Dialogue Language Model (DLM): To further enhance its capability, ERNIE incorporates the Dialogue Language Model (DLM) task, which is designed to capture implicit relationships in dialogues. The DLM task pre-trains the model on query-response pairs from dialogue threads and asks it to judge whether a multi-turn conversation is real or fake.
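The real-versus-fake objective of the DLM task can be sketched as a data-construction step. This is a minimal sketch, not the authors' pipeline: it assumes a fake sample is made by swapping one turn for a randomly chosen unrelated sentence, and the names `make_dlm_example`, `distractors`, and `fake_prob` are hypothetical.

```python
import random

def make_dlm_example(dialogue, distractors, fake_prob=0.5, rng=None):
    """Build one (turns, label) training pair for a real/fake dialogue task.

    dialogue:    list of str, alternating query and response turns
    distractors: list of str, unrelated sentences used to corrupt a turn
                 (assumed to be sampled from elsewhere in the corpus)
    Returns the (possibly corrupted) turns and a label:
    1 for a real conversation, 0 for a fake one.
    """
    rng = rng or random.Random()
    turns = list(dialogue)  # copy so the caller's dialogue is not modified
    if rng.random() < fake_prob:
        # Corrupt exactly one turn, query or response, chosen at random.
        idx = rng.randrange(len(turns))
        turns[idx] = rng.choice(distractors)
        return turns, 0
    return turns, 1
```

A classifier head trained on these labels has to model whether each turn is coherent with the rest of the conversation, which is the implicit relationship the task is meant to capture.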

Experimental Evaluation

The authors conducted extensive evaluations across five Chinese NLP tasks:

- Natural Language Inference (XNLI): ERNIE improved test accuracy by 1.2 percentage points over BERT, a notable gain in the model's ability to perform textual entailment.

- Semantic Similarity (LCQMC): The model demonstrated improvements in accuracy, indicating superior performance in identifying sentence pairs with similar intentions.

- Named Entity Recognition (MSRA-NER): ERNIE's F1 score was 1.2 percentage points higher than BERT's, highlighting its enhanced ability to identify and classify named entities.

- Sentiment Analysis (ChnSentiCorp): Accuracy on the sentiment analysis task rose by 1.1 percentage points, underscoring the model's effectiveness in determining the sentiment of sentences.

- Question Answering (nlpcc-dbqa): ERNIE showed improvements in MRR and F1 metrics, suggesting better alignment of questions with their corresponding answers.
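For readers unfamiliar with MRR (mean reciprocal rank), the metric reported for the question answering task, the standard definition can be sketched as follows. The input layout, one list of 0/1 relevance flags per question ordered by model score, is an assumption for illustration and not the benchmark's file format.

```python
def mean_reciprocal_rank(ranked_labels):
    """Compute MRR over a set of questions.

    ranked_labels: list of lists; each inner list holds 0/1 relevance
    flags for one question's candidate answers, ordered from the
    highest-scored candidate to the lowest.
    """
    total = 0.0
    for labels in ranked_labels:
        reciprocal_rank = 0.0  # stays 0 if no correct answer is ranked
        for rank, is_correct in enumerate(labels, start=1):
            if is_correct:
                reciprocal_rank = 1.0 / rank
                break  # only the first correct answer counts
        total += reciprocal_rank
    return total / len(ranked_labels)
```

A question whose correct answer is ranked first contributes 1, one ranked second contributes 0.5, and so on, so a higher MRR means correct answers appear nearer the top of the candidate list.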

Implications and Future Directions

The introduction of knowledge integration through entity-level and phrase-level masking sets a new direction for pre-trained language models. The performance gains across various tasks suggest that incorporating more semantic and syntactic information during pre-training can lead to better-contextualized word embeddings and improved model generalization.

The success of ERNIE opens several avenues for future research:

- Cross-Linguistic Application: Extending the integration of knowledge to other languages beyond Chinese, particularly in languages with rich syntactic structures, could validate the scalability and robustness of this approach.

- Incorporating Additional Knowledge Types: Future models could benefit from integrating other types of knowledge, such as syntactic parsing, relational knowledge graphs, and weak supervision signals from different domains, to construct even richer semantic representations.

- Enhancing Multi-Task Learning: By combining knowledge integration with multi-task learning strategies, similar to the MT-DNN approach, future models could further improve performance on a wide array of NLP benchmarks.

In conclusion, the ERNIE model marks a significant step forward in language model pre-training, demonstrating the potential benefits of incorporating entity-level and phrase-level knowledge through masking. The improvements across Chinese NLP tasks underscore the efficacy of the new masking strategies and heterogeneous data integration, which could inspire similar advancements in future research.