PP-OCR: A Practical Ultra Lightweight OCR System (Du et al., 2020) addresses the challenge of deploying Optical Character Recognition (OCR) systems in resource-constrained environments, such as mobile phones and embedded devices, while maintaining practical performance. Traditional OCR systems often require large models and significant computational power, which makes them unsuitable for such applications, especially when text appearance varies widely, as it does in scene text and document images.

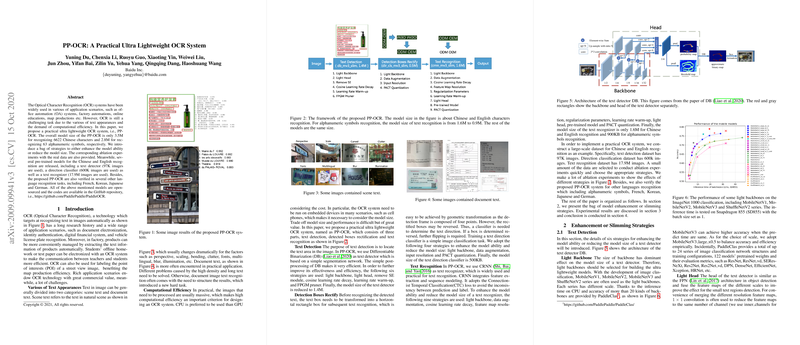

The proposed PP-OCR system is an end-to-end, ultra-lightweight, practical OCR solution designed for efficiency on CPUs and embedded devices. It adopts a modular pipeline consisting of three main stages:

- Text Detection: Locating text bounding boxes in an image.

- Detected Boxes Rectification: Correcting the orientation of the detected text boxes.

- Text Recognition: Transcribing the text within the rectified boxes.
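The three stages above can be sketched as a small driver function. This is only an illustration of the modular structure, not the PaddleOCR API: the names `run_pipeline`, `detect`, `is_reversed`, and `recognize` are hypothetical, the image is a plain nested list, and boxes are assumed to be axis-aligned rectangles for simplicity.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Assumption: axis-aligned boxes (x_min, y_min, x_max, y_max) keep the sketch short.
Box = Tuple[int, int, int, int]
Image = Sequence[Sequence[int]]


@dataclass
class OcrResult:
    box: Box
    text: str


def run_pipeline(
    image: Image,
    detect: Callable[[Image], List[Box]],
    is_reversed: Callable[[Image], bool],
    recognize: Callable[[Image], str],
) -> List[OcrResult]:
    """Run detection, direction correction, and recognition in sequence."""
    results = []
    for box in detect(image):
        x0, y0, x1, y1 = box
        # Stage 1 output: crop the detected region.
        crop = [list(row[x0:x1]) for row in image[y0:y1]]
        # Stage 2: rotate the crop by 180 degrees if the classifier says it is reversed.
        if is_reversed(crop):
            crop = [row[::-1] for row in crop[::-1]]
        # Stage 3: transcribe the rectified crop.
        results.append(OcrResult(box=box, text=recognize(crop)))
    return results
```

Because each stage is passed in as a callable, any one model can be swapped or compressed independently, which is the property the per-stage strategies below rely on.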

A key contribution of the paper is the application of a "bag of strategies" to both enhance model accuracy and reduce model size across all three stages. These strategies are evaluated through extensive ablation studies using large-scale, real-world, and synthetic datasets.

Text Detection

The text detection stage uses a Differentiable Binarization (DB) model, known for its efficiency. To make it ultra-lightweight, PP-OCR employs several strategies:

- Light Backbone: Replaces heavier backbones with efficient ones like MobileNetV3_large_x0.5, selected based on balancing accuracy and CPU inference time metrics from PaddleClas.

- Light Head: Reduces the number of channels in the FPN-like head structure (the convolution channels, referred to as inner_channels) from 256 to 96, significantly reducing model size and inference time with minimal accuracy drop.

- Removing SE Modules: Eliminates Squeeze-and-Excitation (SE) blocks from the backbone. While SE blocks generally improve accuracy, the paper finds that for large input resolutions typical in detection, their accuracy gain is limited but their time cost is high. Removing them reduces model size and inference time without accuracy degradation.

- Cosine Learning Rate Decay: Uses a cosine annealing schedule for the learning rate, which helps achieve better final convergence accuracy compared to step decay.

- Learning Rate Warm-up: Starts training with a small learning rate before increasing it, improving training stability and accuracy.

- FPGM Pruner: Applies Filter Pruning via Geometric Median (FPGM) to remove redundant filters based on their similarity, further reducing model size and accelerating inference on mobile devices (like Snapdragon 855). The pruning ratio is determined based on layer sensitivity analysis.
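The FPGM selection rule can be illustrated with a minimal sketch. It assumes each filter has been flattened to a vector and uses the usual practical approximation of the geometric-median criterion: the filters whose summed Euclidean distance to all other filters is smallest are treated as the most redundant. The function name `fpgm_prune_indices` and the fixed `prune_ratio` argument are hypothetical; in PP-OCR the ratio per layer comes from the sensitivity analysis mentioned above.

```python
import math
from typing import List, Sequence


def fpgm_prune_indices(
    filters: Sequence[Sequence[float]], prune_ratio: float
) -> List[int]:
    """Return indices of the filters closest to the geometric median of a layer."""
    num_prune = int(len(filters) * prune_ratio)

    def distance(a: Sequence[float], b: Sequence[float]) -> float:
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

    # Total distance from each filter to every filter in the same layer.
    totals = [sum(distance(f, g) for g in filters) for f in filters]
    # A small total means the filter sits near the geometric median,
    # so the remaining filters can largely represent it.
    ranked = sorted(range(len(filters)), key=lambda i: totals[i])
    return sorted(ranked[:num_prune])
```

For example, with the four toy filters `[0, 0]`, `[10, 0]`, `[0, 10]`, and `[3, 3]` and a ratio of 0.25, the sketch selects the filter `[3, 3]`, which lies closest to the centre of the others.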

Direction Classification

After text detection, a direction classifier is used to determine if a text line is reversed and needs flipping before recognition. This is treated as a simple image classification task:

- Light Backbone: Uses MobileNetV3_small_x0.35, a very light backbone, as the task is relatively simple.

- Data Augmentation: Employs both Base Data Augmentation (BDA), including rotation, perspective distortion, blur, and noise, and RandAugment to improve robustness and accuracy.

- Input Resolution: Increases the input image resolution for the classifier beyond the typical setting to improve accuracy. Because the backbone is so light, the larger input does not add significant computational cost.

- PACT Quantization: Applies Parameterized Clipping Activation (PACT) for online quantization, modifying the clipping range to better handle activation functions like hard swish used in MobileNetV3. This strategy reduces model size and inference time, and interestingly, showed a slight accuracy improvement in ablation. L2 regularization is added to PACT parameters for robustness.
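The idea behind the modified PACT clipping can be sketched in a few lines. The original PACT formulation clips ReLU outputs to the range [0, α]; the sketch below assumes the modification is a symmetric range [-α, α], so that negative activations such as those produced by hard swish are also bounded. The 8-bit uniform quantizer and the function names `pact_clip` and `fake_quantize` are illustrative assumptions, and in real training α would be a learned parameter with L2 regularization rather than a constant.

```python
def pact_clip(x: float, alpha: float) -> float:
    """Clip an activation to the symmetric range [-alpha, alpha] (assumed form)."""
    return max(-alpha, min(alpha, x))


def fake_quantize(x: float, alpha: float, bits: int = 8) -> float:
    """Clip, then snap to a uniform signed grid, as quantization-aware training simulates."""
    levels = 2 ** (bits - 1) - 1  # 127 positive levels for 8 bits
    scale = alpha / levels
    return round(pact_clip(x, alpha) / scale) * scale
```

Clipping before quantization keeps rare, very large activations from stretching the quantization grid, which is why a well-chosen α can reduce quantization error.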

Text Recognition

The text recognition stage uses a CRNN (Convolutional Recurrent Neural Network) model with a Connectionist Temporal Classification (CTC) loss. Similar to detection, several strategies are applied:

- Light Backbone: Uses MobileNetV3_small_x0.5 (or x1.0 for slightly higher accuracy at the cost of size) as the feature extractor.

- Data Augmentation: Combines BDA and TIA (Text Image Augmentation), which applies geometric transformations by moving fiducial points, to synthesize diverse training data.

- Cosine Learning Rate Decay & Learning Rate Warm-up: Similar to detection, these are used for better convergence and stability.

- Feature Map Resolution: Modifies the stride of the downsampling layers in the MobileNetV3 backbone, except the first, from (2,2) to (2,1) to preserve more horizontal information, and further changes the stride of the second downsampling layer from (2,1) to (1,1) to preserve more vertical information. The higher-resolution feature map is crucial for accurate text recognition, especially for languages like Chinese.

- Regularization Parameters (L2_decay): Finds that L2 regularization on weights with a specific coefficient (e.g., $1e-5$) significantly improves accuracy by preventing overfitting.

- Light Head: Reduces the dimension of the sequence features before the final classification layer from 256 to 48. This drastically cuts down the model size, particularly important for recognizing large character sets like Chinese (6000+ characters).

- Pre-trained Model: Utilizes models pre-trained on a massive dataset (17.9M images) as a starting point for fine-tuning, leading to significant accuracy improvements, especially when training data is limited.

- PACT Quantization: Applies PACT quantization to reduce model size and inference time. LSTM layers are skipped during quantization due to complexity.
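The cosine learning rate decay with warm-up used for both detection and recognition can be written as a single schedule function. This is a minimal sketch: the linear shape of the warm-up, the decay to exactly zero, and the name `learning_rate` are assumptions chosen for illustration, not settings taken from the paper.

```python
import math


def learning_rate(
    step: int, total_steps: int, base_lr: float, warmup_steps: int = 0
) -> float:
    """Linear warm-up to base_lr, followed by cosine decay towards zero."""
    if warmup_steps > 0 and step < warmup_steps:
        # Warm-up: ramp linearly so the last warm-up step reaches base_lr.
        return base_lr * (step + 1) / warmup_steps
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    # Cosine decay: slow at the start and end, fastest in the middle.
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))
```

Compared with step decay, the schedule keeps a relatively large learning rate for most of training and only shrinks it smoothly towards the end, which is the behaviour credited with better final convergence.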

Dataset and Implementation

The system is trained on a large-scale dataset for Chinese and English, comprising 97K images for detection, 600K for direction classification, and 17.9M for recognition. These datasets include a mix of real and synthetic images, with synthesis tailored to specific challenges like long text, multi-direction text, reversed text, varying backgrounds, and transformations. The data synthesis tool is based on text_render. Ablation studies were conducted on smaller subsets for faster iteration. The Adam optimizer is used for training. Evaluation metrics include HMean for detection, Accuracy for classification/recognition, and F-score for the end-to-end system. Inference times are reported for CPU, T4 GPU, and Snapdragon 855.
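As a reminder of how the detection metric is computed, HMean is the harmonic mean of precision and recall. The sketch below assumes the counts of matched boxes have already been determined elsewhere (for instance by an overlap threshold, which is not specified here); the function name `hmean` and its arguments are hypothetical.

```python
def hmean(true_positives: int, num_predicted: int, num_ground_truth: int) -> float:
    """Harmonic mean of precision and recall for detected text boxes."""
    precision = true_positives / num_predicted if num_predicted else 0.0
    recall = true_positives / num_ground_truth if num_ground_truth else 0.0
    if precision + recall == 0.0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)
```

With 8 correct boxes out of 10 predictions and 16 ground-truth boxes, precision is 0.8, recall is 0.5, and the HMean is roughly 0.615, lower than the arithmetic mean because the harmonic mean penalizes the weaker of the two.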

System Performance

The paper demonstrates that the combined strategies result in an ultra-lightweight system with a total model size of only 3.5M for Chinese and English and 2.8M for alphanumeric symbols. Ablation studies confirm the effectiveness of each strategy in reducing size, improving efficiency (inference time), or boosting accuracy. The FPGM pruner and PACT quantization together reduce the overall system size by 55.7% and accelerate inference on a mobile device by 12.42% with no F-score impact. While acknowledging a gap in accuracy compared to a much larger-scale OCR system (155.1M model size), PP-OCR achieves a significantly better trade-off in terms of model size and inference time, making it highly practical for resource-constrained applications.

PP-OCR also shows promising results for multilingual recognition (French, Korean, Japanese, German) by training recognition models using synthesized data for those languages, leveraging the multilingual capability of the pre-trained detection model.

All models, datasets, and code are open-sourced on the PaddlePaddle/PaddleOCR GitHub repository, facilitating practical implementation and application.