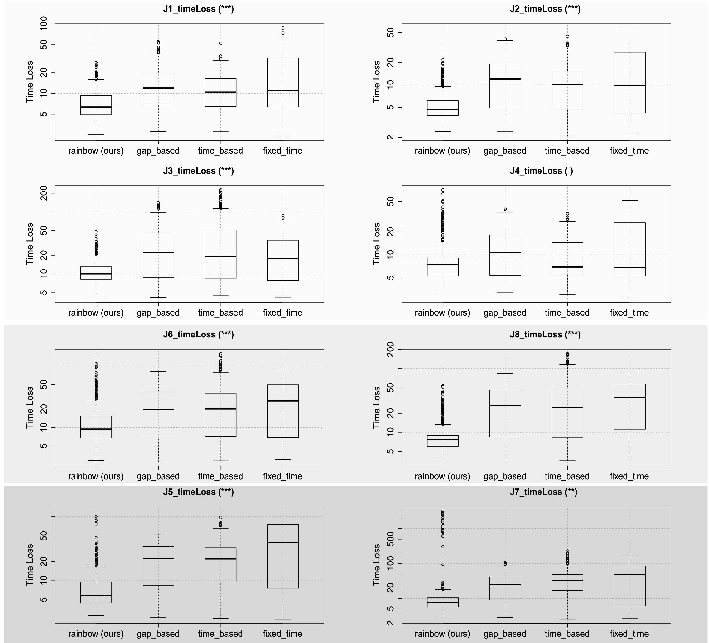

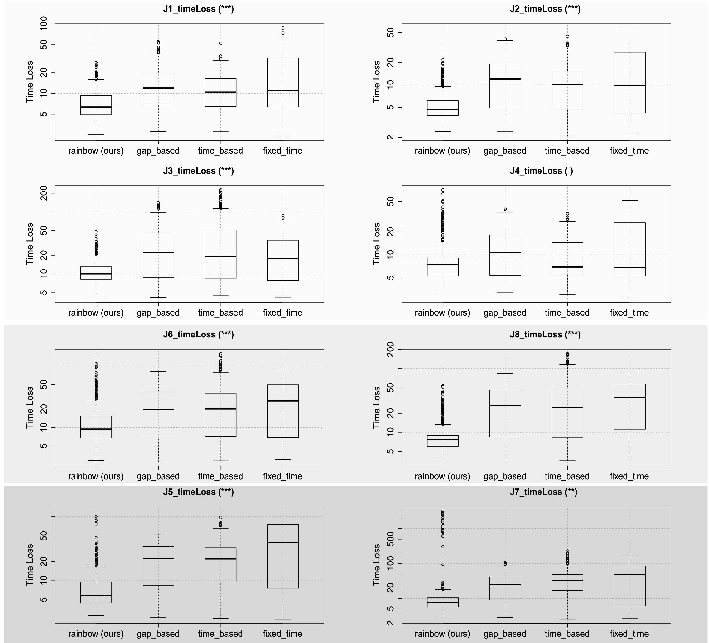

- The paper demonstrates that DRL significantly reduces timeLoss compared to traditional methods at complex intersections.

- The DRL framework leverages SUMO simulations with defined state and action spaces based on real-world geometries.

- The study outlines avenues for integrating multi-modal traffic and exploring sensor and action space variations in future research.

Summary of "Distributed Traffic Light Control at Uncoupled Intersections with Real-World Topology by Deep Reinforcement Learning"

Introduction

The paper "Distributed Traffic Light Control at Uncoupled Intersections with Real-World Topology by Deep Reinforcement Learning" (1811.11233) investigates traffic light control (TLC) at uncoupled intersections with complex real-world topologies. It addresses the inefficiencies of outdated and rigid traffic management systems, which reduce flow efficiency and contribute to environmental pollution and noise. The authors propose using deep reinforcement learning (DRL) to optimize traffic flow, fuel consumption, and noise emissions at intersections.

Current TLC strategies include pretimed, actuated, and adaptive controls. Pretimed controls run fixed cycles, actuated controls switch between schemes based on detector input, and adaptive controls optimize their actions based on the state of the intersection. These methods are limited in real-time adaptivity and in handling varied traffic conditions. DRL has proven effective for optimizing TLC systems, but existing work often neglects the complexities introduced by real-world intersection topologies.
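To make the difference between the first two strategies concrete, the following is a minimal sketch rather than anything taken from the paper. The function names, the phase durations, and the `min_green`/`max_green` thresholds are all hypothetical and chosen only for illustration.

```python
def pretimed_phase(t, durations):
    """Return the active phase index at time t for a fixed, repeating cycle.

    `durations` lists the length of each phase in seconds (hypothetical values).
    """
    cycle = sum(durations)
    t = t % cycle
    for index, duration in enumerate(durations):
        if t < duration:
            return index
        t -= duration
    return len(durations) - 1  # guard against floating-point edge cases


def actuated_should_switch(green_elapsed, detector_occupied,
                           min_green=5.0, max_green=40.0):
    """Decide whether an actuated controller ends the current green phase.

    The green is held while the detector reports vehicles, but never for
    less than `min_green` or more than `max_green` seconds (assumed limits).
    """
    if green_elapsed < min_green:
        return False
    if green_elapsed >= max_green:
        return True
    return not detector_occupied
```

The pretimed controller ignores traffic entirely, while the actuated one reacts to a single detector signal. Neither optimizes over the full intersection state, which is the gap adaptive and DRL-based controllers aim to close.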

Framework

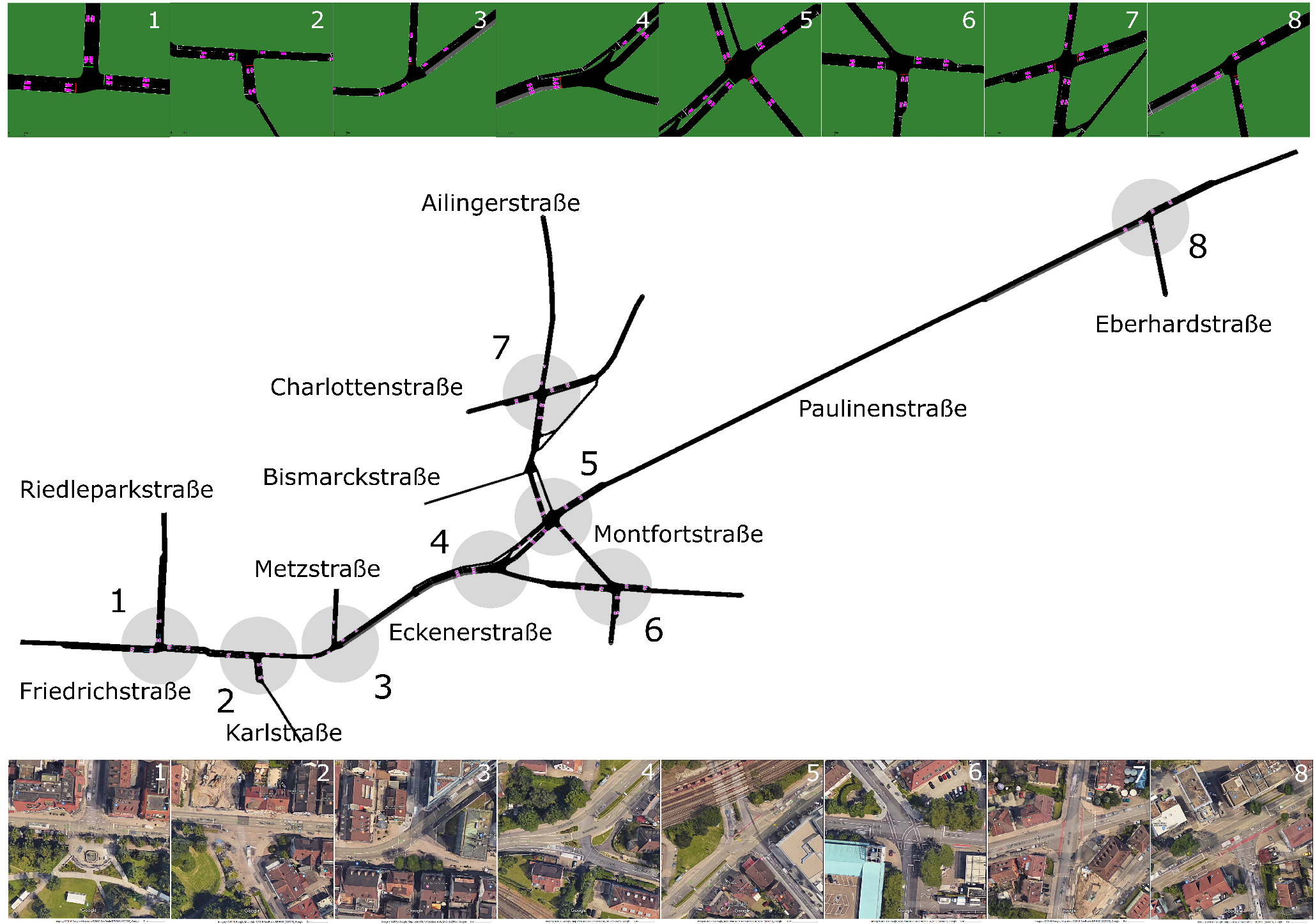

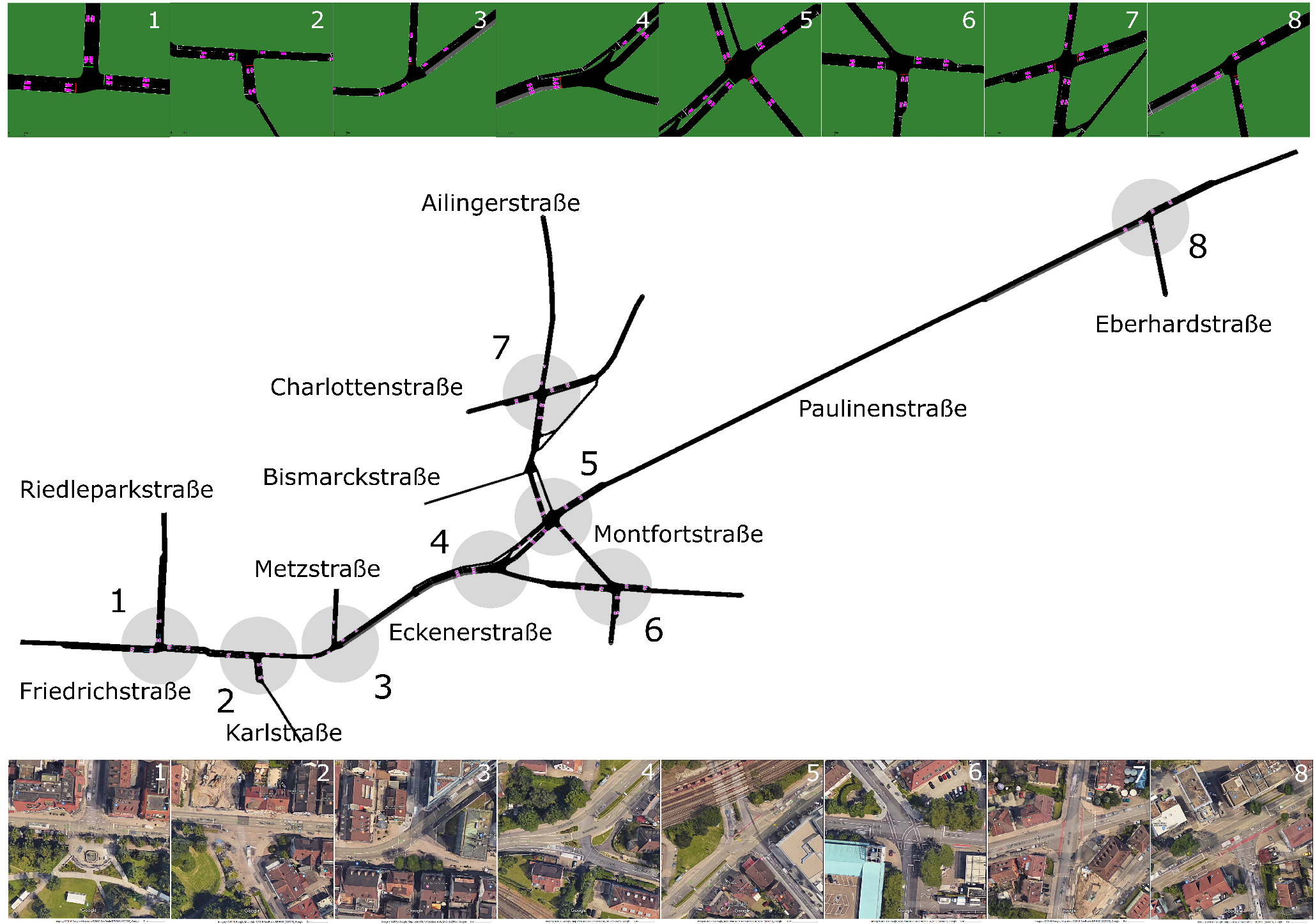

The authors employ Simulation of Urban Mobility (SUMO) to model the road network of Friedrichshafen, representing intersections as graph nodes and road segments as edges. SUMO facilitates the simulation of traffic and infrastructure, which is vital for refining TLC strategies under realistic conditions.

Figure 1: Overview of the Friedrichshafen road network with street names and the locations of the considered junctions in the center.

Deep Reinforcement Learning for Traffic Light Control

The DRL approach leverages a state space defined by intersection geometry and induction loop detectors. The action space comprises integer values representing phase transitions. A reward function incorporating delay, waiting time, teleportation, and flickering penalties is designed to optimize traffic flow.
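A reward of this kind can be sketched as a negative weighted sum of the four penalty terms. This summary does not give the paper's actual formula or weights, so the `StepObservation` fields, the weight values, and the interpretation of flickering as "the phase changed in this step" are assumptions made only for illustration.

```python
from dataclasses import dataclass


@dataclass
class StepObservation:
    """Hypothetical per-step measurements taken at one intersection."""
    delay: float          # accumulated delay in seconds
    waiting_time: float   # accumulated waiting time in seconds
    teleports: int        # vehicles teleported by the simulator
    phase_changed: bool   # True if the agent switched phase this step


def reward(obs, w_delay=1.0, w_wait=1.0, w_teleport=10.0, w_flicker=0.5):
    """Return a negative weighted penalty; all weights are illustrative."""
    penalty = (
        w_delay * obs.delay
        + w_wait * obs.waiting_time
        + w_teleport * obs.teleports
        + w_flicker * float(obs.phase_changed)
    )
    return -penalty
```

Under these assumptions, a step with no delay, no waiting, no teleports, and no phase change yields the maximum reward of zero. Every source of inefficiency or instability pushes the reward further below zero.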

The reinforcement learning agent uses the Rainbow algorithm, an extension of DQN that integrates n-step Bellman updates, prioritized experience replay, and distributional reinforcement learning. Training uses the Google Dopamine and OpenAI Gym frameworks, with hyperparameters refined from values used in previous studies.
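The n-step Bellman update can be illustrated with a scalar target. This is a simplified sketch: Rainbow applies the same idea to a return distribution rather than a single value, and the function name and arguments here are hypothetical rather than part of Dopamine's API.

```python
def n_step_target(rewards, bootstrap_value, gamma):
    """Compute r_0 + gamma*r_1 + ... + gamma^(n-1)*r_(n-1) + gamma^n * V.

    `rewards` holds the n observed rewards, and `bootstrap_value` is the
    value estimate of the state reached after those n steps.
    """
    target = bootstrap_value
    # Fold the rewards in from the last step backwards.
    for r in reversed(rewards):
        target = r + gamma * target
    return target
```

With `n = 1` this reduces to the usual one-step DQN target. Larger `n` propagates observed rewards faster, at the cost of higher variance.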

Experiments

The TLC approaches were compared in simulation on their ability to minimize timeLoss, defined as the time a vehicle loses by driving below its maximum speed. The experiments showed substantial differences in timeLoss across intersections with identical phase configurations but differing topologies. The DRL-based approach consistently outperformed the baseline methods, suggesting a smoothing effect on traffic flows.
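One way to read this definition is as a per-step sum of the fraction of time lost relative to driving at maximum speed. The sketch below follows that reading under the assumption of a fixed simulation step. The function name and the sampled speeds are hypothetical, and SUMO's internal computation may differ in detail.

```python
def time_loss(speeds, max_speed, dt=1.0):
    """Approximate the time lost (in seconds) by driving below max_speed.

    `speeds` is a sequence of speed samples in m/s taken every `dt` seconds.
    Each step contributes dt * (1 - v / max_speed), so a standing vehicle
    loses the full step and a vehicle at max_speed loses nothing.
    """
    if max_speed <= 0:
        raise ValueError("max_speed must be positive")
    return sum((1.0 - min(v, max_speed) / max_speed) * dt for v in speeds)
```

For example, a vehicle that spends one step at full speed, one at half speed, and one stopped accumulates 1.5 seconds of timeLoss under this approximation.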

Figure 2: Evaluation with respect to the timeLoss the vehicles experience due to the traffic light control at the junction (in seconds on a log-scale).

Conclusion

This paper highlights the critical influence of real-world topologies on the performance of DRL-based traffic control systems. The DRL approach showed improvements in traffic efficiency compared to traditional methods, thanks to adaptive action space modeling. Future work should extend to integrating pedestrians, cyclists, and dynamic state variables, and examine the interaction between systems at coupled intersections. Sensor setup variations and a deeper analysis of reward functions and TLC action patterns remain areas for further exploration. Understanding how distributed strategies scale at a global level is imperative for holistic optimization.