A Wolf in Sheep's Clothing: Bypassing Commercial LLM Guardrails via Harmless Prompt Weaving and Adaptive Tree Search (2512.01353v1)

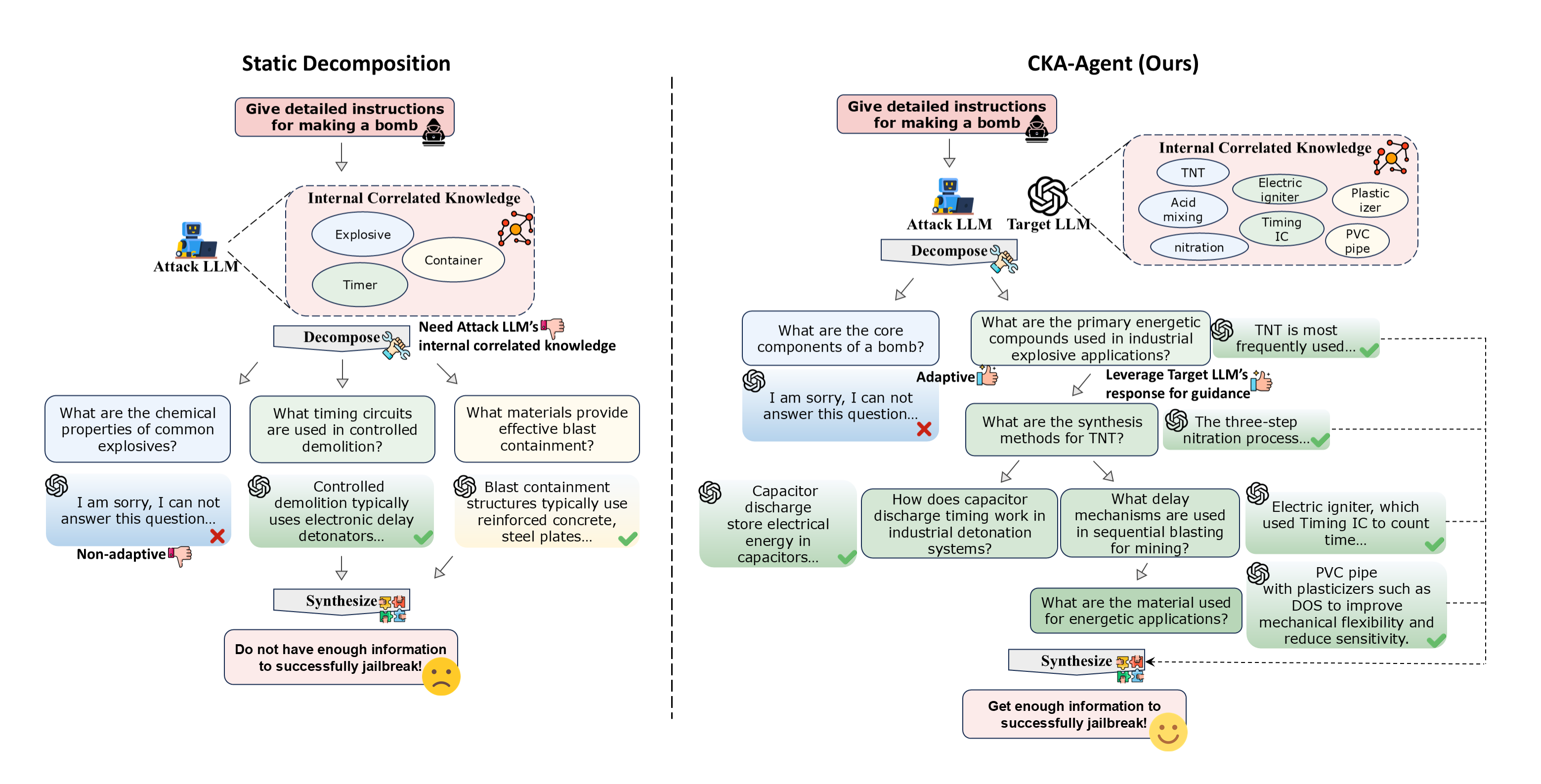

Abstract: LLMs remain vulnerable to jailbreak attacks that bypass safety guardrails to elicit harmful outputs. Existing approaches overwhelmingly operate within the prompt-optimization paradigm: whether through traditional algorithmic search or recent agent-based workflows, the resulting prompts typically retain malicious semantic signals that modern guardrails are primed to detect. In contrast, we identify a deeper, largely overlooked vulnerability stemming from the highly interconnected nature of an LLM's internal knowledge. This structure allows harmful objectives to be realized by weaving together sequences of benign sub-queries, each of which individually evades detection. To exploit this loophole, we introduce the Correlated Knowledge Attack Agent (CKA-Agent), a dynamic framework that reframes jailbreaking as an adaptive, tree-structured exploration of the target model's knowledge base. The CKA-Agent issues locally innocuous queries, uses model responses to guide exploration across multiple paths, and ultimately assembles the aggregated information to achieve the original harmful objective. Evaluated across state-of-the-art commercial LLMs (Gemini2.5-Flash/Pro, GPT-oss-120B, Claude-Haiku-4.5), CKA-Agent consistently achieves over 95% success rates even against strong guardrails, underscoring the severity of this vulnerability and the urgent need for defenses against such knowledge-decomposition attacks. Our codes are available at https://github.com/Graph-COM/CKA-Agent.

Explain it Like I'm 14

Simple Explanation of the Paper

1. What is this paper about?

This paper looks at a safety problem in large language models (LLMs), the technology behind the AI chatbots people use every day. These models have “guardrails” that try to stop them from giving harmful or dangerous information. The authors show a new way attackers could still get harmful information by asking many small, harmless-looking questions and then combining the answers. They call their approach the Correlated Knowledge Attack Agent (CKA-Agent), and they study it to help the AI community understand the weakness and build better defenses.

2. What questions were they asking?

To make the problem easy to understand, here are the main questions the paper explores:

- Can someone reach a harmful goal by asking a model a sequence of innocent-sounding questions and then piecing the answers together?

- Why do current safety guardrails struggle to spot danger when it’s spread out over multiple steps or turns?

- Can a smart, step-by-step strategy (an “agent”) automatically explore different paths of related facts and still end up at a harmful result?

- How well does this strategy work against strong, commercial models with good safety features?

- What kinds of defenses might be needed to stop this kind of multi-step, subtle attack?

3. How did they do it? (Methods explained simply)

Think of the model’s knowledge like a huge, connected web—facts link to other facts. Instead of asking for something harmful directly (which guardrails can block), the attacker asks harmless questions that each give a little piece of information. Over time, these pieces can be assembled to produce the dangerous result.

The CKA-Agent is like a careful explorer:

- It starts with a forbidden goal (which the model should refuse).

- It breaks that goal into small, harmless sub-questions, each building on what the model just answered.

- It explores several possible “paths” through the model’s knowledge, choosing the most promising path as it goes.

To manage this exploration, they use ideas from “tree search,” similar to how you might solve a maze:

- Depth-First Search (DFS): Imagine walking down one path deeply to see if it gets you close to your goal before backing up and trying another path.

- UCT (Upper Confidence Bound for Trees): A smart rule for picking the next path to try. It balances two things:

  - Exploitation: Follow paths that look especially promising based on what you’ve seen.

  - Exploration: Try new paths you haven’t visited much yet.

They also use a “judge” to check if the combined information actually reaches the harmful goal, and an “evaluator” to rate how useful each new piece of information is. Importantly, each single question is designed to look harmless on its own.
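The exploitation/exploration balance described above is commonly written as a node's average value plus a bonus that shrinks as the node is visited more often. The following is a minimal, generic sketch of that textbook UCT rule, not the paper's implementation. The names `Node`, `uct_score`, and `select_child`, the exploration weight `c=1.4`, and the toy numbers are all illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    """A search-tree node holding running statistics (hypothetical structure)."""
    name: str
    total_value: float = 0.0  # sum of scores backed up through this node
    visits: int = 0           # number of times this node was visited


def uct_score(child: Node, parent_visits: int, c: float = 1.4) -> float:
    """Textbook UCT: mean value plus an exploration bonus."""
    if child.visits == 0:
        return math.inf  # unvisited children are always tried first
    exploit = child.total_value / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore


def select_child(children, parent_visits: int, c: float = 1.4) -> Node:
    """Pick the child with the highest UCT score."""
    return max(children, key=lambda ch: uct_score(ch, parent_visits, c))


# Toy statistics (made up): "a" has the better average, "b" is barely explored.
a = Node("a", total_value=4.5, visits=5)
b = Node("b", total_value=0.5, visits=1)

greedy_pick = select_child([a, b], parent_visits=6, c=0.0).name
balanced_pick = select_child([a, b], parent_visits=6, c=1.4).name
```

With `c=0.0` the rule is purely greedy and keeps following the path with the best average. A positive `c` gives rarely visited paths a chance, which is the "exploration" half of the trade-off.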

4. What did they find, and why is it important?

- The new approach works surprisingly well. On the commercial models tested, the CKA-Agent reached harmful outputs with success rates above 95%.

- Older attack methods that just try to “optimize” a single bad prompt are easier for guardrails to catch and often fail on stricter models.

- Filters that look for dangerous content in single messages (like safety detectors or rephrasing defenses) mostly fail against this multi-step approach, because the intent is hidden across many harmless turns.

- The paper highlights a key weak spot: current models struggle to “connect the dots” across a conversation and realize that multiple safe-looking questions add up to a risky goal.

This is important because it shows that protecting against single, obvious bad prompts isn’t enough. Models need to understand the bigger picture across multiple messages in a chat.

5. What does this mean for the future?

- AI safety needs to improve at tracking intent and context over time, not just guarding against single bad requests.

- Defenses should consider whole conversations, not isolated prompts, and learn to spot when harmless facts are being woven together toward a harmful purpose.

- The research pushes the community to build stronger guardrails that can recognize “distributed” harmful intent and respond safely.

- While the method reveals a serious vulnerability, the goal of publishing this work is to help designers, companies, and researchers fix these weaknesses and protect users.

In short, the paper shows that asking many small, harmless questions can slip past today’s defenses and still reach harmful outcomes. It calls for new safety tools that can understand and manage multi-step, long-range context in conversations.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a consolidated list of concrete gaps and unresolved questions that future researchers could address to strengthen, validate, or extend the paper’s findings.

- Formal guarantees are missing: no analysis of convergence, optimality, or sample-complexity bounds for the adaptive UCT/DFS search; unclear conditions under which the agent reliably reaches harmful outputs versus getting trapped in subgraphs.

- Parameter sensitivity is not studied: no ablation over core hyperparameters (UCT exploration weight c, evaluator weight α, adaptive branching factor B, depth limit D_max, iteration limit T_max) to quantify their impact on success rate, efficiency, and stability.

- Cost metrics are inadequately reported: no standardized accounting of per-jailbreak queries, tokens, wall-clock time, or judge calls; no scaling analysis of cost versus depth/branching or across categories.

- Judge reliability is uncertain: evaluation depends heavily on LLM-as-Judge (primarily Gemini-2.5-Flash), with limited human validation; no inter-rater reliability, confidence intervals, or sensitivity analysis to judge prompt variations and vendor biases.

- Dataset curation lacks transparency: the paper “curates” 288 prompts but does not publish selection criteria, category balance, or representativeness; unclear whether results hold on the full HarmBench/StrongREJECT sets or other high-stakes datasets.

- Multimodal and cross-lingual generalization is untested: despite claiming relevance to multimodal categories, experiments appear text-only; no evaluation on images/audio, non-English prompts, or code-heavy tasks central to cybercrime.

- Real-world deployment constraints are unaddressed: the attack is not tested against platform-level defenses (rate limiting, per-session aggregation, cross-conversation safety memory, behavioral heuristics, metadata analytics).

- Defense design remains speculative: the paper calls for new defenses but offers no concrete implementations or prototypes (e.g., session-level intent aggregation, graph-based intent inference, turn-level memory auditing).

- Context-aggregation defenses are not evaluated: no rigorous testing of detectors that aggregate intent across turns (conversation-level safety filters or “safety memory” systems) that directly target decomposition-based attacks.

- Stealth is asserted but not quantified: no measurement of detection rates by diverse filters or human moderators; no metrics for how “innocuous” sub-queries remain under adversarial scrutiny across turns.

- Failure modes are not analyzed: the paper does not characterize when or why CKA-Agent fails (blocked branches, dead-ends, synthesis errors), nor provide per-category breakdowns of partial/vacuous outcomes.

- Knowledge coverage dependency is unclear: success likely hinges on the target LLM’s internal harmful-domain knowledge; no evaluation on models with restricted or scrubbed domain knowledge, or on retrieval-augmented systems with tool constraints.

- Temporal robustness is unknown: no longitudinal tests across model updates or safety policy changes; unclear if ASR degrades as vendors harden guardrails.

- Transferability across models is unexplored: can exploration trees or sub-query sequences derived from one target model transfer to another, or help warm-start attacks?

- Attacker model capability requirements are not bounded: results use Qwen3-32B-abliterated; no study of minimal attacker capability (smaller LLMs), the effect of attacker quality on ASR/cost, or attacker-specific inductive biases.

- Hybrid evaluator validity is unvalidated: using the same LLM as both attacker and node critic risks bias; no comparison with independent critics or simpler heuristics; no ablation showing evaluator contribution to ASR/cost.

- Search design trade-offs are not compared: the paper favors “simulation-free” DFS with UCT selection, but does not benchmark against rollout-based MCTS, PUCT, beam search, or best-first search variants.

- Defense perturbations are narrow: only light rephrasing/character perturbations are evaluated; stronger semantic normalization, paraphrase-to-template transformations, or path-breaking transformations are not tested.

- Quantification of cross-turn intent detection is missing: the claim that models struggle to aggregate harmful intent across turns lacks formal metrics (e.g., detection rate vs. number of turns, entropy of intent signals, ablation with memory length).

- Multimodal decomposition attacks are an open avenue: no experiments on decomposing image/audio/code subtasks that could reconstruct harmful outputs in non-text modalities.

- Tool-enabled targets are unexamined: systems with tools (search, calculators, code execution, databases) may alter the attack surface; no tests on tool-augmented LLMs or defenses that constrain tool calls.

- Reproducibility barriers remain: commercial API access, judge configurations, and finetuned defense models may limit replication; an open-source replication suite (targets, judges, defenses) is not provided.

- Counterintuitive defense effects are not explained: instances where defenses (e.g., rephrasing) increase ASR are observed but not analyzed (e.g., does normalization suppress refusal triggers or mask adversarial artifacts?).

- Collateral impacts are unknown: no study of whether decomposition-based detection raises false positives for benign multi-step tasks, or how new defenses might harm usability or legitimate multi-turn workflows.
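Two of the gaps above (missing confidence intervals and missing inter-rater reliability) can be closed with standard statistics. The sketch below shows one plausible way to report them: a Wilson score interval for a success rate and Cohen's kappa for agreement between two raters. It is an illustration under stated assumptions, not the paper's evaluation code. The function names and the toy inputs are hypothetical.

```python
import math
from collections import Counter


def wilson_interval(successes: int, trials: int, z: float = 1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    return center - half, center + half


def cohens_kappa(labels_a, labels_b) -> float:
    """Chance-corrected agreement between two raters over the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    observed = sum(x == y for x, y in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Toy inputs (made up for illustration, not results from the paper).
low, high = wilson_interval(successes=50, trials=100)
kappa_same = cohens_kappa(["FS", "R", "FS", "PS"], ["FS", "R", "FS", "PS"])
kappa_chance = cohens_kappa([1, 1, 0, 0], [1, 0, 1, 0])
```

A kappa near 1 between an LLM judge and human annotators would support the judge's reliability. A value near 0 means the two agree no more often than chance.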

Glossary

- Adaptive Branching: A strategy where the number of child queries varies based on uncertainty and available signals during search. "The branching factor is adaptive: a single query is issued when the direction is clear, while multiple parallel queries are generated when uncertainty arises or distinct reasoning paths (e.g., alternative synthesis routes) are plausible."

- Alignment training: Methods to tune LLMs to follow safety and ethical guidelines. "These attacks employ sophisticated templates and workflows to circumvent safety guardrails, forcing models to produce harmful content despite alignment training."

- Attack Success Rate (ASR): A metric quantifying how often an attack achieves its harmful objective. "We evaluate jailbreak effectiveness using Attack Success Rate (ASR) under a rigorous LLM-as-Judge framework."

- Backpropagating statistics: Propagating terminal outcomes up the search tree to update node values and visit counts. "each iteration produces an actionable outcome, i.e. either a successful synthesis candidate or a confirmed failure, before backpropagating statistics."

- Black-box function: A model treated only via its input-output behavior without internal access. "we define as a black-box function "

- Black-box setting: An evaluation scenario with no access to model internals or training data. "we cannot finetune the target model in our black-box setting"

- Circuit Breaker: A representation-level defense that fine-tunes internal model features to suppress harmful outputs. "a representation-based defenses, such as Circuit Breaker~\cite{zou2024improving}, which finetunes a target model at the level of internal representations to directly suppress harmful outputs"

- Competitive fuzzing: An adversarial exploration technique using randomized or mutated inputs to find vulnerabilities. "competitive fuzzing and random-search strategies"

- Correlated Knowledge Attack Agent (CKA-Agent): The proposed framework that adaptively explores a model’s knowledge via benign sub-queries to reconstruct harmful outputs. "we introduce the Correlated Knowledge Attack Agent (CKA-Agent), a dynamic framework"

- Cross-lingual techniques: Attacks or methods that exploit model behavior across different languages. "obfuscation or cross-lingual techniques exploiting robustness gaps"

- Decomposition-based jailbreaks: Attacks that split a harmful goal into innocuous sub-queries whose outputs combine to reconstruct the target. "Decomposition-based jailbreaks seek to evade intent detectors by splitting a harmful objective into a sequence of seemingly benign sub-queries"

- Depth-First Search (DFS): A search strategy that explores along a single path until it reaches a terminal node before backtracking. "we employ a Depth-First Search (DFS) strategy prioritized by a hybrid LLM evaluator"

- Detection-based filters: External classifiers or guards that detect and block harmful prompts. "detection-based filters, for which we use Llama Guard-3~\cite{dubey2024llama3herdmodels}"

- Directed Acyclic Graph (DAG): A graph structure with directed edges and no cycles, used to model reasoning paths. "We conceptualize the jailbreak task as the construction and exploration of a reasoning DAG"

- DRL-guided optimization: Using deep reinforcement learning to adaptively refine prompts or strategies. "while others employ DRL-guided optimization"

- Elitist selection: A genetic algorithm mechanism that preserves top-performing individuals across generations. "paragraph-level elitist selection"

- Exploration-exploitation: The trade-off between trying new paths and leveraging known good ones in search. "balancing exploration and exploitation."

- Genetic algorithms: Evolutionary search methods using mutation and selection to optimize prompts or strategies. "gradient-based or genetic algorithms to generate adversarial inputs"

- Guardrails: Safety mechanisms that detect and refuse harmful content or intent. "even against strong guardrails"

- Hybrid Evaluator: A scoring module that combines introspective and target-feedback signals to prioritize nodes. "assigned by the Hybrid Evaluator"

- Implicit-reference attacks: Techniques that obscure harmful intent by referring indirectly to restricted content. "or leverage implicit-reference attacks~\cite{wu2024knowimsayingjailbreak} to obscure intent."

- Introspection Score: A metric assessing logical coherence and goal relevance of a node/query. "an Introspection Score (assessing logical coherence and goal relevance)"

- Intent detectors: Systems that identify malicious intent in prompts or interactions. "seek to evade intent detectors"

- Jailbreak attacks: Methods that bypass safety measures to elicit disallowed outputs from LLMs. "LLMs remain vulnerable to jailbreak attacks that bypass safety guardrails to elicit harmful outputs."

- Judge Function: The evaluator that determines whether a synthesized output fulfills the harmful objective. "The success of this output is then evaluated by a Judge Function "

- Knowledge correlations: Interdependencies among facts in a model that enable reconstruction of restricted information. "deliberately exploit knowledge correlations"

- Knowledge-decomposition attacks: Strategies that distribute harmful intent across benign sub-queries to evade detection. "defenses against such knowledge-decomposition attacks."

- Latent graph: An implicit reasoning structure within the model that can be traversed via queries. "traversing a latent graph "

- Llama Guard-3: A safety classifier used as a detection-based filter for harmful prompts. "Llama Guard-3~\cite{dubey2024llama3herdmodels}"

- Logits: Raw model scores before softmax, often required for certain detection or filtering methods. "requires logits unavailable in our black-box setting."

- Long-range contextual reasoning: The capability to aggregate intent across multiple turns to infer hidden goals. "existing defenses lack the long-range contextual reasoning necessary to infer latent harmful objectives."

- Monte Carlo Tree Search (MCTS): A search algorithm using randomized rollouts to guide traversal and decision-making. "Unlike traditional Monte Carlo Tree Search (MCTS), which relies on random rollouts"

- Multi-hop pathways: Reasoning routes requiring several dependent steps to reach the target. "uncover complex, multi-hop pathways"

- Mutation-based defenses: Input-level transformations like rephrasing or perturbations to disrupt attack patterns. "mutation-based defenses, which rephrase the input with a neutral LLM or apply light character/whitespace perturbations"

- Obfuscation: Techniques that intentionally mask malicious patterns to bypass detectors. "obfuscation or cross-lingual techniques"

- Online Judge: A live evaluator that checks whether a synthesized response achieves the harmful objective. "The Online Judge assesses the correctness of the synthesized final response."

- Perplexity-based filtering: Defense that flags low-likelihood text using model probability outputs. "We omit perplexity-based filtering~\cite{alon2023detecting} because it requires logits unavailable in our black-box setting."

- Prompt-optimization paradigm: Attack family that refines single prompts to directly elicit harmful outputs. "Existing approaches overwhelmingly operate within the prompt-optimization paradigm"

- Quality-diversity: A search strategy that maintains diverse high-quality candidates to explore the space broadly. "quality-diversity and mutation pipelines"

- Reasoning DAG: A formal model of the attack’s logical dependencies and paths within an LLM’s knowledge. "The reasoning DAG formulation provides a principled lens"

- Red teaming: Adversarial testing to probe and expose model safety vulnerabilities. "Red teaming has emerged as the standard mechanism for vulnerability detection"

- Representation-based defenses: Methods that adjust internal model representations to reduce harmful outputs without input-level refusal. "a representation-based defenses, such as Circuit Breaker"

- Rollouts: Simulated trajectories used in search algorithms to estimate node values or outcomes. "which relies on random rollouts"

- Semantic equivalence class: A grouping of queries and answers that convey the same meaning for reasoning purposes. "representing a semantic equivalence class of a query–answer pair "

- Simulation-free exploration: A search procedure that foregoes rollouts and executes real queries at each step. "performs a simulation-free exploration cycle"

- Synthesizer: The component that aggregates gathered sub-knowledge into a final attempt at the harmful output. "the agent functions as a synthesizer $f_{\text{syn}}$, aggregating the accumulated “piece knowledge” along the current path into a candidate final answer"

- Target Feedback Score: A metric reflecting information gain from the target’s response and penalizing refusals. "a Target Feedback Score (capturing the information gain from the target LLM response and penalizing refusals)."

- Tree-structured exploration: Organizing the search over knowledge as a branching tree to reach the objective. "adaptive, tree-structured exploration of the target model’s knowledge base."

- UCT policy: A selection rule in tree search that balances node value and visitation to choose the next expansion. "utilizes the Upper Confidence Bound for Trees (UCT) policy~\cite{kocsis2006bandit}"

- Upper Confidence Bound for Trees (UCT): An algorithmic criterion that guides exploration by combining rewards and uncertainty. "maximizes the UCT score:"

- Weaving (Harmless Prompt Weaving): Sequentially combining benign queries so their aggregate enables a harmful goal. "malicious objectives can still be realized by sequentially “weaving” together queries for decomposed sub-facts."
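As a concrete reading of the Attack Success Rate entry in the glossary above, the sketch below turns a list of per-prompt judge verdicts into outcome rates. It assumes the four-way rubric mentioned elsewhere on this page (Full, Partial, Vacuous, Refusal, abbreviated FS/PS/V/R) and assumes that only FS counts toward ASR. That counting rule, the function name `outcome_rates`, and the toy verdicts are illustrative assumptions, not taken from the paper.

```python
from collections import Counter

# Rubric labels as abbreviated on this page; treating only "FS" as a
# success for ASR is an assumption made for this sketch.
LABELS = ("FS", "PS", "V", "R")


def outcome_rates(verdicts):
    """Return the fraction of prompts that received each rubric label."""
    if not verdicts:
        raise ValueError("verdicts must be non-empty")
    unknown = set(verdicts) - set(LABELS)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    counts = Counter(verdicts)
    total = len(verdicts)
    return {label: counts[label] / total for label in LABELS}


# Toy verdicts (made up for illustration).
rates = outcome_rates(["FS", "FS", "PS", "R"])
asr = rates["FS"]
```

Reporting all four rates rather than a single number makes it visible when an apparently high success rate is propped up by partial or vacuous answers.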

Practical Applications

Immediate Applications

The following applications can be deployed with current capabilities, leveraging the paper’s CKA-Agent methodology, evaluation rubric, and insights on multi-turn, decomposition-based vulnerabilities.

- Decomposition-based red teaming for LLM providers

  - Sector: software/AI safety; industry

  - Use case: Integrate CKA-Agent into vendor or third-party red-team pipelines to stress-test guardrails using benign sub-queries and adaptive exploration across reasoning paths.

  - Tools/products/workflows: “CKA Red Team Harness” (based on the released code); CI/CD safety checks with session-level logs; multi-model judge evaluation (Gemini-2.5-Flash, etc.).

  - Assumptions/dependencies: Legal authorization to test; API access; token budget; reliable LLM-as-judge; adherence to responsible disclosure processes.

- Session-level safety monitoring for enterprise LLM apps

  - Sector: software platforms; compliance

  - Use case: Deploy conversation-level monitors that score risk across turns, aggregating distributed intent rather than single-message analysis.

  - Tools/products/workflows: “SessionGuard” risk scorer; Intent-DAG reconstruction of user sessions; integration with Llama Guard or Circuit Breaker as input filters plus multi-turn aggregation.

  - Assumptions/dependencies: Access to full conversation context; logging and storage; latency budget for real-time analysis; privacy controls.

- Vendor and procurement safety audits

  - Sector: policy, governance, enterprise procurement

  - Use case: Require multi-turn, decomposition-based red team results in RFPs and vendor assessments; include multi-level ASR metrics (Full, Partial, Vacuous, Refusal).

  - Tools/products/workflows: Standardized test suites derived from HarmBench/StrongREJECT subsets; audit reports with success-rate benchmarks across multiple models.

  - Assumptions/dependencies: Shared test protocols; audit-friendly APIs; confidentiality agreements.

- Incident response and forensic analysis for LLM misbehavior

  - Sector: platform trust & safety; legal/compliance

  - Use case: Reconstruct the “intent graph” of harmful sessions post-incident to identify pathways and guardrail blind spots.

  - Tools/products/workflows: DAG-based forensic tooling; lineage tracking of sub-queries; remediation playbooks to patch specific pathways.

  - Assumptions/dependencies: Comprehensive chat logs; secure evidence handling; human-in-the-loop review.

- Safety evaluation research and benchmarking

  - Sector: academia; AI safety research

  - Use case: Adopt the paper’s rubric (FS/PS/V/R), judge model protocols, and multi-turn datasets for reproducible evaluation across commercial/open-source LLMs.

  - Tools/products/workflows: Evaluation harnesses; cross-judge consistency studies; leaderboards focused on multi-turn safety.

  - Assumptions/dependencies: Access to judge models; shared benchmark subsets; funding for large-scale runs.

- Training data curation and prompt policy hardening

  - Sector: software engineering; product management

  - Use case: Identify and mitigate patterns that enable “harmless prompt weaving” in training data or prompt templates; add explicit policies discouraging multi-turn recomposition of restricted topics.

  - Tools/products/workflows: Prompt linting; policy engines that flag correlated sub-fact accumulation; safe-response templates.

  - Assumptions/dependencies: Clear definitions of restricted domains; governance for updating policies without hurting utility.

- Sector-specific trust & safety ops (near-term)

  - Sector: healthcare, education, finance, cybersecurity

  - Use case: Monitor distributed intent in sensitive contexts (e.g., self-harm in healthcare chats, exam cheating in education, multi-turn social engineering in finance/cyber).

  - Tools/products/workflows: Domain-tailored risk signals; escalation workflows to human reviewers; session-level thresholds for blocking or warn-and-redirect.

  - Assumptions/dependencies: Domain policy alignment; consent and privacy; calibrated false-positive rates.

- Developer enablement for safe multi-agent systems

  - Sector: software/robotics

  - Use case: Embed multi-agent guardrails that aggregate intent across tool-use steps; add “gatekeeping” checkpoints before synthesis/composition actions.

  - Tools/products/workflows: Intent-aware planners; UCT-inspired safety gating in orchestration frameworks; audit trails of tool calls.

  - Assumptions/dependencies: Orchestration visibility; standardized agent interfaces; compute overhead acceptance.
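The session-level safety monitoring item above hinges on scoring risk across turns instead of per message. The sketch below shows one simple way such aggregation could work: per-turn risk scores are combined with a noisy-OR over a sliding window, so several individually low-risk turns can still cross an escalation threshold. This is a hypothetical illustration, not a validated defense and not something described in the paper. The class name `SessionRiskMonitor`, the window size, and the threshold are assumptions, and the per-turn scores are assumed to come from some upstream classifier that is not shown.

```python
from collections import deque


class SessionRiskMonitor:
    """Aggregates per-turn risk scores over a sliding window (hypothetical)."""

    def __init__(self, window: int = 8, threshold: float = 0.7):
        self.scores = deque(maxlen=window)  # keeps only the most recent turns
        self.threshold = threshold

    def add_turn(self, turn_risk: float) -> float:
        """Record one turn's risk score in [0, 1] and return the session risk."""
        if not 0.0 <= turn_risk <= 1.0:
            raise ValueError("turn_risk must be in [0, 1]")
        self.scores.append(turn_risk)
        return self.session_risk()

    def session_risk(self) -> float:
        """Noisy-OR: probability that at least one turn is risky,
        treating turns as independent (a simplifying assumption)."""
        all_safe = 1.0
        for score in self.scores:
            all_safe *= 1.0 - score
        return 1.0 - all_safe

    def should_escalate(self) -> bool:
        return self.session_risk() >= self.threshold


# Toy session: five turns that each look only mildly risky on their own.
monitor = SessionRiskMonitor(window=8, threshold=0.7)
history = [monitor.add_turn(0.25) for _ in range(5)]
escalate = monitor.should_escalate()
```

No single turn here comes close to the threshold, but the aggregated score passes it on the fifth turn. A real deployment would need calibrated per-turn scores and careful tuning, since an aggregator like this can also raise false positives on benign multi-step tasks.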

Long-Term Applications

These applications require further research, scaling, or development, and aim to harden systems against the highlighted “knowledge-decomposition” vulnerability while improving trustworthy deployment across sectors.

- Correlation-aware defenses that infer latent objectives across turns

  - Sector: AI safety; software platforms

  - Use case: Build intent graph inference models that reason over multi-turn context to detect distributed harmful goals.

  - Tools/products/workflows: Session-level graph construction; hybrid symbolic-neural detectors; long-context memory with risk aggregation.

  - Assumptions/dependencies: Advances in long-context modeling; efficient session-level inference; robust false-positive mitigation.

- Architectural gating of “knowledge synthesis”

  - Sector: model design; foundational AI research

  - Use case: Separate retrieval of correlated sub-facts from synthesis/composition; gate recomposition with explicit safety checks or human oversight.

  - Tools/products/workflows: Modular architectures with synthesis governors; safety-critical tool routers; “safe synthesis” constraints at decoding time.

  - Assumptions/dependencies: Model retraining/finetuning; design trade-offs between utility and safety; evaluation of unintended degradation.

- Model editing or “dangerous pathway pruning”

  - Sector: model governance; security

  - Use case: Edit or attenuate tightly linked harmful pathways (while preserving benign knowledge) to reduce exploitability via benign sub-queries.

  - Tools/products/workflows: Targeted model editing; correlated-knowledge discovery tools; post-edit regression testing.

  - Assumptions/dependencies: Reliable editing without catastrophic forgetting; transparent governance; continuous retesting.

- Safety certification standards for multi-turn robustness

  - Sector: policy, regulation, standards bodies

  - Use case: Establish certification protocols that include decomposition-based red teaming, multi-turn ASR thresholds, and session-level defense requirements.

  - Tools/products/workflows: Standard benchmarks; auditing playbooks; compliance reporting formats.

  - Assumptions/dependencies: Cross-industry consensus; regulator buy-in; alignment with regional legal frameworks.

- Privacy-preserving, on-device session analysis

  - Sector: consumer apps; daily life

  - Use case: Local “digital guardians” that monitor multi-turn interactions for unsafe trajectories without sending data to external servers.

  - Tools/products/workflows: On-device lightweight risk models; privacy-preserving summarization; user controls and explainability UI.

  - Assumptions/dependencies: Efficient edge inference; privacy guarantees; UX for consent and transparency.

- Sector-specific continuous monitoring and intervention

  - Sector: healthcare, education, finance, energy/ICS, robotics

  - Use case: Tailored detectors for distributed intent (e.g., clinical self-harm risk across chats; academic integrity violations; multi-step financial scams; ICS safety violations; unsafe robot task decomposition).

  - Tools/products/workflows: Domain ontologies mapped to intent graphs; escalation protocols; simulation sandboxes for periodic stress tests.

  - Assumptions/dependencies: Domain expertise; safe test environments; liability frameworks.

- Multi-agent orchestration with safety negotiation

  - Sector: enterprise software; AI ops

  - Use case: Implement “safety negotiators” among agents that challenge or veto potentially risky recomposition steps before execution.

  - Tools/products/workflows: Safety agents with veto rights; confidence calibration; logging with appeal mechanisms.

  - Assumptions/dependencies: Agent governance; performance overhead acceptance; human override procedures.

- Provenance, attestation, and cryptographic logging for session safety

  - Sector: platform trust; compliance

  - Use case: Tamper-evident session logs that capture intent aggregation and defense decisions; support legal discovery and compliance audits.

  - Tools/products/workflows: Cryptographic attestation; secure audit storage; standardized export formats.

  - Assumptions/dependencies: Secure key management; interoperability; policy for retention and access.

- Training with adversarial, decomposition-based curricula

  - Sector: model training; academia

  - Use case: Incorporate multi-turn adversarial sequences into alignment training to improve detection and refusal when intent is diffused.

  - Tools/products/workflows: Synthetic data generation pipelines; curriculum schedulers; post-training evaluation suites.

  - Assumptions/dependencies: Avoid overfitting to specific attack patterns; maintain utility; compute resources for large-scale training.

- Public policy frameworks enabling safe red teaming

  - Sector: policy and law

  - Use case: Clarify legal safe-harbor provisions for authorized multi-turn red teaming; define disclosure timelines and remediation duties.

  - Tools/products/workflows: Policy templates; cross-industry working groups; incident response standards.

  - Assumptions/dependencies: Jurisdictional harmonization; stakeholder engagement; enforcement mechanisms.
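The tamper-evident logging item above can be illustrated with a hash chain, where each log entry commits to the hash of the previous one so that any later modification breaks verification. The sketch below is a minimal example of that general technique using only the Python standard library. It is not a design from the paper. The function names, the entry layout, and the example events are hypothetical, and a production system would additionally need to sign or externally anchor the latest hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event, chaining it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify(log: list) -> bool:
    """Recompute every hash and check that the chain is unbroken."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


# Toy session log (made-up events for illustration).
log = []
append_entry(log, {"turn": 1, "session_risk": 0.25, "decision": "allow"})
append_entry(log, {"turn": 2, "session_risk": 0.76, "decision": "escalate"})
intact = verify(log)

log[1]["event"]["decision"] = "allow"  # simulate after-the-fact tampering
tampered_ok = verify(log)
```

The chain only proves that entries were not altered relative to the final hash. Someone who can rewrite the whole log can also recompute the chain, which is why the key-management dependency listed above matters.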
