OpenMMReasoner: Pushing the Frontiers for Multimodal Reasoning with an Open and General Recipe (2511.16334v1)

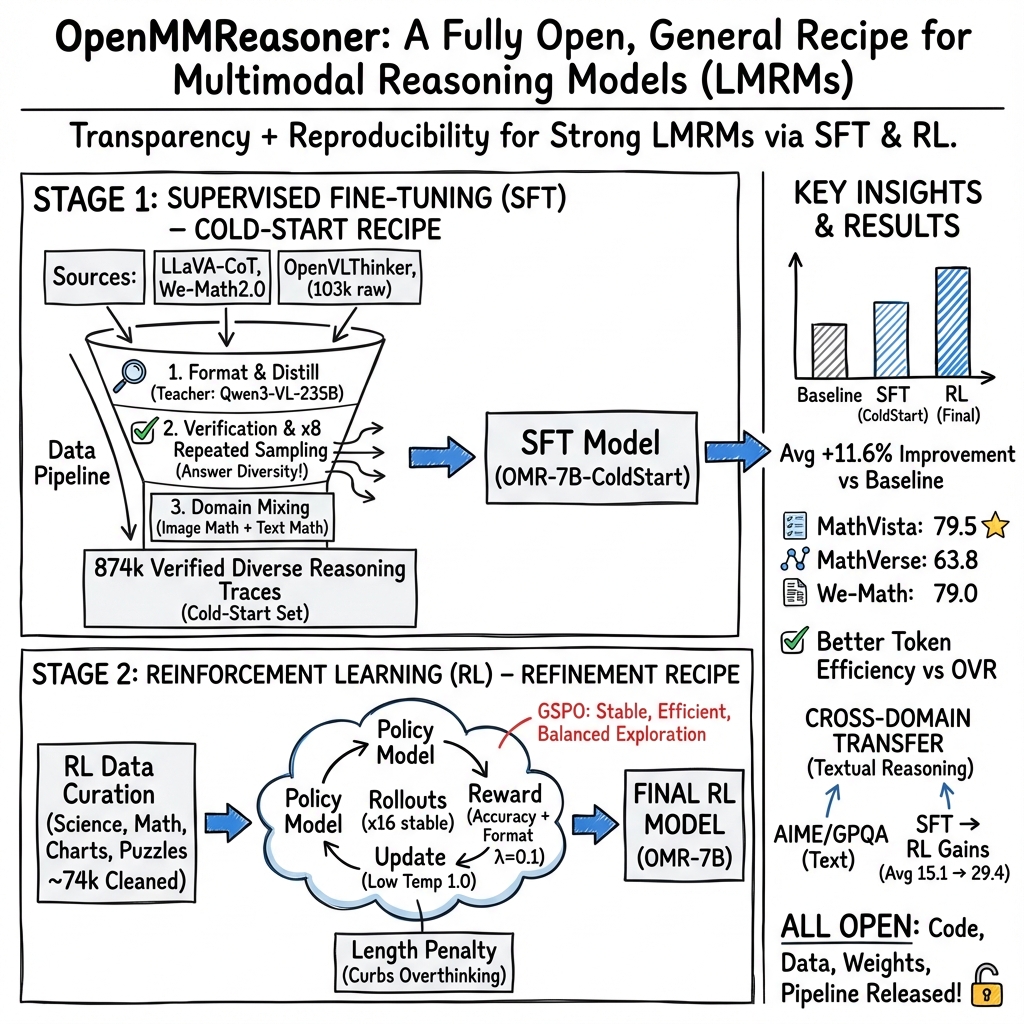

Abstract: Recent advancements in large reasoning models have fueled growing interest in extending such capabilities to multimodal domains. However, despite notable progress in visual reasoning, the lack of transparent and reproducible data curation and training strategies remains a major barrier to scalable research. In this work, we introduce OpenMMReasoner, a fully transparent two-stage recipe for multimodal reasoning spanning supervised fine-tuning (SFT) and reinforcement learning (RL). In the SFT stage, we construct an 874K-sample cold-start dataset with rigorous step-by-step validation, providing a strong foundation for reasoning capabilities. The subsequent RL stage leverages a 74K-sample dataset across diverse domains to further sharpen and stabilize these abilities, resulting in a more robust and efficient learning process. Extensive evaluations demonstrate that our training recipe not only surpasses strong baselines but also highlights the critical role of data quality and training design in shaping multimodal reasoning performance. Notably, our method achieves an 11.6% improvement over the Qwen2.5-VL-7B-Instruct baseline across nine multimodal reasoning benchmarks, establishing a solid empirical foundation for future large-scale multimodal reasoning research. We open-sourced all our codes, pipeline, and data at https://github.com/EvolvingLMMs-Lab/OpenMMReasoner.

Explain it Like I'm 14

Overview

This paper introduces OpenMMReasoner, a way to train smart computer models that can “reason” using both text and images. Think of it like teaching an AI to solve problems where it needs to read, look at a picture, and think step by step. The main goal is to share a clear, open recipe that other researchers can reuse: first teach the model with carefully checked examples, then coach it with practice and feedback to make it even better.

Key Objectives

Here are the simple questions the paper set out to answer:

- How do we build a strong “thinking” AI that understands both words and pictures?

- What kind of training data and steps work best to teach reasoning?

- Which training strategies (especially in reinforcement learning) are stable, efficient, and give the best results?

- Can we make the entire process open and easy for others to reproduce?

Methods and Approach

The team used a two-stage training recipe, similar to how students learn.

- Stage 1: Supervised Fine-Tuning (SFT)

  - Imagine a student studying from a high-quality workbook with step-by-step solutions. The researchers built a big, clean set of 874,000 examples. They made sure answers were correct and explained clearly. They even used a “teacher model” (a stronger AI) to produce multiple good solution paths for the same question. This “answer diversity” helps the model learn different ways to solve problems.

- Stage 2: Reinforcement Learning (RL)

  - Now imagine coaching with practice tests: the AI tries to solve problems and gets feedback (a reward) when it’s right and follows the proper format. They used a smaller but diverse 74,000-example RL dataset. The team tested several training styles (think different coaching methods) to see which one keeps learning steady and improves the most:

    - GRPO, DAPO, and GSPO are three RL strategies. In simple terms, they control how the AI updates itself after trying problems.

    - GSPO worked best here: it was more stable, learned faster, and gave consistently strong results.
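One way to see how these strategies differ is in how they compare the new model to the old one after each attempt. The sketch below contrasts a per-token importance ratio (the GRPO style) with a single length-normalized ratio for the whole response (the GSPO style, which is the geometric mean of the token ratios). The log-probabilities and function names are invented for illustration and are not taken from the paper's code.

```python
import math

def token_level_ratios(new_logps, old_logps):
    """GRPO-style: one importance ratio per generated token."""
    return [math.exp(n - o) for n, o in zip(new_logps, old_logps)]

def sequence_level_ratio(new_logps, old_logps):
    """GSPO-style: one length-normalized ratio for the whole response.

    This equals the geometric mean of the token-level ratios.
    """
    mean_log_ratio = sum(n - o for n, o in zip(new_logps, old_logps)) / len(new_logps)
    return math.exp(mean_log_ratio)

# Hypothetical per-token log-probabilities for one sampled response.
old_logps = [-1.0, -2.0, -0.5, -1.5]
new_logps = [-0.9, -2.6, -0.4, -1.3]

tok = token_level_ratios(new_logps, old_logps)
seq = sequence_level_ratio(new_logps, old_logps)
print("token-level ratios:", [round(r, 3) for r in tok])
print("sequence-level ratio:", round(seq, 3))
```

A single unusual token can swing its own token-level ratio a lot, while the sequence-level ratio averages that swing over the whole response, which is one intuition for the smoother training reported for GSPO.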

To keep things efficient, they also:

- Checked that longer answers aren’t always better. They encouraged the AI to think enough to be right—but not waste tokens on overly long reasoning.

- Mixed questions from different areas (like math with images and math with text) so the model learns to generalize.
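The idea of discouraging overly long answers can be sketched as a soft length penalty. The glossary notes that the paper adopts an overlength penalty similar to DAPO's; the shape below follows DAPO's soft overlong punishment (no penalty within budget, a linear ramp inside a buffer zone, and a full penalty beyond the hard limit). The specific limits used here are hypothetical and are not the paper's settings.

```python
def overlength_penalty(num_tokens: int, max_len: int, buffer_len: int) -> float:
    """Soft length penalty in the style of DAPO's overlong punishment.

    Returns 0 within budget, decreases linearly to -1 inside the buffer
    zone just below max_len, and stays at -1 beyond max_len.
    """
    soft_limit = max_len - buffer_len
    if num_tokens <= soft_limit:
        return 0.0
    if num_tokens <= max_len:
        return (soft_limit - num_tokens) / buffer_len
    return -1.0

# Hypothetical budget: 8192 tokens with a 1024-token buffer zone.
for length in (4000, 7680, 9000):
    print(length, overlength_penalty(length, max_len=8192, buffer_len=1024))
```

The penalty is added to the task reward, so a correct but rambling answer earns less than a correct and concise one.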

Technical terms in everyday language:

- Multimodal: the model uses more than one type of information (text + images).

- Teacher model: a stronger AI that provides high-quality solutions for the student AI to learn from.

- Answer diversity: different correct solution paths for the same question—like learning multiple ways to solve a math problem.

- Reward function: a score that tells the AI if its answer is correct and well-formatted.

- Benchmarks: standardized tests to measure how well the model performs.
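As a rough illustration of a reward function that scores both correctness and formatting, here is a minimal sketch. The format weight of 0.1 is the value reported for the paper's composite reward; the convex combination, the `<think>...</think> Answer: ...` template, and the exact-match check are assumptions made for this example only.

```python
import re

LAMBDA_FMT = 0.1  # format weight reported for the paper's composite reward

# Hypothetical output template: reasoning inside <think> tags, then "Answer: ...".
TEMPLATE = re.compile(r"^<think>(.+?)</think>\s*Answer:\s*(.+?)\s*$", re.DOTALL)

def composite_reward(response: str, ground_truth: str,
                     lambda_fmt: float = LAMBDA_FMT) -> float:
    """Weighted mix of an accuracy reward and a format reward.

    The combination rule (1 - lambda) * accuracy + lambda * format is an
    assumption of this sketch, not a formula confirmed by the source.
    """
    match = TEMPLATE.match(response.strip())
    format_reward = 1.0 if match else 0.0
    # In this sketch only a well-formed response can be checked by exact match.
    accuracy_reward = 1.0 if match and match.group(2) == ground_truth else 0.0
    return (1.0 - lambda_fmt) * accuracy_reward + lambda_fmt * format_reward

print(composite_reward("<think>2 + 2 is 4.</think> Answer: 4", "4"))
print(composite_reward("<think>2 + 2 is 5.</think> Answer: 5", "4"))
print(composite_reward("4", "4"))
```

With a small format weight, a well-formatted wrong answer earns only a little, so the model cannot score well by formatting alone.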

Main Findings and Why They Matter

The paper reports several important discoveries:

- A clear, open recipe improves performance across many tests. Their method beat a strong baseline model (Qwen2.5-VL-7B-Instruct) by about 11.6% on nine multimodal reasoning benchmarks.

- Better teachers and more varied answers help a lot. Using a strong teacher model and creating multiple verified solutions per question made the student AI learn deeper and more reliably.

- Don’t over-filter the data. Removing too many samples (by length or estimated difficulty) hurt performance because it reduced diversity.

- Mixing domains boosts generalization. Combining image-based math and text-based math with general reasoning data improved results across tasks.

- GSPO is the most stable RL training style in this setting. Among the tested methods, GSPO gave smoother training and better scores.

- Efficiency matters. The model reached high accuracy without overly long answers, meaning it reasons well without wasting tokens.

- Skills transfer across modes. As multimodal reasoning improved, pure text reasoning also got better, suggesting the AI’s “thinking skills” carry over.

Implications and Potential Impact

This work offers a practical, open, and reproducible path for building multimodal reasoning systems:

- It shows how to carefully design datasets and training steps to teach AI to think with both images and text.

- It highlights that answer diversity and cross-domain mixing are powerful levers for better reasoning.

- It provides evidence that stable RL strategies like GSPO can sharpen reasoning further without sacrificing efficiency.

- Because the code, data pipeline, and model are open-sourced, other researchers and developers can follow, test, and improve the recipe. This can accelerate progress toward reliable, efficient AI that can help with complex tasks in education, science, and everyday problem-solving.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise, actionable list of what remains missing, uncertain, or unexplored in the paper, intended to guide future research.

- Generality across backbones and scales: The recipe is validated primarily on Qwen2.5-VL-7B; it is unclear how it transfers to different architectures (e.g., LLaVA/InternVL families), parameter scales (1–3B, 13–34B), and vision encoders/resolutions. Evaluate cross-backbone and cross-scale robustness with matched training budgets.

- Modality breadth: The pipeline focuses on image–text reasoning. Extend the pipeline to other modalities (video, audio, 3D, time-series) and to multi-image or multi-document reasoning, and evaluate it there, to assess true multimodal generality.

- Interactive/multi-turn reasoning: Training and evaluation remain single-turn QA-centric. Investigate dialog-level reasoning, planning, tool invocation over multiple turns, and memory across sessions.

- Contamination auditing: No systematic analysis of train–test overlap or leakage across SFT/RL datasets and benchmarks (e.g., WeMath, MathVista, MathVerse, MMMU). Perform hash/near-duplicate checks (image-text, OCR content, metadata) against test splits and report contamination rates.

- Reasoning faithfulness: Final-answer verification is used, but step-wise correctness and faithfulness of chain-of-thought traces are not evaluated. Develop step-level validators (e.g., symbolic/algebraic checkers, program execution), and measure agreement between steps and final answers.

- LLM-as-judge reliability: The distillation filter relies partly on an LLM-as-judge. Quantify judge error rates (false positives/negatives), bias across domains, and sensitivity to prompt variants; consider human-audited calibration sets.

- Teacher bias and diversity: Only a single high-capacity teacher (Qwen3-VL-235B) is used in the final recipe. Evaluate ensembles of teachers, domain-specialist teachers, and mixed teacher styles to reduce stylistic bias and improve robustness.

- Answer diversity scaling beyond ×8: The data benefit from ×8 answer sampling, but diminishing returns, optimal sampling rates, and cost-performance curves beyond ×8 are not explored. Map the diversity–compute trade-off and identify saturation points.

- Filtering strategies: The paper concludes “over-filtering” harms performance based on length/difficulty filters. Test alternative filters (e.g., contradiction/consistency checks across traces, semantic diversity selection, step-quality scoring) that preserve diversity while reducing noise.

- Reward design ablations: The composite reward uses a fixed format weight (λ_fmt=0.1) with accuracy-only task rewards. Ablate λ_fmt, introduce step-level rewards, partial credit for plausible intermediate steps, and penalties for hallucinated visual references.

- Verifiable rewards for visual tasks: RL uses exact-answer checks but does not integrate multimodal verifiers (OCR, chart parsers, table extractors, geometric solvers). Incorporate programmatic validators tailored to visual domains to strengthen RL signals.

- Tool-use integration: The pipeline does not train or evaluate explicit tool use (OCR, calculators, code execution, plotting). Assess whether tool-augmented RL improves accuracy and efficiency on chart/science/math tasks.

- RL algorithm fairness and hyperparameter sensitivity: GSPO outperforms DAPO/GRPO under the chosen settings, but hyperparameter parity and sensitivity are not exhaustively explored. Conduct controlled sweeps (KL schedules, clip thresholds, entropy targets, rollout batch sizes) to ensure fair comparisons.

- RL dataset size and composition: Only a ~74k RL dataset is used. Study scaling laws for RL (size, domain mixture, difficulty distributions), online data generation, and dynamic replay buffers to assess how RL data volume/composition affects training stability and generalization.

- Curriculum learning variants: Difficulty-based curriculum (teacher pass rate) did not help, but alternative curricula (competence-based pacing, uncertainty-aware sampling, interleaved domain blocks, self-play progression) remain unexplored.

- Stability controls beyond rollout count and temperature: Investigate KL annealing, adaptive entropy regularization, reward normalization strategies, and advantage estimation variants to prevent collapse (especially in DAPO) and improve convergence.

- Efficiency metrics beyond tokens: Efficiency analysis focuses on token length. Report and compare latency, throughput, memory footprint, energy, and cost per correct answer; study inference-time controls (e.g., adaptive thinking, early-exit criteria).

- Test-time sampling strategies: Beyond fixed decoding, evaluate best-of-n, self-consistency, confidence-based reranking, and mixture-of-temperatures to balance accuracy and budget at inference.

- Robustness and safety: No experiments on adversarial or perturbed inputs, safety/fairness biases, or hallucination detection. Test robustness to image corruptions, spurious chart labels, adversarial prompts, and measure harmful outputs.

- Non-English and multilingual generalization: The datasets and benchmarks are predominantly English. Assess multilingual reasoning, cross-lingual transfer, and culturally diverse visual contexts.

- Benchmark coverage and head-to-head gaps: Despite strong overall gains, OVR (Open Vision Reasoner) remains stronger on the MathVision test set. Analyze failure modes and per-category breakdowns (e.g., geometry vs. algebra vs. charts), and explore targeted data and training to close these gaps.

- Explainability and user trust: No evaluation of how well the model’s reasoning traces align with human-understandable steps. Introduce human evaluation of trace clarity, faithfulness, and error diagnosis utility.

- Domain transfer analysis: Cross-domain improvements are reported but not dissected. Quantify which source domains contribute to gains in which target domains and detect negative transfer or catastrophic forgetting.

- Hardware and compute transparency: The main text lacks detailed reporting of GPU types, training hours, batch sizes, and token budgets per stage. Provide full compute accounting to enable reproducible scaling comparisons.

- Licensing and data provenance: Teacher-generated traces and mixed datasets may have varying licenses. Audit and clarify licensing, consent, and provenance to ensure compliant redistribution and use.

- Long-context and document tasks: MMMU includes multi-disciplinary tasks but not necessarily long documents. Evaluate long-context visual-text tasks (multi-page PDFs, charts + tables + captions) and memory over extended inputs.

- Multi-image compositional reasoning: The pipeline does not explicitly target tasks requiring reasoning across multiple images or views. Add benchmarks and training for multi-image composition and spatial-temporal relations.

- Failure case repository: Provide a systematic catalog of errors (formatting, math steps, visual grounding, chart misreads) to guide targeted data augmentation and reward shaping in subsequent iterations.

- Human-in-the-loop training: The pipeline is fully automated. Explore minimal human verification or preference signals for difficult examples to improve trace quality and reduce judge bias.

- Generalization to open-ended tasks: RL relies on exact-answer rewards; applicability to open-ended, non-verifiable tasks (explanations, hypotheses) remains unclear. Investigate proxy rewards (consistency, entailment) and hybrid RLHF/RLAIF setups for such tasks.

- Privacy and sensitive content: No discussion of sensitive data handling in images (faces, documents). Establish filtering and redaction protocols and quantify privacy risks.
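The contamination-auditing item above suggests hash or near-duplicate checks against test splits. A minimal, text-only sketch of such a check is shown below, using word n-gram Jaccard similarity. The threshold, the example questions, and all function names are hypothetical; a real audit would also compare images and OCR content and would likely use MinHash or a similar index instead of this quadratic comparison.

```python
import re

def shingles(text: str, n: int = 3) -> set:
    """Set of word n-grams after lowercasing and dropping punctuation."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < n:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard(a: set, b: set) -> float:
    """Jaccard similarity of two sets; 0.0 if either set is empty."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)

def contamination_rate(train_texts, test_texts, threshold: float = 0.8) -> float:
    """Fraction of test items that have a near-duplicate in the training set."""
    train_sets = [shingles(t) for t in train_texts]
    flagged = 0
    for text in test_texts:
        s = shingles(text)
        if any(jaccard(s, tr) >= threshold for tr in train_sets):
            flagged += 1
    return flagged / len(test_texts) if test_texts else 0.0

# Hypothetical questions, invented for illustration.
train = ["What is the area of a triangle with base 4 and height 3?",
         "How many bars in the chart exceed 50?"]
test = ["what is the area of a triangle with base 4 and height 3",
        "Find the slope of the line shown in the figure."]
print(contamination_rate(train, test))
```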

Glossary

- Answer diversity: Variety in verified solutions per question used to improve generalization. "demonstrating that answer diversity is a crucial factor for data quality and model generalization."

- Answer trace: A full step-by-step reasoning trajectory produced for a question. "selecting teacher models to generate new answer traces."

- Answer verification: Checking whether a generated solution’s final answer is correct. "applying both format validation and answer verification."

- Cold-start dataset: An initial SFT dataset that gives a pretrained model baseline reasoning ability before RL begins. "we construct an 874K-sample cold-start dataset with rigorous step-by-step validation"

- Composite reward function: A reward that combines multiple objectives (e.g., accuracy and format) in RL. "we adopt a composite reward function similar to those in~\citep{dsr1_cite,openr1}, which combines both aspects in a weighted manner."

- Critic network: A model component estimating value/advantage in RL; removed in GRPO. "removes the need for a critic network and Generalized Advantage Estimation (GAE)"

- Cross-domain generalization: Transfer of learned reasoning skills across different modalities or tasks. "indicating strong cross-domain generalization of reasoning capabilities."

- Curriculum learning: Training strategy that orders tasks from easy to hard. "Ablation results in~\cref{tab:rl-abl} show that curriculum learning does not outperform the original mixed sampling approach"

- DAPO (Decoupled Clip and Dynamic Sampling Policy Optimization): An RL algorithm designed to stabilize optimization and mitigate bias. "Decoupled Clip and Dynamic Sampling Policy Optimization (DAPO) addresses several limitations of GRPO, including entropy collapse, training instability, and length bias"

- Data distillation: Using a stronger model to generate higher-quality supervision for training. "The dataset is constructed through the following stages: 1) Data Sourcing, 2) Data Distillation and Scaling, and 3) Domain Mixing."

- Decoupled clipping: A modification to PPO-style clipping that sets the lower and upper clipping bounds independently rather than using one symmetric range. "DAPO introduces a decoupled clipping mechanism"

- Deduplicate: Remove redundant samples via similarity checks. "We then deduplicate by computing both image and text similarities"

- Difficulty-based sampler: A sampling strategy selecting problems by measured difficulty. "employs a difficulty-based sampler, starting with easy tasks and gradually shifting to harder ones."

- Domain mixing: Combining datasets from different domains to improve generalization. "3) Domain Mixing."

- Entropy collapse: A failure mode in RL where output diversity drops, harming learning. "including entropy collapse, training instability, and length bias"

- GAE (Generalized Advantage Estimation): A technique for estimating advantages in RL to reduce variance. "removes the need for a critic network and Generalized Advantage Estimation (GAE)"

- GRPO (Group Relative Policy Optimization): A PPO variant using group-normalized rewards without a critic. "Group Relative Policy Optimization (GRPO) is an efficient variant of PPO"

- GSPO (Group Sequence Policy Optimization): An RL method optimizing sequence-level ratios to reduce token-level bias. "Group Sequence Policy Optimization (GSPO) tackles the token-level importance bias inherent in GRPO"

- i.i.d. (independent and identically distributed): Assumption that samples are independent and drawn from the same distribution. "Let each data pair be i.i.d from a distribution "

- Importance ratio: The ratio measuring how probable a sequence is under current vs. old policy. "where is the importance ratio based on sequence likelihood."

- KL-divergence penalty: A regularization term constraining policy updates to remain close to the reference. "incorporates likelihood-ratio clipping with a KL-divergence penalty"

- Liger-Kernel: A library of optimized Triton kernels for LLM training that improves throughput and reduces memory use. "we adopt online packing and the Liger-Kernel to accelerate training efficiency."

- Likelihood-ratio clipping: PPO-style constraint limiting how much the policy can change per update. "incorporates likelihood-ratio clipping with a KL-divergence penalty"

- LLM-as-judge: Using an LLM to automatically evaluate correctness or quality of outputs. "and an LLM-as-judge check are retained"

- LMRMs (Large Multimodal Reasoning Models): Multimodal models specialized for complex reasoning tasks. "training Large Multimodal Reasoning Models (LMRMs) from open-source LMMs"

- Online packing: A data-loading technique that packs multiple samples for efficient training throughput. "we adopt online packing and the Liger-Kernel to accelerate training efficiency."

- Overlength penalty: A penalty discouraging excessively long reasoning outputs. "we adopt a similar overlength penalty strategy as proposed in DAPO~\citep{yu2025dapoopensourcellmreinforcement} to mitigate the overthinking behavior"

- Policy gradient variance: Variability in gradient estimates that can destabilize RL training. "suggesting that excessive exploration amplifies policy gradient variance and destabilizes optimization."

- PPO (Proximal Policy Optimization): A widely used RL algorithm with clipped objectives. "Group Relative Policy Optimization (GRPO) is an efficient variant of PPO"

- Rejection sampling: Selecting high-quality samples by discarding those failing specified criteria. "By carefully selecting an appropriate teacher model for rejection sampling"

- Reinforcement learning (RL): Training paradigm optimizing actions by maximizing expected rewards. "supervised fine-tuning (SFT) and reinforcement learning (RL)."

- RLVR (Reinforcement Learning with Verifiable Rewards): RL approach where rewards are automatically verifiable. "reinforcement learning with verifiable rewards (RLVR)"

- Reward function: A function scoring outputs based on correctness or other criteria. "and is a predefined reward function that quantifies whether the response yields ."

- Rollout count: Number of responses sampled per prompt during RL training. "rollout temperature and rollout count."

- Rollout temperature: Sampling temperature controlling exploration during generation. "Unless otherwise specified, the rollout temperature is set to 1.0 by default."

- Rule-based validator: Automated checker ensuring outputs meet format or correctness rules. "pass a rule-based validator and an LLM-as-judge check"

- Sequence-level importance ratio: Optimization using sequence likelihood ratios instead of token-level ratios. "by introducing a sequence-level importance ratio for optimization."

- SFT (Supervised fine-tuning): Training with labeled data to align model behavior before RL. "supervised fine-tuning (SFT)"

- Step-wise outputs: Structured reasoning responses presented in explicit steps. "ensuring consistent step-wise outputs"

- Teacher model: A stronger model used to produce higher-quality supervision for distillation. "By carefully selecting an appropriate teacher model for rejection sampling"

- Teacher sampling: Generating multiple answers per question from a teacher to increase diversity. "Repeated teacher sampling provides a scaling axis."

- Token efficiency: Achieving accuracy with fewer tokens or shorter reasoning traces. "Token efficiency comparison with OVR. OpenMMReasoner achieve better accuracy while using significantly less token budget."

- Top-performing checkpoint: The best snapshot of model weights used as a starting point for further training. "we use temperature 1.0 and the top-performing checkpoint from the SFT phase"

- Unified reasoning format: A standardized structure for reasoning responses to reduce training instability. "We standardize all samples to a unified reasoning format"

- Verl: An RL framework used to accelerate training. "we utilize verl~\citep{sheng2024hybridflow} and vllm~\citep{kwon2023efficient} to accelerate the training process."

- Verifiable rewards: Rewards determined by automatically checkable criteria (e.g., exact match). "reinforcement learning with verifiable rewards (RLVR)"

- vllm: A high-throughput inference and serving engine for large language models; in RL training it is typically used to generate rollouts quickly. "we utilize verl~\citep{sheng2024hybridflow} and vllm~\citep{kwon2023efficient} to accelerate the training process."

- Wall-clock time: Actual elapsed time during training, regardless of token counts or steps. "actual wall-clock time remains comparable between and due to similar token-length constraints."
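To make the GRPO entry concrete, the sketch below shows group-normalized advantages: each response's reward is compared with the mean and standard deviation of the rewards for the same prompt, so no critic network is needed. The rewards are hypothetical, and the use of the population standard deviation with a small epsilon is an assumption; implementations differ on both details.

```python
import statistics

def group_relative_advantages(rewards, eps: float = 1e-6):
    """Normalize rewards within one group of responses to the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; an assumption of this sketch
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for 8 rollouts of one prompt (1.0 = correct, 0.0 = wrong).
rewards = [1.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0]
adv = group_relative_advantages(rewards)
print([round(a, 3) for a in adv])
```

When every response in a group gets the same reward, all advantages are zero and the prompt contributes no learning signal, which is one reason rollout count and data difficulty matter for RL stability.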

Practical Applications

Overview

The paper introduces OpenMMReasoner, a fully transparent, open-source training recipe for large multimodal reasoning models that unifies supervised fine-tuning (SFT) and reinforcement learning (RL). Its key innovations—teacher-distilled multi-answer datasets, rigorous validation and formatting, cross-domain mixing, and an RL strategy favoring GSPO with format-aware rewards and token-efficiency penalties—deliver reproducible, scalable gains across diverse visual and textual reasoning benchmarks. Below are practical, real-world applications derived from these findings, organized by their deployment horizon.

Immediate Applications

The following applications can be implemented now by leveraging the open-source pipeline, datasets, and training configurations presented in the paper.

- Education: Visual math tutoring with step-by-step reasoning

  - Tools/Workflow: Use the 874k SFT dataset and the GSPO RL configuration to deploy a “Visual Math Tutor” that explains geometry diagrams, graphs, and puzzle VQA with verified steps and format consistency.

  - Assumptions/Dependencies: Quality OCR/vision preprocessing for diagrams; safe pedagogy practices; access to compute for fine-tuning.

- Finance and Business Intelligence: Chart and dashboard Q&A copilot

  - Tools/Workflow: “ChartQA Copilot” embedded in BI tools to answer questions about dashboards, trending plots, KPI charts, and provide concise, token-efficient rationales using formatting normalization and overlength penalties.

  - Assumptions/Dependencies: Reliable chart parsing; enterprise data access and governance; token cost constraints.

- Scientific Research Support: Figure and table explainer for papers

  - Tools/Workflow: “FigureSense” assistant integrated with PDF pipelines to interpret plots, tables, flowcharts, and provide verifiable reasoning; adopt the standardized chain-of-thought formatting from SFT.

  - Assumptions/Dependencies: Accurate figure extraction/OCR; domain calibration for science-specific visual conventions.

- Enterprise Document Processing and Compliance: Q&A over PDFs with charts/photos

  - Tools/Workflow: Ingest multimodal documents (reports, regulatory filings, manuals) and answer compliance questions with step-by-step rationales; enforce format rewards for consistent outputs.

  - Assumptions/Dependencies: Privacy and policy compliance; high-fidelity image/text extraction; domain-specific validators.

- Customer Support in Manufacturing and Electronics: Troubleshooting via manuals with diagrams

  - Tools/Workflow: “Visual Troubleshooter” that reasons over assembly diagrams and annotated manuals; deploy RLVR-style validation to check step correctness.

  - Assumptions/Dependencies: Domain corpus availability; robust OCR and diagram parsing; retrieval integration for context.

- ML Operations and Data Labeling: Cost-effective dataset curation with multi-trace rationales

  - Tools/Workflow: Apply teacher-distilled multi-answer sampling (×8) with rejection sampling and LLM-as-judge verification to build domain datasets with diverse reasoning traces.

  - Assumptions/Dependencies: Strong teacher models (e.g., Qwen3-VL-235B) and licensing; reliable rule-based validators; compute budget.

- Software Productivity Suites: “Explain this chart/diagram” feature

  - Tools/Workflow: Integrate OpenMMReasoner into presentations, spreadsheets, and documentation tools to generate concise, structured explanations of embedded visuals.

  - Assumptions/Dependencies: Efficient inference (vLLM), token budgets, UX guardrails.

- Benchmarking and Reproducibility in Academia/Industry: Transparent evaluation pipelines

  - Tools/Workflow: Standardize LMM reasoning evaluation with LMMs-Eval and the paper’s open data pipeline; reproduce ablations (teacher selection, answer diversity, domain mixing) for internal QA.

  - Assumptions/Dependencies: Benchmark licensing; consistent prompt formatting; agreed-upon metrics.

- Policy and Procurement: Transparency-first AI vendor requirements

  - Tools/Workflow: Use the paper’s fully open pipeline as a reference for procurement policies (data curation disclosure, RL recipe, reward functions, evaluation logs).

  - Assumptions/Dependencies: Regulatory alignment; IP and data rights; audit capacity.

- Token-Efficient Inference for Cost Control

  - Tools/Workflow: Adopt the RL overlength penalty and format-aware rewards to reduce unnecessary reasoning tokens while maintaining accuracy in production systems.

  - Assumptions/Dependencies: Careful tuning of penalties to avoid under-thinking; monitoring for performance trade-offs.
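The dataset-curation workflow listed above (multi-answer teacher sampling with rejection sampling) can be sketched as a small loop: sample several traces per question and keep only those that pass every validator. Everything below is a toy stand-in. The teacher, the output template, and the validators are hypothetical; a real pipeline would call a teacher model and add an LLM-as-judge check.

```python
import random

def rejection_sample(question, reference_answer, teacher, validators, num_samples=8):
    """Sample several teacher traces and keep those that pass every validator."""
    kept = []
    for _ in range(num_samples):
        trace = teacher(question)
        if all(check(trace, reference_answer) for check in validators):
            kept.append(trace)
    return kept

# --- Toy stand-ins (hypothetical) ---
_RNG = random.Random(0)  # seeded so the toy run is repeatable

def toy_teacher(question):
    """Pretend teacher: varies its solution style and is sometimes wrong."""
    answer = _RNG.choice(["12", "12", "15"])
    style = _RNG.choice(["the area formula", "counting unit squares"])
    return f"<think>Using {style}.</think> Answer: {answer}"

def has_valid_format(trace, _reference):
    """Rule-based format check against the hypothetical template."""
    return trace.startswith("<think>") and "</think> Answer: " in trace

def answer_matches(trace, reference):
    """Final-answer verification by exact match."""
    return trace.rsplit("Answer: ", 1)[-1].strip() == reference

traces = rejection_sample("Area of a 3x4 rectangle?", "12",
                          toy_teacher, [has_valid_format, answer_matches])
print(len(traces), "of 8 traces kept")
```

Keeping several verified traces per question, rather than one, is what the paper refers to as answer diversity.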

Long-Term Applications

These applications require additional research, domain-specific data, safety validation, or scaling beyond the current image–text focus.

- Healthcare Imaging Decision Support with Explainable Reasoning

  - Sector: Healthcare

  - Tools/Workflow: Adapt the recipe to medical imaging (radiology, pathology) with verified step-wise rationales and strict format adherence; RL with domain-specific reward validators.

  - Assumptions/Dependencies: Clinical-grade datasets, rigorous validation protocols, regulatory approval (e.g., FDA), privacy and safety guardrails.

- Vision-Language Planning in Robotics

  - Sector: Robotics

  - Tools/Workflow: Extend the GSPO-based RL to embodied settings where visual reasoning guides planning; integrate closed-loop rewards from sensors and simulators.

  - Assumptions/Dependencies: Real-time perception, reliable reward signals in dynamic environments, safety-critical testing.

- Autonomous Scientific Assistant for Data Integrity Checks

  - Sector: Science/Research

  - Tools/Workflow: Verify experimental plots and statistical charts, flag inconsistencies, and suggest corrections with multi-step rationales.

  - Assumptions/Dependencies: Domain-tailored validators (statistical tests, units, provenance), lab data access.

- Financial Research Copilot with Auditable Chain-of-Thought

  - Sector: Finance

  - Tools/Workflow: Analyze earnings reports, filings, and market charts; produce audit trails of reasoning for compliance.

  - Assumptions/Dependencies: Proprietary data ingestion, compliance with record-keeping and confidentiality laws, reliability guarantees.

- Adaptive, Accredited Multimodal Tutoring Systems

  - Sector: Education

  - Tools/Workflow: Build curriculum-aware agents that assess student work including diagrams and handwritten solutions, delivering step-by-step feedback.

  - Assumptions/Dependencies: Pedagogical validation, fairness and accessibility, integration with LMS platforms.

- Legal eDiscovery with Multimodal Reasoning

  - Sector: Legal

  - Tools/Workflow: Review exhibits, photos, diagrams, and contracts with consistent reasoning and verifiable steps.

  - Assumptions/Dependencies: Sensitive data handling, evidentiary standards, robust open-ended reward design.

- Energy Grid and Industrial Monitoring: Visual Anomaly Detection with Explanations

  - Sector: Energy/Manufacturing

  - Tools/Workflow: Interpret SCADA screens, charts, and inspection images, providing reasoned alerts and concise diagnostics.

  - Assumptions/Dependencies: High-fidelity access to operational visuals, reliability and safety testing, domain benchmarks.

- Governance and Certification: Standardizing Rewarded Reasoning and Transparency

  - Sector: AI Governance/Policy

  - Tools/Workflow: Use format-aware rewards, token-efficiency constraints, and open data pipelines as certification criteria for safe, efficient multimodal systems.

  - Assumptions/Dependencies: Consensus on metrics and audits, standardized documentation practices.

- General-Purpose Multimodal RL Platform (Video/Audio/3D)

  - Sector: Software/Research

  - Tools/Workflow: Extend the open recipe to richer modalities, developing scalable data curation (teacher distillation, multi-answer sampling) and verifiable rewards for temporal and spatial tasks.

  - Assumptions/Dependencies: Large-scale multimodal datasets, domain-specific validators, compute scaling.

- Tool-Use and Agentic Workflows with Verifiable Rewards

  - Sector: Software

  - Tools/Workflow: Integrate calculators, chart parsers, OCR, and retrieval to strengthen verifiable rewards beyond math; orchestrate multi-step tool sequences with GSPO.

  - Assumptions/Dependencies: Robust tool APIs, failure handling, monitoring cost and reliability.

Cross-Cutting Assumptions and Dependencies

- Verifiable reward functions are essential; tasks with clear ground truth (math, structured VQA) benefit most, while open-ended domains require new validators.

- Access to strong teacher models and licensing for data and models is a prerequisite for effective distillation and multi-answer sampling.

- Compute resources are required for SFT and RL (especially for higher rollout counts); vLLM/verl help but cost remains a consideration.

- Formatting standardization reduces training instability; production systems must enforce consistent output templates.

- Safety, privacy, and alignment must be actively managed during RL to avoid degradation; guardrails and audit trails are recommended.

- Token-efficiency tuning (overlength penalties) should be monitored to avoid under-thinking in complex cases; domain-specific calibration may be necessary.
