TWIST2: Scalable, Portable, and Holistic Humanoid Data Collection System (2511.02832v1)

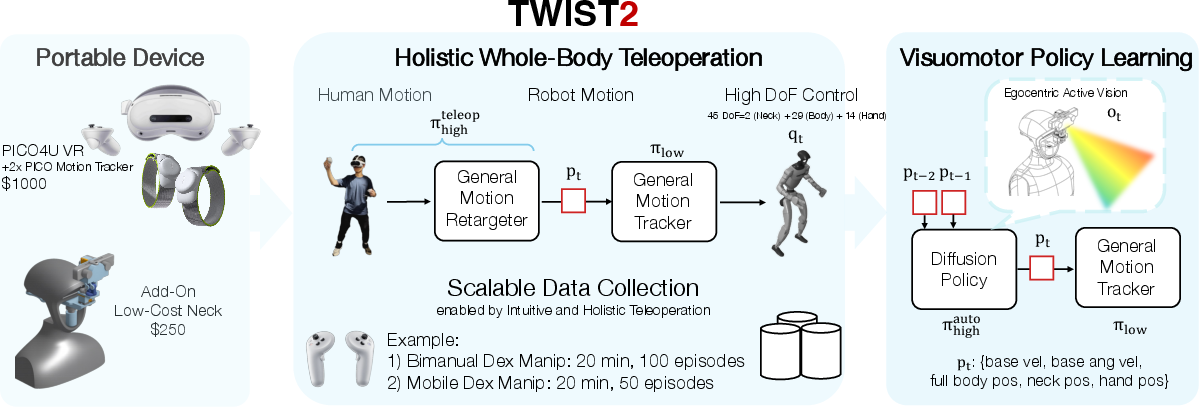

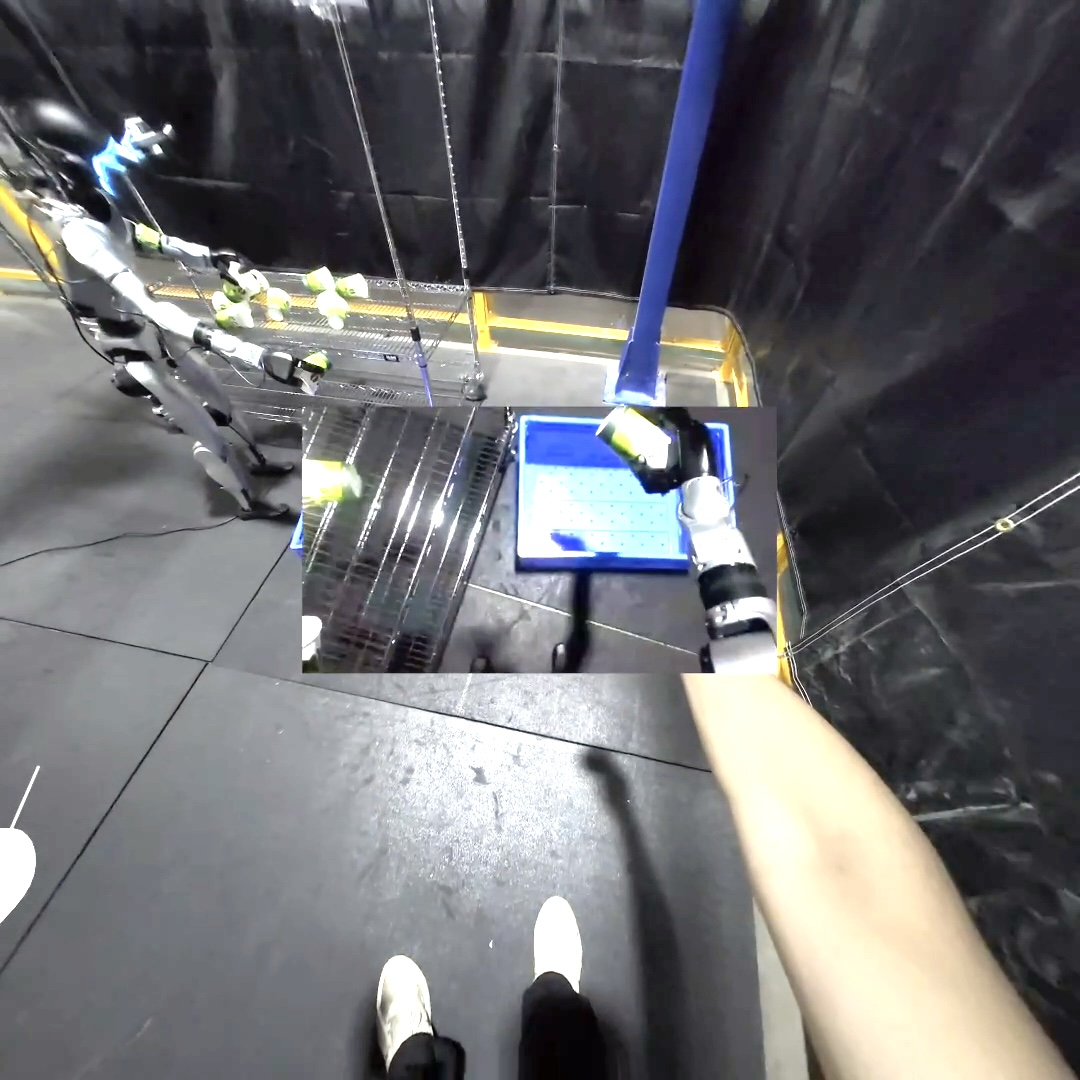

Abstract: Large-scale data has driven breakthroughs in robotics, from LLMs to vision-language-action models in bimanual manipulation. However, humanoid robotics lacks equally effective data collection frameworks. Existing humanoid teleoperation systems either use decoupled control or depend on expensive motion capture setups. We introduce TWIST2, a portable, mocap-free humanoid teleoperation and data collection system that preserves full whole-body control while advancing scalability. Our system leverages PICO4U VR for obtaining real-time whole-body human motions, with a custom 2-DoF robot neck (cost around $250) for egocentric vision, enabling holistic human-to-humanoid control. We demonstrate long-horizon dexterous and mobile humanoid skills and we can collect 100 demonstrations in 15 minutes with an almost 100% success rate. Building on this pipeline, we propose a hierarchical visuomotor policy framework that autonomously controls the full humanoid body based on egocentric vision. Our visuomotor policy successfully demonstrates whole-body dexterous manipulation and dynamic kicking tasks. The entire system is fully reproducible and open-sourced at https://yanjieze.com/TWIST2 . Our collected dataset is also open-sourced at https://twist-data.github.io .

Explain it Like I'm 14

What is this paper about?

This paper introduces TWIST2 (called Humanoid-DC in parts of this summary), a new way to control and teach humanoid robots using simple, portable tools. Instead of relying on expensive motion capture systems, it uses a VR headset and a few small trackers to stream a person’s whole-body movements to a robot. The system also helps collect lots of training examples quickly, so robots can later learn to act on their own using their cameras, like a person seeing through their own eyes.

What questions are the researchers trying to answer?

The paper focuses on three easy-to-understand questions:

- How can we control a humanoid robot’s entire body (arms, legs, torso, and head) in a natural way without expensive equipment?

- Can we collect a lot of high-quality demonstration data quickly with just one operator, so robots can be trained more easily?

- Can robots learn to use their own vision to move their whole body and complete complex tasks on their own?

How does the system work? (Methods explained simply)

Think of this system like puppeteering a robot, but with smart tools:

- VR-based teleoperation: A person wears a VR headset (PICO 4U) and two small motion trackers on their ankles. As they move, the system tracks their whole-body motion in real time. This is like playing a motion-controlled video game, except the “character” is a real robot.

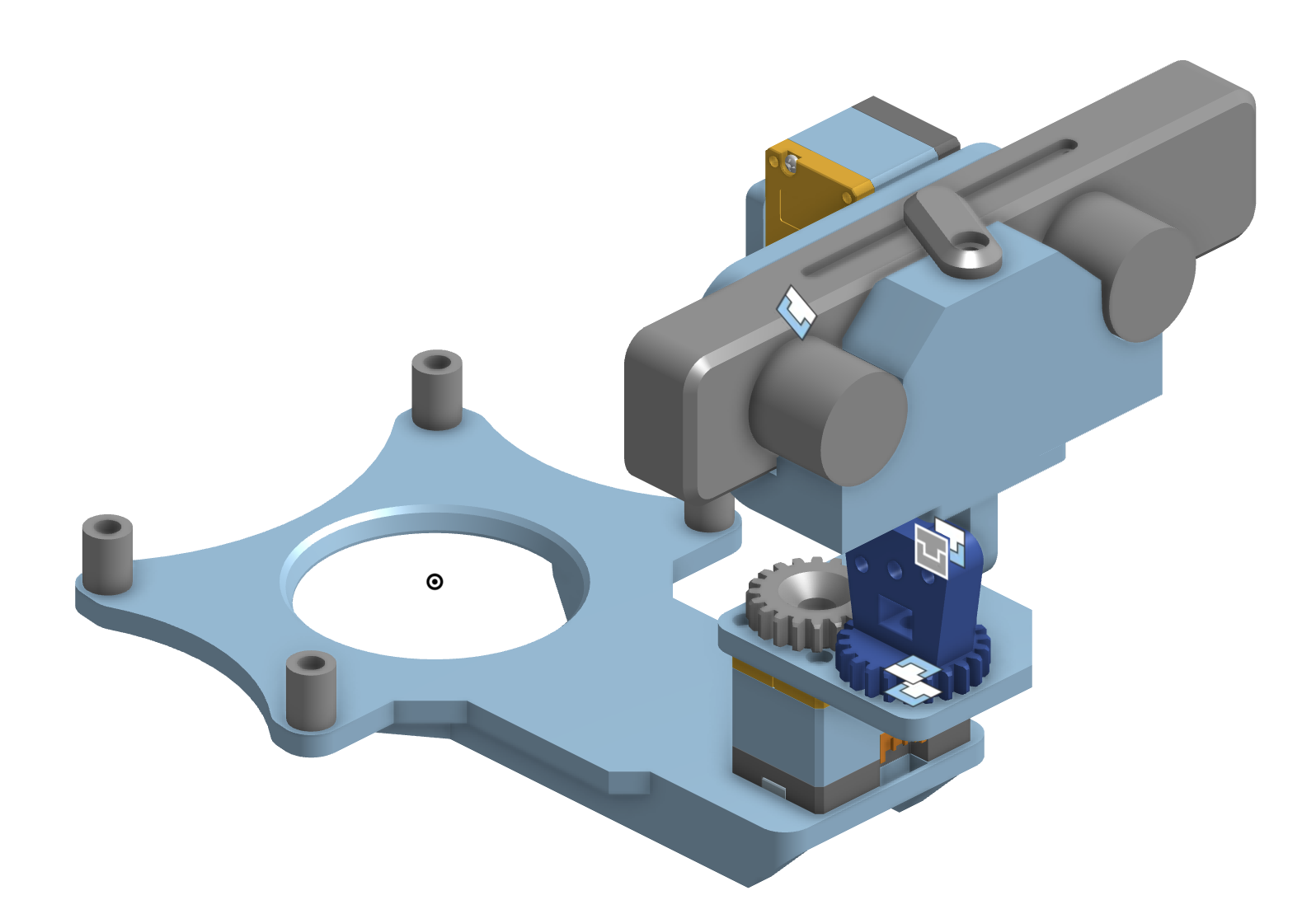

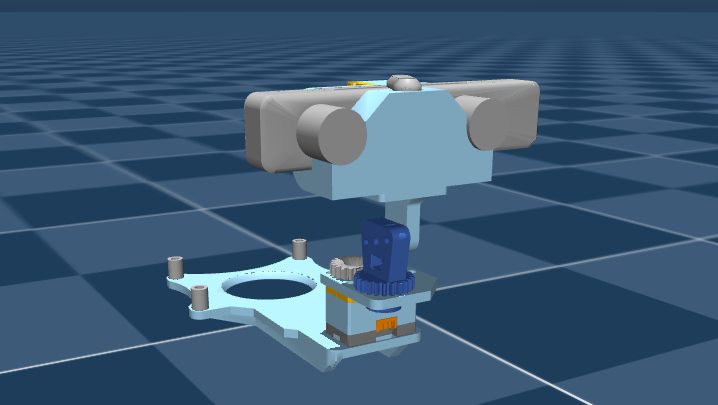

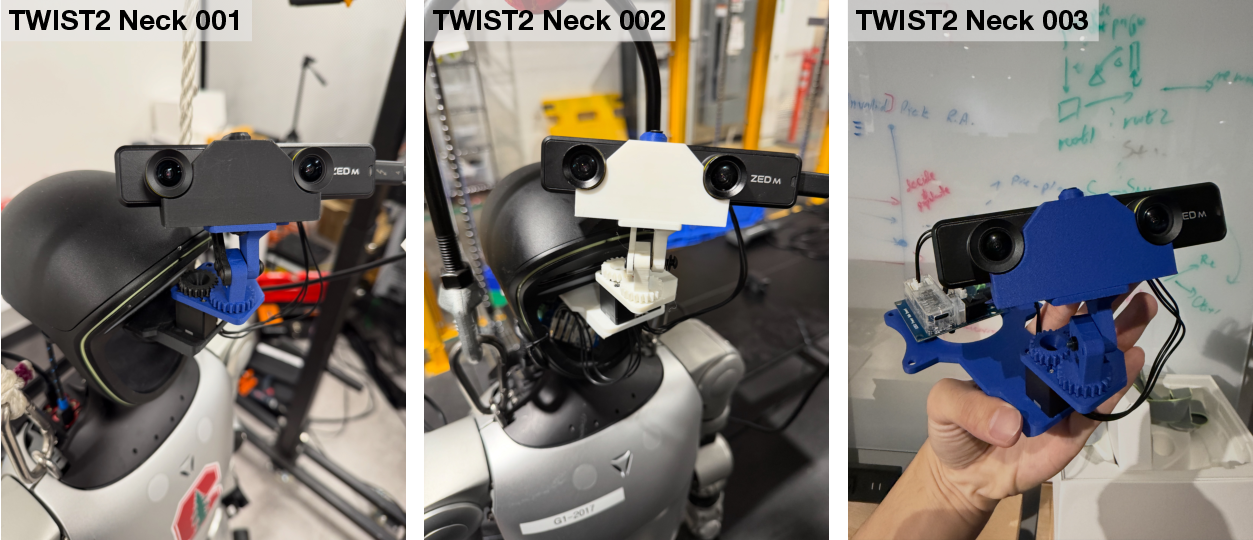

- Egocentric vision: The robot has a small, low-cost “neck” with two angles (yaw and pitch) and a stereo camera on it. “Egocentric” means the robot sees from its own point of view (first-person), like you do. Stereo vision gives depth, like watching a 3D movie, which helps with judging distance when grabbing or stepping.

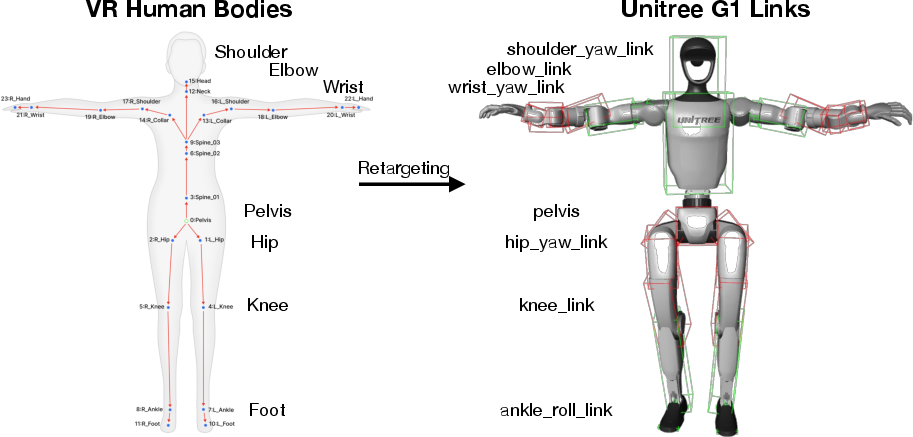

- Motion retargeting: The robot’s joints aren’t the same as a human’s. So the system translates (“retargets”) the person’s movements to the robot’s joints. Think of it as converting dance moves to match a different dancer’s body.

- Low-level controller (steady body control): This is like the robot’s “muscle control.” The system sends commands for where the robot’s joints should go, and a PD controller acts like springs and dampers to move smoothly and avoid jerking.

- High-level controller (decision-making): This can be either the human teleoperator (during data collection) or an AI policy (during autonomous operation). The AI policy learns from the collected examples to predict whole-body commands based on what the robot sees.

- Training the robot’s skills: First, the team collects lots of demonstrations—showing the robot how tasks are done through teleoperation. Then they train a “Diffusion Policy,” a type of AI that learns by imagining many possible next moves and selecting ones that match successful examples. This helps the robot plan sequences of whole-body actions from video input.
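
The "springs and dampers" description of the PD controller above can be made concrete with a minimal sketch. It assumes a single joint with unit inertia and hypothetical gains (`kp`, `kd`); none of these values come from the paper, and the real low-level controller is a learned policy whose joint targets are tracked by PD control on 29 joints.

```python
def pd_torque(q_target, q, qd, kp, kd):
    """PD law: a spring pulling toward the target plus a damper opposing velocity."""
    return kp * (q_target - q) - kd * qd


def simulate_joint(q_target, kp=100.0, kd=20.0, dt=0.001, steps=2000):
    """Integrate one unit-inertia joint driven only by the PD torque.

    The gains are illustrative assumptions; kd = 2 * sqrt(kp) gives
    critical damping for unit inertia, so the joint settles without overshoot.
    """
    q, qd = 0.0, 0.0
    for _ in range(steps):
        tau = pd_torque(q_target, q, qd, kp, kd)
        qd += tau * dt  # unit inertia: acceleration equals torque
        q += qd * dt    # semi-implicit Euler step
    return q


final_q = simulate_joint(q_target=1.0)
print(f"final joint angle: {final_q:.4f} rad")
```

In this toy setup, raising `kp` makes the joint reach its target faster, while lowering `kd` makes it overshoot and oscillate, which is the jerking behavior the damping term is there to suppress.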

Important details that make it practical:

- The VR setup is cheap and quick to put on (about $1000 total), with almost no calibration needed.

- The custom robot neck costs about $250 and can be 3D-printed and easily attached.

- The system has very low delay (<0.1 seconds), so the robot follows the user smoothly.

- A single operator can fully start, pause, and stop the robot using VR hand controllers.

What did they find?

The team showed several key results:

- Full-body, portable teleoperation: The robot can be controlled naturally—arms, legs, torso, and head together—without motion capture labs. It works in real environments, not just in studios.

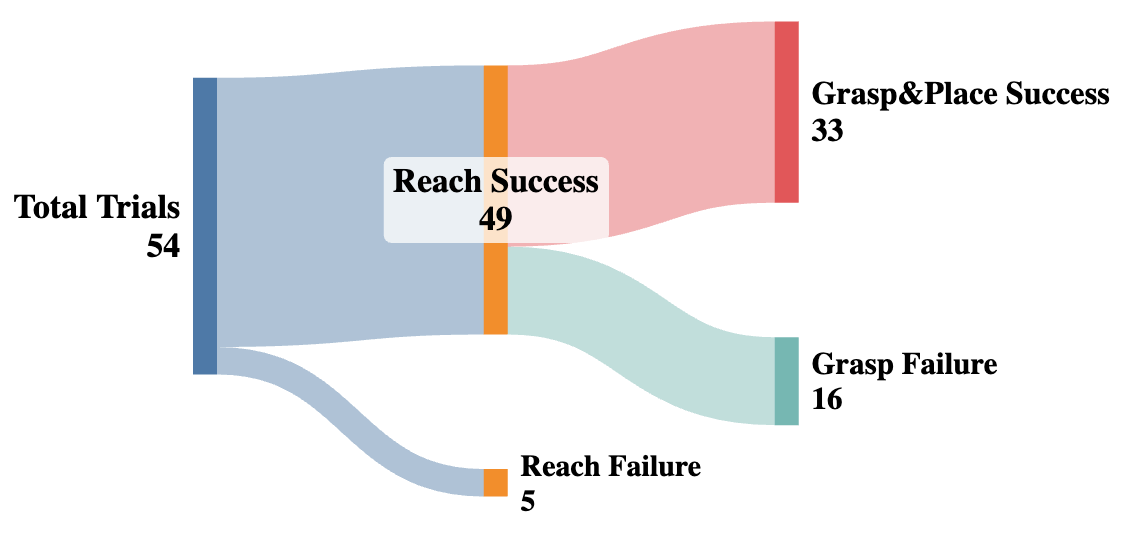

- Fast data collection: They collected about 100 successful demonstrations in 15–20 minutes, with nearly 100% success. This is great for training AI policies.

- Long, complex tasks with one operator:

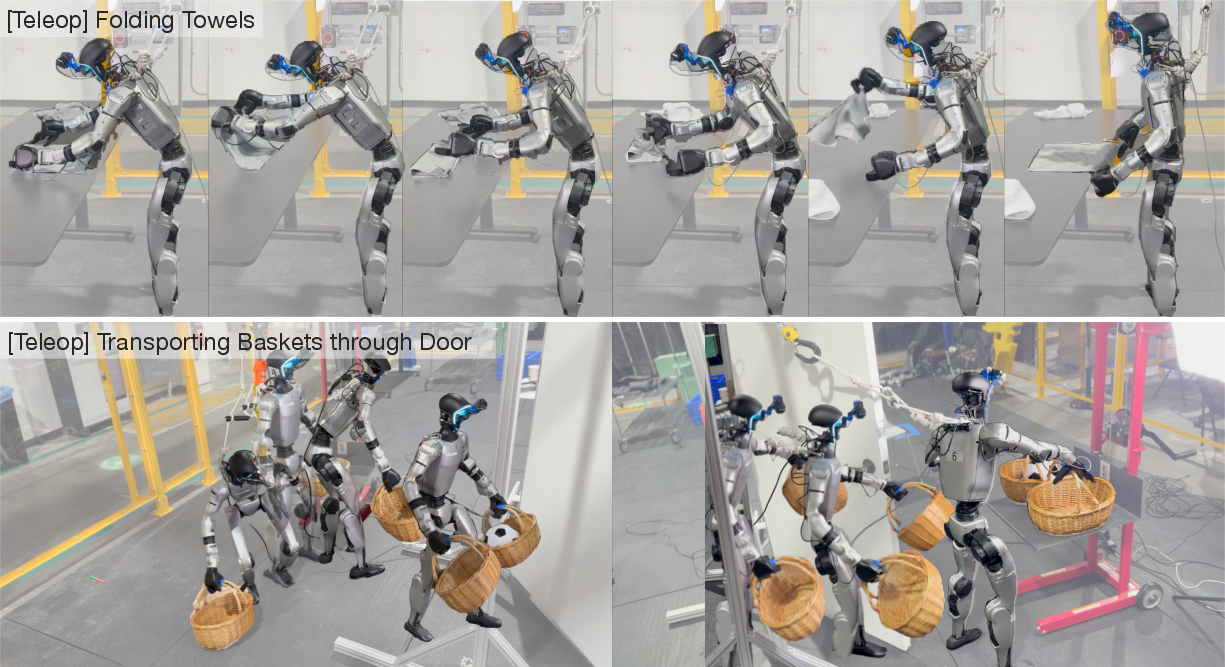

- Folding towels: The robot locates a towel, shakes it out, folds it in halves and thirds, presses the folds, and places it neatly. This needs fine wrist control, two-handed coordination, careful vision, and whole-body reaching.

- Transporting baskets through a door: The robot bends, picks up baskets, opens the door with its arm, walks through, and places them on a shelf.

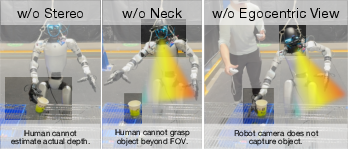

- Active stereo vision and a movable neck matter: Without stereo vision, operators often misjudge height and miss grasps. Without the neck, it’s hard to look around, making tasks slower and harder. With both, users are faster and more accurate.

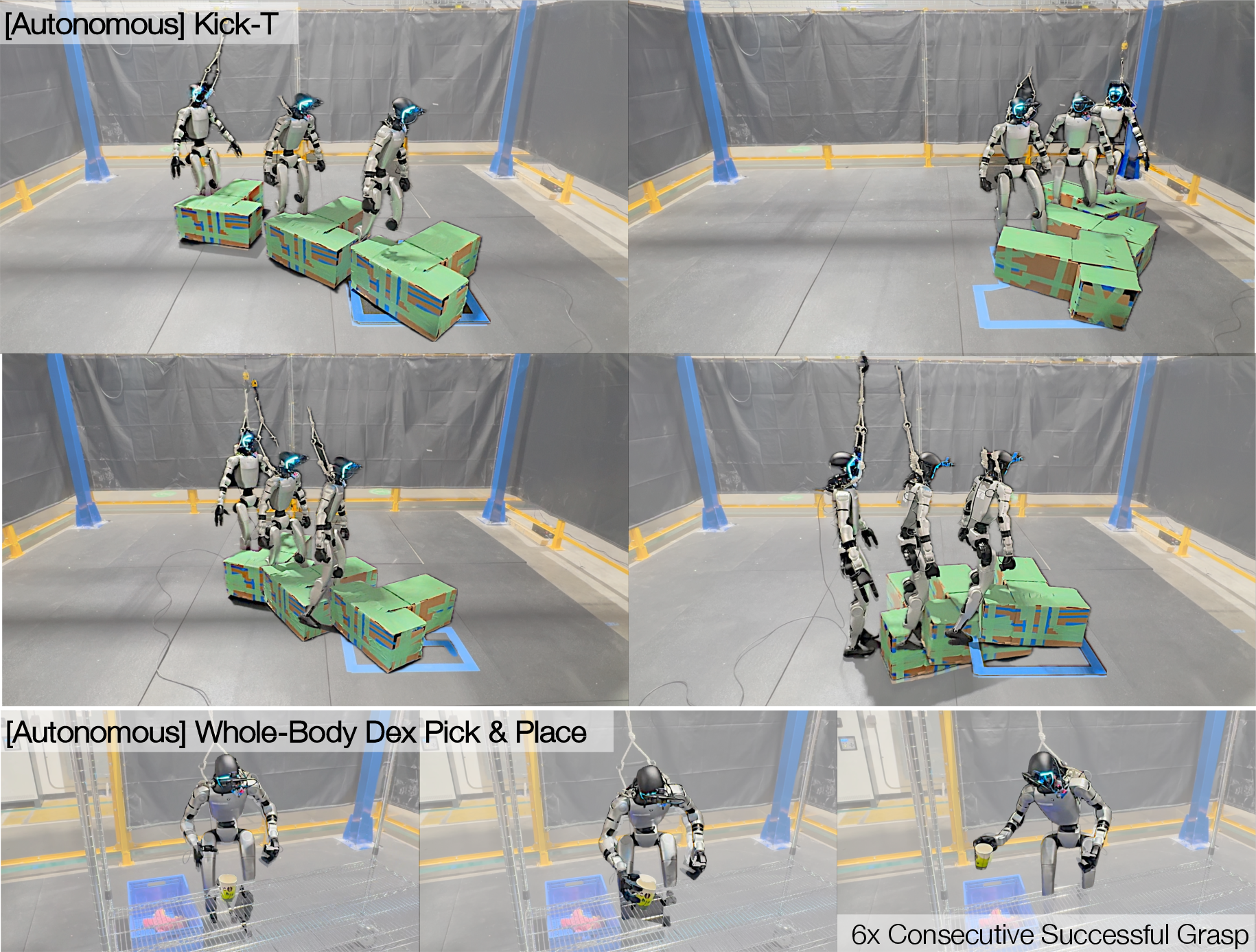

- Autonomous control from vision: After training, the robot can perform:

- Whole-body pick-and-place: Bend, grasp a cup, and place it in a box (works well, but very light objects like cups need very precise control).

- Kicking a T-shaped box to a target: Kick forward and step to balance; successful in most trials.
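
As a rough sanity check on the data-collection rate quoted in the findings above (about 100 demonstrations in 15–20 minutes), the implied average time per demonstration can be worked out directly. This assumes the demonstrations are collected back to back and that the quoted time includes any resets between them, which the summary does not state explicitly.

```latex
\frac{15 \times 60\ \text{s}}{100\ \text{demos}} = 9\ \text{s per demo},
\qquad
\frac{20 \times 60\ \text{s}}{100\ \text{demos}} = 12\ \text{s per demo}
```

So the quoted rate corresponds to short tasks of roughly 9 to 12 seconds each, not to long-horizon tasks such as towel folding.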

Why is this important?

Humanoid robots need lots of high-quality data to learn complex skills—like moving across rooms, using both hands, and coordinating balancing with manipulation. In the past, collecting this kind of data required expensive motion capture setups and multiple people. This system is:

- Portable: Works anywhere, not just labs.

- Affordable: Uses consumer VR gear and a $250 neck add-on.

- Scalable: One person can quickly collect many demonstrations.

- Holistic: Controls the whole body, not just arms or legs separately.

Because it’s open-sourced, other researchers and teams can build on it, speeding up progress in humanoid robotics.

What are the real-world impacts and future possibilities?

- Democratizing robot learning: More people and labs can collect useful data and train humanoid robots.

- Better whole-body skills: Robots can learn natural human-like coordination—grabbing, walking, opening doors, balancing while manipulating objects.

- Standardization: Using common, widely available hardware (like Unitree G1) helps everyone share and reuse data.

Limitations and next steps:

- Super-fast, athletic moves are still hard due to tracking and control limits.

- VR pose estimation isn’t as precise as high-end motion capture, especially for elbows and knees.

- Future work could improve accuracy, handle more dynamic motions, and teach robots to plan longer sequences and adapt angles (like walking around an object before kicking).

In short, this paper shows a practical, low-cost way to control and teach humanoid robots using VR and egocentric vision, and it proves that with the right system, we can collect lots of quality data and train robots to act on their own in the real world.

Knowledge Gaps

Knowledge Gaps, Limitations, and Open Questions

Below is a consolidated list of what remains missing, uncertain, or unexplored in the paper, framed as concrete, actionable items for future research.

- Quantitative motion fidelity benchmarking is absent: measure per-joint tracking error, foot slip distance, COM stability, contact timing accuracy, and latency end-to-end; compare against MoCap-based full-body systems (e.g., TWIST) and partial-control VR baselines (AMO/CLONE) across diverse tasks.

- Robustness to highly dynamic and perturbed motions is not demonstrated: evaluate sprinting, jumping, rapid turns, push recovery, external perturbations, and uneven terrain (stairs, slopes, compliant surfaces), and identify controller failure modes and recovery strategies.

- PICO whole-body pose accuracy and drift are under-characterized: provide per-joint error vs. optical MoCap, quantify drift over long horizons, assess occlusion-induced failures, and systematically study the impact of varying tracker counts (2 vs. 4–6 trackers) and calibration procedures.

- No ablation on retargeting design choices: quantify the benefits of pelvis-centric positional constraints for lower body and rotation-only constraints for upper body (e.g., foot sliding reduction, hand placement accuracy, upper-body teleoperation stability).

- Safety engineering remains informal: develop and evaluate formal safety layers (collision avoidance, torque/velocity limits, thermal management, fall detection, emergency stop behavior), and report incident statistics during long-horizon teleoperation.

- Data scale, diversity, and annotation are limited and under-specified: expand beyond two autonomous tasks, quantify environment diversity (lighting, textures, clutter), object variety (shape, mass, friction), and provide task metadata (success/failure labels, subtask boundaries) with automated segmentation and quality checks.

- Generalization of visuomotor policies is narrow: test cross-scene, cross-object, and cross-operator generalization; evaluate multi-task learning, curriculum strategies, and transfer to novel task variants (e.g., varied box shapes, orientations, masses, and kick directions).

- Depth and stereo are not leveraged in policy learning: assess whether using stereo disparity or depth maps (vs. RGB-only) improves performance; study sensitivity to latency, compression artifacts (H.265, JPEG), and stereo calibration errors.

- Visual backbone and representation choices lack comparison: ablate R3M vs. other pretraining (e.g., VC-1, DINOv2, EgoDex, Nymeria) and multimodal VLA models; evaluate frozen vs. fine-tuned encoders and temporal models (transformers vs. conv1D).

- Command interface design (relative root commands + whole-body joints) is not empirically validated: compare against root-velocity-only lower-body control on stability, legged manipulation success, and long-horizon drift without global state estimation.

- Low-level controller training lacks sim-to-real robustness analysis: report domain randomization strategies (friction, mass, delay), sim fidelity, and real-world transfer metrics; ablate dataset composition (AMASS/OMOMO vs. PICO motions) and reward shaping.

- Frequency and latency mismatches are unexplored: analyze the impact of 20 Hz high-level policy with 30–50 Hz low-level control on stability and responsiveness; study performance under network jitter and remote teleoperation settings.

- Hand retargeting is simplified to gripper-like control: investigate richer Dex31 finger mappings, tactile/force feedback integration, hybrid control modes (pinch vs. power grasp), and autonomous fine manipulation (e.g., cloth handling) beyond simple pick-and-place.

- Neck design constraints are not evaluated: quantify whether missing roll DOF limits performance (e.g., extreme head tilts, seated interactions), measure neck-induced camera vibration during gait, and provide calibration/standardization protocols for reproducible assembly.

- Autonomy on complex long-horizon tasks is limited: extend beyond teleoperated towel folding to fully autonomous execution with perception, sequencing, and error recovery; report task-level success rates and failure analyses.

- User studies are too small to draw general conclusions: scale to more operators (novice/expert), report learning curves, fatigue metrics, and human factors (UI usability, motion sickness) under egocentric stereo vs. third-person pass-through.

- Open-source artifacts are incomplete or unspecified: provide working links, BOMs, CAD/STLs, firmware, MuJoCo XMLs, calibration scripts, streaming pipelines, and reproducible instructions; document licenses and dataset schemas for reuse.

- Remote operation and scalability are untested: evaluate teleoperation over WAN/5G, bandwidth and latency tolerances, failover strategies, and multi-robot/multi-operator scenarios for fleet-scale data collection.

- Foot contact sensing and state estimation are missing: explore foot force/pressure sensors, contact classifiers, and state estimators to improve legged manipulation, kicking precision, and balance under variable friction.

- Cross-embodiment transfer remains open: test Humanoid-DC on other humanoids (e.g., Unitree R1, Booster K1/T1), provide morphology-aware retargeters and controller adaptation methods (e.g., policy distillation, dynamics matching).

- Integration with language or task-level planners is absent: investigate language-conditioned goal specification, hierarchical planners, and world models to scale to compositional, long-horizon tasks with flexible sequencing.

- Formal evaluation of data collection throughput and cost-efficiency is lacking: measure end-to-end operator time per successful demo, setup/teardown overhead, equipment cost amortization, and maintenance requirements across extended deployments.

- Ethical, privacy, and safety-in-the-wild considerations are not discussed: address data privacy for egocentric recordings, bystander safety, and responsible deployment practices in non-lab environments.

Glossary

- AMASS: A large-scale human motion capture dataset used to train and evaluate motion models. "The motion data source includes AMASS~\cite{mahmood2019amass}, OMOMO~\cite{li2023omomo}, and our in-house MoCap data."

- Anthropomorphic: Describing robot designs or behaviors that mimic human form and movement. "the anthropomorphic nature of humanoids makes whole-body control the most natural and effective teleoperation approach"

- Convolutional history encoder: A neural network module that compresses temporal sequences with convolutional layers to capture motion history. "the convolutional history encoder and the MLP backbone."

- Degrees of Freedom (DoF): Independent parameters that define a robot’s possible motions (e.g., joint angles). "We use Unitree G1 with 29 DoF (3 DoF waist + two 6 DoF legs + two 7 DoF arms)"

- Diffusion Policy: A generative control policy that models action sequences via diffusion processes for robust planning. "The second component is a Diffusion Policy that directly predicts whole-body joint positions based on visual observations"

- Egocentric teleoperation: Controlling a robot from the operator’s own viewpoint, aligning the robot’s camera with the user’s perspective. "We build a 2-DoF Neck (Humanoid-DC Neck) to enable egocentric teleoperation"

- Egocentric vision: Visual sensing from the robot’s own head/eyes to perceive the environment from its perspective. "egocentric vision is crucial for human-like task execution"

- GMR: A real-time motion retargeting method that maps human pose to robot joint configurations. "We adapt GMR~\cite{ze2025twist,joao2025gmr}, a real-time motion retargeting method, to the PICO human motion format"

- GStreamer: A multimedia framework for building streaming pipelines (e.g., video encoding/transport). "The stereo images are streamed from ZED Mini to PICO via GStreamer in the h265 format"

- IMU: Inertial Measurement Unit that provides orientation and angular velocity for state estimation. "root orientation and angular velocity from IMU readings"

- Interpupillary distance: The distance between the centers of the eyes in VR displays, adjusted for proper stereoscopic depth. "shader implemented in ~\cite{zhao2025xrobotoolkit} that adjusts the interpupillary distance and sets the focal point"

- Legged manipulation: Using a robot’s legs, not just arms, to interact with and manipulate objects. "long-horizon, dexterous, mobile whole-body manipulation and legged manipulation."

- Loco-manipulation: Tasks requiring coordinated locomotion and manipulation simultaneously. "long-horizon whole-body loco-manipulation and legged manipulation tasks---capabilities not demonstrated in previous works."

- MLP backbone: A multilayer perceptron serving as the core network for processing encoded features. "the convolutional history encoder and the MLP backbone."

- MoCap: Motion capture systems that track human movement using external sensors/cameras. "enjoyable user experience compared to MoCap solutions such as TWIST~\cite{ze2025twist}"

- MuJoCo: A physics engine for detailed robotic simulation and model-based optimization. "we build MuJoCo XML files for our TWIST2 neck."

- ONNX: An open format for representing machine learning models for cross-framework deployment. "For efficient real-time execution, the trained Diffusion Policy is converted to ONNX format"

- Parallel-jaw gripper: A simple end-effector with two parallel fingers for grasping objects. "the functionality of the Dex31 hand is much closer to a parallel-jaw gripper than to a dexterous multi-fingered hand."

- Pelvis-centric frame: A coordinate system centered on the pelvis to reduce sensitivity to global pose errors. "we measure all human positions in a pelvis-centric frame."

- PICO 4U: A VR device used to stream whole-body human motion for teleoperation. "we utilize PICO 4U~\cite{pico4ultra_2023} combined with two PICO Motion Trackers"

- PD controller: Proportional-Derivative controller that tracks target positions by computing torques from errors and velocities. "which are then tracked by a PD controller to generate the final torque"

- PPO: Proximal Policy Optimization, a reinforcement learning algorithm for training control policies. "The actor $\pi_{\text{low}}$ is trained via PPO"

- Proprioception: Internal sensing of the robot’s own state (e.g., joint angles, velocities) used for control. "robot proprioception, including root orientation and angular velocity from IMU readings"

- Redis: An in-memory data store used here as a low-latency communication channel between modules. "and then send $\mathbf{p}_{\text{cmd}}$ to $\pi_{\text{low}}$ (Section~\ref{sec:training controller}) through Redis~\cite{redis_redis_2025}."

- R3M: A representation learning model that provides robust visual features for robotics tasks. "we use a ResNet-18 backbone pre-trained with R3M~\cite{nair2022r3m}"

- ResNet-18: A convolutional neural network architecture used for visual feature extraction. "We use a ResNet-18 backbone pre-trained with R3M"

- Reward supervision: Using reward signals derived from motion data to train reinforcement learning controllers. "We then generate reward supervision from the motion datasets."

- Root velocity: Commands specifying the base (root) translational/angular motion of the robot body. "moving beyond simplified commands such as root velocity."

- Sim-to-real: Transferring policies trained in simulation to real-world robots while handling domain gaps. "typically employing task-specific sim-to-real reinforcement learning (RL) approaches."

- SO(3): The mathematical group of 3D rotations representing orientation in space. "Let $R_{\text{head}}, R_{\text{spine}} \in SO(3)$ be the global rotations of the human head and spine in the world frame, respectively."

- Stereoscopic vision: Binocular rendering that provides depth perception for teleoperators. "our teleoperation system is equipped with stereoscopic vision via the custom"

- Teleoperation: Remote human control of a robot, mapping human motions to robot actions. "Teleoperation is crucial for enabling humanoid robots to interact with complex real-world environments and perform sophisticated loco-manipulation tasks."

- Visuomotor policy: A control policy that maps visual inputs (and proprioception) to motor commands. "a hierarchical visuomotor policy learning framework consisting of two components."

- XRoboToolkit: A toolkit used to access and stream motion/vision data from XR devices like PICO. "We use XRoboToolkit~\cite{zhao2025xrobotoolkit} for access to motion streaming from PICO"

- ZED Mini: A stereo camera providing depth-aware visual input mounted on the robot’s neck. "We use Zed Mini as our stereo camera attached to the neck"

- ZMQ: ZeroMQ, a messaging library used for sending image streams during data collection. "and to the data collection process via ZMQ in the JPEG format."
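
The SO(3) entry above quotes the paper's definition of the global head and spine rotations used for neck control. One plausible way to turn them into commands for a 2-DoF (yaw, pitch) neck is to take the head rotation relative to the spine and read off two Euler angles. The sketch below assumes a z-up frame and a yaw-pitch-roll (Z-Y-X) decomposition; the function name `neck_yaw_pitch` and the convention are illustrative assumptions, not the paper's stated method.

```python
import math


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(a):
    """Transpose a 3x3 matrix (the inverse, for a rotation matrix)."""
    return [[a[j][i] for j in range(3)] for i in range(3)]


def rot_z(angle):
    """Rotation about the z axis (yaw)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rot_y(angle):
    """Rotation about the y axis (pitch)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def neck_yaw_pitch(r_head, r_spine):
    """Yaw and pitch of the head relative to the spine (hypothetical helper).

    Assumes R_rel = Rz(yaw) @ Ry(pitch) @ Rx(roll); roll is discarded
    because the neck has no roll joint.
    """
    r_rel = matmul(transpose(r_spine), r_head)
    yaw = math.atan2(r_rel[1][0], r_rel[0][0])
    pitch = math.atan2(-r_rel[2][0], math.hypot(r_rel[0][0], r_rel[1][0]))
    return yaw, pitch


# Example: the spine is turned 0.5 rad, and the head is turned a further
# 0.3 rad in yaw and 0.2 rad in pitch relative to the spine.
r_spine = rot_z(0.5)
r_head = matmul(r_spine, matmul(rot_z(0.3), rot_y(0.2)))
yaw, pitch = neck_yaw_pitch(r_head, r_spine)
print(f"yaw={yaw:.3f} rad, pitch={pitch:.3f} rad")
```

Working with the relative rotation means that turning the whole body does not move the neck; only head motion with respect to the torso does.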

Practical Applications

Immediate Applications

Below are actionable uses that can be deployed now, leveraging the paper’s open-sourced system, dataset, and hardware add-ons.

- Industry (Robotics R&D, Warehousing, Manufacturing): Rapid “humanoid data ops” for skill bootstrapping

- Use the Humanoid-DC pipeline to collect high-quality whole-body imitation data (≈100 demos in ~15–20 minutes, ~100% success) for tasks such as bimanual pick-and-place, door traversal, basket transport, towel folding, and legged manipulation.

- Integrate the hierarchical control stack (low-level motion tracker + high-level Diffusion Policy) to prototype autonomous variants of these tasks in pilot environments, with human-in-the-loop teleoperation fallbacks.

- Potential tools/products/workflows: Humanoid-DC Kit (VR teleop + 2-DoF neck + stereo camera + software stack), DataOps GUI for trajectory segmentation/filtering, ONNX deployment of policies, retargeting SDK for PICO.

- Assumptions/Dependencies: Unitree G1 (29-DoF) + Dex31 hands; PICO 4U headset + two motion trackers; ZED Mini stereo camera; Redis/ZMQ/GStreamer; trained PPO motion-tracking controller; trained operators; safe test spaces; reliable 50–100 Hz streaming; adequate compute (GPU for training/inference).

- Academia (Robotics, Embodied AI, HCI): Mocap-free whole-body teleoperation and reproducible datasets

- Replace expensive optical MoCap with PICO VR + ankle trackers to collect full-body humanoid demonstrations at scale; use open MuJoCo XML models and GMR-based retargeting.

- Train and evaluate whole-body visuomotor policies (Diffusion Policy with R3M features) on egocentric stereo data; benchmark long-horizon loco-manipulation and legged manipulation.

- Potential tools/products/workflows: Open dataset (https://twist-data.github.io), GMR retargeting adaptation for PICO, PPO motion-tracking controller code, curriculum-lab packages (teleop labs, policy learning assignments).

- Assumptions/Dependencies: Access to Unitree G1 or compatible humanoids; adherence to safety protocols; basic VR setup skills; GPU resources; reproducibility via open-source repos.

- Software and Tooling (SDKs, Platforms): Portable teleop and data collection stack

- Package retargeting, streaming, and policy-learning components into a developer-facing SDK for integration with existing robot stacks; provide templates for stereo egocentric rendering and single-operator controls on PICO controllers.

- Potential tools/products/workflows: “Humanoid Teleop SDK” (Redis/ZMQ/GStreamer integration), “Egocentric VR Teleop App,” “Whole-body Policy Starter” (Diffusion Policy + ONNX).

- Assumptions/Dependencies: Cross-platform support (Linux), driver availability for cameras/motors, stable APIs for Unitree devices, operator training.

- Education and Training (Universities, Maker Spaces): Hands-on whole-body humanoid control without MoCap

- Use the low-cost neck module ($250) + VR teleop to run safe in-lab demos and projects; train students on single-operator workflows and egocentric perception fundamentals.

- Potential tools/products/workflows: 3D-print files for the TWIST2 neck, assembly guides, course modules on retargeting and hierarchical control.

- Assumptions/Dependencies: Basic fabrication capability; Dynamixel motors (XC330-T288) + U2D2; institutional safety procedures; stereo camera procurement (~$400).

- Policy and Standards (Research Governance, Consortiums): Best practices for safe single-operator teleop

- Adopt the paper’s safety workflow (smooth motion interpolation, pause/resume on controller, limited latency <0.1 s) as a template for lab and pilot deployments; promote standardization on humanoid platforms (e.g., Unitree G1 + egocentric camera module) to enable data sharing and reproducibility.

- Potential tools/products/workflows: Safety SOPs, operator training checklists, recommended hardware profiles.

- Assumptions/Dependencies: Institutional oversight; risk assessments; compliance with local robotics regulations; privacy-safe handling of egocentric video data.

- Daily Life / Maker Communities: Demonstrations and telepresence experiences

- Run controlled telepresence demos (e.g., simple pick-and-place, folding) in maker spaces or exhibitions using egocentric VR; foster community contributions to open datasets.

- Assumptions/Dependencies: Strict safety guarding; insurance/liability considerations; supervised environments; operator fatigue management.

Long-Term Applications

These use cases require further research, scaling, robustness improvements, safety certification, or standardization before broad deployment.

- Healthcare and Assistive Robotics: In-home support and eldercare

- Teleoperated initially, transitioning to autonomous whole-body loco-manipulation for household tasks (tidying, fetching objects, light chores), trained from large egocentric datasets.

- Potential tools/products/workflows: Care-certified Humanoid-DC variant; bedside teleop workstation; fleet learning from home deployments.

- Assumptions/Dependencies: Reliability and safety certification; improved dexterous grasping; robust perception under diverse home conditions; data privacy; caregiver training.

- Logistics, Retail, and Facilities: Autonomous shelf stocking, door interaction, and item transport

- Deploy hierarchical visuomotor policies for frequent manipulation tasks; maintain teleop fallback for edge cases; aggregate demonstration data across sites for continuous improvement.

- Potential tools/products/workflows: “Humanoid DataOps Platform” for multi-site collection, fleet-level policy rollout pipelines, task libraries (door opening, bin transfer).

- Assumptions/Dependencies: Hardware standardization across fleets; low-latency, secure networking; robust controllers for dynamic motions; OSHA-like compliance; ROI vs. manual labor.

- Disaster Response and Public Safety: Remote operation in hazardous environments

- Use egocentric VR teleop and single-operator control to perform assessment, object relocation, or barrier manipulation; progressively enhance autonomous capabilities.

- Potential tools/products/workflows: Ruggedized Humanoid-DC kits; satellite/mesh networking; mission control dashboards; operator training programs.

- Assumptions/Dependencies: Reliable comms in degraded environments; improved dynamic motion tracking (sprinting, rapid balance recovery); specialized hardware ruggedization; regulatory clearances.

- Workforce and Operations: Teleoperation call centers for humanoid fleets

- Centralize skilled operators who supervise multiple robots, intervening when policies struggle; scale “robot operations” as a new occupation category.

- Potential tools/products/workflows: Multi-robot control UI; task routing; operator performance analytics; standardized training certifications.

- Assumptions/Dependencies: Human factors research (fatigue, cognitive load); fair labor practices; secure and resilient infrastructure; clear SLAs.

- AI and Software Platforms: General VLA (Vision-Language-Action) for whole-body control

- Train large VLA models using scaled Humanoid-DC datasets to enable instruction-following and generalization across tasks and environments.

- Potential tools/products/workflows: Multimodal pretraining pipelines; cross-embodiment retargeting layers; open benchmarks for whole-body autonomy.

- Assumptions/Dependencies: Massive, diverse datasets; compute budgets; standardized embodiment representations; evaluation protocols; alignment and safety safeguards.

- Robotics Standardization and Ecosystem: Interoperable modules and benchmarks

- Establish common neck/camera modules, streaming interfaces, and retargeting APIs across humanoid platforms to maximize dataset reuse and policy transferability.

- Potential tools/products/workflows: Interoperability standards, certification suites, open-source reference designs, consortium-led testbeds.

- Assumptions/Dependencies: Vendor buy-in; community governance; IP/licensing harmonization; shared evaluation criteria.

- Public Policy and Regulation: Frameworks for teleoperated/autonomous humanoids in public spaces

- Develop regulations for egocentric video capture (privacy), liability for teleop/autonomy handoff, safety envelopes for interaction with people.

- Potential tools/products/workflows: Compliance checklists, auditing tools for data handling, incident reporting protocols.

- Assumptions/Dependencies: Multi-stakeholder engagement (industry, academia, regulators); evidence from pilots; coherent standards across jurisdictions.

- Consumer and Home Robotics: General-purpose household robots

- Transition from teleop-assisted to fully autonomous long-horizon tasks (laundry folding, tidying, simple cooking prep) using scaled egocentric datasets and improved whole-body controllers.

- Potential tools/products/workflows: Home-grade Humanoid-DC derivatives; app ecosystems; user training modules; maintenance and support networks.

- Assumptions/Dependencies: Cost reduction; reliability over months/years; robust perception in clutter; safety around children/pets; user acceptance.

- Emerging Products and Services: End-to-end commercialization of Humanoid-DC elements

- Humanoid-DC Kit (hardware + software), Egocentric Stereo Teleop App, Whole-Body Diffusion Policy Service, DataOps and Annotation Platform, Teleop Operator Training/Credentialing.

- Assumptions/Dependencies: Market demand; service and support scalability; cybersecurity; vendor partnerships; sustainable business models.
