OmniX: From Unified Panoramic Generation and Perception to Graphics-Ready 3D Scenes (2510.26800v1)

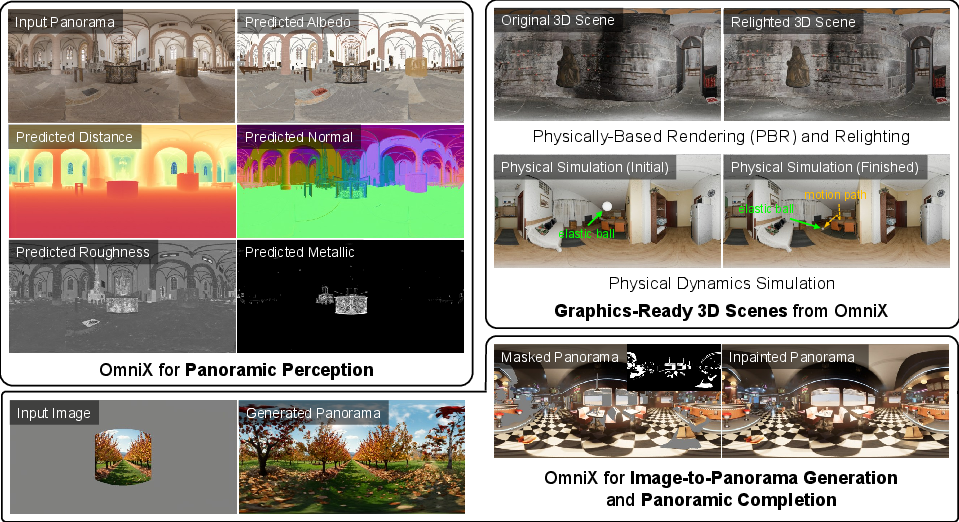

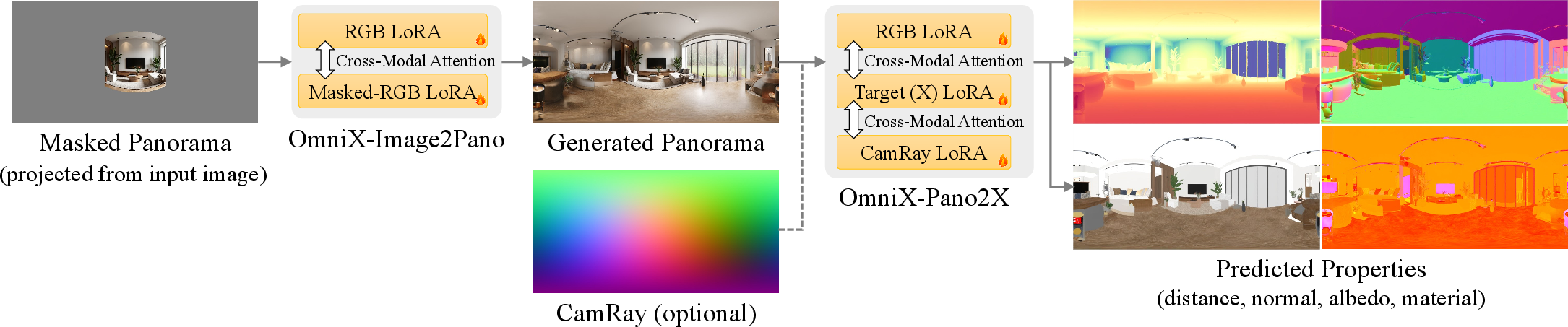

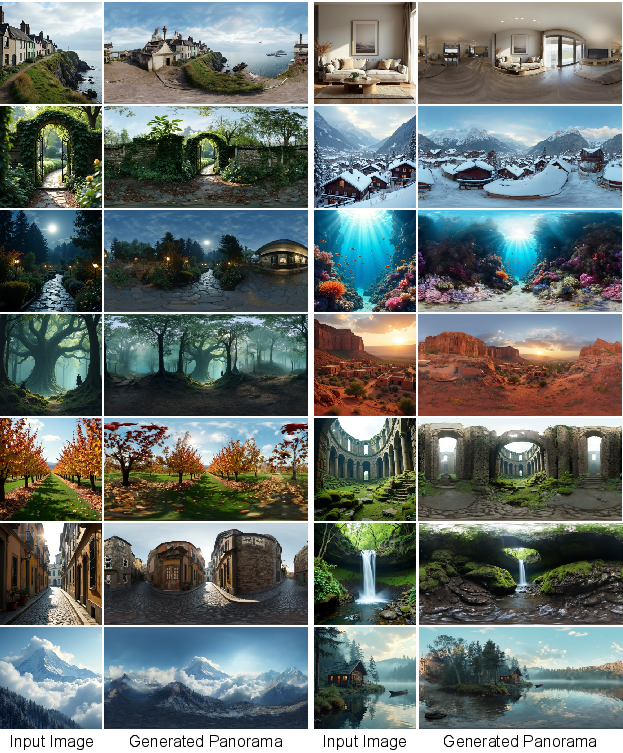

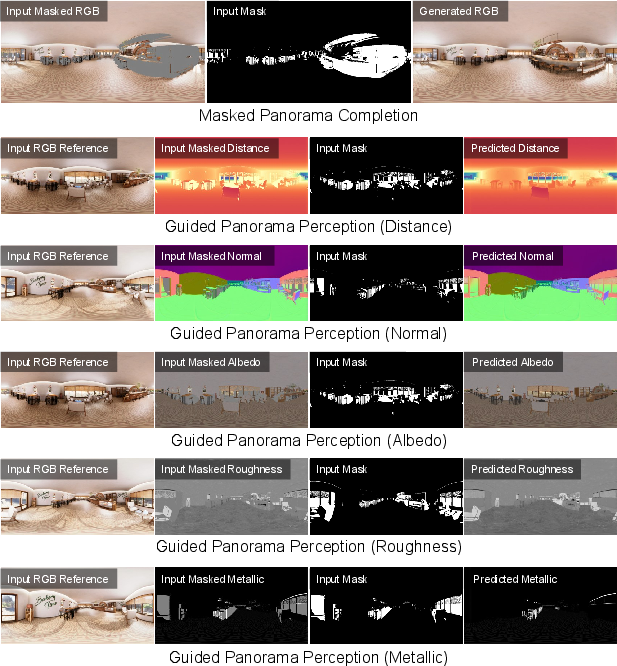

Abstract: There are two prevalent ways of constructing 3D scenes: procedural generation and 2D lifting. Among them, panorama-based 2D lifting has emerged as a promising technique, leveraging powerful 2D generative priors to produce immersive, realistic, and diverse 3D environments. In this work, we advance this technique to generate graphics-ready 3D scenes suitable for physically based rendering (PBR), relighting, and simulation. Our key insight is to repurpose 2D generative models for panoramic perception of geometry, textures, and PBR materials. Unlike existing 2D lifting approaches that emphasize appearance generation and ignore the perception of intrinsic properties, we present OmniX, a versatile and unified framework. Based on a lightweight and efficient cross-modal adapter structure, OmniX reuses 2D generative priors for a broad range of panoramic vision tasks, including panoramic perception, generation, and completion. Furthermore, we construct a large-scale synthetic panorama dataset containing high-quality multimodal panoramas from diverse indoor and outdoor scenes. Extensive experiments demonstrate the effectiveness of our model in panoramic visual perception and graphics-ready 3D scene generation, opening new possibilities for immersive and physically realistic virtual world generation.

Explain it Like I'm 14

What is this paper about?

This paper introduces OmniX, a clever system that can turn regular 2D image models into tools that understand and create 360° panoramas and build full 3D scenes from them. The big goal is to make 3D worlds that don’t just look real, but also work in modern graphics software—so you can relight them, simulate physics, and explore them, just like in video games or movies.

What questions did the researchers ask?

- Can we reuse powerful 2D image models to handle 360° panoramas without starting from scratch?

- Can a single framework both generate panoramas and “see” what’s inside them (like distance and material details)?

- Can we build 3D scenes from these panoramas that are ready for real lighting and physics in standard tools?

- Is there a good dataset of panoramas with all the material and geometry information needed to learn these skills?

How did they do it?

Turning 2D models into panorama experts

Most AI art models are trained on flat images. OmniX takes a strong 2D model (called a “flow matching” model) and adds smart attachments so it can:

- Generate panoramas from a single photo

- Understand panoramas by predicting things like distance and surface properties

- Fill in missing parts of panoramas

Think of “flow matching” like giving the model a GPS route from a blurry start to a clear finished image—the model learns how to move from point A to point B.
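To make the GPS analogy concrete, here is a minimal NumPy sketch of a rectified-flow style training loss. It is an illustration only, not OmniX's training code: the function name `flow_matching_loss`, the `predict_velocity` callable, and the time convention (t = 0 is pure noise, t = 1 is the finished image) are assumptions made for this example, and real models work on latent image tensors rather than small arrays.

```python
import numpy as np

def flow_matching_loss(predict_velocity, x_data, rng):
    """Mean squared error between predicted and target velocity.

    Assumed convention for this sketch: t = 0 is noise, t = 1 is data,
    and the path between them is a straight line.
    """
    noise = rng.standard_normal(x_data.shape)
    # One random time per sample, shaped so it broadcasts over the other axes.
    t = rng.uniform(size=(x_data.shape[0],) + (1,) * (x_data.ndim - 1))
    # Point on the straight route from noise (A) to data (B).
    x_t = (1.0 - t) * noise + t * x_data
    # Along a straight line the velocity is constant: B minus A.
    target = x_data - noise
    pred = predict_velocity(x_t, t)
    return float(np.mean((pred - target) ** 2))

# Toy usage: a "model" that always predicts zero velocity.
rng = np.random.default_rng(0)
batch = np.ones((4, 8))
print(flow_matching_loss(lambda x_t, t: np.zeros_like(x_t), batch, rng))
```

Training lowers this loss so that, at generation time, following the predicted velocity step by step carries a noisy start toward a clean image.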

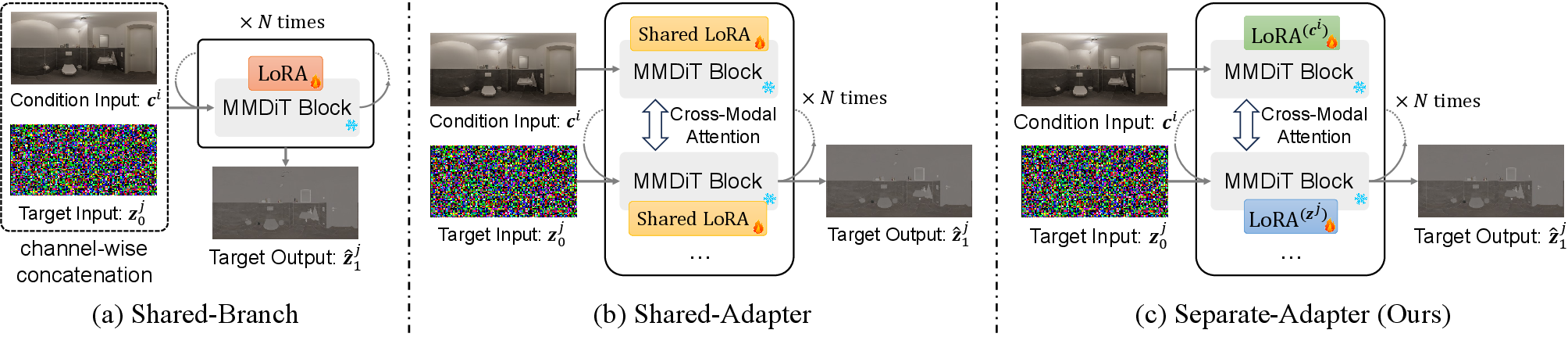

Adapters: like plug-ins for different tasks

OmniX uses small plug-ins called “adapters” (kept lightweight with a technique called LoRA) to teach the model new skills without changing its core too much. The best design they found is the “Separate-Adapter”: one plug-in per input type (for example, one for RGB images, one for masks), so the model stays stable and flexible.
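The plug-in idea can be sketched in a few lines of NumPy. This is a generic LoRA layer under stated assumptions, not OmniX's implementation: the class `LoRALinear`, the helper `build_separate_adapters`, and the rank and scaling values are hypothetical, and how the adapters are wired into the actual model is not covered here.

```python
import numpy as np

class LoRALinear:
    """A frozen linear layer plus a small trainable low-rank update."""

    def __init__(self, weight, rank, alpha, rng):
        self.weight = weight                      # frozen, shape (out, in)
        out_dim, in_dim = weight.shape
        # Only A and B would be trained; B starts at zero so the layer
        # initially behaves exactly like the frozen base layer.
        self.A = rng.standard_normal((rank, in_dim)) * 0.01
        self.B = np.zeros((out_dim, rank))
        self.scale = alpha / rank

    def __call__(self, x):
        base = x @ self.weight.T
        update = (x @ self.A.T) @ self.B.T
        return base + self.scale * update

def build_separate_adapters(weight, modalities, rank, alpha, rng):
    """One adapter per input type, all sharing the same frozen weight."""
    return {name: LoRALinear(weight, rank, alpha, rng) for name in modalities}

# Toy usage: RGB tokens and mask tokens each go through their own plug-in.
rng = np.random.default_rng(0)
W = rng.standard_normal((16, 16))
adapters = build_separate_adapters(W, ["rgb", "mask"], rank=4, alpha=8.0, rng=rng)
rgb_out = adapters["rgb"](rng.standard_normal((10, 16)))
mask_out = adapters["mask"](rng.standard_normal((10, 16)))
```

Because the base weight is shared and never modified, each new input type costs only the two small matrices `A` and `B`.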

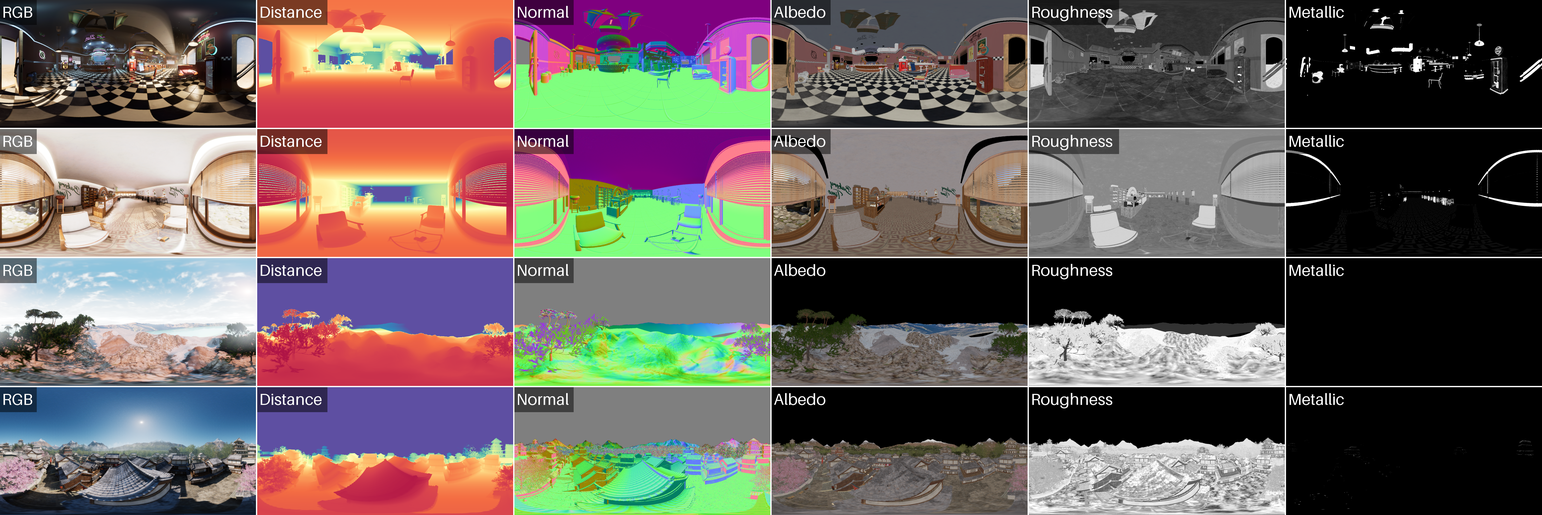

Building the PanoX dataset

To train and test OmniX properly, the team created PanoX—a large synthetic dataset of indoor and outdoor 360° panoramas. Each panorama has aligned “layers” that tell you:

- Distance (how far things are)

- Surface normal (which way surfaces face—helps with fine details and lighting)

- Albedo (base color without lighting)

- Roughness (how shiny or matte a surface is)

- Metallic (how metallic a surface is)

They rendered these in Unreal Engine 5 and included text descriptions. This gives the model rich, consistent training data.

Making 3D scenes you can use

OmniX follows a three-step pipeline:

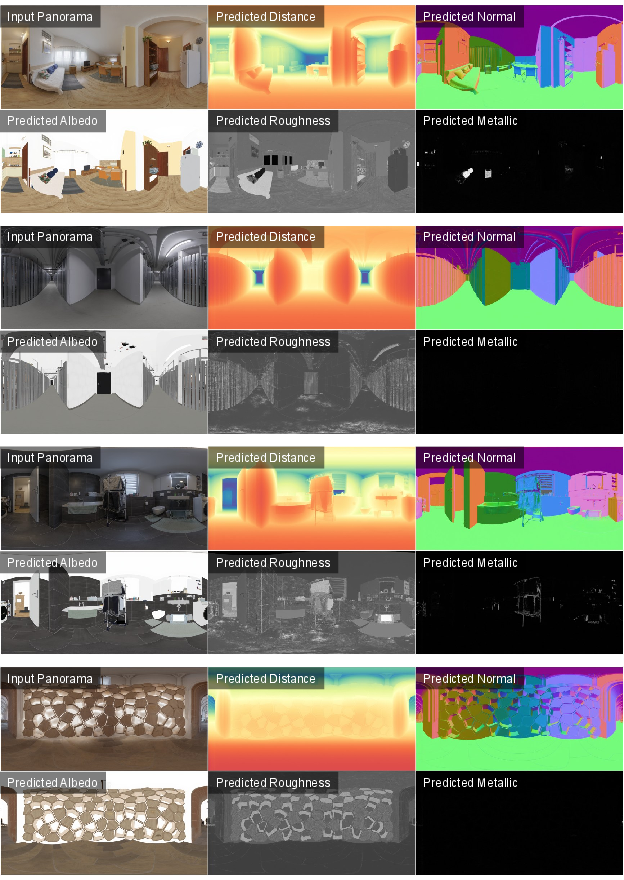

- From a single image, generate a full panorama and then predict its distance and material maps.

- Turn the panorama into a 3D mesh by projecting pixels into 3D using the distance map.

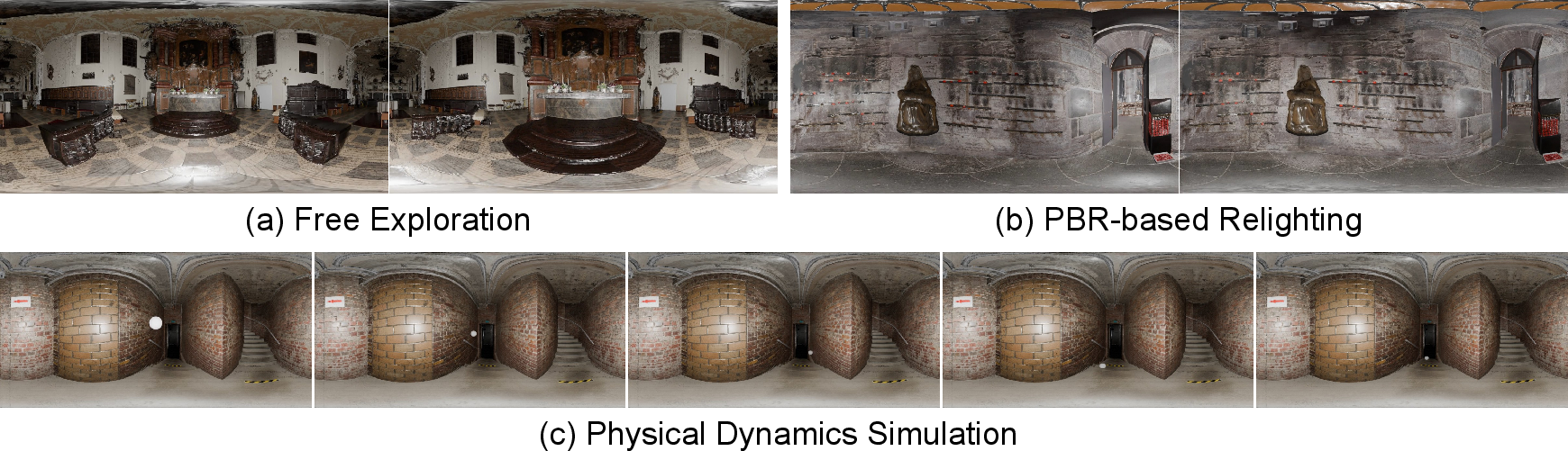

- Wrap the material maps onto the mesh, making it “PBR-ready” (Physically Based Rendering)—so light behaves correctly.

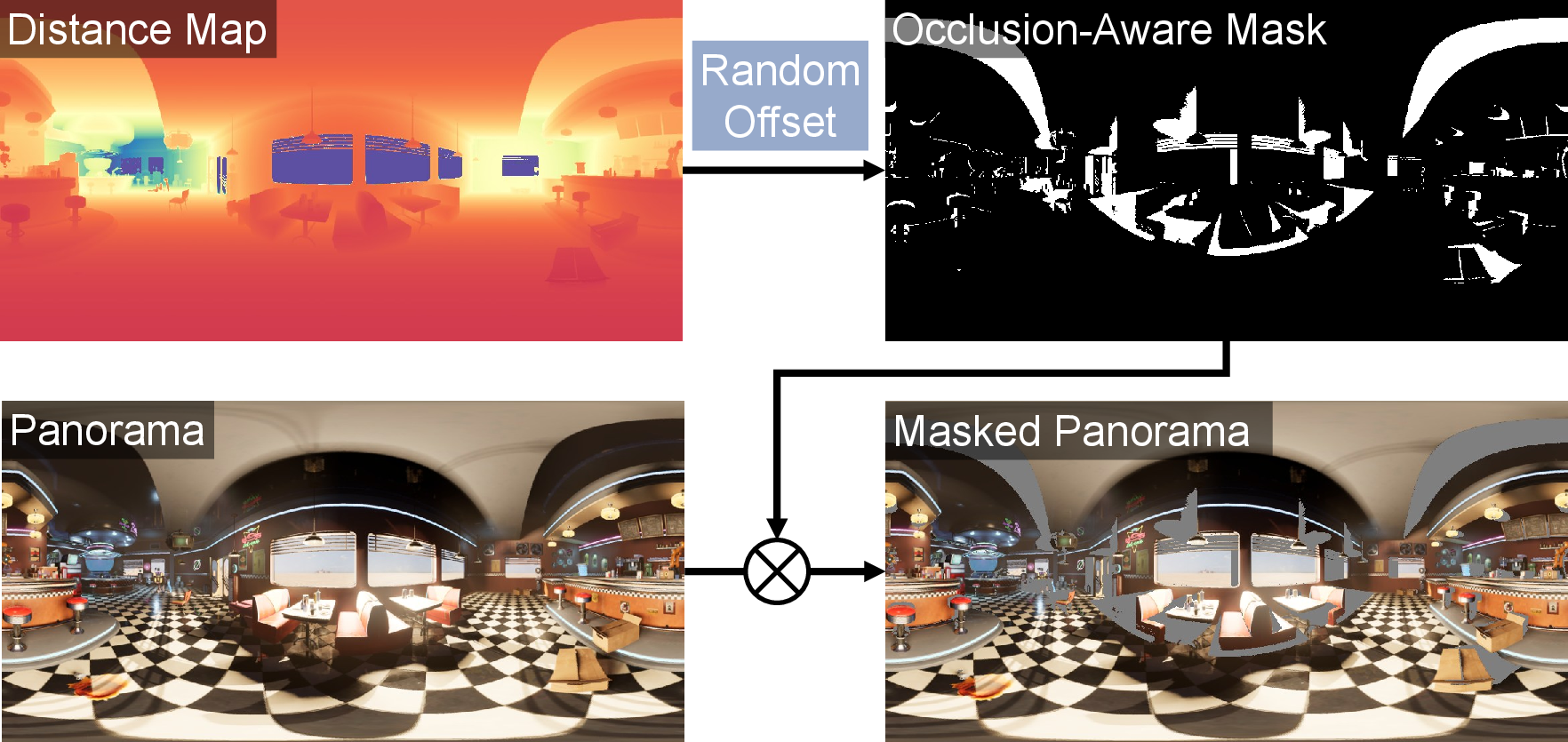
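The mesh and texturing steps above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names, the axis convention (y up, longitude zero along +z), and the simple two-triangles-per-pixel connectivity are assumptions for this example.

```python
import numpy as np

def erp_rays(height, width):
    """Unit ray direction for each pixel of an equirectangular panorama."""
    cols = (np.arange(width) + 0.5) / width       # 0..1, left to right
    rows = (np.arange(height) + 0.5) / height     # 0..1, top to bottom
    lon, lat = np.meshgrid((cols - 0.5) * 2.0 * np.pi, (0.5 - rows) * np.pi)
    return np.stack([np.cos(lat) * np.sin(lon),
                     np.sin(lat),
                     np.cos(lat) * np.cos(lon)], axis=-1)   # (H, W, 3)

def panorama_to_mesh(distance):
    """Turn an (H, W) distance map into vertices, triangle faces, and UVs."""
    h, w = distance.shape
    # Push each pixel out along its ray by its predicted distance.
    vertices = (erp_rays(h, w) * distance[..., None]).reshape(-1, 3)

    # Connect each pixel to its right and lower neighbours. np.roll wraps
    # the last column back to the first, closing the panorama horizontally.
    idx = np.arange(h * w).reshape(h, w)
    right = np.roll(idx, -1, axis=1)
    tl, tr, bl, br = idx[:-1], right[:-1], idx[1:], right[1:]
    faces = np.concatenate([np.stack([tl, bl, tr], axis=-1).reshape(-1, 3),
                            np.stack([tr, bl, br], axis=-1).reshape(-1, 3)])

    # Spherical UVs: each vertex looks up its own panorama pixel, so the
    # albedo, roughness, and metallic maps can be used directly as textures.
    # Caveat: faces that cross the wrap-around seam jump from u near 1 to
    # u near 0; a production mesh would duplicate seam vertices.
    u, v = np.meshgrid((np.arange(w) + 0.5) / w, 1.0 - (np.arange(h) + 0.5) / h)
    uvs = np.stack([u, v], axis=-1).reshape(-1, 2)
    return vertices, faces, uvs

# Toy usage: a constant distance map produces a sphere of radius 2.
verts, faces, uvs = panorama_to_mesh(np.full((8, 16), 2.0))
```

A real pipeline would also need to handle depth discontinuities and sky pixels, which this sketch connects blindly.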

They also trained OmniX to “fill in” missing or occluded parts of scenes using smart masks, so you can progressively complete a world and explore further.

What did they find?

OmniX works well across many tasks and often beats other methods:

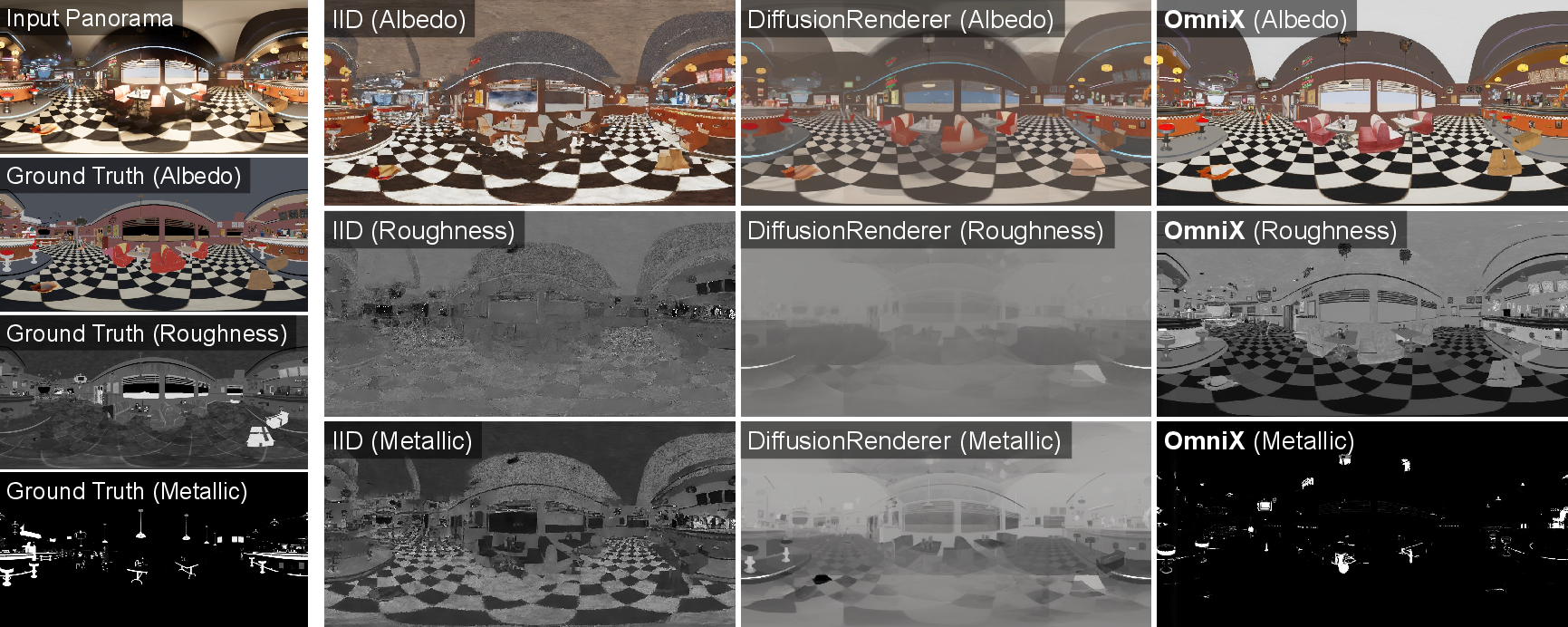

- It predicts material maps (albedo, roughness, metallic) more accurately than competing approaches.

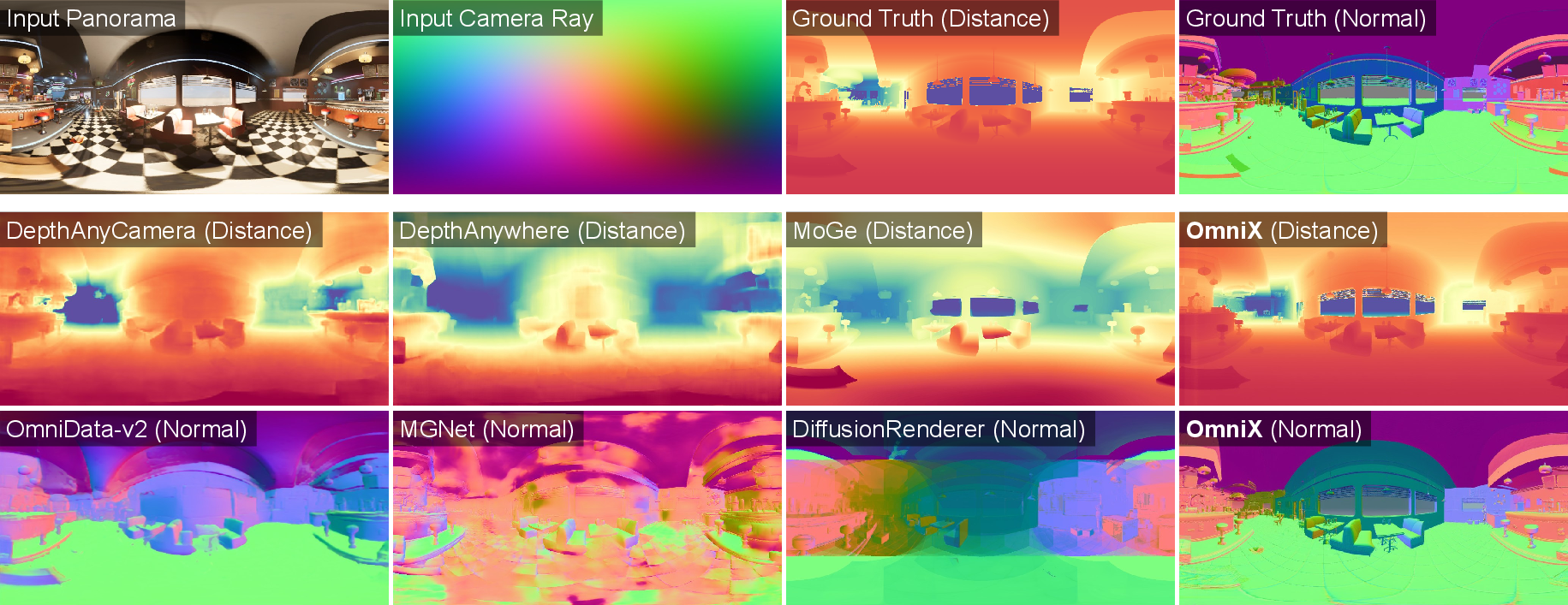

- It estimates geometry reliably, with especially strong surface normal predictions and competitive depth results.

- The Separate-Adapter design consistently performs best.

- Adding extra camera ray information helps a little for normals but doesn’t change most tasks much.

- The generated 3D scenes import smoothly into Blender and support relighting and physics, proving they’re “graphics-ready.”

In short, OmniX can turn a single photo into an explorable, realistic 3D scene with materials that behave properly under light.

Why does this matter?

- Faster world-building: Artists, game designers, and researchers can create large, realistic 3D environments with less manual work.

- Better training grounds: Robots and AI agents get rich, low-cost simulation spaces to learn in.

- Realistic visuals: Because scenes are PBR-ready, lighting looks natural and surfaces feel believable.

- Unified toolset: One framework handles generation, understanding, and completion—reducing complexity and making workflows smoother.

Overall, OmniX helps bridge the gap between 2D image AI and fully usable 3D worlds, making virtual environments more immersive, editable, and ready for real production use.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

The following list captures what remains missing, uncertain, or unexplored in the paper, phrased to be actionable for future research:

- Real-world generalization: PanoX is synthetic (8 UE5 scenes; 10k instances), and most training/evaluation for perception uses synthetic sources. How well do OmniX’s material and geometry estimates hold on diverse, real HDR/LDR panoramas with complex, noisy capture conditions (motion blur, exposure variation, stitching artifacts), and how can domain adaptation or semi-supervised approaches bridge the synthetic–real gap?

- Material model completeness: The framework predicts albedo, roughness, metallic, normals, and depth, but omits critical PBR attributes (specular tint/F0 for dielectrics, anisotropy, clearcoat, transmission/IOR, subsurface scattering, ambient occlusion, height/displacement). What architectures and supervision are needed to recover full SVBRDFs and volumetric/participating media for physically faithful relighting?

- Tangent-space consistency: Predicted normals are world-space; when applied as textures via spherical UVs, tangent-space alignment is unclear. How to derive or learn consistent tangent-space normal/height maps that match mesh UV parameterizations and avoid shading artifacts?

- Illumination estimation: The pipeline does not jointly estimate scene illumination (HDR environment maps, localized emitters). Can OmniX be extended to disentangle and recover light sources and environment lighting to enable physically correct relighting and inverse rendering on real captures?

- Absolute scale and metric depth: Monocular Euclidean distances in synthetic data have known units, but real scenes often lack metric scale. How to enforce or recover absolute scale (e.g., via priors, multi-sensor cues, or learned scale calibration) and quantify scale uncertainty across in-the-wild panoramas?

- Mesh quality and topology: Reconstruction via per-pixel projection and neighbor connectivity can yield non-manifold, non-watertight meshes with holes at discontinuities/sky. What meshing strategies (e.g., spherical Delaunay, Poisson/screened reconstruction, topology-aware regularization) and quantitative mesh metrics (watertightness, self-intersections, curvature statistics) can improve and evaluate geometric fidelity?

- ERP seam handling and spherical encoding: The paper notes DiT positional encoding limitations on ERP seams and uses horizontal blending, but lacks evaluation of spherical/cubemap/icosahedral representations or specialized spherical positional encodings. Which panoramic representation and encoding yield the best seam continuity and cross-view consistency?

- Multi-step interactive completion stability: Progressive OmniX-Fill completion may accumulate drift and inconsistencies. How to maintain global geometric/material coherence over long exploration sequences (e.g., via global optimization, scene graphs, or memory modules) and quantify degradation across iterative fills?

- Occlusion-aware mask sampling realism: The training masks are generated via random 3D displacements and ray intersections; it is unclear how well this approximates occlusions encountered during real exploration. Can mask distributions be calibrated to actual movement patterns and occlusion statistics, and how does this affect completion quality?

- Outdoor material diversity: Only 3 outdoor scenes in PanoX; materials like vegetation, water, sky, glass, and highly glossy/mirror surfaces are underrepresented. How does OmniX perform on these challenging materials, and what data/priors are required to handle complex BRDFs and view-dependent effects outdoors?

- Generation quality evaluation: Image-to-panorama generation and masked completion lack rigorous metrics (e.g., FID/KID adapted to panoramas, spherical LPIPS, structural consistency scores) and comparative baselines (e.g., PanoDiffusion, CubeDiff). Establish benchmarking protocols for panoramic generation and outpainting quality and cross-view consistency.

- Relighting validity: Blender demos show qualitative relighting, but no quantitative or human studies assess material realism under novel lighting (e.g., renderings compared to ground-truth PBR scenes via ΔE/SSIM, perceptual studies). How physically accurate are the predicted materials under varying illumination?

- Physics plausibility: The physics demo uses an elastic ball but does not validate collision accuracy or physical parameters derived from predicted materials. How to map estimated materials to physics properties (friction, restitution, stiffness) and evaluate simulation fidelity against ground-truth scenes?

- HDR/LDR handling: Training uses LDR panoramas (HDR360-UHD converted to LDR). How does loss of dynamic range impact material decomposition and relighting? Can HDR-aware training (tone-mapping invertibility, HDR supervision) improve physical correctness?

- Unified multi-task model vs. many adapters: Although a MIMO loss is formulated, 12 task-specific adapters are trained separately. Can a single unified model jointly handle generation, perception, and completion with shared adapters, and what are the trade-offs in accuracy, interference, and memory?

- Adapter capacity and training details: LoRA ranks, parameter budgets, and training dynamics (e.g., freezing strategies) are not explored. What is the minimal adapter capacity that maintains SOTA performance, and how do rank/placement choices affect different modalities?

- Geometry-aware inputs: Camera rays slightly help normals but not other modalities. What additional geometry-aware signals (ray differentials, spherical coordinates, depth priors, surface segmentation) or architectural changes can systematically improve depth/material estimation?

- Failure modes and robustness: No systematic error analysis exists for challenging cases (thin structures, specular/mirror/glass, heavy occlusions, extreme seams). Build a taxonomy of failure modes, measure robustness (noise/exposure/blur/stitch errors), and develop uncertainty estimates or confidence maps for downstream use.

- Resolution scaling: All panoramas are 512×1024; high-end graphics require 4K–8K textures and detailed geometry. What are the memory/compute bottlenecks, and how can OmniX be scaled (tiling, latent upsamplers, multi-res training) without degrading seam continuity or material fidelity?

- Semantic integration: The pipeline omits semantics/instances. Can semantic/material-aware scene graphs improve reconstruction, completion, and physics (e.g., assigning material priors by category, enforcing structural regularities)?

- Dynamic scenes and temporal coherence: The method targets static panoramas; handling dynamic objects or videos is unexplored. How to extend to time-varying panoramas with temporally consistent geometry/materials and motion-aware completion?

- Cross-engine compatibility: PBR mappings and UV strategies may vary across renderers (Blender, Unreal, Unity). Provide standardized material translation, validate cross-engine consistency, and address UV distortions from spherical unwrapping.

- Prompt quality and controllability: Text prompts for HDR360-UHD are auto-extracted (BLIP2/Florence2), but their accuracy and effect on generation are unmeasured. How to improve prompt grounding and achieve fine-grained control over geometry and layout while preserving physical plausibility?

- Licensing and reproducibility: Dataset and model release details (licenses, asset provenance, training scripts, inference configs) are not specified. Clear release plans and reproducible pipelines would enable broader benchmarking and extension.

- Inference efficiency: There is no report of runtime, memory footprint, or solver details for rectified-flow integration, nor real-time feasibility on consumer GPUs. Profile and optimize inference (solver steps, latent resolution, adapter bottlenecks) for interactive applications.

- Quantitative 3D scene metrics: The paper does not evaluate reconstructed scenes with 3D metrics (surface/volume IoU, chamfer distance to ground-truth meshes, BRDF fit errors). Establish measurable criteria for “graphics-ready” quality beyond image-space metrics.

- ERP vs. alternative panoramas: A direct comparison between ERP, cubemap, and learned spherical parameterizations is missing for both perception and generation. Which representation best balances seam handling, distortion, and transformer compatibility?

Practical Applications

Practical applications of OmniX and PanoX

Below are actionable, real-world applications that follow from the paper’s findings, methods, and innovations (unified panoramic generation/perception/completion via cross-modal adapters; multimodal panorama dataset with dense PBR/geometry; graphics-ready scene reconstruction pipeline). Each item notes sectors, concrete use cases, potential tools/workflows, and key assumptions or dependencies.

Immediate Applications

Media, gaming, and virtual production

- Scene blocking and rapid worldbuilding from a single image

- Sector: software, media, gaming

- Use: Convert a concept image into an explorable, PBR-ready 3D scene for previsualization, grey-box level design, and look-dev.

- Tools/workflows: OmniX-Image2Pano → OmniX Pano2Depth/Normal/Albedo/Roughness/Metallic → mesh reconstruction → Blender/Unreal import; baking and relighting.

- Assumptions/dependencies: PBR estimates are approximations; absolute scale may require manual calibration; 512×1024 ERP resolution may need upscaling; license compatibility for pre-trained models/assets.

- HDRI-style lighting environments from single images

- Sector: media, VFX

- Use: Generate panorama environments for image-based lighting in path tracers without dedicated HDR captures.

- Tools/workflows: OmniX image-to-panorama, PBR maps; plug into Arnold/Cycles/V-Ray.

- Assumptions/dependencies: LDR-to-HDR fidelity is limited; brightness/white balance and dynamic range may need artist correction.

- Inpainting and set extension for 360 content

- Sector: media, immersive content

- Use: Fill occlusions or extend 360 shots while preserving geometry/material coherence.

- Tools/workflows: OmniX-Fill with occlusion-aware masks; round-trip to NLE/compositors.

- Assumptions/dependencies: Seam handling (ERP blending) is needed; edits benefit from human QA.

XR and digital experiences

- Fast prototyping of VR experiences and virtual tours

- Sector: XR, real estate, tourism

- Use: Turn a few photos into explorable, relightable VR scenes for previews, virtual open houses, or cultural heritage exhibits.

- Tools/workflows: OmniX chain to PBR mesh → VR engines (Unity/Unreal) → lightweight navigation.

- Assumptions/dependencies: Single vantage point limits full layout; interactive completion needed for full traversal; scale alignment may require user input.

Robotics and autonomy

- Synthetic training data and domain randomization

- Sector: robotics

- Use: Generate diverse indoor/outdoor 3D scenes with controllable materials and lighting for pretraining perception stacks.

- Tools/workflows: OmniX → simulator import (Isaac Sim, Gazebo) → task-specific datasets (depth, normals).

- Assumptions/dependencies: Metric scale ambiguity; physics meshes may need cleanup; sensor noise models still required.

- Simulation-for-robotics tasks (navigation, manipulation staging)

- Sector: robotics, research

- Use: Build lightweight worlds to evaluate navigation policies, lighting robustness, and sensor placement.

- Tools/workflows: OmniX PBR scenes → physics engines; scripted relighting to test edge cases.

- Assumptions/dependencies: Material-physics mismatch (friction/elasticity) unless hand-tuned; limited multi-room continuity.

Architecture, engineering, construction (AEC) and real estate

- Concept-stage interior/exterior previsualization

- Sector: AEC, real estate

- Use: From a mood/reference photo, create a relightable 3D staging for client review.

- Tools/workflows: OmniX → Blender/Unreal → light studies, camera paths for client presentations.

- Assumptions/dependencies: Not a substitute for CAD/BIM accuracy; material properties approximate; no code-compliant dimensions by default.

- Preliminary daylight/illumination “sanity checks”

- Sector: AEC, energy

- Use: Quick, qualitative daylight or artificial light positioning trials before formal simulations.

- Tools/workflows: OmniX PBR scene → path tracer preview.

- Assumptions/dependencies: Not suitable for compliance-grade analyses; needs later HDR/BRDF calibration.

E-commerce, marketing, and content creation

- Virtual product staging in generated environments

- Sector: e-commerce, advertising

- Use: Place products into photoreal, style-matched 3D rooms for catalog imagery and 360 experiences.

- Tools/workflows: OmniX → mesh import → product placement → PBR renders.

- Assumptions/dependencies: Requires collision-free placement; shadows/reflections tuned to match product materials.

Education and training

- Interactive training environments for safety and emergency response

- Sector: education, public safety

- Use: Quickly assemble lifelike training rooms (warehouses, stores, outdoor sites) for scenario-based learning.

- Tools/workflows: OmniX → physics-enabled engines → scripted events.

- Assumptions/dependencies: Physics/material realism may need manual tuning; environment validation for safety-critical instruction.

Research and open science

- Panoramic perception benchmark and method development

- Sector: academia

- Use: Use PanoX for benchmarking RGB→X perception and inverse rendering on panoramas; test cross-modal adapter designs.

- Tools/workflows: PanoX dataset; OmniX Separate-Adapter with LoRAs; MIMO flow-matching training recipes.

- Assumptions/dependencies: Synthetic-to-real gap; dataset licensing; reproduce with available compute.

Software tooling and integrations

- DCC and engine plugins

- Sector: software

- Use: Blender/Unreal/Unity add-ons for “Image→PBR Scene” and “Panorama→Mesh” with relighting presets.

- Tools/workflows: Packaged OmniX adapters; UI for scale calibration, seam blending, and mask-guided completion.

- Assumptions/dependencies: GPU/accelerator availability; model weights redistribution/licensing.

Long-Term Applications

Digital twins and urban/industrial planning

- City/neighborhood-scale generative digital twins

- Sector: urban planning, utilities, logistics

- Use: Compose multi-block panoramas into continuous, explorable twins for planning, traffic simulation, and sensor placement studies.

- Tools/workflows: Iterative OmniX-Fill for interactive completion; graph-based stitching; GIS alignment.

- Assumptions/dependencies: Requires multi-view capture or strong priors for topology continuity; georeferencing and metric scale must be enforced; compute and QA at scale.

- Brownfield/industrial site simulations

- Sector: energy, manufacturing

- Use: Create proxy twins for hazard analysis, evacuation planning, and robot deployment planning.

- Tools/workflows: PBR materials with calibrated physics; rule-based augmentation for safety equipment.

- Assumptions/dependencies: High-fidelity materials and physical properties needed; verification protocols required.

Robotics sim-to-real and embodied AI

- Closed-loop world models for long-horizon tasks

- Sector: robotics, AI

- Use: Train agents in generative worlds that co-evolve with policies (curriculum learning, rare-event synthesis).

- Tools/workflows: OmniX-driven world generation + RL frameworks; automated scene completion to expand traversable space.

- Assumptions/dependencies: Robustness to compounding errors; automatic scale and metric consistency; domain gap minimization.

- On-device panoramic perception for AR/robot mapping

- Sector: AR, robotics

- Use: Real-time RGB→depth/normals/materials on headsets/robots for mapping, relighting, and grasp planning.

- Tools/workflows: Distilled OmniX adapters; TensorRT/ONNX optimizations; panoramic camera rigs.

- Assumptions/dependencies: Latency and power constraints; safety validation; privacy-preserving on-device inference.

AEC and energy performance analysis

- Early-stage energy modeling and daylight optimization

- Sector: energy, AEC

- Use: Rapid what-if studies for glazing, shading, and reflectance to guide design before BIM maturity.

- Tools/workflows: OmniX → calibrated PBR → coupling with Radiance/EnergyPlus.

- Assumptions/dependencies: Requires HDR lighting and measured BRDF libraries; alignment to real geometry; verification against metrology.

- Automated as-built reconstruction from sparse captures

- Sector: construction tech

- Use: Fill occlusions, infer materials, and create navigable proxies from few images or partial panoramas.

- Tools/workflows: Mask-guided OmniX-Fill; constraint-based scale fitting; alignment to control points.

- Assumptions/dependencies: Accuracy thresholds for compliance; survey ground-truth needed.

Creative AI and co-design systems

- Multi-modal co-pilots for environment design

- Sector: software, creative industries

- Use: Assist designers with round-trip edits across appearance, geometry, and materials under textual or sketch constraints.

- Tools/workflows: Extended MIMO flow-matching with multi-conditional adapters; parametric controls (style, roughness, metallicity).

- Assumptions/dependencies: Fine-grained controllability and provenance tracking; safety/ethics guardrails.

Policy, governance, and privacy

- Synthetic data pipelines reducing privacy exposure

- Sector: policy, compliance

- Use: Replace real 360 captures in sensitive areas with synthetic but representative scenes for model development.

- Tools/workflows: Governance frameworks for synthetic scene generation; dataset documentation (datasheets, model cards).

- Assumptions/dependencies: Bias in source assets propagates to synthetic data; need for domain coverage guarantees.

- Standards for panoramic inverse rendering benchmarks

- Sector: standards, academia

- Use: Promote PanoX-like benchmarks for fair evaluation of RGB→X on panoramas (indoor and outdoor).

- Tools/workflows: Leaderboards; challenge tasks (intrinsics, depth, completion).

- Assumptions/dependencies: Community buy-in; curated real-world test sets to complement synthetic data.

Commerce and consumer applications

- Personal VR journaling and home redesign previews

- Sector: consumer apps, retail

- Use: Turn phone photos into relightable VR spaces; preview paint, flooring, and furniture with plausible materials.

- Tools/workflows: Mobile-friendly distilled models; retailer catalogs integration.

- Assumptions/dependencies: UX for scale setting; material catalogs must match predicted PBR for realism.

- Virtual events and pop-up experiences at scale

- Sector: marketing, entertainment

- Use: Generate themed, explorable venues with consistent art direction and physics for large audiences.

- Tools/workflows: Batch scene generation with art style constraints; moderation pipelines.

- Assumptions/dependencies: Content safety and IP compliance; server-side acceleration.

Cross-cutting assumptions and dependencies

- Fidelity: Predicted PBR materials and geometry are strong but not metrology-grade; critical simulations (safety, energy, compliance) need calibration and validation.

- Scale and metric consistency: Single-view reconstructions lack absolute scale; workflows should include scale setting and georeferencing.

- Generalization: Trained partly on synthetic panoramas; real-world diversity can require fine-tuning or domain adaptation.

- Performance: Real-time or batch deployment depends on accelerators; mobile/edge use needs distilled or quantized adapters.

- Licensing and ethics: Ensure rights to base models (e.g., FLUX.1-dev), 3D assets, and integration of commercial DCC/engine pipelines; establish content safety checks.

- Seam handling: ERP seams require blending; multi-view capture or cube-map variants may improve continuity for production.

Glossary

- 2D generative priors: Knowledge embedded in large image models that can be repurposed for other tasks like perception; example: "2D generative priors"

- AdamW: An optimization algorithm that decouples weight decay from gradient updates; example: "We adopt an AdamW optimizer with learning rate of 1e-4 for training."

- AbsRel: Absolute relative error metric commonly used for depth/distance evaluation; example: "AbsRel, δ-1.25, MAE, and RMSE"

- Albedo: The base diffuse color of a surface independent of lighting; example: "albedo, roughness, and metallic maps."

- Camera rays: Directional rays from the camera used to relate pixels to 3D space; example: "Camera rays are considered important for spatial perception and understanding."

- Channel-wise concatenation: Combining inputs by stacking channels before processing in a model; example: "Shared-Branch and Shared-Adapter are concatenations of channel-wise and token-wise approaches, respectively."

- Co-training: Joint training on multiple data sources (e.g., synthetic and real) to improve generalization; example: "photorealistic image generation through co-training on synthetic and real data."

- Delta-1.25 (δ-1.25): The percentage of predicted depths within a 1.25 multiplicative threshold of ground truth; example: "AbsRel, δ-1.25, MAE, and RMSE"

- Diffusion Transformer (DiT): A transformer architecture designed for diffusion or flow matching generative models; example: "The flexibility of Diffusion Transformer (DiT) enables multiple ways to adapt the DiT-based flow matching model"

- ERP panoramas: Equirectangular projection panoramas that map the sphere to a rectangle; example: "the DiT model has difficulty learning the seam continuity of ERP panoramas"

- Euclidean distance: Straight-line distance used as the metric for panoramic depth; example: "For Euclidean distances, we use four commonly used metrics"

- FLUX.1-dev: A pre-trained 2D flow matching model from Black Forest Labs used as the base generator; example: "FLUX.1-dev"

- Flow matching: A generative modeling approach that learns velocity fields to transform noise to data; example: "pre-trained 2D flow matching models"

- Flow matching loss: Objective that measures error between predicted and true velocity in flow matching; example: "flow matching loss"

- G-buffer: A set of per-pixel geometric and material buffers used in rendering (e.g., normals, albedo); example: "combining G-buffer estimation with photorealistic image generation through co-training on synthetic and real data."

- HDR: High Dynamic Range imaging capturing a wide luminance range; example: "HDR360-UHD is an HDR panorama dataset"

- Horizontal blending: A technique to improve seam continuity in equirectangular panoramas; example: "we follow LayerPano3D and introduce the horizontal blending technique."

- Inverse rendering: Estimating scene geometry, materials, and lighting from images; example: "Inverse rendering aims to estimate intrinsic scene properties such as geometry, materials, and lighting from images."

- Intrinsic image decomposition: Separating an image into intrinsic properties like albedo and material maps; example: "We divide panoramic perception into intrinsic image decomposition (albedo, roughness, metallic)"

- LDR: Low Dynamic Range imaging with limited luminance range; example: "We convert these HDR images into LDR images"

- LoRA: Low-Rank Adaptation, a lightweight method to fine-tune large models via rank-constrained updates; example: "multiple LoRAs are optimized"

- LPIPS: Learned Perceptual Image Patch Similarity, a perceptual metric for image similarity; example: "PSNR (Peak Signal-to-Noise Ratio) and LPIPS"

- MAE: Mean Absolute Error, a regression metric used for evaluating distance/depth predictions; example: "AbsRel, δ-1.25, MAE, and RMSE"

- Metallic: A material property indicating how much a surface behaves like a metal; example: "albedo, roughness, and metallic maps."

- MIMO: Multiple-Input Multiple-Output setting where a model handles multiple conditions and targets; example: "yielding a MIMO version of flow matching loss"

- NPU: Neural Processing Unit, specialized hardware for accelerating AI workloads; example: "evaluated on four Ascend 910B NPUs"

- Occlusion-aware mask sampling: Mask generation that accounts for occlusions by simulating blocked regions; example: "Occlusion-aware mask sampling."

- Outpainting: Extending an image beyond its original boundaries using generative models; example: "using outpainting or depth estimation"

- Panoramic distance map: A 360° map of per-pixel distances used to reconstruct 3D geometry; example: "Given a panoramic distance map, since the ray direction corresponding to each pixel is known"

- Panoramic perception: Understanding scene properties across 360° images; example: "panoramic perception"

- PBR (Physically Based Rendering): Rendering approach that models light–material interaction using physical principles; example: "physically-based rendering (PBR)"

- PBR materials: Material parameter maps (albedo, roughness, metallic, normal) for PBR workflows; example: "PBR materials"

- Positional encoding: Encoding spatial positions for transformer-based models; example: "2D positional encoding"

- PSNR: Peak Signal-to-Noise Ratio, a fidelity metric for image reconstruction quality; example: "PSNR (Peak Signal-to-Noise Ratio)"

- Ray intersection: Computing where rays intersect scene geometry, used for occlusion estimation; example: "we can estimate the occluded regions by ray intersection."

- Relighting: Re-rendering a scene under new lighting conditions; example: "relighting"

- RMSE: Root Mean Square Error, a regression metric emphasizing larger errors; example: "AbsRel, δ-1.25, MAE, and RMSE"

- Separate-Adapter: Architecture assigning distinct adapters per input modality for better performance; example: "Separate-Adapter architecture achieves best visual perception performance"

- Shared-Adapter: Architecture using a single adapter shared across modalities; example: "Shared-Adapter is equivalent to token-wise concatenation"

- Shared-Branch: Architecture concatenating inputs along channels before a shared processing branch; example: "Shared-Branch concatenates different inputs along the channel dimension"

- Spherical UV unwrapping: Mapping panoramic textures to a mesh via spherical coordinates for texturing; example: "via spherical UV unwrapping"

- SVBRDF: Spatially Varying Bidirectional Reflectance Distribution Function modeling material reflectance across a surface; example: "recovers geometry, complex SVBRDFs, and spatially-coherent illumination"

- Token-wise concatenation: Combining inputs by concatenating their token sequences in transformer models; example: "concatenations of channel-wise and token-wise approaches"

- Surface normals: Per-pixel vectors perpendicular to surfaces, used for shading and geometry; example: "surface normals"
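The evaluation metrics defined in this glossary (AbsRel, δ-1.25, MAE, RMSE, PSNR) can be written out in a few lines. The sketch below uses their standard textbook definitions; the function names are hypothetical, and whether the paper applies any scale alignment or masking before computing them is not stated here, so treat this as illustrative rather than the paper's evaluation protocol.

```python
import numpy as np

def distance_metrics(pred, gt, eps=1e-8):
    """AbsRel, delta-1.25, MAE, and RMSE over pixels with valid ground truth."""
    pred = np.asarray(pred, dtype=float).ravel()
    gt = np.asarray(gt, dtype=float).ravel()
    valid = gt > eps                      # skip pixels with no ground truth
    pred, gt = pred[valid], gt[valid]
    err = np.abs(pred - gt)
    # Ratio is >= 1 in whichever direction the prediction is off.
    ratio = np.maximum(pred / gt, gt / np.maximum(pred, eps))
    return {
        "abs_rel": float(np.mean(err / gt)),
        "delta_1.25": float(np.mean(ratio < 1.25)),
        "mae": float(np.mean(err)),
        "rmse": float(np.sqrt(np.mean(err ** 2))),
    }

def psnr(pred, gt, max_val=1.0):
    """Peak Signal-to-Noise Ratio in decibels for images in [0, max_val]."""
    mse = np.mean((np.asarray(pred, float) - np.asarray(gt, float)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(max_val ** 2 / mse))

# Toy usage on three pixels, one of which is predicted twice too far away.
print(distance_metrics([1.0, 2.0, 4.0], [1.0, 2.0, 2.0]))
```

Lower is better for AbsRel, MAE, and RMSE; higher is better for δ-1.25 and PSNR.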
