- The paper introduces an end-to-end personalized English learning tool leveraging LLMs to deliver context-aware proficiency assessments and adaptive exercises.

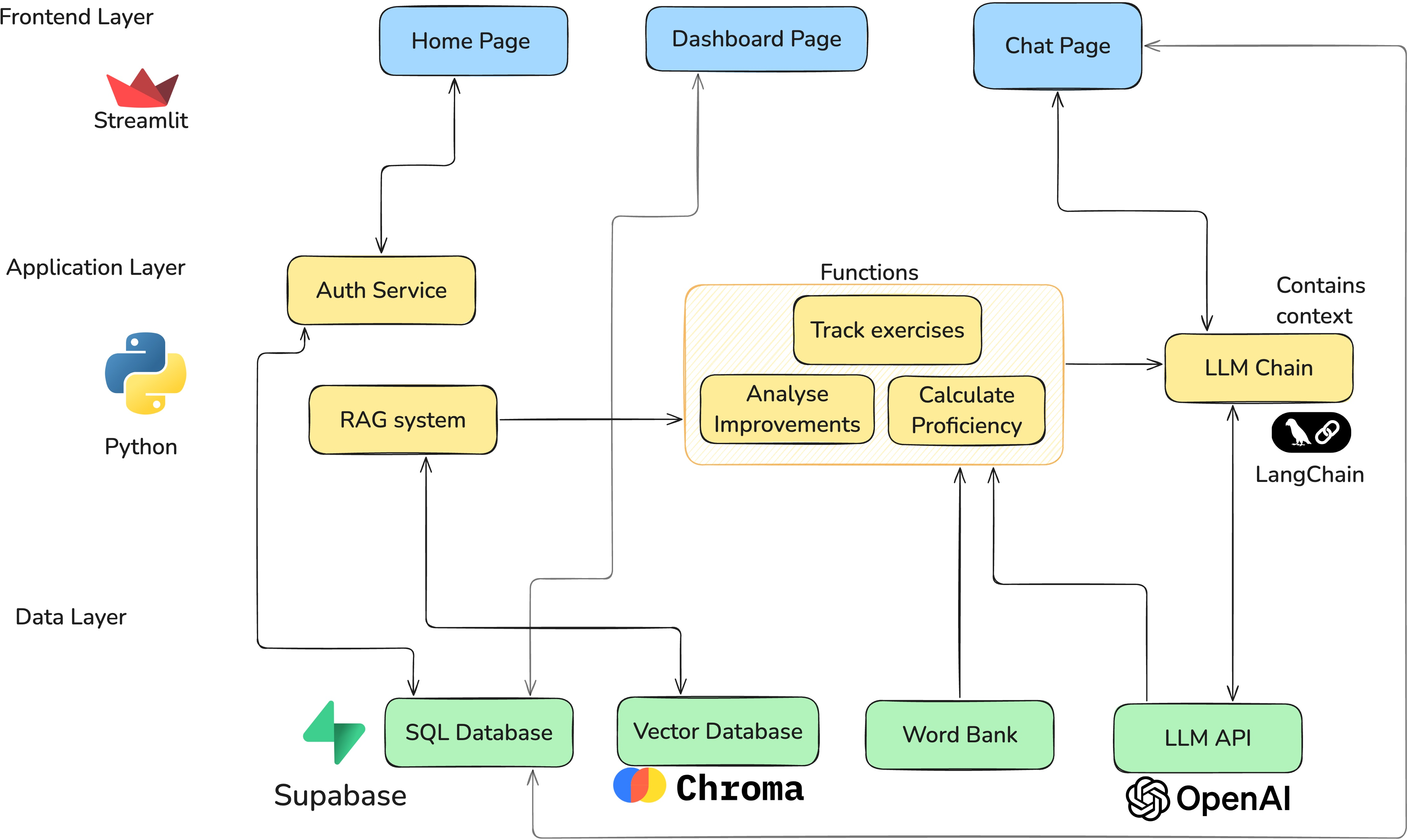

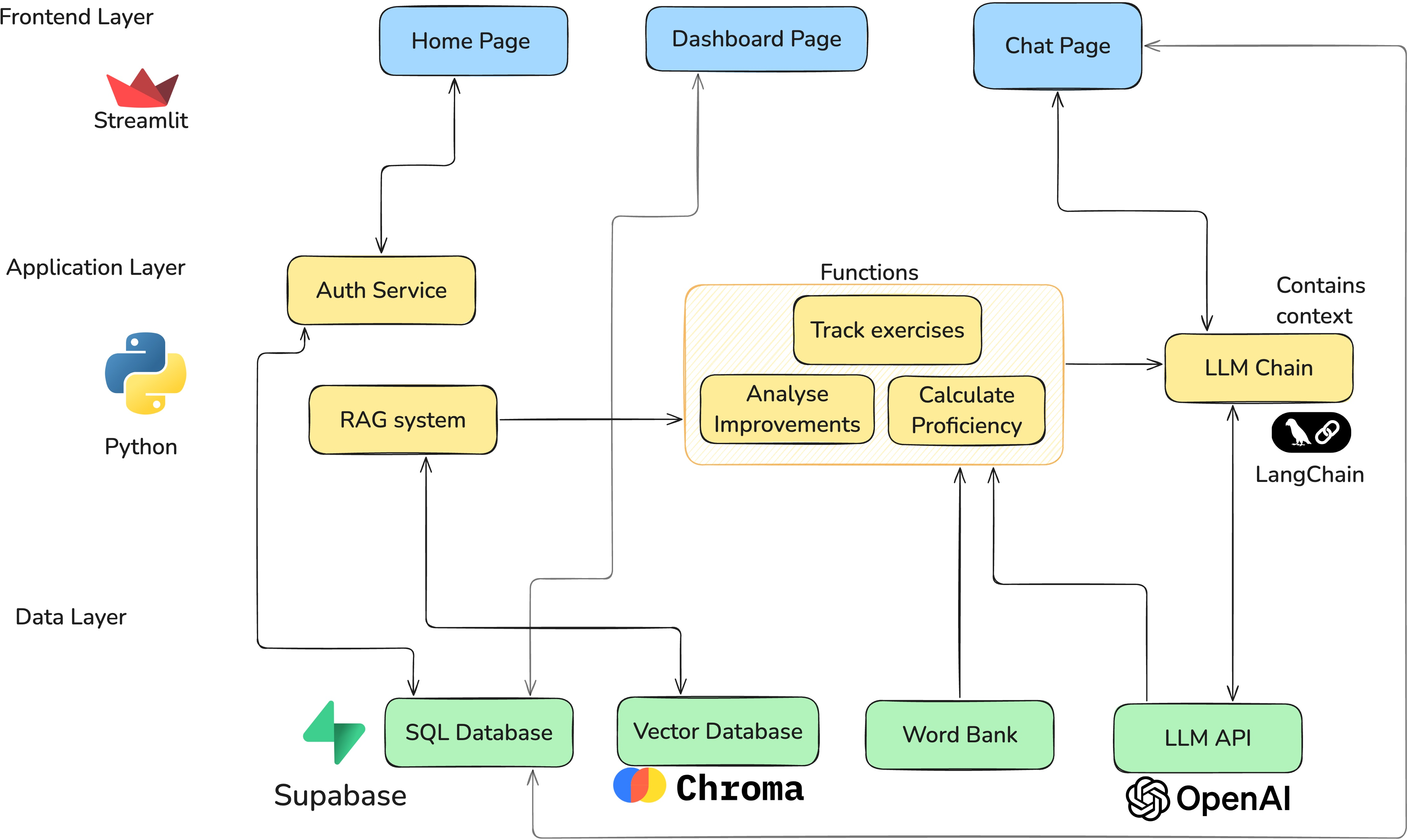

- It employs a modular architecture with Streamlit, Supabase, LangChain, GPT, and ChromaDB to integrate voice input and real-time feedback.

- Evaluation through surveys and persona-based tests shows improved learner engagement, grammatical accuracy, and tailored instructional support.

Motivation and Positioning

LangLingual introduces an end-to-end architecture for individualized English language learning built on LLMs and contemporary frameworks. The driving motivation is to deliver context-aware, proficiency-adaptive language exercises and instantaneous feedback, both of which are traditionally difficult to scale in classroom environments. Positioned against existing LLM-based educational tools, LangLingual prioritizes sustained learner progress tracking, Socratic conversational feedback, and granular exercise targeting over single-turn query-answering paradigms.

System Architecture

LangLingual is instantiated as a web-native application integrating several open-source and commercial components. The modular architecture segregates concerns for prompt composition, model interaction, voice input, learner authentication, and data persistence.

Figure 1: Architecture Diagram of the LangLingual system.

- Front-end/Hosting: Built using Streamlit, it orchestrates conversational UI and session management.

- Authentication/Storage: Supabase delivers secure user management and persistent storage via PostgreSQL, facilitating individualized learning histories and improvement records with row-level security.

- Conversation Pipeline: A LangChain backend enables composable, context-aware LLM querying. OpenAI GPT models are used for dialogue, proficiency assessment, and exercise creation. The Whisper API transcribes voice inputs.

- Exercise and Feedback Memory: Active exercise state and feedback are tracked using dictionary structures, mediating between user inputs and evaluation modules.

- Document Embedding and Retrieval: ChromaDB supports RAG for supplementing LLMs with curated resource retrieval.

This architecture is agnostic to specific LLM vendors, supporting scalability and future integration with alternate model providers.

Data Sources and Proficiency Assessment

Two structured datasets underpin the adaptive behavior of LangLingual:

- Word Bank: 50k vocabulary items labeled with proficiency levels (1-14), curated by a linguistics expert.

- Resource Dataset: Manually compiled resource catalog with topic, difficulty, and instructional content.

Proficiency estimation fuses rule-based and model-based judgments. Inputs are lemmatized and matched against the Word Bank to compute median/average proficiency, while parallel LLM assessment yields a second scalar. Final proficiency integrates both via a weighted sum:

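$$\text{Level}_{\text{combined}} = w_{\text{wb}} \times \text{Level}_{\text{wb}} + w_{\text{LLM}} \times \text{Level}_{\text{LLM}}$$

with $w_{\text{wb}} = 0.4$ and $w_{\text{LLM}} = 0.6$. The hybrid scheme mitigates deficiencies in lexical coverage and leverages current LLM context understanding for robust diagnostics.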
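The hybrid estimate can be illustrated with a minimal Python sketch. The tiny word bank, the function names, and the fallback to the LLM score when no lemma matches are all assumptions made for illustration; only the 1-14 level scale, the use of a median, and the 0.4/0.6 weights come from the description above.

```python
from statistics import median

# Hypothetical miniature word bank: lemma -> proficiency level (1-14).
# The real Word Bank holds roughly 50k expert-labeled items.
WORD_BANK = {"go": 1, "school": 2, "negotiate": 9, "ubiquitous": 13}

W_WB, W_LLM = 0.4, 0.6  # weights reported in the text


def word_bank_level(lemmas, word_bank=WORD_BANK):
    """Median level of the lemmas found in the word bank, or None if none match."""
    levels = [word_bank[lemma] for lemma in lemmas if lemma in word_bank]
    return median(levels) if levels else None


def combined_level(level_wb, level_llm, w_wb=W_WB, w_llm=W_LLM):
    """Weighted sum of the rule-based and LLM-based estimates.

    Falling back to the LLM score when the word bank has no coverage
    is an assumption of this sketch, not something the source states.
    """
    if level_wb is None:
        return level_llm
    return w_wb * level_wb + w_llm * level_llm


# Lemmatization is assumed to have happened upstream.
wb = word_bank_level(["go", "school", "negotiate"])  # median of [1, 2, 9]
estimate = combined_level(wb, 5)  # about 3.8 (0.4 * 2 + 0.6 * 5)
```

Because the LLM weight is larger, a learner whose vocabulary is poorly covered by the word bank is still placed mainly by the model's contextual judgment.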

Personalised Exercise Generation and Feedback

LangLingual dynamically adapts exercise generation to detected proficiency and conversational context. After each LLM response, the system inspects for trigger keywords denoting exercise intent; flagged exchanges are stored and managed for user progress continuity.
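The trigger-keyword check described above might look like the following sketch. The trigger phrases, the state keys, and the function names are hypothetical; the source does not list the actual keywords or the layout of the exercise state, only that flagged exchanges are stored in dictionary structures.

```python
import re

# Hypothetical trigger phrases; the actual keyword list is not given in the source.
TRIGGER_PATTERNS = [
    r"\bexercise\b",
    r"\bpractice\b",
    r"\bfill in the blank",
]
_TRIGGER_RE = re.compile("|".join(TRIGGER_PATTERNS), re.IGNORECASE)


def contains_exercise(llm_response):
    """Return True if the LLM response mentions any trigger phrase."""
    return _TRIGGER_RE.search(llm_response) is not None


def record_exchange(state, user_input, llm_response):
    """Store a flagged exchange as the active exercise in a dict-based state.

    Unflagged exchanges leave the state unchanged.
    """
    if contains_exercise(llm_response):
        state["active_exercise"] = {
            "prompt": llm_response,
            "triggered_by": user_input,
        }
        state.setdefault("history", []).append(llm_response)
    return state
```

Keyword matching is cheap but brittle: a response that merely mentions the word "practice" in passing would also be flagged, so a production system would likely need a stricter signal.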

Improvement area identification aggregates learner inputs over intervals and passes them through LLM analysis to yield JSON-formatted diagnostics. Feedback areas are filtered by a confidence threshold (0.3), so that only sufficiently frequent and severe patterns are reported. Issues are categorized (e.g., articles, tense, subject-verb agreement (SVA)), and illustrative examples are surfaced from session transcripts.
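As a rough illustration of the filtering step, the sketch below parses a JSON diagnostic and keeps only the areas at or above the 0.3 threshold. The JSON schema (`areas`, `category`, `confidence`, `example`), the sample values, and the inclusive comparison are assumptions; the source specifies only the threshold value and that the diagnostics are JSON-formatted.

```python
import json

CONFIDENCE_THRESHOLD = 0.3  # value reported in the text


def filter_improvement_areas(raw_json, threshold=CONFIDENCE_THRESHOLD):
    """Keep diagnosed areas whose confidence meets the threshold, strongest first.

    The schema used here is hypothetical; entries without a confidence
    field are treated as confidence 0.0 and dropped.
    """
    areas = json.loads(raw_json).get("areas", [])
    kept = [a for a in areas if a.get("confidence", 0.0) >= threshold]
    return sorted(kept, key=lambda a: a["confidence"], reverse=True)


# Made-up LLM output, for illustration only.
sample = json.dumps({
    "areas": [
        {"category": "articles", "confidence": 0.72, "example": "I saw a elephant."},
        {"category": "tense", "confidence": 0.15, "example": "Yesterday I go home."},
        {"category": "SVA", "confidence": 0.41, "example": "She walk to work."},
    ]
})

reported = filter_improvement_areas(sample)
```

With this sample, the low-confidence tense entry is dropped and the remaining areas are ordered by confidence.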

Evaluation: Survey and Persona-based Methods

Two complementary evaluation protocols substantiate system efficacy:

Survey-based Evaluation

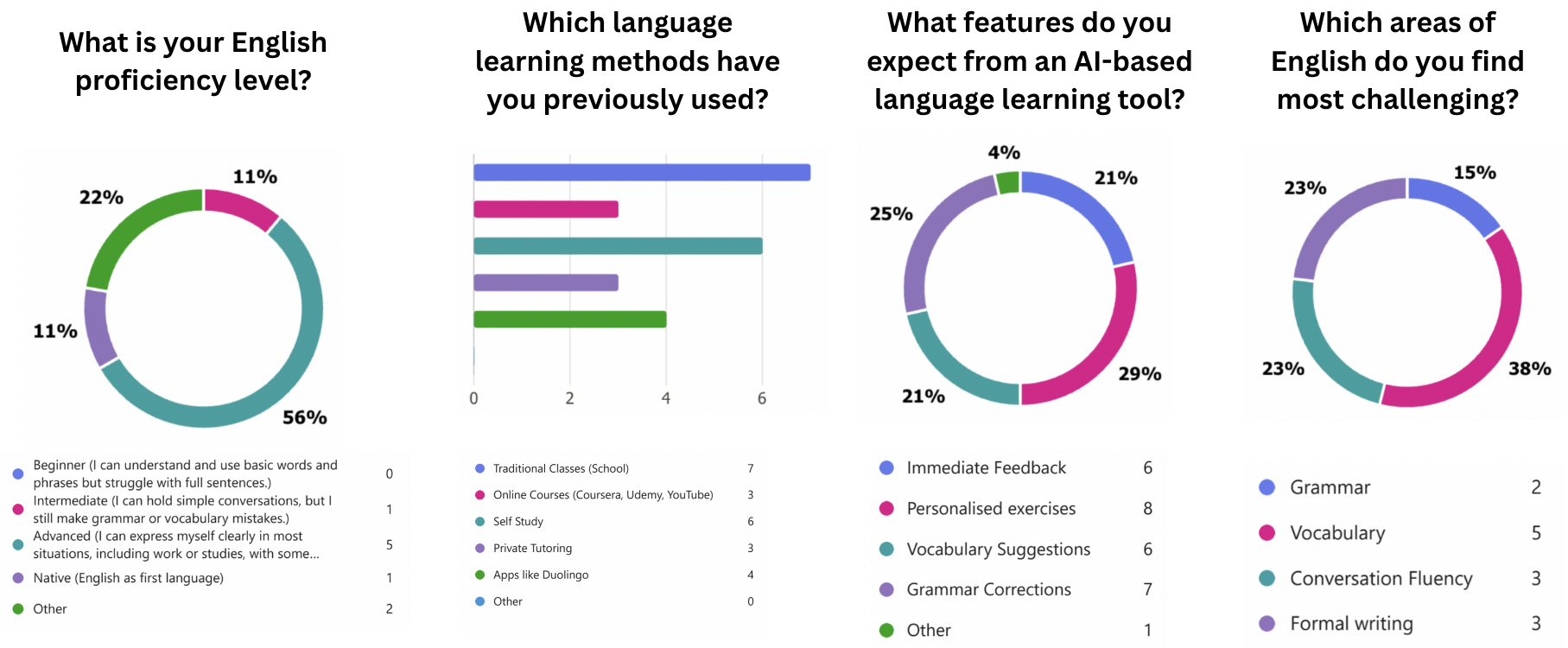

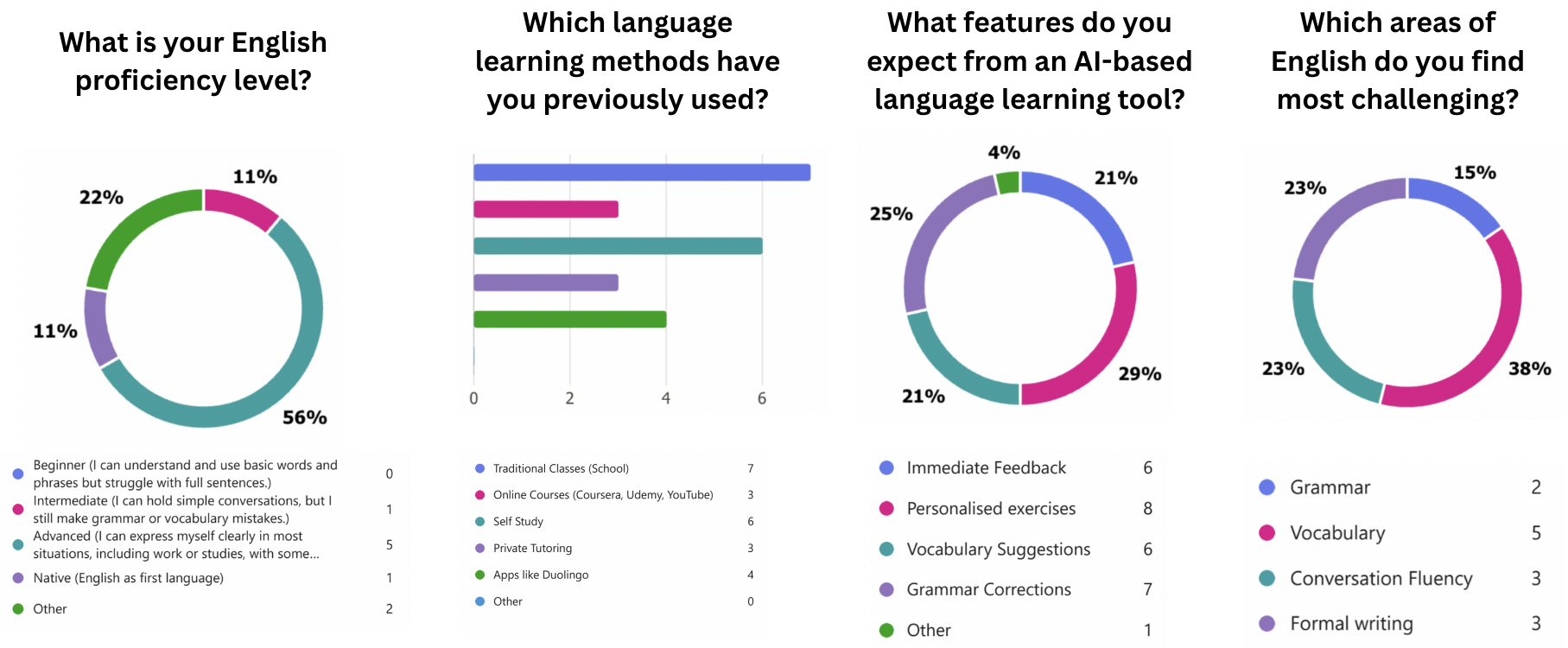

A cohort of seven learners underwent a three-phase evaluation: pre-survey, LangLingual session, post-survey. Participants represented diverse age groups, nationalities, and occupational backgrounds, offering perspectives across English proficiency levels.

Figure 2: Findings from the pre-survey conducted to evaluate LangLingual.

Pre-survey responses indicated most learners self-categorized as intermediate-to-advanced, frequently utilizing online/app-based language solutions, and valuing grammar correction and personalized exercises.

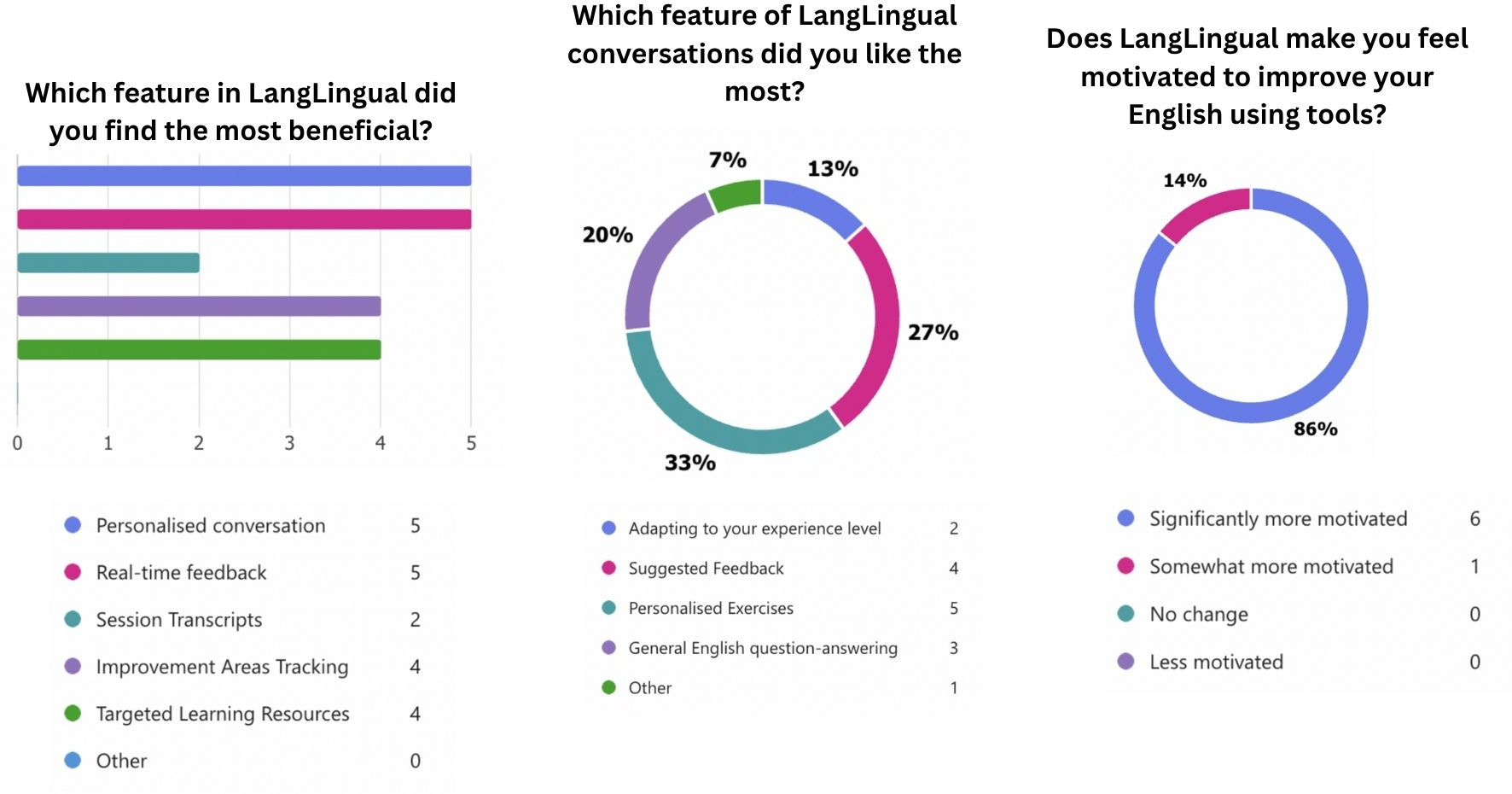

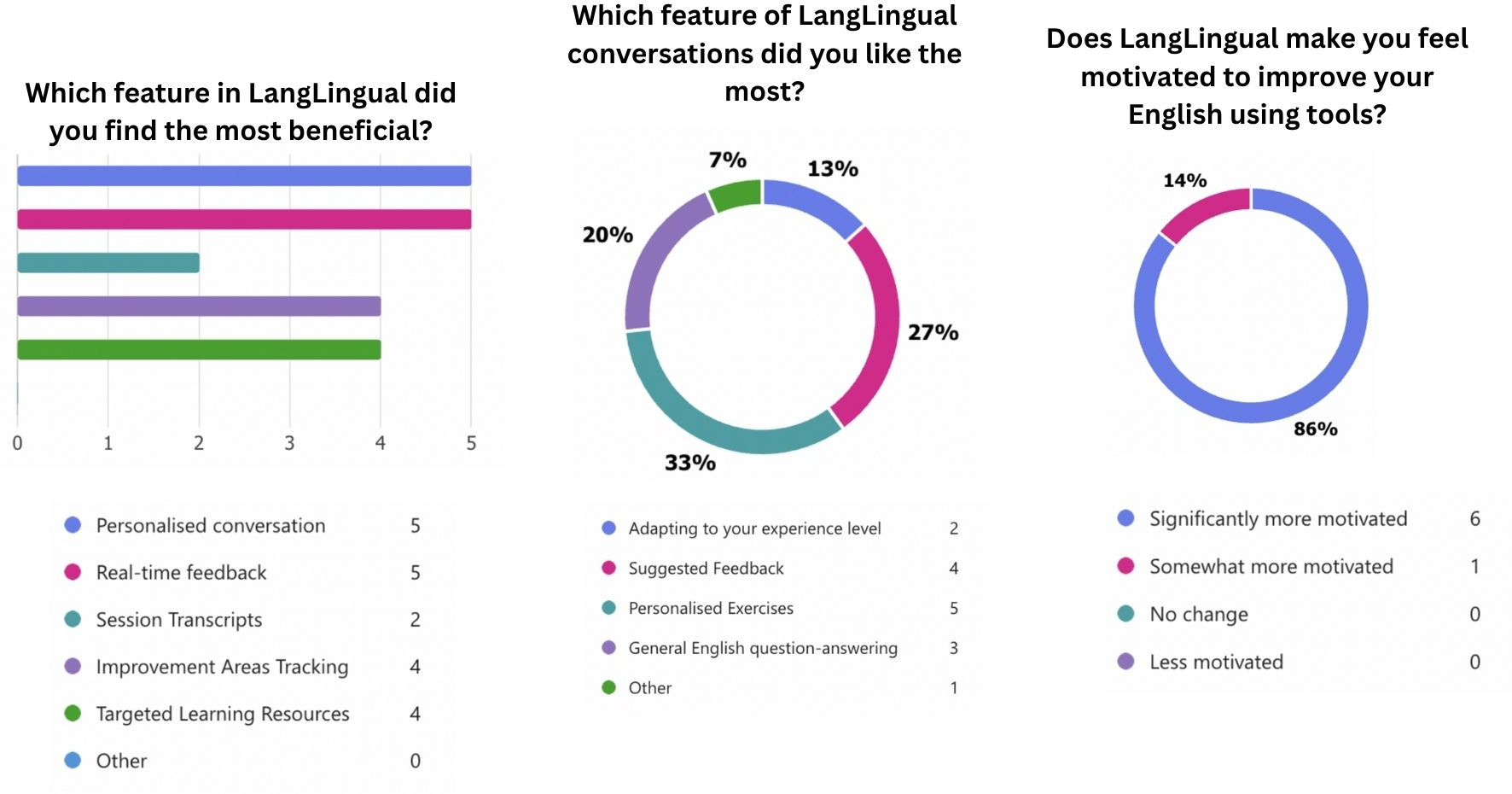

The post-survey assessed engagement, perceived usefulness, and motivational impact after tool usage.

Figure 3: Findings from the post-survey conducted to evaluate LangLingual.

Participants consistently rated personalized feedback and real-time exercise generation as motivating. Notably, multiple anecdotal responses highlighted a reduction in grammatical errors and the value of iterative, focused correction.

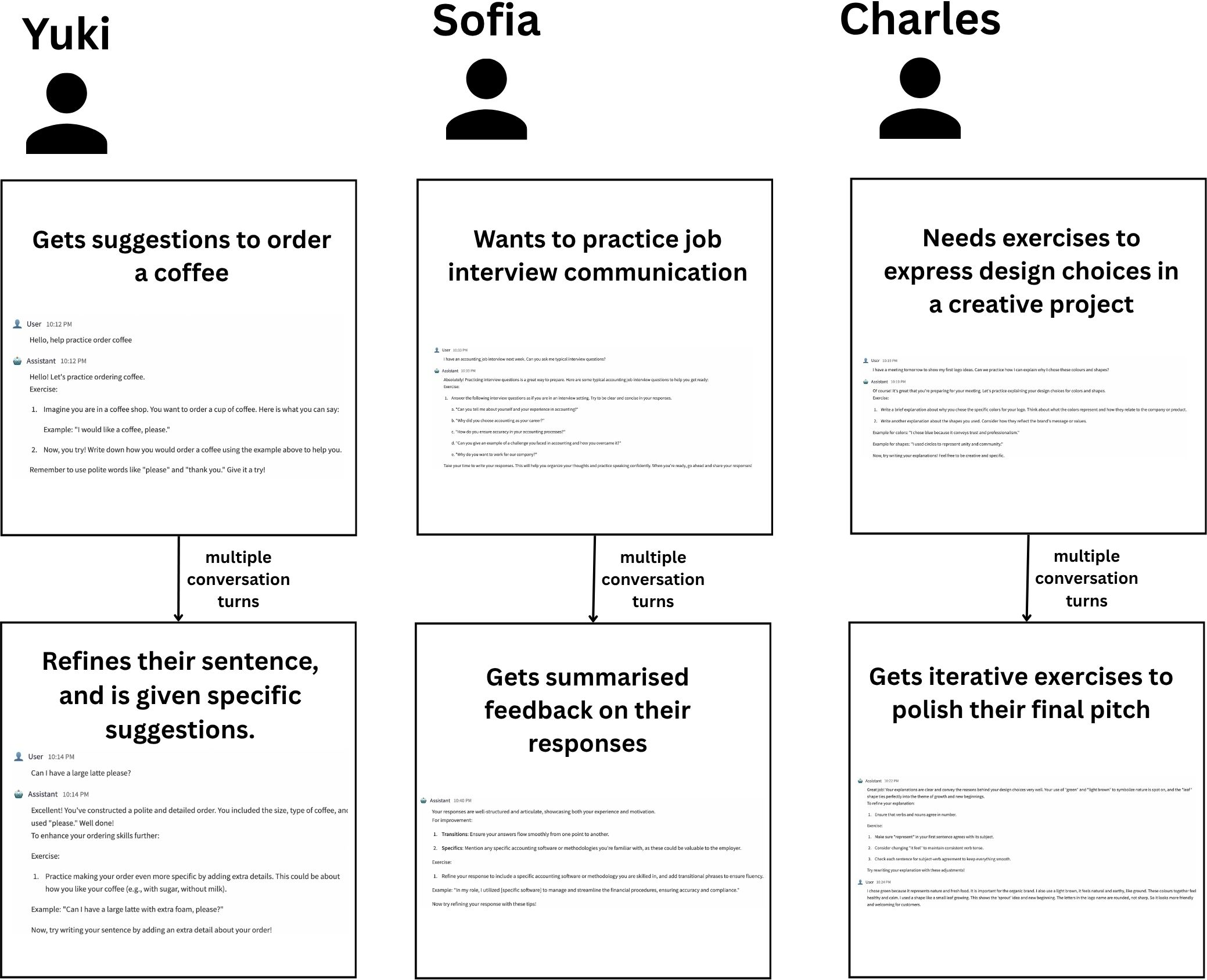

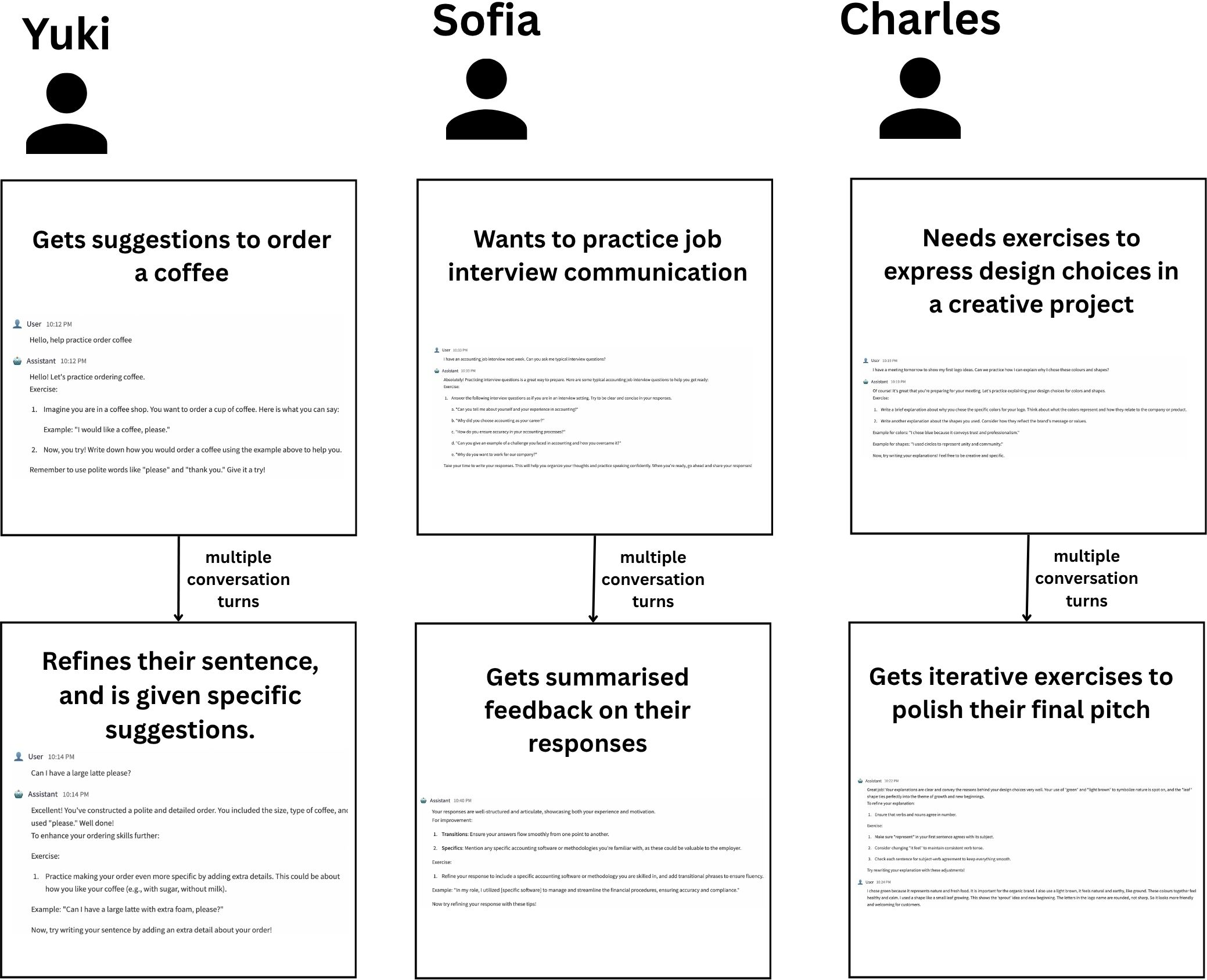

Persona-based Evaluation

Three archetypal learner personas (undergraduate, job seeker, professional) were crafted and mapped to simulated sessions to test contextual adaptability and robustness. Scoring was performed by an expert annotator.

Figure 4: Persona-based evaluation.

- Persona 1 (Yuki): The system scaffolded progression in spoken everyday scenarios, emphasizing polite requests and incremental refinement.

- Persona 2 (Sofia): The system delivered situational prompts for job interviews, with actionable feedback for fluency and confidence building.

- Persona 3 (Charles): The system supported professional language improvement, focusing on subject-verb agreement and domain-relevant dialogic constructs.

The persona evaluation affirmed LangLingual's capacity to tailor coaching and exercise strategy based on divergent learner traits and specific linguistic objectives.

Implications and Future Directions

LangLingual advances LLM-driven educational technology by tightly coupling proficiency assessment, context-sensitive exercise generation, and actionable feedback. The pipeline supports persistent learner tracking, enabling both instructors and autonomous users to quantify incremental improvement over time. Unlike generic LLM chatbots, the system is engineered to behave analogously to human educators employing Socratic prompts, hints, and adaptive resources.

Longitudinal retention and engagement can be bolstered via gamified features (e.g., badges, streaks), and alignment with external standards (CEFR) is a logical pathway for interoperability and transferability. Incorporating more pedagogically rigorous advancement criteria (e.g., mastery thresholds, session consistency rewards) could support reliable level progression.

Model-agnostic design affords flexibility in LLM choice, facilitating experiments with open-source instruction-tuned models or local deployment. Future research may prioritize scaling evaluation to larger and more diverse cohorts, comparative benchmarking against commercial systems, and integration with multimodal assessment components.

Conclusion

LangLingual provides initial evidence for the efficacy of LLM-centric, personalized language learning built on a modular and extensible architecture. The survey of seven learners and the three persona-based tests support claims of improved learner engagement and meaningful skill development, particularly in grammar and conversational proficiency. The platform outlines a clear route forward for individualized, scalable language education leveraging AI and dialogue-centric design.