- The paper introduces the α-Flow framework to harmonize conflicting objectives in MeanFlow models through a curriculum-based approach.

- It shows that the MeanFlow objective decomposes into trajectory flow matching and trajectory consistency terms whose gradients are strongly negatively correlated, and it mitigates this conflict to make training more efficient.

- Experimental results on ImageNet-1K show that the α-Flow-XL/2+ model achieves state-of-the-art FID scores of 2.58 (1-NFE) and 2.15 (2-NFE), outperforming conventional MeanFlow models.

AlphaFlow: Understanding and Improving MeanFlow Models

Introduction

The paper "AlphaFlow: Understanding and Improving MeanFlow Models" (2510.20771) analyzes MeanFlow, a recent framework for few-step generative modeling, and introduces α-Flow, a new family of training objectives that addresses an optimization conflict between trajectory flow matching and trajectory consistency. Combined with a curriculum strategy, α-Flow outperforms conventional MeanFlow on image generation on ImageNet-1K.
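As background, a MeanFlow model predicts the average velocity u over a time interval [r, t] rather than the instantaneous velocity v used in flow matching. In the notation of the original MeanFlow paper (assumed here, since this summary does not define it), integrating the velocity field over the interval gives the defining relation and the resulting jump rule:

```latex
u(z_t, r, t) = \frac{1}{t - r} \int_{r}^{t} v(z_\tau, \tau)\, d\tau,
\qquad
z_r = z_t - (t - r)\, u(z_t, r, t)
```

With noise at t = 1 and data at t = 0, a single evaluation of u(z_1, 0, 1) maps noise directly to a sample, which is what makes one-step generation possible.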

Objective Decomposition and Challenges

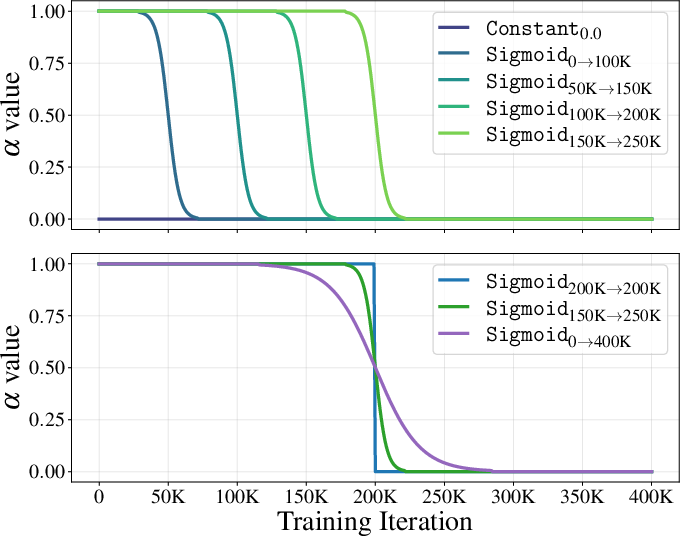

The authors identify a key challenge in optimizing the MeanFlow objective: it naturally decomposes into two terms, trajectory flow matching and trajectory consistency, whose gradients are strongly negatively correlated. As a result, the two terms work against each other, slowing convergence and reducing training efficiency. To address this, the paper introduces α-Flow, a family of objectives that reconciles the two terms through curriculum learning. Gradually moving between objectives disentangles the conflicting components instead of optimizing them all at once, which makes optimization smoother and more efficient.

Figure 1: Gradient similarity highlights the optimization conflicts inherent in MeanFlow training.

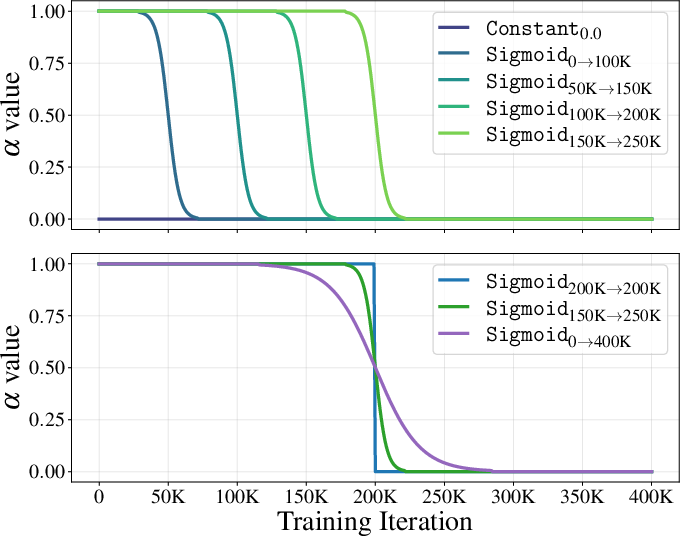
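The gradient-similarity measurement that Figure 1 refers to can be sketched, assuming cosine similarity as the metric, by flattening the parameter gradients of the two loss terms and comparing their directions: a value near -1 means that a step lowering one term tends to raise the other. In this minimal sketch, the two toy quadratic losses and the names `g_tfm` and `g_tc` are hypothetical stand-ins, not the paper's losses or code.

```python
import math
from typing import Sequence


def cosine_similarity(g1: Sequence[float], g2: Sequence[float]) -> float:
    """Cosine similarity between two flattened gradient vectors."""
    dot = sum(a * b for a, b in zip(g1, g2))
    n1 = math.sqrt(sum(a * a for a in g1))
    n2 = math.sqrt(sum(b * b for b in g2))
    if n1 == 0.0 or n2 == 0.0:
        return 0.0  # undefined for a zero gradient; report 0 by convention
    return dot / (n1 * n2)


def quadratic_grad(theta: Sequence[float], target: Sequence[float]) -> list:
    """Gradient of the toy loss ||theta - target||^2 with respect to theta."""
    return [2.0 * (p - q) for p, q in zip(theta, target)]


# Hypothetical toy setup: two loss terms whose minimizers lie on opposite
# sides of the current parameters, standing in for the two MeanFlow terms.
theta = [0.0, 0.0]
g_tfm = quadratic_grad(theta, [1.0, 1.0])    # stand-in for trajectory flow matching
g_tc = quadratic_grad(theta, [-1.0, -0.5])   # stand-in for trajectory consistency

sim = cosine_similarity(g_tfm, g_tc)
print(f"gradient cosine similarity: {sim:.3f}")
```

In a real model, the same function would typically be applied to the concatenated parameter gradients of each loss term, computed separately on the same batch.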

α-Flow Framework

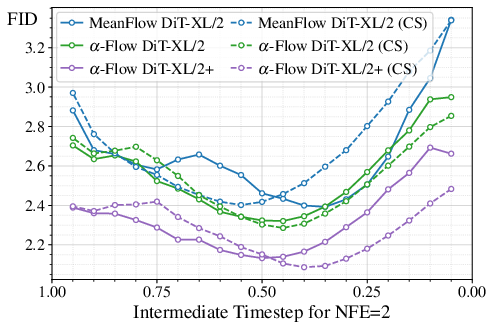

The α-Flow framework unifies trajectory flow matching, Shortcut Models, and MeanFlow under a single formulation. By gradually annealing from one of these objectives to another, α-Flow disentangles the conflicting components of the optimization. This curriculum substantially reduces the reliance on computationally expensive border-case supervision and improves convergence.
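The annealing idea can be sketched as a schedule for the scalar α that indexes the objective family. This is a minimal sketch under stated assumptions: it assumes α = 1 sits at the trajectory-flow-matching end of the family and smaller values move toward MeanFlow, and the warmup phase, half-cosine shape, step counts, and `alpha_min` floor are all hypothetical choices, not the paper's published schedule.

```python
import math


def alpha_schedule(step: int, warmup_steps: int, anneal_steps: int,
                   alpha_min: float = 0.0) -> float:
    """Hypothetical curriculum for the alpha-Flow mixing parameter.

    Holds alpha at 1.0 for `warmup_steps`, then decays it to `alpha_min`
    along a half-cosine over `anneal_steps`, then holds it at `alpha_min`.
    The shape and all step counts are illustrative assumptions.
    """
    if step < warmup_steps:
        return 1.0
    # Fraction of the annealing phase completed, clipped to [0, 1].
    progress = min((step - warmup_steps) / max(anneal_steps, 1), 1.0)
    # Half-cosine: 1.0 at progress 0, alpha_min at progress 1.
    return alpha_min + (1.0 - alpha_min) * 0.5 * (1.0 + math.cos(math.pi * progress))


for step in (0, 1_000, 2_000, 3_000, 4_000):
    print(step, round(alpha_schedule(step, warmup_steps=1_000, anneal_steps=2_000), 3))
```

A smooth schedule avoids an abrupt switch between objectives; a linear or sigmoid ramp would be an equally plausible assumption.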

Experimental Validation

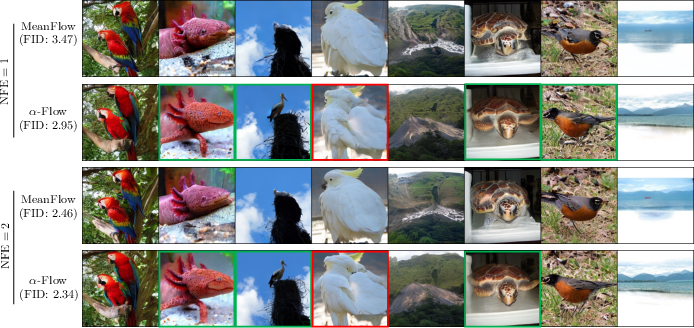

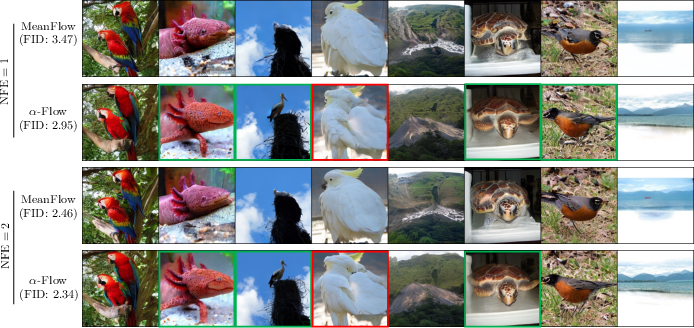

The authors validate α-Flow through extensive experiments on ImageNet-1K across several model sizes and configurations. Notably, the α-Flow-XL/2+ model achieves state-of-the-art FID scores of 2.58 with one network function evaluation (1-NFE) and 2.15 with two (2-NFE), surpassing the previous best results from conventional MeanFlow models.
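For reading these numbers: FID (Fréchet Inception Distance) fits Gaussians to Inception-network features of real and generated images, with means μ_r, μ_g and covariances Σ_r, Σ_g, and measures the Fréchet distance between them, so lower is better. The standard definition is:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```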

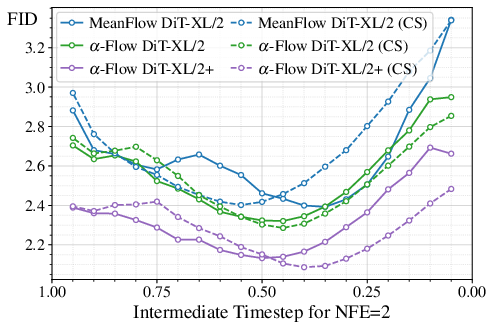

Figure 3: Comparing ODE vs consistency sampling for MeanFlow and α-Flow models.
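The two samplers compared in Figure 3 can be sketched as follows, assuming the linear noising path z_t = (1 - t)x + tε (data at t = 0, noise at t = 1) and a hypothetical average-velocity model `u(z, r, t)`. ODE sampling jumps along the trajectory with z_r = z_t - (t - r)u(z_t, r, t), while consistency sampling jumps all the way to t = 0 and then injects fresh noise to reach the next time. The scalar toy data (a single point `x_star`) and the exact model `exact_u` are illustrative assumptions, not the paper's setup.

```python
import random
from typing import Callable, Sequence

# u(z, r, t): hypothetical average-velocity model moving state z from time t to time r.
AvgVelocity = Callable[[float, float, float], float]


def ode_sample(u: AvgVelocity, eps: float, times: Sequence[float]) -> float:
    """Multi-step sampling along the trajectory: z_r = z_t - (t - r) * u(z_t, r, t)."""
    z = eps
    for t, r in zip(times[:-1], times[1:]):
        z = z - (t - r) * u(z, r, t)
    return z


def consistency_sample(u: AvgVelocity, eps: float, times: Sequence[float],
                       rng: random.Random) -> float:
    """Multi-step sampling by jumping to t = 0, then re-noising to the next time."""
    z = eps
    for t, s in zip(times[:-1], times[1:]):
        x_hat = z - t * u(z, 0.0, t)                      # one-step estimate of the clean sample
        z = (1.0 - s) * x_hat + s * rng.gauss(0.0, 1.0)  # fresh noise at time s (none at s = 0)
    return z


x_star = 2.0  # hypothetical single data point


def exact_u(z: float, r: float, t: float) -> float:
    # With point-mass data on the linear path, every trajectory is a straight
    # line, so the average velocity is (z - x_star) / t for any r.
    return (z - x_star) / t


rng = random.Random(0)
eps = rng.gauss(0.0, 1.0)
print("ODE, 2 steps:        ", ode_sample(exact_u, eps, [1.0, 0.5, 0.0]))
print("consistency, 2 steps:", consistency_sample(exact_u, eps, [1.0, 0.5, 0.0], rng))
```

With an exact model both samplers return the data point; differences between them arise only from the error of a learned model.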

Implications and Future Directions

α-Flow advances both the understanding and the practice of few-step generative modeling. It improves performance across model scales and configurations and provides a basis for further work on optimizing such models. Future research could extend the α-Flow framework beyond image generation, potentially improving the efficiency of generative modeling in other domains.

Conclusion

In summary, "AlphaFlow: Understanding and Improving MeanFlow Models" (2510.20771) explains why MeanFlow is hard to optimize, namely conflicting gradients between its trajectory flow matching and trajectory consistency terms, and resolves this with the α-Flow framework. The work offers both a clearer theoretical picture of MeanFlow training and a practical curriculum that improves few-step image generation.