- The paper demonstrates that continuous-time neural models, written as coupled ODEs, can emulate discrete update algorithms and recover SGD, FA, DFA, and KP rules as special cases.

- The paper shows that robust learning requires synaptic plasticity timescales to significantly exceed stimulus durations to ensure proper temporal overlap between signals.

- The paper’s simulations reveal that biologically plausible continuous-time networks yield stable learning performance, offering guidelines for neuromorphic hardware design.

On Biologically Plausible Learning in Continuous Time

Introduction to Continuous-Time Neural Models

The paper "On Biologically Plausible Learning in Continuous Time" explores continuous-time neural models that unify several biologically plausible learning algorithms without discrete updates or separate inference and learning phases. By casting inference and learning as a system of coupled ordinary differential equations (ODEs), the work recovers stochastic gradient descent (SGD), feedback alignment (FA), direct feedback alignment (DFA), and Kolen–Pollack (KP) rules as special cases under specific timescale separations. The paper’s simulations show that these continuous-time networks learn robustly at biological timescales, even with temporal mismatch and integration noise. A notable finding is that synaptic plasticity timescales must significantly exceed the stimulus duration for learning to remain stable.

Continuous-Time Neural Network Architecture

The continuous-time model consists of neurons that each receive a feedforward input and a modulatory error signal. Synaptic weights evolve continuously alongside neural activity according to coupled ODEs, which removes the separate inference and learning phases of conventional neural network training.
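As a rough illustration of what "coupled ODEs without phases" can mean for a single synapse, the sketch below integrates an activity equation and a weight equation in the same loop. It is a minimal sketch under stated assumptions, not the paper's equations: the leaky activity dynamics, the product rule `dw/dt = eta * e * x`, the forward-Euler stepping, and the names `simulate_neuron`, `pulse`, `tau_h`, and `eta` are all hypothetical choices made for illustration (the paper itself reports using Diffrax for integration).

```python
def pulse(n_steps, start_step, stop_step):
    """Rectangular pulse: 1.0 on steps [start_step, stop_step), else 0.0."""
    return [1.0 if start_step <= i < stop_step else 0.0 for i in range(n_steps)]


def simulate_neuron(x, e, dt=1e-3, tau_h=0.01, eta=0.5, w0=0.0):
    """Forward-Euler integration of one neuron with one synapse.

    x, e : input and error samples on a common time grid (assumed signals).
    Returns the activity trace and the weight trace.
    """
    h, w = 0.0, w0
    hs, ws = [], []
    for x_t, e_t in zip(x, e):
        # Inference: leaky integration of the weighted input (assumed form).
        dh = (-h + w * x_t) / tau_h
        # Learning: the weight moves only while input and error coincide.
        dw = eta * e_t * x_t
        # Both state variables advance in the same step: no separate phases.
        h += dt * dh
        w += dt * dw
        hs.append(h)
        ws.append(w)
    return hs, ws


if __name__ == "__main__":
    n = 300
    x = pulse(n, 0, 100)            # input present for the first 100 steps
    e_aligned = pulse(n, 0, 100)    # error arrives together with the input
    e_late = pulse(n, 150, 250)     # error arrives after the input has ended
    _, w_aligned = simulate_neuron(x, e_aligned)
    _, w_late = simulate_neuron(x, e_late)
    print("aligned:", w_aligned[-1], "late:", w_late[-1])
```

In this toy setting the weight changes only when the two pulses overlap, so an error that arrives after the input has ended leaves the weight untouched.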

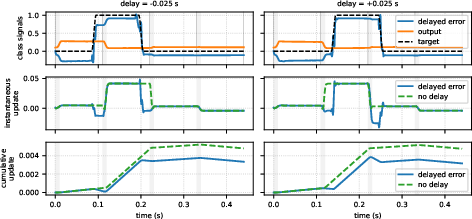

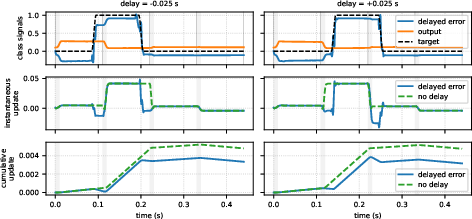

Figure 1: Single-neuron dynamics with different relative timings of the error and input signals.

Figure 1 shows how delays between input and error signals affect learning at the level of a single neuron. Correct synaptic updates depend on the temporal overlap between the input and error signals; when the two are mistimed, the weight adjustments are incorrect.

Timing Robustness and Temporal Overlap

A central analysis in the paper concerns timing mismatches between input and error signals. The robustness of learning is directly related to the temporal overlap between these signals. Learning degrades sharply as the delay between input and error approaches the stimulus duration, particularly in deeper network architectures where signal propagation lag accumulates.
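The dependence on overlap can be made concrete with a small calculation. The sketch below assumes rectangular input and error pulses of equal length and, for the depth effect, a lag that grows linearly with the number of layers; both are simplifying assumptions for illustration, and the function names `overlap_fraction` and `overlap_for_depth` are hypothetical.

```python
def overlap_fraction(delay, duration):
    """Fraction of an input pulse that coincides with an equally long
    error pulse shifted by `delay` (both in the same time unit)."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    return max(0.0, 1.0 - abs(delay) / duration)


def overlap_for_depth(n_layers, per_layer_lag, duration):
    """Overlap when each layer adds `per_layer_lag` to the error delay.

    Linear accumulation of lag with depth is an assumption of this sketch.
    """
    return overlap_fraction(n_layers * per_layer_lag, duration)


if __name__ == "__main__":
    duration = 0.1  # stimulus duration in seconds (illustrative value)
    for delay in (0.0, 0.025, 0.05, 0.075, 0.1):
        print(f"delay={delay:.3f}s overlap={overlap_fraction(delay, duration):.2f}")
    for depth in (1, 2, 4):
        print(f"layers={depth} overlap={overlap_for_depth(depth, 0.02, duration):.2f}")
```

Under these assumptions the overlap falls linearly to zero as the delay reaches the stimulus duration, and a fixed per-layer lag uses up the available overlap faster in deeper networks.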

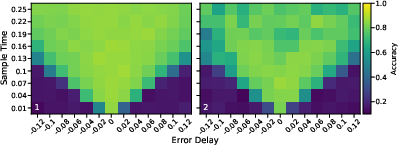

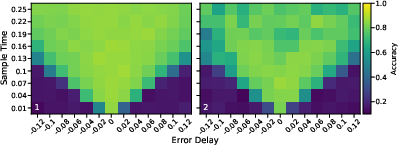

Figure 2: Evaluation of the direct error routing topology on the downsampled MNIST dataset.

The layered architecture supports two error routing topologies, direct feedback alignment and layerwise feedback alignment, which differ in how error signals reach each layer. Figure 2 evaluates the direct topology and shows how temporal delays affect learning performance, with sample duration and delay together determining how well the network learns.

Biological Timescales

The paper maps the time constants of its ODEs onto biologically plausible timescales: rapid synaptic transmission (on the order of milliseconds), intermediate plasticity (seconds), and slow synaptic decay (minutes). This links the algorithmic viability of continuous-time learning models to experimental findings in biology.

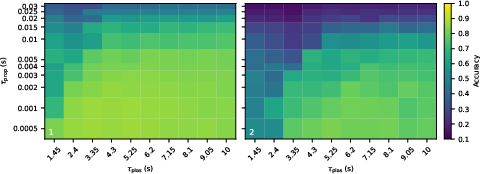

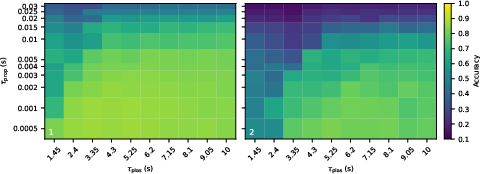

Figure 3: Evaluation of a layerwise error routing network on the MNIST dataset demonstrating the requirement that plasticity persist far longer than the input presentation time.

Figure 3 illustrates how increased plasticity timescales lead to stabilized learning performance, underscoring the prediction that synaptic plasticity windows must outlast stimulus durations by at least an order of magnitude. These insights present a testable hypothesis within neurobiology and propose implementation guidelines for neuromorphic hardware.
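One way to see why a long plasticity window helps is to let the error gate a slowly decaying trace of the input instead of the input itself. The sketch below is a hypothetical illustration of that idea, not the paper's model: the low-pass trace `tau_p * dz/dt = -z + x`, the update `dw/dt = e * z`, the rectangular pulses, and the names `accumulated_update` and `delayed_update_ratio` are all assumptions.

```python
def accumulated_update(tau_p, duration, delay, dt=1e-3):
    """Total weight change when a delayed error gates a trace of the input.

    Input: rectangular pulse of length `duration` starting at t = 0.
    Error: rectangular pulse of the same length starting at t = `delay`.
    Trace: tau_p * dz/dt = -z + x  (assumed eligibility-style filter).
    """
    n_in = round(duration / dt)
    n_delay = round(delay / dt)
    z, dw = 0.0, 0.0
    for i in range(n_delay + n_in):
        x = 1.0 if i < n_in else 0.0      # input on during the stimulus
        e = 1.0 if i >= n_delay else 0.0  # error on after the delay
        z += dt * (-z + x) / tau_p        # forward-Euler step of the trace
        dw += dt * e * z                  # error-gated weight change
    return dw


def delayed_update_ratio(tau_p, duration, delay, dt=1e-3):
    """Update with a delayed error relative to the update with no delay."""
    return (accumulated_update(tau_p, duration, delay, dt)
            / accumulated_update(tau_p, duration, 0.0, dt))


if __name__ == "__main__":
    duration = delay = 0.1  # error starts exactly when the stimulus ends
    for tau_p in (0.01, 0.1, 1.0):
        ratio = delayed_update_ratio(tau_p, duration, delay)
        print(f"tau_p={tau_p:5.2f}s ratio={ratio:.3f}")
```

In this toy model, a trace ten times shorter than the stimulus retains only about a tenth of the undelayed update, while a trace ten times longer than the stimulus retains all of it. The ratio can exceed 1 in the long-trace case because the trace is still rising while the stimulus is on.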

Experimental Evaluation

The paper handles the computational cost of simulating continuous-time learning models by using the JAX and Diffrax libraries for ODE integration. The simulations show how well learning tolerates temporal mismatches and delays across various architectures.

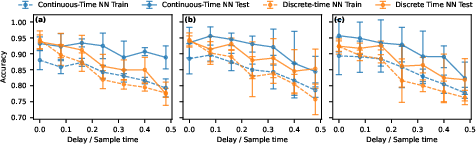

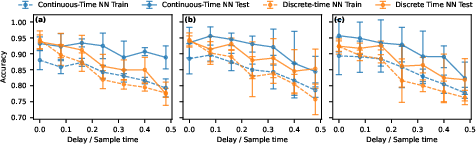

Figure 4: Delay ratio versus accuracy in networks with different hidden layer configurations.

Figure 4 plots accuracy against the delay ratio for networks with different hidden layer configurations, showing how continuous-time networks cope with delayed error signals. The paper presents this as evidence of the potential of continuous-time architectures for tasks that require real-time processing.

Conclusion

The paper concludes that continuous-time learning models can implement biologically plausible learning without discrete phase separation, provided the plasticity windows significantly exceed the stimulus duration. The findings offer a unifying framework that ties biological temporal dynamics to practical machine learning and suggest directions for future work in theoretical neuroscience and neuromorphic systems. The paper also clarifies how feedback path topologies help maintain the temporal overlap needed for learning, a mechanistic insight that translates into guidelines for AI hardware design.