- The paper introduces a constrained optimization framework that generates diverse multi-step demonstrations for bimanual mobile manipulation by enforcing hard reachability and visibility constraints.

- It employs joint full-body planning and fast feasibility checks, resulting in high success rates and enriched data diversity even under aggressive domain randomization.

- The generated synthetic data significantly boost imitation learning performance and sim-to-real transfer, demonstrating cross-embodiment applicability on different robotic platforms.

MoMaGen: Constrained Demonstration Generation for Multi-Step Bimanual Mobile Manipulation

Introduction and Motivation

MoMaGen addresses scalable data generation for multi-step bimanual mobile manipulation, a domain where collecting large-scale, high-quality human demonstrations is prohibitively expensive because of the complexity of teleoperating both a mobile base and dual high-DoF arms. Existing X-Gen frameworks (e.g., MimicGen, SkillMimicGen, DexMimicGen) are limited to static or single-arm settings and do not carry over to mobile manipulation because they leave two problems unaddressed: (1) base placement for reachability and (2) camera positioning for visibility. MoMaGen formulates demonstration generation as a constrained optimization problem that enforces hard constraints (reachability, visibility during manipulation) and balances soft constraints (visibility during navigation, retraction), enabling principled synthesis of diverse, valid demonstrations from minimal human input.

Figure 1: MoMaGen augments a single human-collected demonstration to generate diverse trajectories under aggressive object and obstacle randomization.

MoMaGen models demonstration generation as a constrained optimization over the robot's state and action trajectories within a Markov Decision Process (MDP). Given a source demonstration, the method decomposes the task into object-centric subtasks, each annotated with target objects, pregrasp/contact frames, and retraction types. For each subtask, MoMaGen samples scene configurations and solves for feasible base, torso, head camera, and arm trajectories that satisfy:

- Hard constraints: kinematic feasibility, collision avoidance, reachability of end-effector poses, visibility of task-relevant objects during manipulation, and task success.

- Soft constraints: visibility of objects during navigation, minimization of trajectory length and jerkiness, and retraction to compact configurations post-manipulation.
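
The split between hard and soft constraints listed above can be written, as a generic sketch rather than the paper's exact formulation, as a weighted-cost minimization over a trajectory subject to inequality constraints. The symbols below are assumptions introduced for illustration: $\tau$ is the robot's state-action trajectory, $c_i$ are soft-constraint costs (for example loss of visibility during navigation, trajectory length, jerk, distance from a compact retracted configuration), $w_i \ge 0$ are their weights, and $g_j$ encode the hard constraints (kinematic feasibility, collision avoidance, reachability, visibility during manipulation, task success).

```latex
\begin{aligned}
\min_{\tau} \quad & \sum_{i} w_i \, c_i(\tau) \\
\text{s.t.} \quad & g_j(\tau) \le 0, \qquad j = 1, \dots, m
\end{aligned}
```

Under this reading, a candidate trajectory that violates any $g_j$ is rejected outright, while the $c_i$ terms only rank the candidates that remain.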

The optimization is solved via a combination of motion planning (cuRobo), inverse kinematics, and conditional sampling, with fast feasibility checks to accelerate generation.
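
The idea of running a fast feasibility check before an expensive planner can be sketched as rejection sampling over base poses. This is a minimal illustration under stated assumptions, not MoMaGen's implementation: the annulus-shaped reachability proxy, the reach limits, the sampling radius, and the `expensive_check` callback (a stand-in for full IK or motion planning) are all hypothetical.

```python
import math
import random
from typing import Callable, Optional, Tuple

Pose2D = Tuple[float, float, float]  # (x, y, yaw) of the mobile base


def within_reach(base: Pose2D, target_xy: Tuple[float, float],
                 min_reach: float = 0.3, max_reach: float = 0.9) -> bool:
    """Cheap proxy (assumed): target lies in an annulus around the base."""
    d = math.hypot(target_xy[0] - base[0], target_xy[1] - base[1])
    return min_reach <= d <= max_reach


def sample_base_pose(target_xy: Tuple[float, float],
                     expensive_check: Callable[[Pose2D], bool],
                     rng: random.Random,
                     max_tries: int = 200,
                     radius: float = 1.2) -> Optional[Pose2D]:
    """Sample base poses near the target; run the costly check only on
    candidates that already pass the cheap reachability proxy."""
    for _ in range(max_tries):
        # Uniform sample in a disc of the given radius around the target.
        r = radius * math.sqrt(rng.random())
        theta = rng.uniform(-math.pi, math.pi)
        x = target_xy[0] + r * math.cos(theta)
        y = target_xy[1] + r * math.sin(theta)
        yaw = math.atan2(target_xy[1] - y, target_xy[0] - x)  # face the target
        candidate = (x, y, yaw)
        if not within_reach(candidate, target_xy):
            continue  # fast rejection, planner is never called
        if expensive_check(candidate):
            return candidate
    return None  # no feasible pose found within the try budget
```

In a real pipeline the callback would wrap the IK solver or motion planner; ordering the checks from cheapest to most expensive keeps most rejected samples from ever reaching the planner.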

Figure 2: MoMaGen pipeline: scene randomization, pose transformation, base/camera sampling under constraints, trajectory planning, and task-space control for replay.
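
The pose transformation step in the pipeline can be illustrated with the object-centric retargeting commonly used in X-Gen style methods: end-effector poses from the source demonstration are expressed relative to the source object pose and then re-anchored to the newly sampled object pose. The sketch below is a planar SE(2) simplification for readability (an assumption; a real system would use SE(3) transforms), and the function names are hypothetical.

```python
import math
from typing import List, Tuple

SE2 = Tuple[float, float, float]  # planar pose (x, y, theta)


def compose(a: SE2, b: SE2) -> SE2:
    """Return the pose a * b (apply b in the frame of a)."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)


def inverse(a: SE2) -> SE2:
    """Return the inverse pose, so that compose(a, inverse(a)) is identity."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), -(-s * ax + c * ay), -at)


def retarget(src_ee: List[SE2], src_obj: SE2, new_obj: SE2) -> List[SE2]:
    """Keep each end-effector pose fixed relative to the object:
    new_ee = new_obj * inverse(src_obj) * src_ee."""
    obj_inv = inverse(src_obj)
    return [compose(new_obj, compose(obj_inv, ee)) for ee in src_ee]
```

For example, a source end-effector pose 0.5 m in front of the source object stays 0.5 m in front of the object after the object is moved and rotated.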

Technical Innovations

MoMaGen introduces several key innovations for bimanual mobile manipulation:

- Joint full-body planning: Simultaneous optimization of base, torso, head camera, and both arms, rather than isolated end-effector trajectories.

- Visibility guarantees: Enforces object visibility before manipulation (hard constraint) and encourages visibility during navigation (soft constraint), critical for visuomotor policy learning.

- Expanded workspace coverage: Samples base poses near randomized object locations, enabling manipulation across the entire environment, not just regions covered by the source demo.

- Efficient generation: Prioritizes fast IK checks and decomposes configuration space for scalable sampling, leveraging GPU-accelerated planners.
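
A visibility constraint of the kind described above can be approximated with a simple view-cone test. The sketch below is an assumption-laden illustration, not the paper's check: it treats the head camera as a cone with a hypothetical half field of view and maximum range, and it ignores occlusion by other objects, which a real check would need to handle (for example via rendering or ray casting).

```python
import math
from typing import Sequence


def is_visible(cam_pos: Sequence[float],
               cam_forward: Sequence[float],
               obj_pos: Sequence[float],
               half_fov_deg: float = 30.0,
               max_range: float = 3.0) -> bool:
    """Return True if obj_pos lies inside the camera's view cone.

    half_fov_deg and max_range are illustrative values, and occlusion
    is deliberately not modeled here.
    """
    to_obj = [o - c for o, c in zip(obj_pos, cam_pos)]
    dist = math.sqrt(sum(v * v for v in to_obj))
    fwd_norm = math.sqrt(sum(v * v for v in cam_forward))
    if dist == 0.0 or fwd_norm == 0.0 or dist > max_range:
        return False
    # Cosine of the angle between the viewing direction and the object.
    cos_angle = sum(a * b for a, b in zip(to_obj, cam_forward)) / (dist * fwd_norm)
    return cos_angle >= math.cos(math.radians(half_fov_deg))
```

Used as a hard constraint, a sampled torso and head configuration would be rejected when this returns False at the start of manipulation; used as a soft constraint, the fraction of navigation steps where it returns True could enter a cost term.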

Experimental Evaluation

Task Suite and Randomization

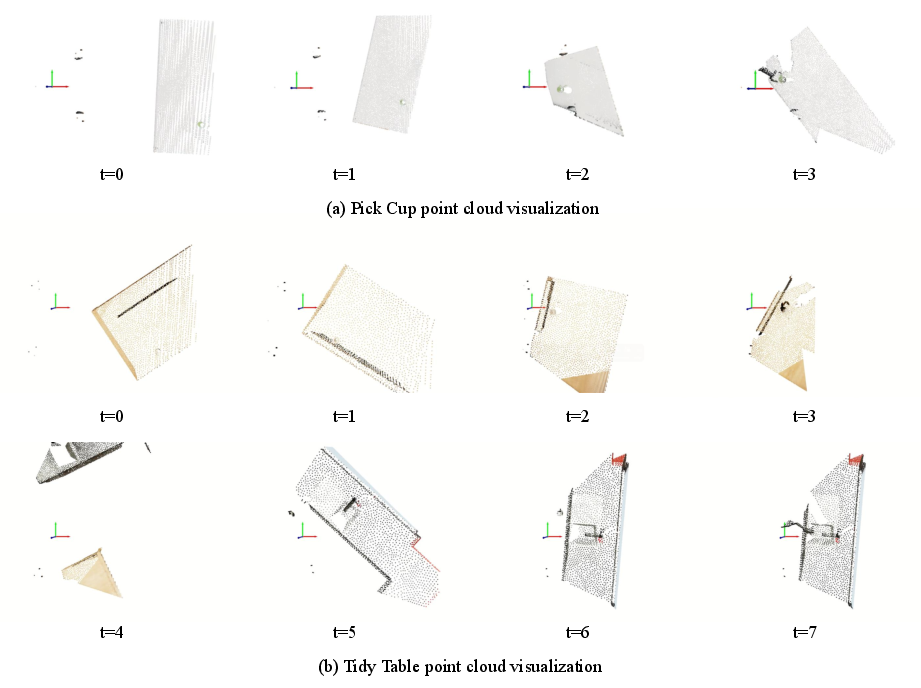

MoMaGen is evaluated on four household tasks in OmniGibson: Pick Cup, Tidy Table, Put Dishes Away, and Clean Frying Pan. Each task requires long-range navigation, sequential and coordinated bimanual manipulation, and contact-rich interactions.

Figure 3: Multi-step tasks require navigation, bimanual pick-and-place, and contact-rich motion.

Three domain randomization levels are defined:

- D0: Small object pose perturbations.

- D1: Unrestricted object placement/orientation on furniture.

- D2: D1 plus additional obstacles/distractors for navigation and manipulation.
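
The three levels can be thought of as progressively wider sampling distributions. The sketch below is one hypothetical way to encode them; the noise magnitudes, the rectangular furniture surface, and the obstacle counts are illustrative assumptions and are not values from the paper.

```python
import math
import random
from enum import Enum
from typing import Tuple


class Level(Enum):
    D0 = "D0"  # small perturbations around the source pose
    D1 = "D1"  # anywhere on the furniture surface, any yaw
    D2 = "D2"  # D1 plus extra obstacles/distractors


def sample_object_pose(level: Level,
                       nominal: Tuple[float, float, float],
                       surface: Tuple[Tuple[float, float], Tuple[float, float]],
                       rng: random.Random) -> Tuple[float, float, float]:
    """Sample (x, y, yaw) for an object under the given randomization level."""
    x0, y0, yaw0 = nominal
    if level is Level.D0:
        # Assumed perturbation sizes: +/- 5 cm and +/- 15 degrees.
        return (x0 + rng.uniform(-0.05, 0.05),
                y0 + rng.uniform(-0.05, 0.05),
                yaw0 + rng.uniform(-math.radians(15), math.radians(15)))
    (xmin, xmax), (ymin, ymax) = surface
    return (rng.uniform(xmin, xmax),
            rng.uniform(ymin, ymax),
            rng.uniform(-math.pi, math.pi))


def sample_num_obstacles(level: Level, rng: random.Random) -> int:
    """Only D2 adds obstacles; the 1-3 range is an assumption."""
    return rng.randint(1, 3) if level is Level.D2 else 0
```

Because D1 and D2 can place objects far from where the source demonstration manipulated them, replaying the source base trajectory is no longer sufficient, which is what motivates sampling new base poses.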

Data Diversity and Success Rates

MoMaGen achieves substantially higher data diversity than baselines, sampling a wide range of base, end-effector, and joint configurations, especially under aggressive randomization (D1/D2). Baselines fail to generate valid data when objects are out of reach of replayed base trajectories.

Figure 4: MoMaGen generates diverse base, end-effector, and joint trajectories, covering broader state/action spaces than SkillMimicGen.

MoMaGen maintains high data generation success rates (up to 86% for Pick Cup D0, 80% for Tidy Table D0), with throughput decreasing as randomization increases. Hard visibility constraints improve success rates for complex tasks by ensuring suitable torso/camera configurations.

Object Visibility Analysis

Visibility of task-relevant objects is critical for training visuomotor policies. MoMaGen's hard and soft constraints yield >75% visibility even under D1/D2 randomization, outperforming ablations and baselines.
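
One plausible way to compute a visibility percentage like the one quoted above is the fraction of recorded frames in which the task-relevant object is in view, averaged over demonstrations. This is an assumed definition for illustration only; the paper's exact metric may differ, and the function names are hypothetical.

```python
from typing import Sequence


def visibility_rate(visible_flags: Sequence[bool]) -> float:
    """Fraction of frames in one demonstration where the object is visible."""
    if not visible_flags:
        raise ValueError("demonstration has no frames")
    return sum(visible_flags) / len(visible_flags)


def dataset_visibility(demos: Sequence[Sequence[bool]]) -> float:
    """Mean per-demonstration visibility rate over a dataset."""
    if not demos:
        raise ValueError("dataset has no demonstrations")
    return sum(visibility_rate(d) for d in demos) / len(demos)
```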

Cross-Embodiment Transfer

MoMaGen demonstrates cross-embodiment capability by generating valid demonstrations for a TIAGo robot from a Galaxea R1 source demo, relying on task-space trajectory replay that is agnostic to robot-specific kinematics.

Policy Learning and Sim-to-Real Transfer

Imitation learning policies (WB-VIMA, π0) trained on MoMaGen-generated data outperform those trained on baseline data, especially for tasks requiring diverse navigation and manipulation. Visibility constraints during data generation directly translate to higher policy success rates. Scaling the number of synthetic demonstrations improves policy performance, particularly under high randomization.

Figure 5: Real-world Pick Cup setup and WB-VIMA validation loss curve, showing faster convergence with simulation pretraining.

Sim-to-real experiments show that pretraining on MoMaGen data followed by fine-tuning on limited real-world demonstrations yields nontrivial success rates (10% for WB-VIMA, 60% for π0), whereas training on real data alone fails to generalize. This demonstrates the utility of diverse synthetic data for efficient policy adaptation in low-data regimes.

Implementation Considerations

Implications and Future Directions

MoMaGen provides a unified, constraint-driven framework for automated demonstration generation in complex mobile manipulation domains. Its explicit split between hard and soft constraints generalizes prior X-Gen methods and enables scalable synthesis of diverse, valid data for imitation learning. The reported gains in policy learning and sim-to-real transfer point to its practical utility for real-world deployment.

Future research directions include:

- Automated scene understanding: Integrating perception models for real-world object pose estimation.

- Whole-body manipulation: Extending the framework to tasks with simultaneous navigation and manipulation.

- Cross-embodiment generalization: Developing more robust transfer mechanisms for heterogeneous robot platforms.

- Resource-efficient planning: Optimizing the data generation pipeline for reduced computational overhead.

Conclusion

MoMaGen advances the state of automated data generation for multi-step bimanual mobile manipulation by formulating demonstration synthesis as a constrained optimization problem. Its innovations in reachability, visibility, and full-body planning yield diverse, high-quality data that directly improve imitation learning outcomes and facilitate efficient sim-to-real transfer. While limitations remain in real-world scene knowledge and compute requirements, MoMaGen establishes a principled foundation for scalable robot learning in complex environments.