- The paper introduces a novel framework using Shapley values to assign contribution scores to each query atom in complex query answering.

- The paper employs a neurosymbolic approach that combines neural predictions with symbolic execution to handle multi-hop reasoning over incomplete knowledge graphs.

- The paper demonstrates significant improvements in necessary and sufficient explanations on benchmark datasets FB15k-237 and NELL995, outpacing baseline methods.

CQD-SHAP: Explainable Complex Query Answering via Shapley Values

CQD-SHAP introduces a novel framework for Complex Query Answering (CQA) over Knowledge Graphs (KGs) by leveraging Shapley values from cooperative game theory. This approach attempts to provide an explainable model for CQA by evaluating the contribution of each constituent part of a query to the final answer ranking, aiming to bridge the gap between neural and symbolic reasoning methodologies.

Introduction to the Problem Space

In real-world scenarios, KGs are often incomplete, which limits how well traditional symbolic methods can retrieve all relevant answers to complex queries. These queries frequently require multi-hop reasoning and may combine elements via logical operators such as conjunctions and disjunctions. Neurosymbolic approaches such as Continuous Query Decomposition (CQD) aim to address these challenges by allowing reasoning over incomplete graphs.

However, a significant drawback of current neural query answering systems is their opacity: they do not explain how a specific answer ranking is derived. CQD-SHAP addresses this by employing Shapley values to reveal how each part of the query influences the rank of an answer, thereby providing insight into the reasoning process of the neural model.

Methodology

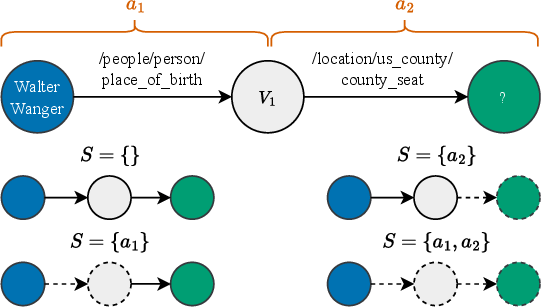

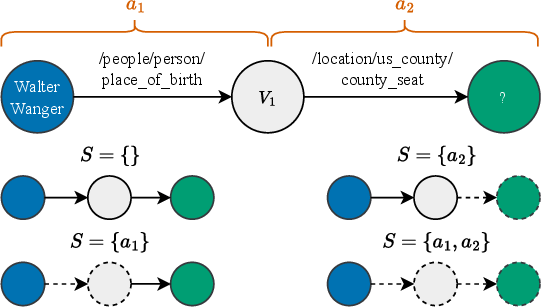

CQD-SHAP uses Shapley values, originally from cooperative game theory, to quantify how much each individual component (atom) of a query contributes to the neural model's ranking of an answer. To do so, it defines a cooperative game where:

- Players: The query atoms.

- Coalitions: Subsets of query atoms. Atoms inside the coalition are executed neurally, and the remaining atoms are executed symbolically.

- Value Function: Computes the rank improvement of an answer when processing is switched from symbolic to neural for a subset of query atoms.
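The game defined above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the atom names `a1` and `a2`, the `toy_rank` table, and the helper `rank_improvement` are all hypothetical, and the ranks are invented numbers chosen only to make the arithmetic easy to follow. The sketch enumerates every coalition exactly, which is feasible because queries contain only a handful of atoms.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Sequence


def shapley_values(
    atoms: Sequence[str],
    value: Callable[[FrozenSet[str]], float],
) -> Dict[str, float]:
    """Exact Shapley values; value(S) is the payoff when the atoms in S run neurally."""
    n = len(atoms)
    phi: Dict[str, float] = {}
    for atom in atoms:
        others = [a for a in atoms if a != atom]
        total = 0.0
        for k in range(n):
            # Standard Shapley weight for coalitions of size k
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Marginal contribution of switching `atom` to neural execution
                total += weight * (value(s | {atom}) - value(s))
        phi[atom] = total
    return phi


# Hypothetical ranks of one target answer for a two-atom query (lower is better).
# The key is the set of atoms executed neurally; all other atoms run symbolically.
toy_rank = {
    frozenset(): 10,
    frozenset({"a1"}): 4,
    frozenset({"a2"}): 8,
    frozenset({"a1", "a2"}): 1,
}


def rank_improvement(coalition: FrozenSet[str]) -> float:
    """Assumed value function: rank gain relative to fully symbolic execution."""
    return toy_rank[frozenset()] - toy_rank[coalition]


phi = shapley_values(["a1", "a2"], rank_improvement)
print(phi)  # a1 receives 6.5 and a2 receives 2.5 for these toy ranks
```

With these toy numbers the two scores add up to 9, the total rank improvement from fully symbolic to fully neural execution, which is the efficiency property of Shapley values.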

Neural and Symbolic Execution

The framework distinguishes between symbolic execution, where results are retrieved through direct graph traversal, and neural execution, where machine learning models make predictions about the links in the KG.

Query answering follows a neurosymbolic approach: conjunctions are modeled with t-norms and disjunctions with t-conorms, which merge the scores of individual link predictions into a final score that reflects the likelihood of the answer being correct.
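To make the score merging concrete, here is a small sketch of two common t-norm and t-conorm pairs (product and Gödel). It assumes link-prediction scores have already been normalized to the interval [0, 1]; the function names and the two example scores are hypothetical and are not taken from the paper or from any CQD codebase.

```python
def product_t_norm(a: float, b: float) -> float:
    """Conjunction under the product t-norm."""
    return a * b


def product_t_conorm(a: float, b: float) -> float:
    """Disjunction under the probabilistic sum, the dual of the product t-norm."""
    return a + b - a * b


def godel_t_norm(a: float, b: float) -> float:
    """Conjunction under the Gödel (minimum) t-norm."""
    return min(a, b)


def godel_t_conorm(a: float, b: float) -> float:
    """Disjunction under the Gödel (maximum) t-conorm."""
    return max(a, b)


# Hypothetical normalized scores of two atoms for one candidate answer
score_atom_1 = 0.9
score_atom_2 = 0.5

conj_score = product_t_norm(score_atom_1, score_atom_2)    # about 0.45
disj_score = product_t_conorm(score_atom_1, score_atom_2)  # about 0.95
```

A conjunction is only as strong as its weakest atom allows, while a disjunction can be satisfied by either atom, so the conjunctive score is never higher than the disjunctive one for the same inputs.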

Evaluation Strategy

CQD-SHAP proposes necessary and sufficient explanation scenarios for evaluation:

- Necessary Explanations: If the execution of the most important atom (as per CQD-SHAP) is changed from neural to symbolic and the answer rank deteriorates, the atom is deemed necessary for the neural model's success.

- Sufficient Explanations: If the execution of the most important atom is changed from symbolic to neural and the answer's rank improves, the atom's neural execution is deemed sufficient.
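The two checks above can be written as simple predicates over a rank function. This sketch makes assumptions that the text does not spell out: the necessary check starts from fully neural execution, the sufficient check starts from fully symbolic execution, and a lower rank is better. The names `is_necessary`, `is_sufficient`, and `toy_rank` are hypothetical, and the ranks are invented for illustration.

```python
from typing import Callable, FrozenSet, Sequence

RankFn = Callable[[FrozenSet[str]], int]  # maps the set of neural atoms to a rank


def is_necessary(atoms: Sequence[str], top_atom: str, rank: RankFn) -> bool:
    """Assumed baseline: all atoms neural. Switch top_atom to symbolic;
    the explanation counts as necessary if the rank gets worse (larger)."""
    all_neural = frozenset(atoms)
    return rank(all_neural - {top_atom}) > rank(all_neural)


def is_sufficient(top_atom: str, rank: RankFn) -> bool:
    """Assumed baseline: all atoms symbolic. Switch top_atom to neural;
    the explanation counts as sufficient if the rank gets better (smaller)."""
    return rank(frozenset({top_atom})) < rank(frozenset())


# Hypothetical ranks of one answer, keyed by the set of atoms executed neurally
toy_rank = {
    frozenset(): 10,
    frozenset({"a1"}): 4,
    frozenset({"a2"}): 8,
    frozenset({"a1", "a2"}): 1,
}

necessary = is_necessary(["a1", "a2"], "a1", toy_rank.__getitem__)
sufficient = is_sufficient("a1", toy_rank.__getitem__)
print(necessary, sufficient)
```

In this toy setting, making `a1` symbolic moves the answer from rank 1 to rank 8, and making only `a1` neural moves it from rank 10 to rank 4, so `a1` passes both checks.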

Figure 1: Illustration of all possible partial queries $Q_S$ for a query consisting of two sequential projections ($2p$).
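For a two-atom query such as $2p$, the standard Shapley formula reduces to an average over two orderings. The notation here is an assumption made for illustration: $N$ is the set of query atoms with $n = |N|$, $S$ is the coalition of atoms executed neurally, and $v(S)$ is the value of the corresponding partial query.

$$
\phi_i(v) = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n - |S| - 1)!}{n!} \bigl[ v(S \cup \{i\}) - v(S) \bigr]
$$

With $N = \{a_1, a_2\}$, the four coalitions are $\emptyset$, $\{a_1\}$, $\{a_2\}$, and $\{a_1, a_2\}$, and the score of the first atom becomes:

$$
\phi_{a_1}(v) = \tfrac{1}{2} \bigl[ v(\{a_1\}) - v(\emptyset) \bigr] + \tfrac{1}{2} \bigl[ v(\{a_1, a_2\}) - v(\{a_2\}) \bigr]
$$

The expression for $\phi_{a_2}$ is symmetric, and the two scores sum to $v(\{a_1, a_2\}) - v(\emptyset)$.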

Quantitative Results

The framework was evaluated on the benchmark datasets FB15k-237 and NELL995. For most query types, CQD-SHAP outperformed baseline approaches on both necessary and sufficient explanations, which supports the use of Shapley values for explaining CQA over KGs.

Among the insights gained, atom-level explanations were found to align closely with human intuition about how certain answers are produced, which is consistent with the theoretical view of Shapley values as a fair attribution method.

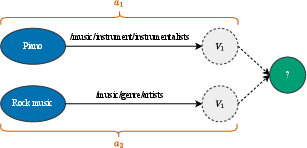

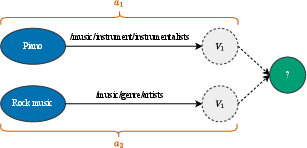

Figure 2: An example of a 2i query in the FB15k-237 test data, illustrating its structure and the role of its components in delivering answers.

Future Directions

Future work may extend CQD-SHAP by incorporating more sophisticated players beyond query atoms, potentially considering entire sub-graphs or paths as players. There is also potential in generalizing Shapley-based frameworks to capture global model behavior by aggregating local explanations. Additionally, enhancing datasets to reflect real-world KG limitations more accurately could improve the robustness and applicability of these methods.

Conclusion

CQD-SHAP is a step toward better transparency in CQA models. By grounding the contribution of each query atom to the model's prediction in cooperative game theory and Shapley values, CQD-SHAP offers a principled way to explain neural query answering and helps practitioners deploy these models with more confidence in real-world applications.