- The paper demonstrates that targeted prompt engineering significantly reduces step and turn counts in collaborative embodied AI tasks.

- It combines LLM-driven Planning and Communication modules with a multimodal interface to improve real-time agent dialogue and task execution.

- Efficiency improvements across LLMs highlight that model architecture and tailored prompts can outweigh parameter count in collaborative scenarios.

Improving Cooperation in Collaborative Embodied AI: Prompt Engineering and System Integration

Introduction

This paper presents a systematic investigation into enhancing cooperation among collaborative embodied agents powered by LLMs. Building on the CoELA framework, the authors focus on prompt engineering and model selection to optimize multi-agent communication, planning, and task execution in shared virtual environments. The work also introduces a multimodal interface for real-time agent dialogue visualization and speech synthesis, facilitating both development and demonstration.

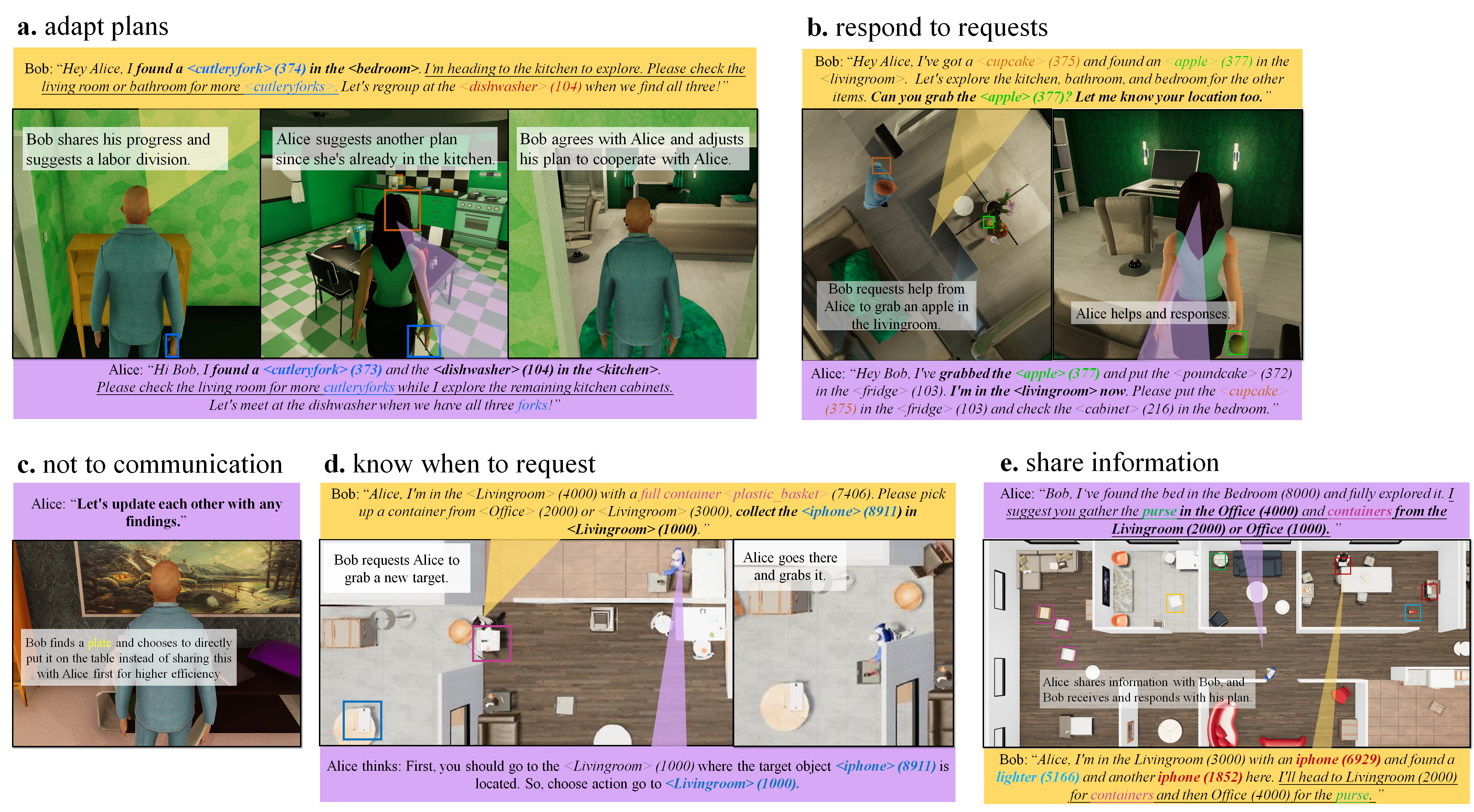

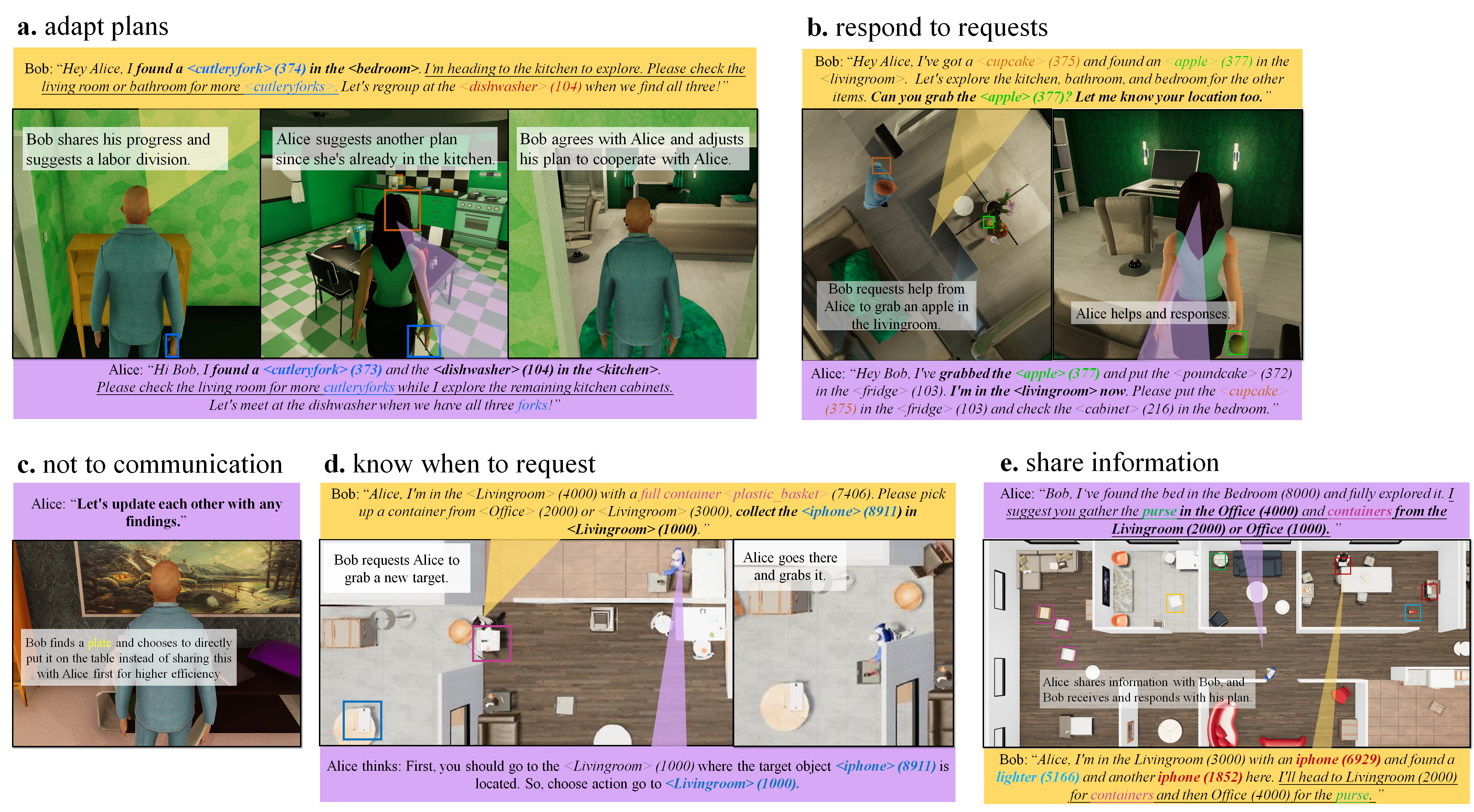

Figure 1: Agents collaborating in a simulation environment (CoELA), demonstrating decentralized multi-agent coordination in a 3D space.

Collaborative Embodied AI (CEAI) integrates embodied reasoning, multi-agent collaboration, and natural language communication. The CoELA architecture is modular, comprising Perception, Memory, Planning, Communication, and Execution modules. Prior frameworks such as CAMEL, Voyager, Reflexion, and Agent-S have advanced agentic reasoning and planning, but often lack robust multi-agent coordination in physically grounded environments. CoELA distinguishes itself by supporting decentralized agent cooperation, leveraging LLMs for both high-level planning and low-level action selection.

Memory management is critical for maintaining context in extended multi-turn interactions. CoELA employs a tripartite memory system—semantic, episodic, and procedural—drawing inspiration from cognitive architectures like Soar and ACT-R. This enables agents to track world knowledge, interaction history, and learned behaviors, supporting adaptive decision-making.
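As a rough illustration of the three memory types described above, the following minimal sketch keeps each in its own container and renders a short text summary that could be placed in a prompt. The class name, field names, and the 50-event cap are hypothetical and are not taken from CoELA's code.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical three-part memory; names and sizes are illustrative only."""

    # Semantic memory: world knowledge, e.g. where an object was last seen.
    semantic: dict = field(default_factory=dict)
    # Episodic memory: bounded history of actions and messages (cap is assumed).
    episodic: deque = field(default_factory=lambda: deque(maxlen=50))
    # Procedural memory: named behaviours mapped to action sequences.
    procedural: dict = field(default_factory=dict)

    def observe(self, obj: str, location: str) -> None:
        """Store or update the last known location of an object."""
        self.semantic[obj] = location

    def record(self, event: str) -> None:
        """Append an event; the oldest event is dropped once the cap is hit."""
        self.episodic.append(event)

    def context(self, last_n: int = 5) -> str:
        """Render a compact summary suitable for inclusion in an LLM prompt."""
        known = ", ".join(
            f"{obj} in {loc}" for obj, loc in sorted(self.semantic.items())
        )
        recent = "; ".join(list(self.episodic)[-last_n:])
        return (
            f"Known objects: {known or 'none'}\n"
            f"Recent events: {recent or 'none'}"
        )
```

Bounding the episodic store is one simple way to keep prompts short over long multi-turn episodes; the actual system may summarize or select history differently.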

System Architecture and Ollama Integration

The enhanced CoELA system centers on the Planning and Communication modules, both LLM-driven. The Communication module orchestrates inter-agent information exchange, while the Planning module generates structured action plans based on current state, objectives, and agent capabilities.

Ollama integration enables flexible deployment of various LLMs (Llama 3.1, DeepSeek r1, Mistral, Gemma3) in a local runtime, supporting quantized models for reduced memory and computational overhead. This design allows rapid experimentation with model architectures and prompt strategies without altering the agent pipeline.
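A minimal sketch of how a module might call a locally served model through Ollama's HTTP API, assuming the server runs on its default port. The helper names `build_request` and `generate`, the temperature default, and the timeout are assumptions for illustration; the paper's actual integration code is not shown in the source.

```python
import json
import urllib.request

# Default endpoint of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, temperature: float = 0.7) -> dict:
    """Build the JSON payload for a single non-streaming completion."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a token stream
        "options": {"temperature": temperature},
    }


def generate(model: str, prompt: str, url: str = OLLAMA_URL,
             timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    body = json.dumps(build_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

Because the model is just a string argument, swapping models (for example, a call such as `generate("gemma3:4b", prompt)`, where the tag is an assumed example and must match a model pulled locally) requires no change to the rest of the agent pipeline.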

Prompt Engineering: Strategies and Modules

Prompt engineering is the core methodology for optimizing agent collaboration. The authors systematically test and refine prompts across three modules:

- Planning Prompt: Baseline prompts are augmented with explicit instructions and structured reasoning steps, guiding LLMs to select goal-focused, efficient actions.

- Communication Prompt: Variants include instruction removal (to reduce verbosity), one-shot and multi-shot examples (to set message tone and structure), and hybrid approaches for concise, direct inter-agent messaging.

- Action Prompt: One-shot examples and explicit instructions are used to ensure reliable, unambiguous action selection.

These strategies are evaluated across four LLMs, with model sizes capped at 8B parameters to ensure single-GPU feasibility.
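The communication prompt variants listed above (instruction removal, one-shot, multi-shot, hybrid) can be sketched as a single builder whose optional parts are switched on or off. The function name, field labels, and wording are hypothetical; the paper's actual prompt text is not reproduced here.

```python
def build_communication_prompt(goal, progress, dialogue,
                               examples=(), instructions=""):
    """Assemble a communication prompt from optional parts.

    instructions=""      -> the "instruction removal" variant
    one example          -> one-shot
    several examples     -> multi-shot
    both parts supplied  -> a hybrid variant
    """
    parts = []
    if instructions:
        parts.append(instructions.strip())
    # Examples show the model the desired tone and length of a message.
    for index, example in enumerate(examples, start=1):
        parts.append(f"Example message {index}: {example}")
    parts.append(f"Goal: {goal}")
    parts.append(f"Progress: {progress}")
    parts.append("Dialogue so far:")
    parts.extend(f"  {line}" for line in dialogue)
    parts.append("Write one short message to your teammate:")
    return "\n".join(parts)


# Illustrative inputs only; not taken from the paper's experiments.
one_shot = build_communication_prompt(
    goal="Bring two apples to the table",
    progress="One apple delivered",
    dialogue=["Alice: I found an apple in the kitchen."],
    examples=["I'll search the bedroom; can you take the living room?"],
)
```

Keeping the variants in one function makes it straightforward to run the same episodes with each configuration and compare step and turn counts.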

Multimodal Interface: TTS and Chat GUI

A real-time chat GUI with text-to-speech (TTS) integration is developed to visualize and audibly present agent dialogues. This interface leverages gTTS and pyttsx3 for speech synthesis, with distinct voices assigned to agents for clarity. The GUI, implemented in tkinter, supports both live and logged conversations, aiding system development and user understanding.
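A minimal sketch of per-agent voice assignment with pyttsx3, assuming at least one voice is installed on the system. The helper names `assign_voices` and `speak` are hypothetical, and the round-robin policy is an assumption rather than the authors' method; pyttsx3 is imported lazily so the mapping logic can be used without the package.

```python
from itertools import cycle


def assign_voices(agents, voice_ids):
    """Map each agent to a voice ID, cycling if agents outnumber voices."""
    if not voice_ids:
        raise ValueError("at least one voice ID is required")
    pool = cycle(voice_ids)
    return {agent: next(pool) for agent in agents}


def speak(agent, text, voice_map):
    """Speak one message in the agent's assigned voice (blocking call)."""
    import pyttsx3  # third-party package, imported only when speech is needed

    engine = pyttsx3.init()
    engine.setProperty("voice", voice_map[agent])
    engine.say(text)
    engine.runAndWait()
```

In practice the voice IDs would come from the engine itself, for example `[v.id for v in pyttsx3.init().getProperty("voices")]`, and the resulting map would be built once and reused for every message shown in the chat window.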

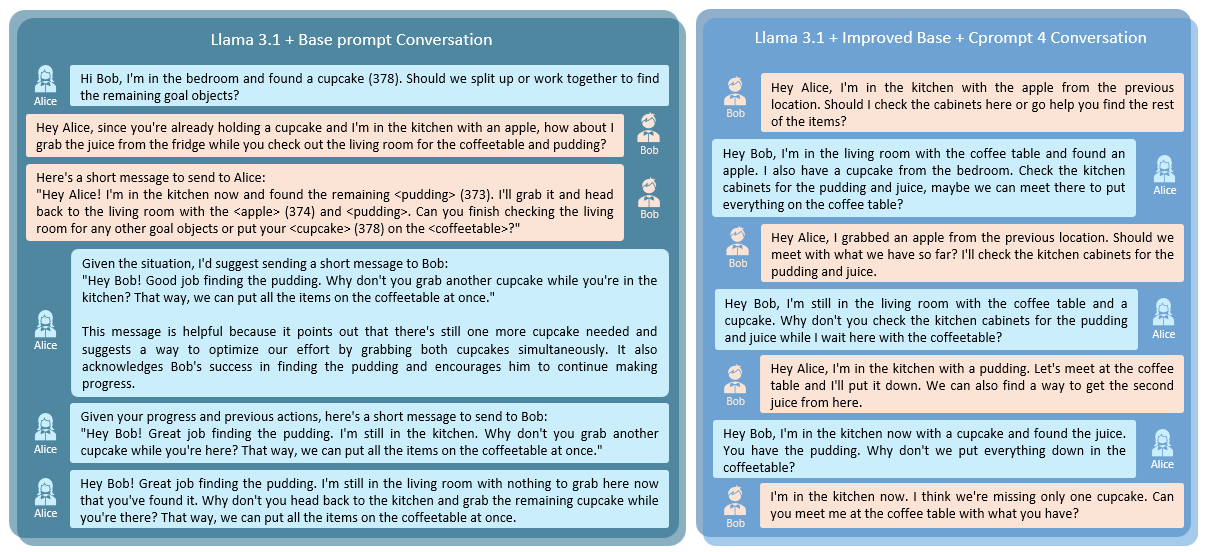


Figure 2: Chat visualization GUI with TTS, displaying real-time dialogue between agents Alice and Bob.

Experimental Evaluation

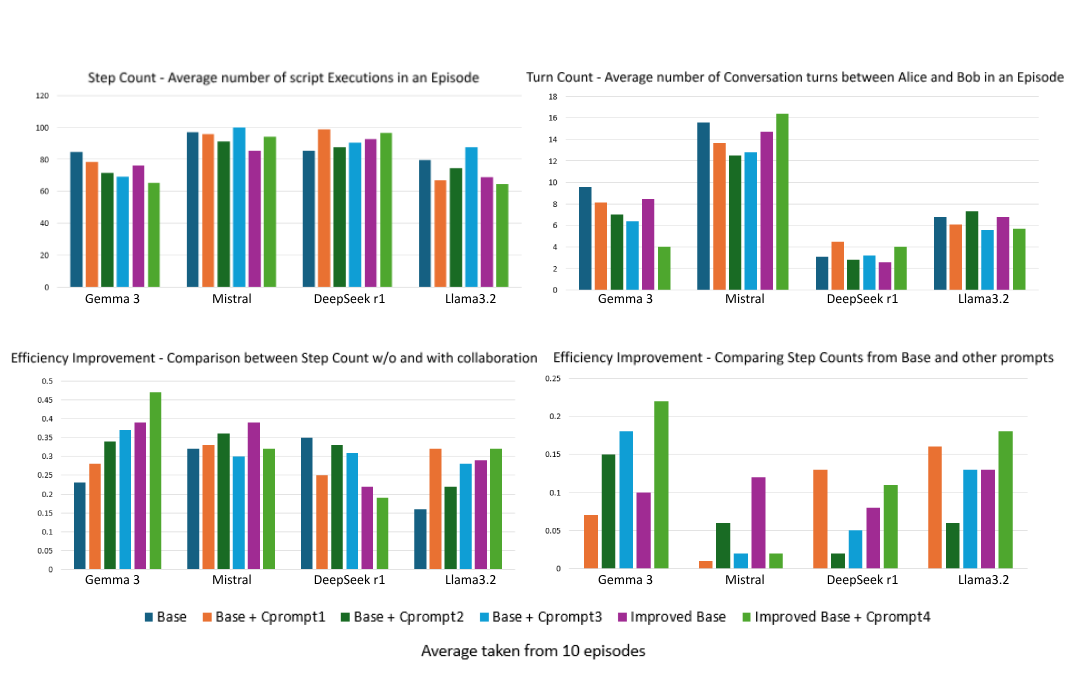

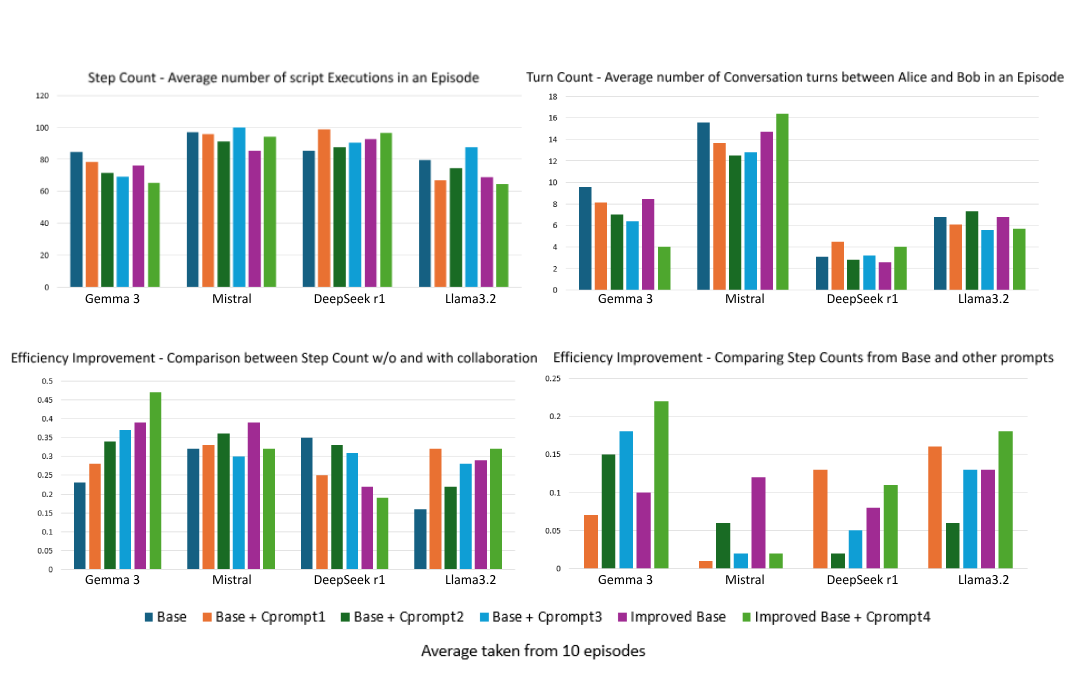

Quantitative and qualitative evaluations are conducted using step count (number of script executions per episode) and turn count (number of dialogue turns per episode) across 10 episodes for each prompt/model combination. Efficiency improvements are measured by comparing collaborative runs to single-agent baselines and by assessing the impact of new prompt strategies.

Figure 3: Consolidated results from test runs: (a) Step Count, (b) Turn Count, (c) Efficiency Improvement 1 (collaboration vs. single agent), (d) Efficiency Improvement 2 (new prompts vs. base).
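The source does not state the exact formula behind the two efficiency improvement figures. One plausible reading, sketched below, is the relative reduction in mean step count between a baseline and a comparison condition, averaged over the episodes of each run. The function name and the formula are assumptions, and the numbers in the example are placeholders, not results from the paper.

```python
from statistics import mean


def relative_improvement(baseline_steps, candidate_steps):
    """Percentage reduction in mean step count relative to the baseline.

    Assumed definition: 100 * (mean(baseline) - mean(candidate)) / mean(baseline).
    Positive values mean the candidate condition needed fewer steps.
    """
    base = mean(baseline_steps)
    cand = mean(candidate_steps)
    if base == 0:
        raise ValueError("baseline mean must be non-zero")
    return 100.0 * (base - cand) / base


# Placeholder per-episode step counts (NOT data from the paper).
single_agent = [100, 100, 100, 100]
collaborative = [80, 80, 80, 80]
improvement = relative_improvement(single_agent, collaborative)
```

Under this reading, the same function would cover both comparisons: single agent versus collaboration, and base prompts versus new prompts.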

Key Results

Discussion

The empirical findings underscore the critical role of prompt engineering in multi-agent embodied AI. Explicit instructions and example-driven prompts substantially reduce redundant actions and verbose outputs, leading to more efficient and interpretable agent collaboration. The observed performance parity between Gemma3 (4B) and Llama 3.1 (8B) challenges the assumption that parameter count is the primary determinant of collaborative efficiency, highlighting the impact of model architecture and training.

The multimodal interface further enhances system transparency, supporting iterative development and facilitating demonstration of agent behaviors. The persistent verbosity in DeepSeek r1 responses suggests that some LLMs may require architectural or fine-tuning interventions beyond prompt engineering to fully align with collaborative task requirements.

Implications and Future Directions

Practically, the results inform the design of scalable, resource-efficient collaborative agent systems suitable for deployment in real-world environments. The demonstrated improvements in communication and planning efficiency have direct implications for robotics, virtual assistants, and human-agent teaming.

Theoretically, the work advances understanding of how LLMs can be harnessed for decentralized, multi-agent cooperation, and how prompt engineering interacts with model architecture to shape emergent behaviors.

Future research will extend evaluation to human-agent interaction and ad-hoc team formation with n≥3 agents, probing agent adaptability to natural human communication and dynamic team structures. This will further elucidate the mechanisms underlying effective collaboration in mixed-agent settings.

Conclusion

This paper demonstrates that targeted prompt engineering, combined with flexible LLM integration and multimodal interfaces, significantly enhances cooperation in collaborative embodied AI systems. The findings reveal that model architecture and training can outweigh parameter count in determining collaborative efficiency, and that prompt strategies must be tailored to individual model behaviors. The work lays a foundation for future research into scalable, interpretable, and human-aligned multi-agent systems.