- The paper introduces a novel AERIS framework that integrates a pixel-level Swin diffusion transformer with SWiPe parallelism to enable exascale Earth system forecasting.

- It leverages advanced architectural innovations such as pre-RMSNorm, SwiGLU activations, and 2D sinusoidal encodings for efficient high-resolution spatiotemporal modeling.

- Experiments show medium-range forecast skill that exceeds IFS ENS and is competitive with GenCast, together with stable seasonal rollouts out to 90 days, highlighting its potential for operational climate applications.

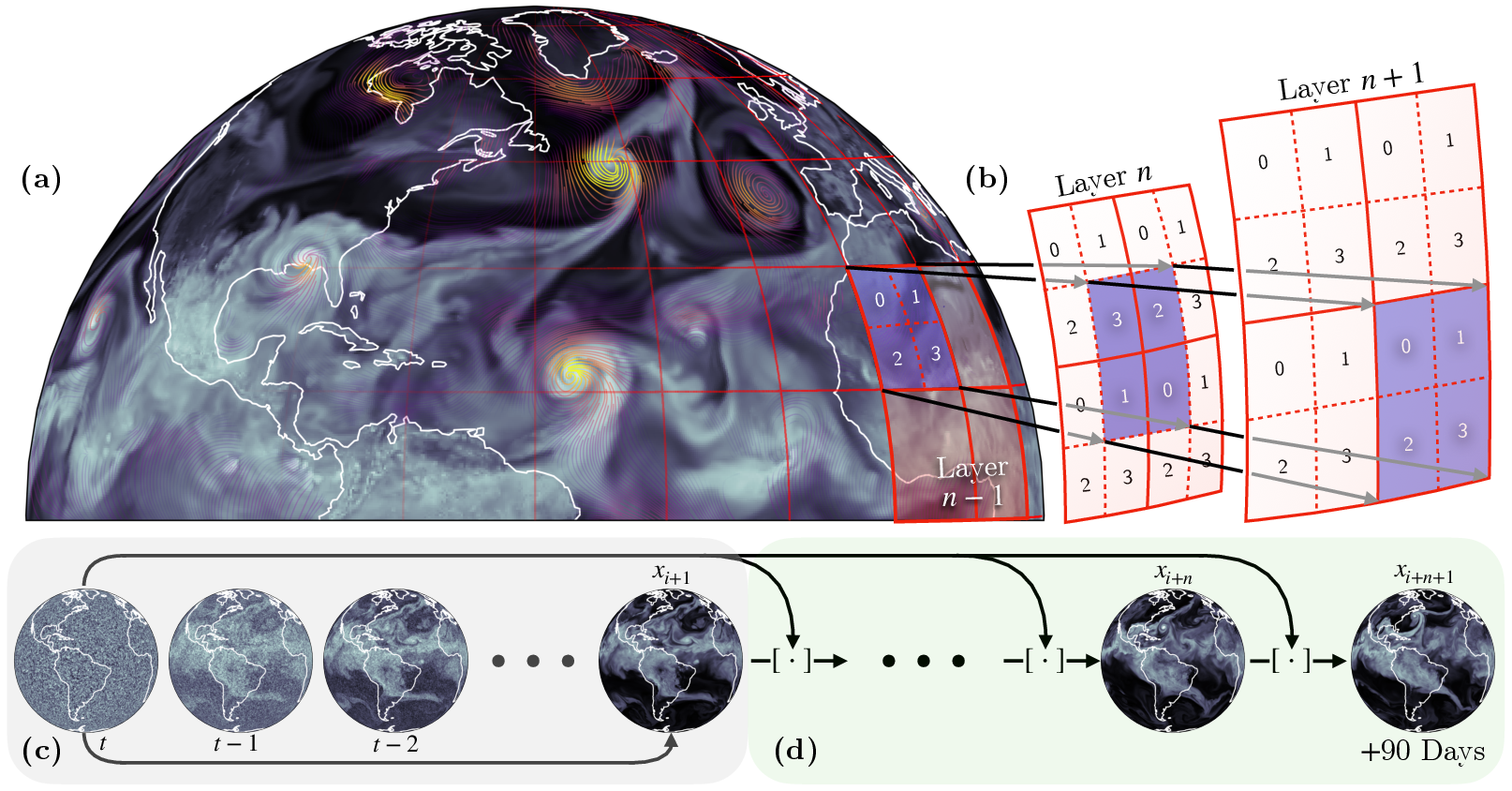

The AERIS framework introduces a billion-parameter, pixel-level Swin diffusion transformer for global weather and climate forecasting, addressing the limitations of both traditional numerical weather prediction (NWP) and prior deep learning approaches. The work is distinguished by its integration of a novel parallelism strategy (SWiPe), enabling efficient scaling to exascale supercomputers, and by demonstrating robust, stable, and skillful forecasts from medium-range to seasonal timescales.

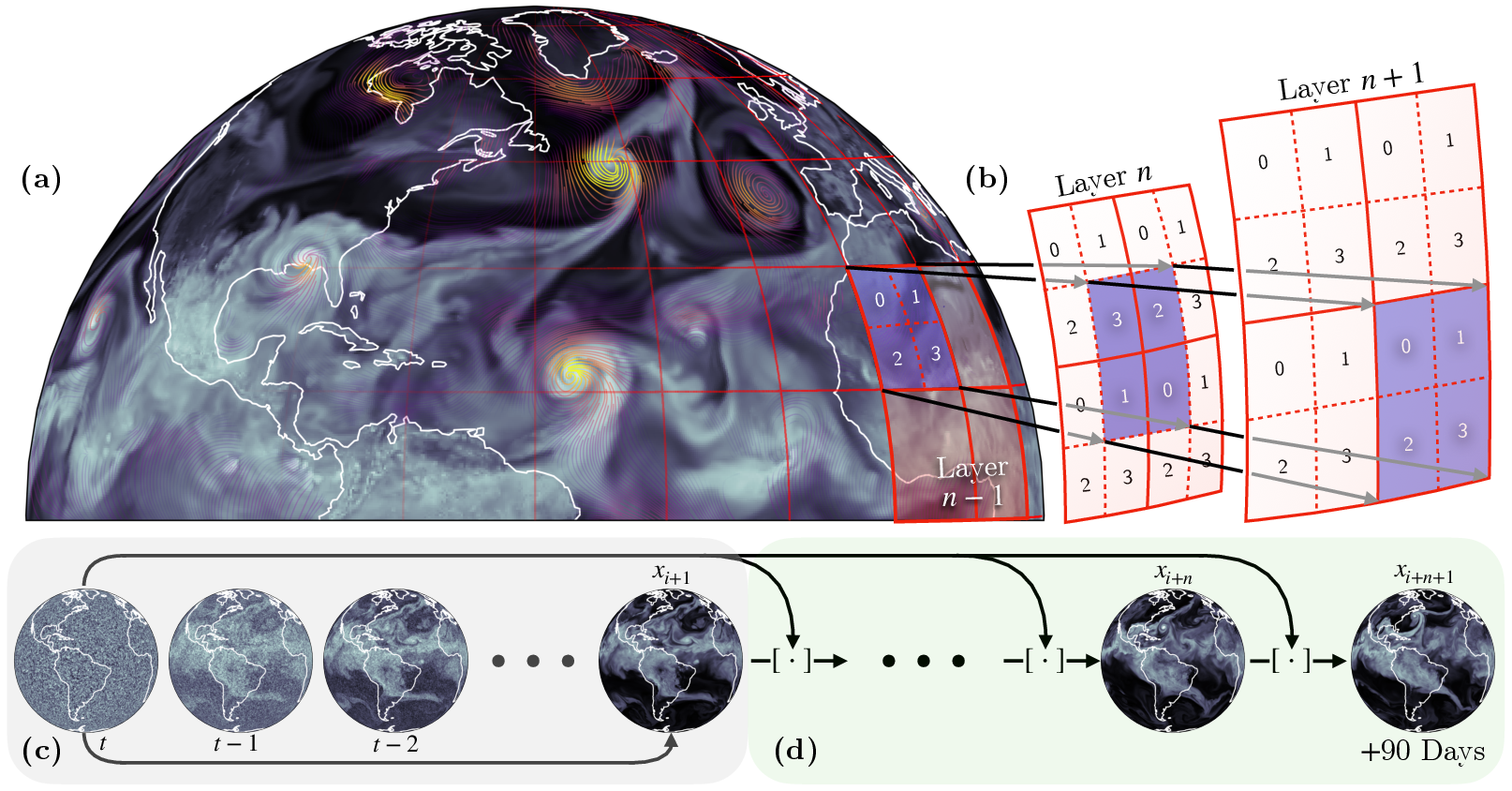

Figure 1: High-level overview of AERIS, including global forecasts, window-based parallelism, diffusion-based ensemble generation, and autoregressive seasonal rollouts.

Motivation and Context

Traditional NWP models, while foundational for operational forecasting, are constrained by parameterization uncertainties, high computational cost, and limited scalability with increasing data and resolution. Recent advances in deep learning, particularly transformer-based architectures, have closed the gap with NWP in medium-range skill, but scaling these models to high resolution and large parameter counts has been hindered by memory and communication bottlenecks. Generative diffusion models, such as GenCast, have improved ensemble calibration and uncertainty quantification but suffer from instability at long lead times and are less amenable to large-scale training.

AERIS is designed to overcome these challenges by combining a non-hierarchical, pixel-level Swin Transformer backbone with a diffusion generative modeling objective, and by introducing SWiPe, a hybrid sequence-window parallelism scheme that enables efficient training and inference at exascale.

Model Architecture and Parallelism Innovations

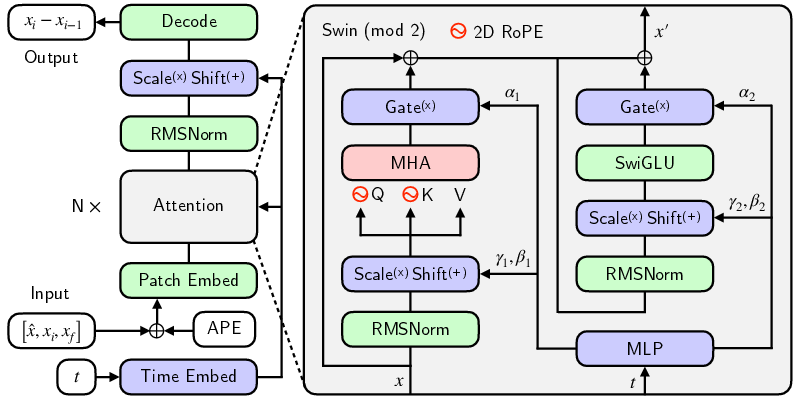

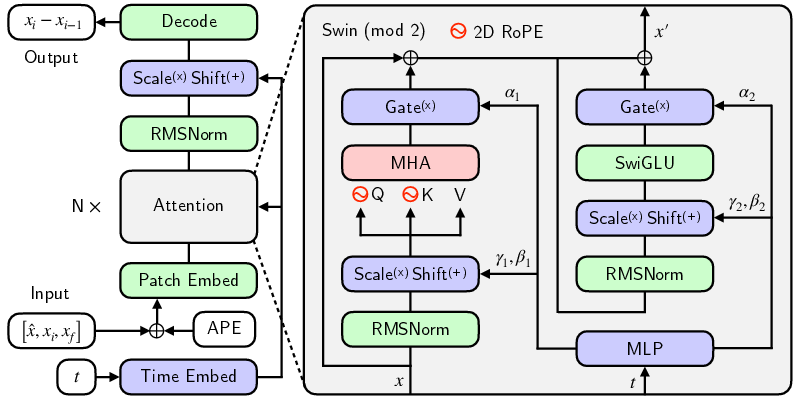

AERIS employs a non-hierarchical Swin Transformer architecture, optimized for spatiotemporal modeling of global atmospheric fields at 0.25° resolution. The model is parameterized as a conditional diffusion model using TrigFlow, which unifies EDM and flow matching under a v-prediction objective. Key architectural modifications include pre-RMSNorm, SwiGLU activations, and 2D sinusoidal positional encodings, with axial rotary embeddings for attention.
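To make the v-prediction objective concrete, the following is a minimal sketch of the general TrigFlow interpolation and velocity target, not the AERIS training code. It assumes the standard TrigFlow form, in which a clean field and Gaussian noise are mixed along a quarter circle in time. The function names, the value of `SIGMA_D`, and the toy array shapes are illustrative assumptions, and the conditioning on the previous atmospheric state is omitted.

```python
import numpy as np

SIGMA_D = 1.0  # assumed data standard deviation (hypothetical value)

def trigflow_interpolate(x0, z, t):
    """Noisy sample x_t = cos(t) * x0 + sin(t) * z, for t in [0, pi/2]."""
    return np.cos(t) * x0 + np.sin(t) * z

def velocity_target(x0, z, t):
    """v-prediction target, the time derivative of x_t: cos(t) * z - sin(t) * x0."""
    return np.cos(t) * z - np.sin(t) * x0

def v_prediction_loss(v_pred, x0, z, t):
    """Unweighted mean squared error between a predicted velocity and the target."""
    return np.mean((v_pred - velocity_target(x0, z, t)) ** 2)

def denoised_from_velocity(x_t, v, t):
    """Recover the clean field from (x_t, v): x0 = cos(t) * x_t - sin(t) * v."""
    return np.cos(t) * x_t - np.sin(t) * v

rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 8))            # toy "clean" field
z = SIGMA_D * rng.standard_normal((4, 8))   # Gaussian noise
t = 0.7                                     # any time in [0, pi/2]
x_t = trigflow_interpolate(x0, z, t)
v = velocity_target(x0, z, t)
x0_recovered = denoised_from_velocity(x_t, v, t)
```

With an exact velocity, `x0_recovered` matches `x0` up to floating-point error, which is the identity a trained network approximates when its predicted velocity is used in place of `v`.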

Figure 2: Model architecture of AERIS, highlighting the pixel-level Swin Transformer backbone, shifted window attention, and diffusion conditioning.
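The pre-RMSNorm and SwiGLU modifications named above can be sketched as a single feed-forward sub-block. This is an illustrative NumPy version under assumed dimensions and random weights, not the AERIS implementation: attention, positional encodings, and diffusion conditioning are left out, and all names are hypothetical.

```python
import numpy as np

def rms_norm(x, gain, eps=1e-6):
    """Scale each token by the inverse root-mean-square of its features."""
    rms = np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)
    return x / rms * gain

def silu(x):
    """SiLU (swish) activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))

def swiglu(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward: a SiLU-gated branch multiplies a linear branch."""
    return (silu(x @ w_gate) * (x @ w_up)) @ w_down

def pre_norm_mlp_block(x, gain, w_gate, w_up, w_down):
    """Pre-norm residual: normalize the input first, then add the sublayer output."""
    return x + swiglu(rms_norm(x, gain), w_gate, w_up, w_down)

rng = np.random.default_rng(0)
dim, hidden = 16, 32                        # assumed toy sizes
tokens = rng.standard_normal((10, dim))     # 10 tokens (pixels) with `dim` features
gain = np.ones(dim)
w_gate = 0.1 * rng.standard_normal((dim, hidden))
w_up = 0.1 * rng.standard_normal((dim, hidden))
w_down = 0.1 * rng.standard_normal((hidden, dim))
out = pre_norm_mlp_block(tokens, gain, w_gate, w_up, w_down)
```

Because normalization happens before the sublayer, the residual path carries `tokens` through unchanged, which is the property that usually motivates pre-norm designs in deep transformers.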

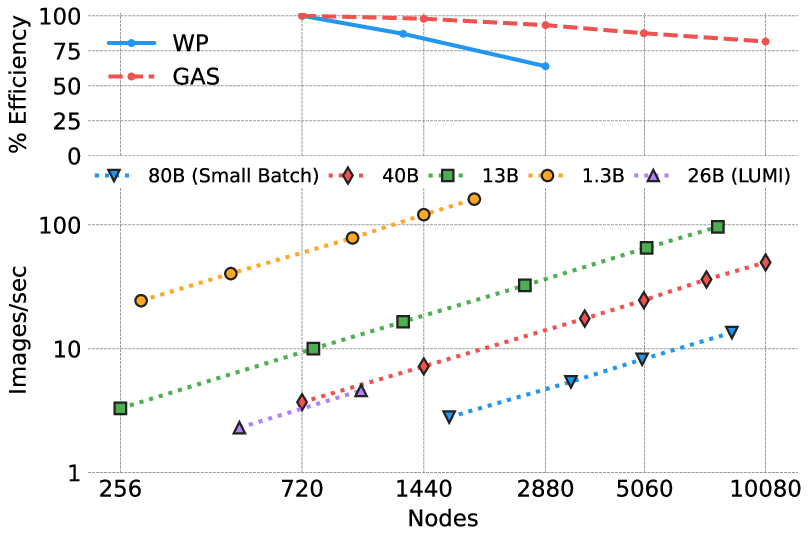

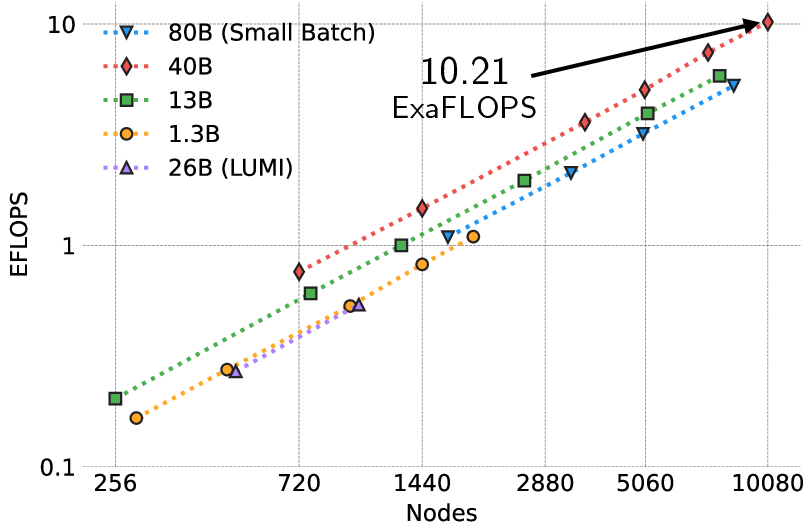

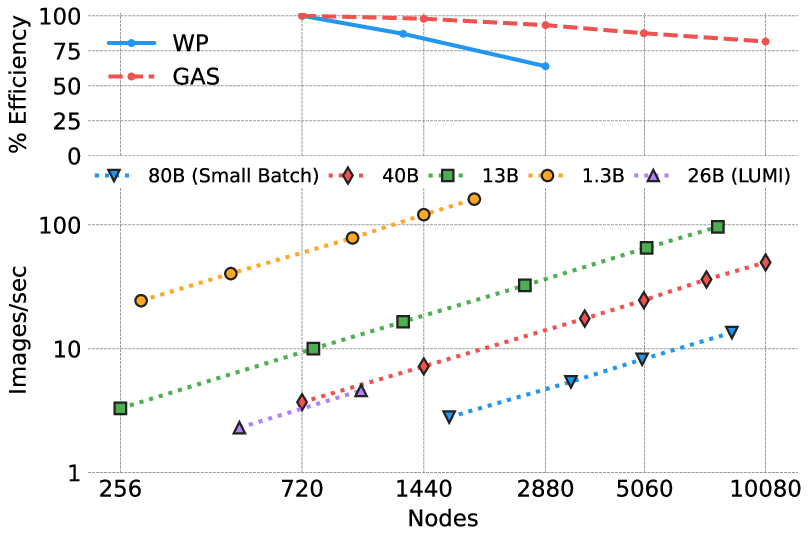

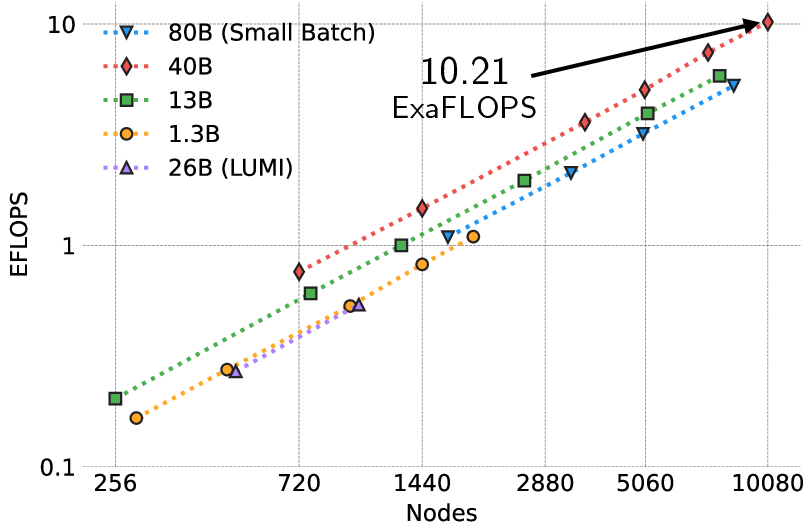

The SWiPe parallelism strategy composes window parallelism (WP) with sequence (SP) and pipeline (PP) parallelism, sharding attention windows across compute nodes and GPUs without additional communication overhead. This enables efficient scaling to 10,080 nodes (120,960 GPU-tiles) on Aurora, achieving 10.21 ExaFLOPS sustained and 11.21 ExaFLOPS peak mixed-precision performance, with 95.5% weak scaling and 81.6% strong scaling efficiency.

Figure 3: Strong scaling efficiency (top) and weak scaling throughput (bottom) for AERIS training on Aurora and LUMI, demonstrating near-linear scaling and high utilization.

SWiPe reduces activation memory, minimizes communication, and allows for small-batch, high-resolution training, which is critical for both model convergence and operational deployment.
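The reported figures imply 120,960 / 10,080 = 12 GPU tiles per node. The core idea behind window parallelism, that attention is computed independently inside each window so windows can be placed on different devices, can be illustrated with a toy example. The sketch below is an assumption-laden illustration rather than the SWiPe implementation: "ranks" are simulated with array slices, attention uses the tokens directly as queries, keys, and values, and the window shift, sequence parallelism, and pipeline parallelism are not modeled.

```python
import numpy as np

def window_partition(field, win):
    """Split an (H, W, C) field into (num_windows, win * win, C) token groups."""
    h, w, c = field.shape
    assert h % win == 0 and w % win == 0
    x = field.reshape(h // win, win, w // win, win, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, win * win, c)

def window_self_attention(windows):
    """Single-head attention inside each window (no learned projections)."""
    scale = windows.shape[-1] ** -0.5
    scores = windows @ windows.transpose(0, 2, 1) * scale
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ windows

def shard_windows(windows, num_ranks):
    """Assign contiguous groups of windows to hypothetical ranks."""
    return np.array_split(windows, num_ranks, axis=0)

rng = np.random.default_rng(0)
field = rng.standard_normal((8, 16, 4))      # toy (lat, lon, channels) grid
windows = window_partition(field, win=4)     # 2 * 4 = 8 windows of 16 tokens
full = window_self_attention(windows)
# Each simulated rank attends only over its own windows; results are then stacked.
sharded = np.concatenate(
    [window_self_attention(shard) for shard in shard_windows(windows, num_ranks=4)]
)
```

The sharded result equals the unsharded one, which shows that no data needs to move between ranks during the attention computation itself in this simplified setting.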

Training, Inference, and Evaluation Protocols

AERIS is trained on four decades of ERA5 reanalysis data at native 0.25° resolution, predicting a comprehensive set of surface and atmospheric variables. The diffusion objective is physically weighted by latitude and pressure, and the model is trained with AdamW and an exponential moving average of parameters. Inference is performed via numerical integration of the probability flow ODE, with ensemble generation through stochastic noise resampling.
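The inference procedure can be sketched as follows, assuming a TrigFlow-style time axis running from pure noise at t = π/2 to data at t = 0 and a first-order, DDIM-style trigonometric update. The source does not state which solver or step count AERIS uses, so `sample_pf_ode`, `generate_ensemble`, the step count, and the toy `oracle_velocity` (which stands in for the trained network and ignores conditioning on the previous state) are all hypothetical.

```python
import numpy as np

def sample_pf_ode(velocity_fn, noise, num_steps=10):
    """Integrate from t = pi/2 (noise) to t = 0 (data) with first-order steps."""
    times = np.linspace(np.pi / 2, 0.0, num_steps + 1)
    x = noise
    for t, s in zip(times[:-1], times[1:]):
        v = velocity_fn(x, t)
        # Rotate the state from time t to the earlier time s using the velocity at t.
        x = np.cos(t - s) * x - np.sin(t - s) * v
    return x

def generate_ensemble(velocity_fn, shape, num_members, seed=0, num_steps=10):
    """Draw a fresh noise field per member and integrate each one independently."""
    rng = np.random.default_rng(seed)
    members = [
        sample_pf_ode(velocity_fn, rng.standard_normal(shape), num_steps)
        for _ in range(num_members)
    ]
    return np.stack(members)

# Toy oracle: the exact velocity field when all data sit at one state `target`.
target = np.full((4, 8), 2.5)

def oracle_velocity(x, t):
    return (np.cos(t) * x - target) / np.sin(t)

ensemble = generate_ensemble(oracle_velocity, target.shape, num_members=5)
```

With this degenerate oracle every member collapses onto `target`. With a trained model the data distribution is not a single point, so different noise draws yield different members, and that variation is what provides the ensemble spread.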

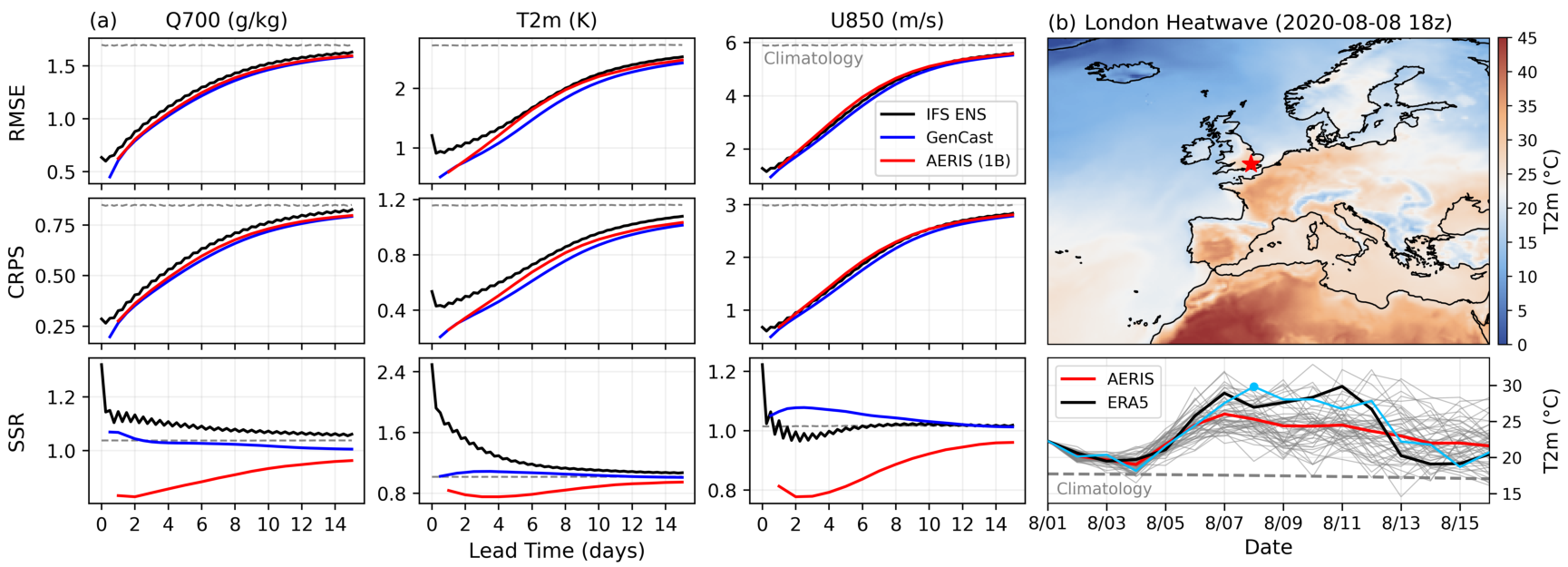

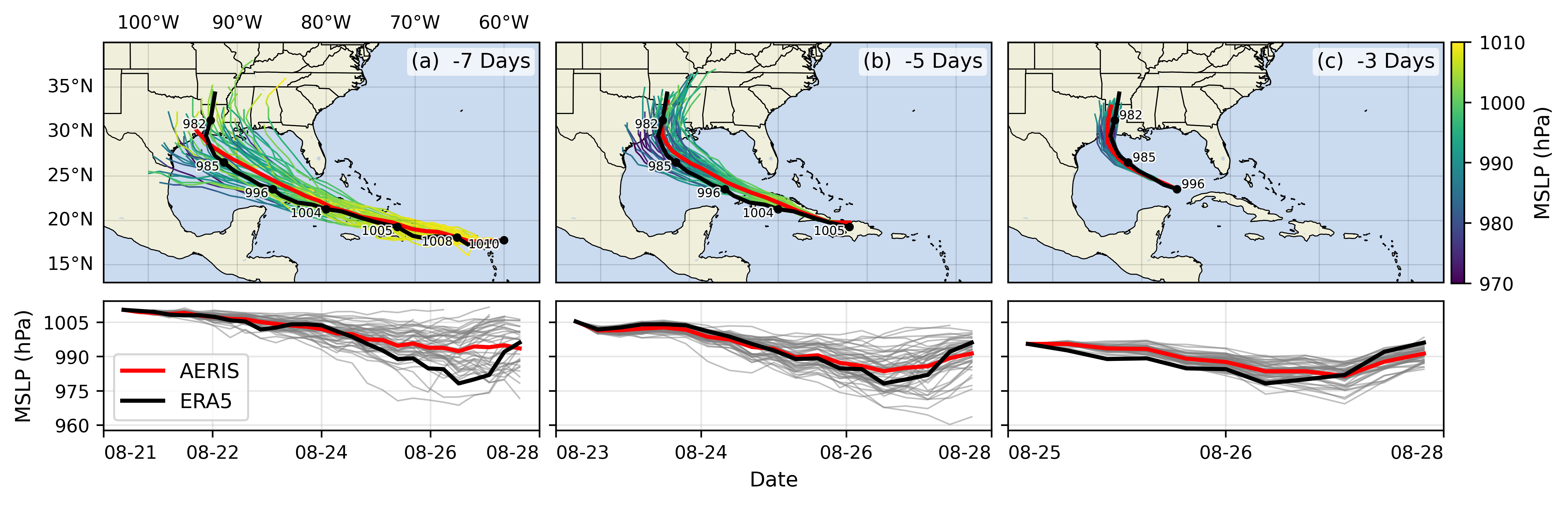

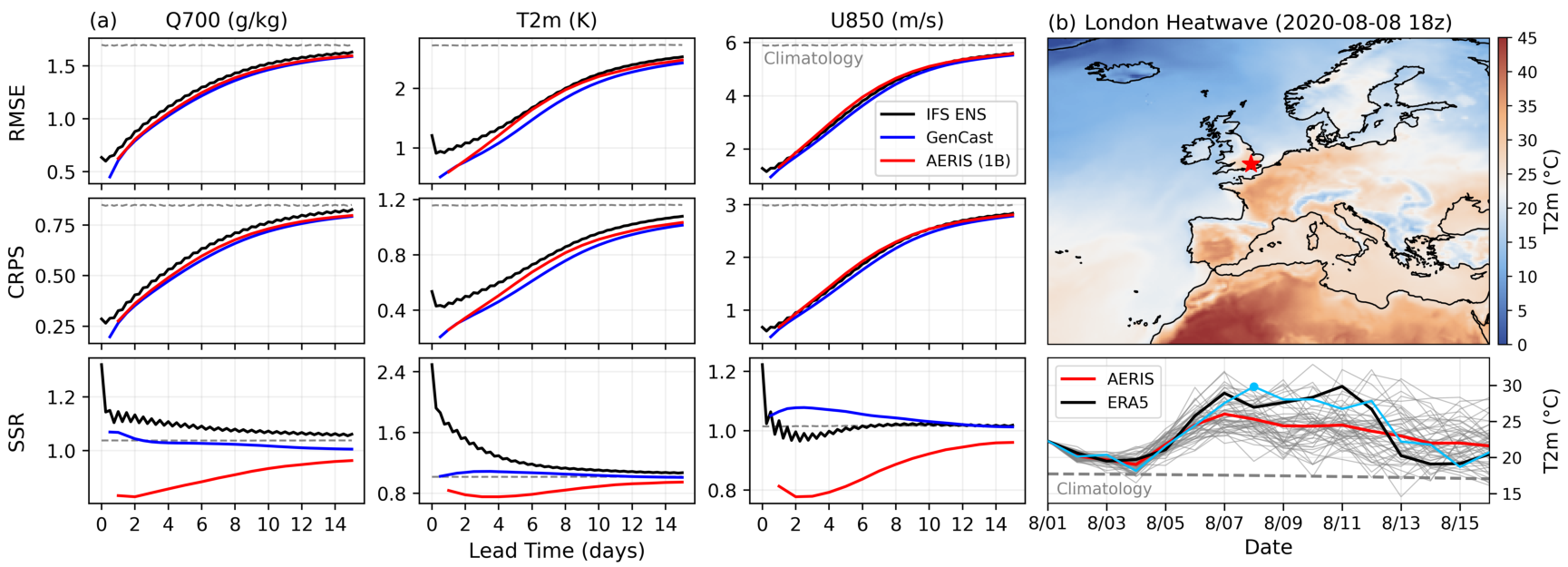

The evaluation suite includes medium-range (1–14 day) and seasonal (up to 90 day) forecasts, with metrics such as latitude-weighted RMSE, CRPS, and spread/skill ratio (SSR), as well as case studies on extreme events (heatwaves, hurricanes).
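The three headline metrics can be written compactly for a single lead time on a latitude-longitude grid. The sketch below uses common conventions that are assumptions here rather than details confirmed by the source: cosine-of-latitude weights normalized to mean one, the ensemble CRPS estimator E|X − y| − ½ E|X − X′|, and SSR as ensemble spread divided by the RMSE of the ensemble mean, without a finite-ensemble correction. The data are synthetic.

```python
import numpy as np

def latitude_weights(lats_deg):
    """cos(latitude) weights, normalized to have mean 1."""
    w = np.cos(np.deg2rad(lats_deg))
    return w / w.mean()

def weighted_rmse(pred, truth, lat_w):
    """Latitude-weighted RMSE for (lat, lon) fields."""
    return np.sqrt(np.mean(lat_w[:, None] * (pred - truth) ** 2))

def ensemble_crps(ens, truth, lat_w):
    """Latitude-weighted CRPS for an ensemble of shape (member, lat, lon)."""
    skill = np.abs(ens - truth).mean(axis=0)
    spread = np.abs(ens[:, None] - ens[None, :]).mean(axis=(0, 1))
    return np.mean(lat_w[:, None] * (skill - 0.5 * spread))

def spread_skill_ratio(ens, truth, lat_w):
    """Ensemble spread divided by the RMSE of the ensemble mean."""
    spread = np.sqrt(np.mean(lat_w[:, None] * ens.var(axis=0, ddof=1)))
    return spread / weighted_rmse(ens.mean(axis=0), truth, lat_w)

rng = np.random.default_rng(0)
lats = np.linspace(-87.5, 87.5, 36)               # toy 5-degree grid
lat_w = latitude_weights(lats)
truth = rng.standard_normal((36, 72))
calibrated = rng.standard_normal((20, 36, 72))    # same distribution as truth
overconfident = 0.5 * calibrated                  # spread deliberately too small

ssr_calibrated = spread_skill_ratio(calibrated, truth, lat_w)
ssr_overconfident = spread_skill_ratio(overconfident, truth, lat_w)
crps_calibrated = ensemble_crps(calibrated, truth, lat_w)
```

An SSR near 1 indicates that the ensemble spread matches the typical error of the ensemble mean, while a value well below 1, as for `overconfident`, indicates an under-dispersive ensemble.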

Empirical Results

AERIS demonstrates robust scaling across multiple model sizes (1.3B–80B parameters), with the 40B configuration achieving 10.21 ExaFLOPS sustained on 10,080 nodes. The parallelism strategy enables high throughput even at small global batch sizes, and the system achieves over 30% Model FLOPS Utilization (MFU) at scale.

Forecast Skill

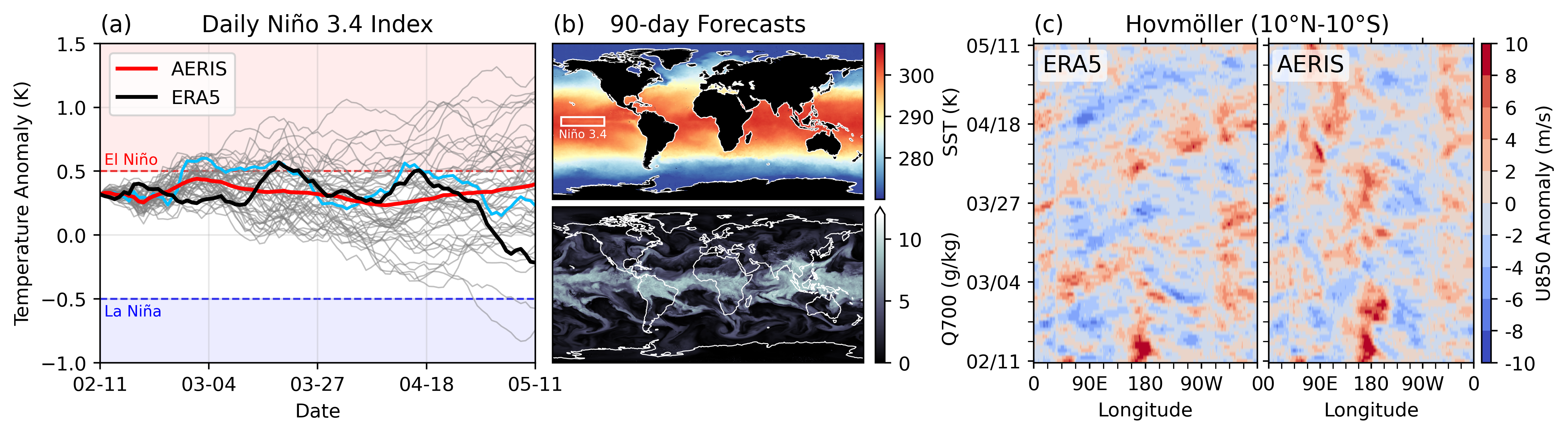

AERIS outperforms the IFS ENS and is competitive with GenCast in medium-range ensemble mean RMSE and CRPS, with particularly strong performance at both short (1–3 day) and long (10+ day) lead times. Notably, AERIS maintains stability and skill in 90-day seasonal forecasts, a regime where prior diffusion models (e.g., GenCast) become unstable.

Figure 4: Medium-range forecast skill: RMSE, CRPS, and SSR for key variables, and accurate heatwave forecast over London, England.

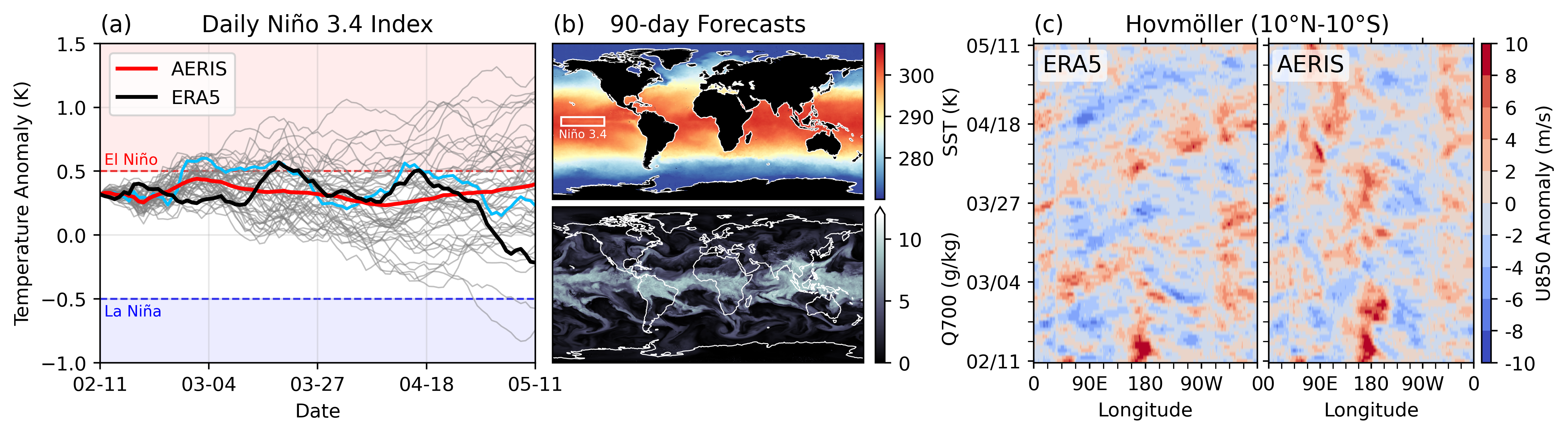

AERIS accurately predicts the evolution of the El Niño Southern Oscillation (ENSO) and maintains realistic ensemble spread and sharpness in sea surface temperature and specific humidity fields at seasonal lead times.

Figure 5: Seasonal forecast stability, including ENSO prediction, sharp SST and Q700 fields at 90 days, and stable Hovmöller diagrams of U850 anomalies.

Extreme Event Prediction

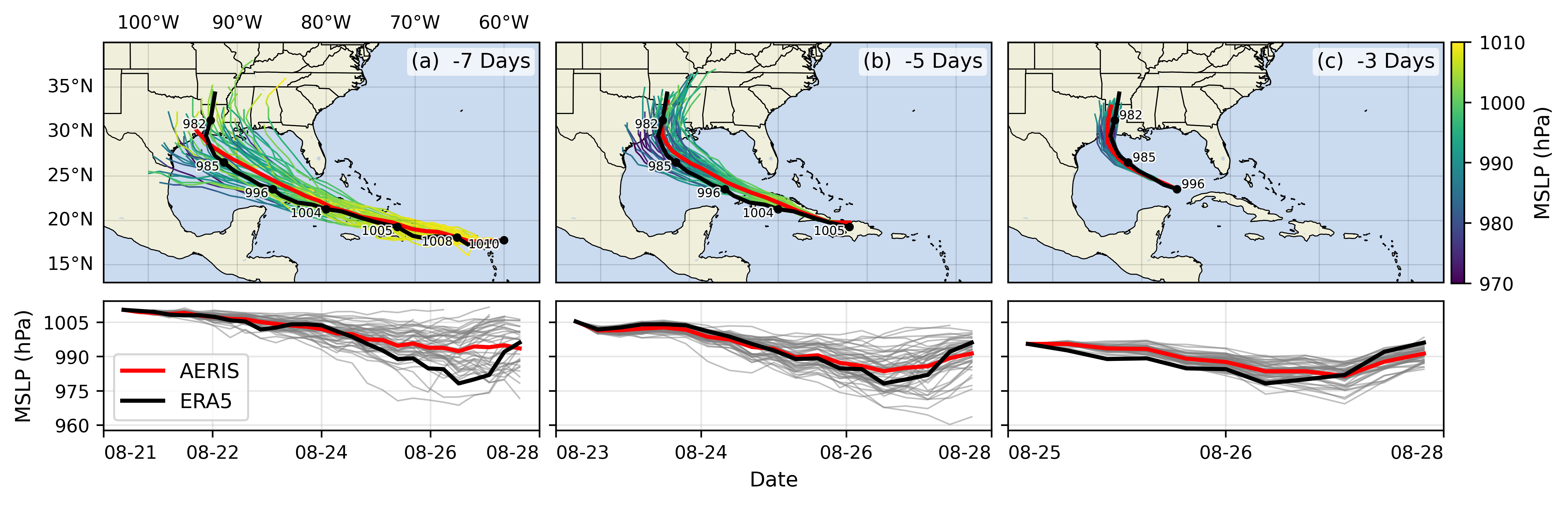

AERIS demonstrates high skill in forecasting extreme events, including the track and intensity of Hurricane Laura up to 7 days before landfall, and the 2020 European heatwave, with ensemble members capturing both the magnitude and timing of extremes.

Figure 6: Hurricane Laura track and intensity forecasts, initialized 7, 5, and 3 days prior to landfall, showing close agreement with ERA5.

Limitations and Future Directions

Despite strong results, AERIS exhibits under-dispersive ensembles (SSR < 1), indicating overconfidence in probabilistic forecasts. Improving ensemble spread via initial condition perturbations and tuning the diffusion churning schedule is a priority. The current science results are based on the 1.3B parameter model; scaling to larger models with 1×1 patch size remains computationally intensive. Consistency distillation and multi-step finetuning are promising avenues for reducing inference cost and further improving skill.

AERIS is trained on historical reanalysis and is not governed by explicit physical equations, so non-physical artifacts may arise. Operational deployment would require finetuning on real-time NWP data. The SWiPe parallelism framework is generalizable and can be extended to higher resolutions, alternative datasets, and other window-based transformer architectures.

Implications and Outlook

AERIS establishes a new state of the art in data-driven, generative Earth system modeling, demonstrating that billion-parameter diffusion transformers can deliver stable and skillful forecasts from weather to seasonal scales. The SWiPe parallelism strategy enables efficient exascale training, making it feasible to develop foundation models for climate science at the highest available resolutions.

The practical implications are significant: AERIS can generate global, high-resolution ensemble forecasts in seconds, supporting improved preparedness for extreme events and advancing the integration of AI in operational meteorology. Theoretically, the work demonstrates that diffusion-based generative models, when scaled appropriately and paired with efficient parallelism, can overcome the long-lead instability of prior generative approaches, although the ensembles remain under-dispersive and calibration is still an open problem.

Future developments will likely focus on further scaling, improved ensemble calibration, integration with physical constraints, and operational deployment. The SWiPe strategy may also inform parallelism design in other domains requiring high-resolution, window-based transformer models.

Conclusion

AERIS represents a substantial advance in the application of large-scale generative models to Earth system prediction, combining architectural, algorithmic, and systems innovations to achieve exascale performance and robust, skillful forecasts across timescales. The framework sets a new benchmark for both computational and scientific performance in AI-driven weather and climate modeling, and provides a foundation for future research at the intersection of machine learning, high-performance computing, and geoscience.