- The paper introduces a framework combining Vision-Language Models and robotic imagination to actively resolve 6D pose ambiguities in real-time scenarios.

- It employs entropy-guided next-best-view selection and an equivariant diffusion policy for dynamic object pose tracking in cluttered environments.

- Results show significant performance gains with up to 97.5% success in simulations and 90% success in integrated tasks like peg-in-hole assembly.

ActivePose: Active 6D Object Pose Estimation and Tracking for Robotic Manipulation

The paper "ActivePose: Active 6D Object Pose Estimation and Tracking for Robotic Manipulation" introduces an integrated framework for addressing the challenges of 6-DoF object pose estimation and tracking in the context of robotic manipulation. This framework is designed to dynamically leverage visual cues to resolve pose ambiguities and efficiently maintain object visibility during motion.

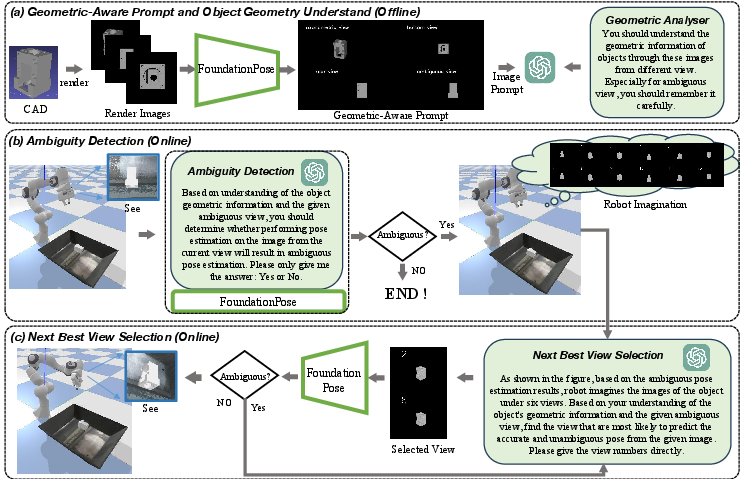

Active Pose Estimation

The active pose estimation framework pairs a Vision-Language Model (VLM) with "robotic imagination" to detect and resolve pose ambiguities in real time. The pipeline consists of offline and online stages.
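The entropy-guided next-best-view selection mentioned in the summary can be illustrated with a small sketch. It assumes each candidate view yields a set of non-negative scores over discrete pose hypotheses, and it picks the view whose normalized score distribution has the lowest Shannon entropy, i.e. the least ambiguous one. The function names, the input layout, and the lowest-entropy criterion are illustrative assumptions, not the paper's implementation.

```python
import math

def shannon_entropy(scores):
    """Shannon entropy (in nats) of non-negative scores, normalized to sum to 1."""
    total = sum(scores)
    probs = [s / total for s in scores]
    # Zero-probability hypotheses contribute nothing to the entropy.
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def select_next_best_view(scores_per_view):
    """Return (best_index, entropies) for the least ambiguous candidate view.

    scores_per_view: one list of pose-hypothesis scores per candidate view
    (hypothetical input; how such scores are produced is not specified here).
    """
    entropies = [shannon_entropy(scores) for scores in scores_per_view]
    best_index = min(range(len(entropies)), key=entropies.__getitem__)
    return best_index, entropies

# Three candidate views scored against four pose hypotheses (made-up numbers).
candidate_scores = [
    [0.25, 0.25, 0.25, 0.25],  # fully ambiguous: all hypotheses equally likely
    [0.70, 0.10, 0.10, 0.10],  # partially resolved
    [0.97, 0.01, 0.01, 0.01],  # nearly unambiguous
]
best_view, view_entropies = select_next_best_view(candidate_scores)
```

In this toy input the third view concentrates almost all of the score on one hypothesis, so it has the lowest entropy and is selected.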

Active Pose Tracking

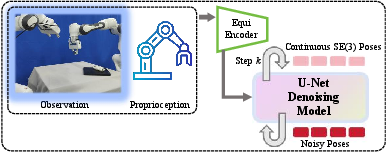

The active pose tracking module capitalizes on an equivariant diffusion policy, trained through imitation learning, to preserve object visibility and minimize pose ambiguity:

- Encoding and Denoising: The module encodes the current observation and denoises over K reverse-diffusion steps to produce a sequence of continuous SE(3) camera poses. It then executes the last k of these poses in a receding-horizon loop, re-planning from a fresh observation each cycle to keep the camera well positioned.

- Trajectory Generation: It dynamically generates smooth camera trajectories that preserve the visibility of critical object features, essential for maintaining pose accuracy during dynamic manipulation tasks.

Figure 2: Pipeline of Active Pose Tracking. Encode the current observation, denoise over K reverse-diffusion steps to obtain continuous SE(3) poses, then execute the last k poses in a receding-horizon loop.
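The control flow in the Figure 2 caption can be sketched as follows. This is only an illustration of the receding-horizon structure: `toy_denoise_step` is a hypothetical stand-in for the trained equivariant diffusion policy, poses are simplified to 6-vectors (translation plus rotation vector) rather than proper SE(3) elements, and all parameter names and defaults are assumptions.

```python
import random

def toy_denoise_step(plan, observation, step, num_steps):
    """Stand-in for one reverse-diffusion step (NOT the trained policy).

    Moves every planned pose a fraction of the way toward the observed pose;
    the fraction reaches 1 on the final step.
    """
    alpha = 1.0 / (num_steps - step)
    return [[p + alpha * (o - p) for p, o in zip(pose, observation)]
            for pose in plan]

def plan_camera_poses(observation, horizon, num_steps, rng):
    """Start from Gaussian noise and denoise for num_steps (K) steps."""
    plan = [[rng.gauss(0.0, 1.0) for _ in range(6)] for _ in range(horizon)]
    for step in range(num_steps):
        plan = toy_denoise_step(plan, observation, step, num_steps)
    return plan

def receding_horizon_loop(get_observation, execute_pose, horizon=8,
                          num_steps=10, execute_k=4, cycles=3, seed=0):
    """Re-plan from a fresh observation each cycle; execute the last k poses."""
    rng = random.Random(seed)
    executed = []
    for _ in range(cycles):
        observation = get_observation()
        plan = plan_camera_poses(observation, horizon, num_steps, rng)
        for pose in plan[-execute_k:]:
            execute_pose(pose)
            executed.append(pose)
    return executed

# Usage with a static, made-up target pose and a list acting as the "robot".
target_pose = [0.5, 0.0, 0.3, 0.0, 0.0, 1.57]
command_log = []
executed_poses = receding_horizon_loop(lambda: target_pose, command_log.append)
```

Because only part of each plan is executed before re-planning, the loop can react to object motion between cycles; a real policy would condition the denoiser on encoded image observations instead of a known target pose.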

Implementation and Results

The proposed ActivePose framework has been evaluated and validated in both simulation and real-world environments, demonstrating superior performance over classical baselines in various challenging scenarios:

- Pose Estimation: The proposed method outperforms fixed-view and random-NBV approaches significantly, achieving a success rate as high as 97.5% in random placements and 95.0% in high-entropy placements, both in simulation and real-world settings.

- Pose Tracking: The active tracking component shows enhanced capabilities under conditions of long-range linear motion, circular rotation, temporary occlusions, and random spatial movements. The success rates range from 52.5% to 91.3%, indicating significant improvements in maintaining object visibility and reducing ambiguity.

- Integrated Tasks: In the context of peg-in-hole assembly, ActivePose delivers a consistent 90% success rate, highlighting its efficacy in maintaining accurate pose estimation and tracking over the entire manipulation process.

Conclusion

The ActivePose framework addresses the inherent challenges of 6D pose estimation and tracking by integrating next-best-view selection with dynamic pose tracking. It advances the ability of robotic manipulation systems to operate in cluttered and dynamically changing environments, enabling robust object interaction. Future work will explore image-based feature representations to improve computational robustness and sensitivity to pose variations.

This work contributes significantly to the field of robotic perception, aiming to eliminate the traditional bottlenecks associated with pose ambiguity and tracking stability. By actively and intelligently adjusting viewpoints, ActivePose sets a new standard for autonomous manipulation tasks requiring high precision and adaptability.