- The paper introduces BenchECG, a comprehensive benchmark that aggregates diverse ECG datasets and tasks to standardize model evaluation.

- The paper presents xECG, an efficient xLSTM-based model pretrained with SimDINOv2, achieving state-of-the-art performance and computational gains.

- The paper demonstrates xECG's strong generalization across various populations and modalities, and shows that it reduces fine-tuning time and memory requirements relative to transformer-based models.

BenchECG and xECG: Standardized Benchmarking and Efficient Foundation Modeling for ECG Analysis

Introduction

This paper introduces BenchECG, a comprehensive benchmark for evaluating ECG foundation models, and xECG, an xLSTM-based recurrent model pretrained with SimDINOv2 self-supervised learning. The work addresses two limitations in the field: inconsistent evaluation protocols and the computational inefficiency of transformer-based architectures on long-context ECG tasks. BenchECG aggregates diverse datasets and clinically relevant tasks, enabling rigorous, reproducible comparison of ECG foundation models. xECG achieves the best overall BenchECG score and mean rank among the evaluated models while requiring less fine-tuning time and memory than the transformer-based ST-MEM, establishing a new baseline for ECG representation learning.

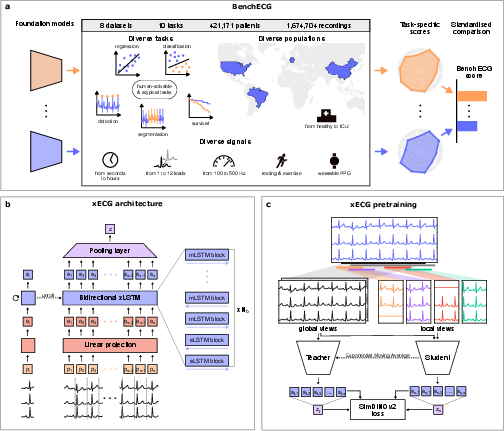

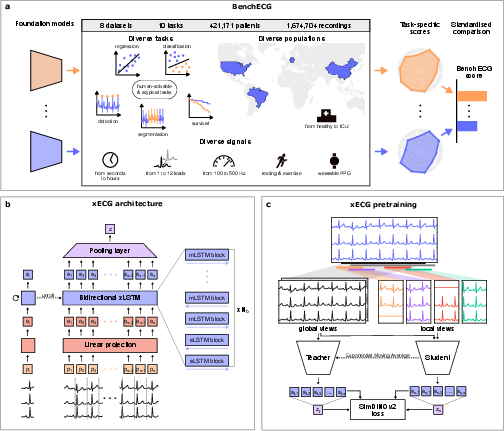

Figure 1: BenchECG provides a unified evaluation suite for ECG foundation models; xECG is a bi-directional xLSTM model pretrained via SimDINOv2.

BenchECG: Comprehensive Benchmarking for ECG Foundation Models

BenchECG comprises eight publicly available datasets, spanning 421,171 patients and 1,674,704 recordings, and ten tasks covering classification, segmentation, detection, regression, and survival analysis. The benchmark covers a wide spectrum of signal types (12-lead, 2-lead, single-lead, PPG), populations (healthy, ICU, geographically diverse), and clinical targets (diagnosis, age estimation, mortality prediction, blood test abnormality detection, R-peak detection, sleep apnea segmentation).

Key features of BenchECG:

- Signal Diversity: Includes short clinical ECGs, long-term ambulatory recordings, and PPG signals.

- Population Diversity: Datasets from Europe, USA, China, Brazil; varying cohort sizes and clinical settings.

- Task Diversity: Multilabel classification, beat-level arrhythmia detection, regression, segmentation, survival analysis, and OOD generalization.

- Rigorous Evaluation: Models are evaluated via both fine-tuning and linear probing, with standardized splits and metrics (AUROC, F1, SMAPE, C-index).

BenchECG enables systematic comparison of model generalization, representation quality, and adaptability to real-world clinical scenarios.
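Two of the metrics listed above, SMAPE and the C-index, are less widely known than AUROC and F1. The following is a minimal sketch of one common definition of each, written in plain Python for illustration. The function names and the toy inputs are assumptions, and BenchECG's own implementation may differ in details such as tie handling or scaling.

```python
from itertools import combinations


def smape(y_true, y_pred):
    """Symmetric mean absolute percentage error.

    Uses the convention |p - t| / ((|t| + |p|) / 2), so values lie in [0, 2].
    Pairs where both values are zero contribute 0.
    """
    terms = []
    for t, p in zip(y_true, y_pred):
        denom = (abs(t) + abs(p)) / 2
        terms.append(0.0 if denom == 0 else abs(p - t) / denom)
    return sum(terms) / len(terms)


def concordance_index(times, events, risks):
    """Harrell-style C-index for survival predictions.

    A pair is comparable when the subject with the shorter follow-up time
    had an observed event. The pair is concordant when that subject also
    has the higher predicted risk; tied risks count as half.
    Pairs with tied times are skipped here for simplicity.
    """
    concordant = 0.0
    comparable = 0
    for i, j in combinations(range(len(times)), 2):
        if times[j] < times[i]:
            i, j = j, i  # make i the subject with the shorter time
        if times[i] == times[j] or not events[i]:
            continue
        comparable += 1
        if risks[i] > risks[j]:
            concordant += 1.0
        elif risks[i] == risks[j]:
            concordant += 0.5
    return concordant / comparable


# Toy usage with made-up values (not taken from the benchmark).
age_error = smape([100, 200], [110, 180])
c_index = concordance_index([2, 4, 6], [1, 1, 0], [0.9, 0.5, 0.1])
```

A C-index of 1.0 means every comparable pair is ordered correctly, and 0.5 corresponds to random ordering.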

xECG: Efficient, General-Purpose ECG Foundation Model

xECG is built on the xLSTM architecture, which combines scalar-memory (sLSTM) and matrix-memory (mLSTM) blocks with exponential gating and multi-head memory mechanisms. This design scales linearly with sequence length and supports training-time parallelism, avoiding the quadratic cost of transformer self-attention in sequence length.

Architecture highlights:

- Bidirectional Processing: Alternating sLSTM and mLSTM layers process ECG patches in both forward and reverse directions, efficiently aggregating temporal information.

- Patch-Based Input: ECG signals are divided into non-overlapping temporal patches, projected into an embedding space, and encoded by the xLSTM stack.

- Flexible Output: Patch-level representations are pooled for signal-level tasks or used directly for beat-level/detection tasks.
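The data flow described in the list above (patching, bidirectional encoding, pooling) can be illustrated with a toy sketch. This is not the xECG implementation: the learned patch projection is omitted, the `scan` function is a simple linear recurrence standing in for an xLSTM layer, and concatenating the two directions is an assumption, since the summary does not say how xECG combines them. All names are hypothetical.

```python
def patchify(signal, patch_len):
    """Split a [leads][samples] signal into non-overlapping temporal patches.

    Each patch concatenates `patch_len` samples from every lead.
    Trailing samples that do not fill a whole patch are dropped.
    """
    n_patches = len(signal[0]) // patch_len
    return [
        [x for lead in signal for x in lead[p * patch_len:(p + 1) * patch_len]]
        for p in range(n_patches)
    ]


def scan(tokens, decay=0.5):
    """Toy recurrence h_t = decay * h_{t-1} + x_t (stand-in for an xLSTM layer).

    One pass over the sequence, so the cost grows linearly with its length.
    """
    state = [0.0] * len(tokens[0])
    outputs = []
    for token in tokens:
        state = [decay * s + x for s, x in zip(state, token)]
        outputs.append(state)
    return outputs


def bidirectional(tokens, decay=0.5):
    """Run the recurrence forward and backward, then concatenate per patch."""
    forward = scan(tokens, decay)
    backward = scan(tokens[::-1], decay)[::-1]
    return [f + b for f, b in zip(forward, backward)]  # list concatenation


def mean_pool(tokens):
    """Average patch-level vectors into one signal-level vector."""
    return [sum(column) / len(tokens) for column in zip(*tokens)]


# Toy 2-lead signal with 6 samples per lead (made-up values).
signal = [[1, 2, 3, 4, 5, 6], [10, 20, 30, 40, 50, 60]]
patches = patchify(signal, patch_len=2)   # 3 patches, 4 values each
patch_features = bidirectional(patches)   # per-patch output, 8 values each
signal_feature = mean_pool(patch_features)
```

Here `patch_features` plays the role of the patch-level output used for beat-level or detection tasks, while `signal_feature` is the pooled vector for signal-level tasks.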

Pretraining leverages SimDINOv2, a non-contrastive teacher-student SSL framework with multi-view augmentation (global/local crops, lead dropout, baseline wander simulation, amplitude scaling, multiplicative jitter). The student network matches teacher representations across views, regularized by coding rate to maximize feature diversity.
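To make the multi-view augmentation more concrete, the sketch below generates one augmented view using the transformations named above: a temporal crop, lead dropout, amplitude scaling, multiplicative jitter, and simulated baseline wander. Every parameter value (dropout probability, scale range, wander frequency and amplitude, jitter level) is an illustrative assumption, as are the function and argument names. The actual xECG settings are not given in this summary.

```python
import math
import random


def augment_view(signal, fs, rng, crop_len, lead_drop_p=0.2,
                 scale_range=(0.8, 1.2), wander_amp=0.05, jitter_std=0.01):
    """Return one augmented view of a [leads][samples] ECG signal.

    All default values are placeholders chosen for illustration only.
    """
    n_samples = len(signal[0])
    start = rng.randrange(n_samples - crop_len + 1)   # random temporal crop
    scale = rng.uniform(*scale_range)                 # amplitude scaling
    wander_freq = rng.uniform(0.05, 0.5)              # Hz, slow baseline drift
    phase = rng.uniform(0.0, 2.0 * math.pi)

    # Lead dropout: zero out some leads, but always keep at least one.
    keep = [rng.random() >= lead_drop_p for _ in signal]
    if not any(keep):
        keep[rng.randrange(len(keep))] = True

    view = []
    for lead, kept in zip(signal, keep):
        if not kept:
            view.append([0.0] * crop_len)
            continue
        samples = []
        for k in range(crop_len):
            t = (start + k) / fs
            x = lead[start + k] * scale
            x *= 1.0 + rng.gauss(0.0, jitter_std)     # multiplicative jitter
            x += wander_amp * math.sin(2.0 * math.pi * wander_freq * t + phase)
            samples.append(x)
        view.append(samples)
    return view


# Toy usage: a long "global" crop and a short "local" crop of the same signal.
rng = random.Random(0)
ecg = [[math.sin(0.1 * k + lead) for k in range(1000)] for lead in range(3)]
global_view = augment_view(ecg, fs=100, rng=rng, crop_len=800)
local_view = augment_view(ecg, fs=100, rng=rng, crop_len=200)
```

In a teacher-student setup of this kind, the different views of the same recording are what the student is trained to map to matching representations.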

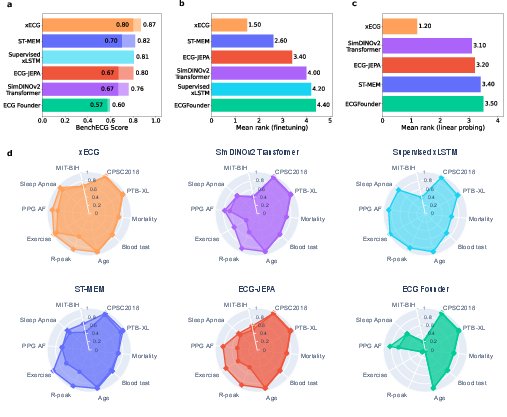

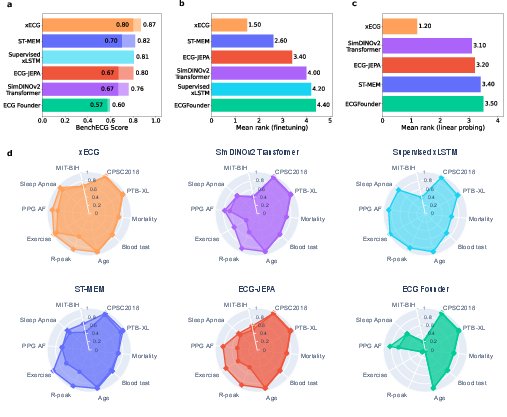

Figure 2: xECG achieves the highest BenchECG score and mean rank across all tasks, outperforming transformer and CNN-based models in both fine-tuning and linear probing.

Empirical Results and Comparative Analysis

xECG achieves the highest BenchECG score (0.868±0.0030) and the best mean rank (1.50 for fine-tuning, 1.20 for linear probing; lower is better), outperforming ST-MEM, ECG-JEPA, ECGFounder, and transformer baselines. xECG is the only model to perform strongly across all datasets and task types.
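For readers unfamiliar with mean rank: models are ranked within each task (1 is best) and the ranks are averaged across tasks, so a mean rank above 1 implies the model is not first on every task. The sketch below shows that calculation on made-up scores for three hypothetical models. It assumes higher scores are better and does not handle ties, and it is not BenchECG's own scoring code.

```python
def mean_ranks(scores):
    """Average per-task rank for each model.

    `scores` maps task -> {model: score}, with higher scores being better.
    For error metrics such as SMAPE, negate the values before ranking.
    """
    ranks = {}
    for task_scores in scores.values():
        ordered = sorted(task_scores, key=task_scores.get, reverse=True)
        for rank, model in enumerate(ordered, start=1):
            ranks.setdefault(model, []).append(rank)
    return {model: sum(r) / len(r) for model, r in ranks.items()}


# Made-up scores for illustration only.
toy_scores = {
    "task_1": {"model_a": 0.90, "model_b": 0.80, "model_c": 0.70},
    "task_2": {"model_a": 0.60, "model_b": 0.70, "model_c": 0.50},
}
toy_ranks = mean_ranks(toy_scores)
# model_a: (1 + 2) / 2 = 1.5, model_b: (2 + 1) / 2 = 1.5, model_c: 3.0
```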

Long-Context Tasks

xLSTM-based models, especially xECG, excel in tasks requiring extended temporal context:

- Sleep Apnea Segmentation: xECG AUROC 0.932±0.014 (vs. ST-MEM 0.702±0.020).

- MIT-BIH Arrhythmia Classification: xECG F1 0.677±0.025 (vs. ST-MEM 0.644±0.007).

Linear probing reveals that xECG's pretrained features are inherently better aligned with long-term dependencies, reducing the need for extensive task-specific adaptation.
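Linear probing means freezing the pretrained encoder and training only a linear layer on its output features, so the score reflects the quality of the representation rather than the capacity to adapt. A minimal sketch, assuming features have already been extracted and using a hand-written logistic regression on made-up two-dimensional features (the names and hyperparameters are illustrative):

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_linear_probe(features, labels, lr=0.5, epochs=200):
    """Fit logistic regression on fixed features with batch gradient descent.

    Only the probe weights `w` and bias `b` are updated; the encoder that
    produced `features` is never touched.
    """
    dim = len(features[0])
    n = len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    """Probability of the positive class for one feature vector."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Made-up "frozen encoder" features for four recordings.
frozen_features = [[0.0, 1.0], [0.2, 0.9], [1.0, 0.1], [0.9, 0.0]]
labels = [0, 0, 1, 1]
w, b = train_linear_probe(frozen_features, labels)
```

Fine-tuning differs in that the encoder weights are also updated, which is why it costs more time and memory.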

Short-Context Tasks

On conventional 12-lead ECGs (PTB-XL, CPSC2018), performance differences are narrower after fine-tuning, but xECG maintains an advantage in linear probing, indicating superior representation quality.

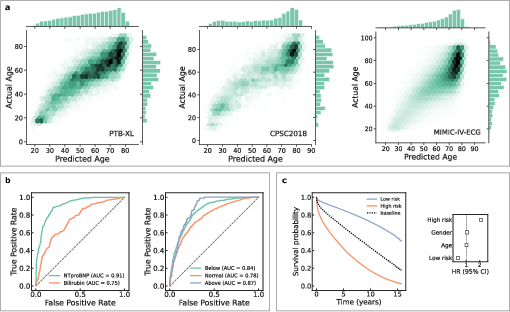

Generalization Across Populations and Modalities

Computational Efficiency

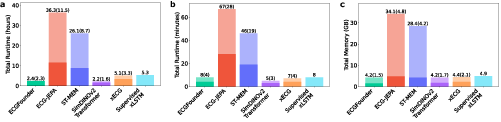

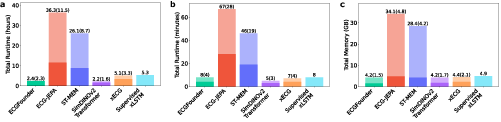

xECG is substantially more efficient than transformer-based models:

- Fine-tuning Time: 5.1 hours (xECG) vs. 26.1 hours (ST-MEM) across all tasks.

- PTB-XL Task: 7 min (xECG) vs. 67 min (ST-MEM); 4.2 GB (xECG) vs. 28.4 GB (ST-MEM) memory usage.

Recurrent architectures provide linear scaling with input length, enabling practical deployment for long-duration ECGs and resource-constrained clinical environments.
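The figures reported above can be turned into ratios, and the scaling argument can be made concrete with a small calculation. The ratios below use only the numbers stated in this section. The `cost_growth` helper is an idealized illustration that compares linear against quadratic growth in sequence length and ignores constant factors and implementation details.

```python
def ratio(baseline, candidate):
    """How many times larger the baseline figure is than the candidate."""
    return baseline / candidate


# Ratios computed from the reported ST-MEM vs. xECG figures.
total_time_ratio = ratio(26.1, 5.1)   # about 5.1x less time across all tasks
ptbxl_time_ratio = ratio(67, 7)       # about 9.6x less time on PTB-XL
ptbxl_memory_ratio = ratio(28.4, 4.2)  # about 6.8x less memory on PTB-XL


def cost_growth(length_factor, exponent):
    """Idealized cost multiplier when the sequence gets `length_factor` times longer.

    exponent=1 models a recurrent scan, exponent=2 models full self-attention.
    """
    return length_factor ** exponent


# Making a recording 8 times longer:
recurrent_growth = cost_growth(8, 1)   # 8x the work
attention_growth = cost_growth(8, 2)   # 64x the work
```

The gap between the two growth rates widens as recordings get longer, which is why the advantage matters most for long-duration ECGs.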

Figure 4: xECG demonstrates superior runtime and memory efficiency for both fine-tuning and linear probing compared to transformer-based models.

Discussion and Implications

BenchECG establishes a standardized, reproducible framework for evaluating ECG foundation models, facilitating robust model selection and driving architectural innovation. The results demonstrate that xLSTM-based models, particularly xECG, are well-suited for long-context physiological signal modeling, offering both superior generalization and computational efficiency.

Key implications:

- Model Selection: BenchECG enables informed selection of foundation models for novel cardiac applications based on task-specific performance.

- Architectural Trade-offs: Recurrent models (xLSTM) outperform transformers in long-context tasks and efficiency, while CNNs (ECGFounder) are limited in temporal resolution and generalization.

- Pretraining Strategies: SimDINOv2 SSL yields robust, transferable representations, especially in low-data and OOD scenarios.

- Clinical Translation: xECG's generalization across populations and modalities supports its potential for real-world deployment, though prospective clinical validation remains necessary.

Limitations include potential overfitting to benchmark datasets, variability in pretraining data across models, and the need for broader clinical utility assessment.

Conclusion

BenchECG provides a rigorous, standardized benchmark for ECG foundation models, addressing reproducibility and generalization challenges in cardiac AI. xECG, leveraging xLSTM architecture and SimDINOv2 SSL, sets a new baseline for ECG representation learning, achieving state-of-the-art performance, generalization, and efficiency across diverse tasks and populations. Future work should explore scaling pretraining, harmonizing pretraining datasets, and extending evaluation to prospective clinical settings.