- The paper identifies reinforcement learning as a strategic improvement over supervised fine-tuning in deep research systems.

- It outlines innovative methodologies in data synthesis, reward design, and hierarchical agent coordination for managing multi-step tasks.

- The survey offers practical insights on curriculum design and multimodal integration, underpinning scalable and efficient RL training frameworks.

Reinforcement Learning Foundations for Deep Research Systems: A Survey

The paper "Reinforcement Learning Foundations for Deep Research Systems: A Survey" (2509.06733) examines reinforcement learning (RL) methods for training deep research systems within agentic AI frameworks. It categorizes existing work along three lines (data synthesis, RL methods for agentic research, and training frameworks) and also addresses hierarchical agent coordination and evaluation benchmarks. This essay summarizes the core components, methodologies, and future implications discussed in the paper.

Introduction to Deep Research Systems

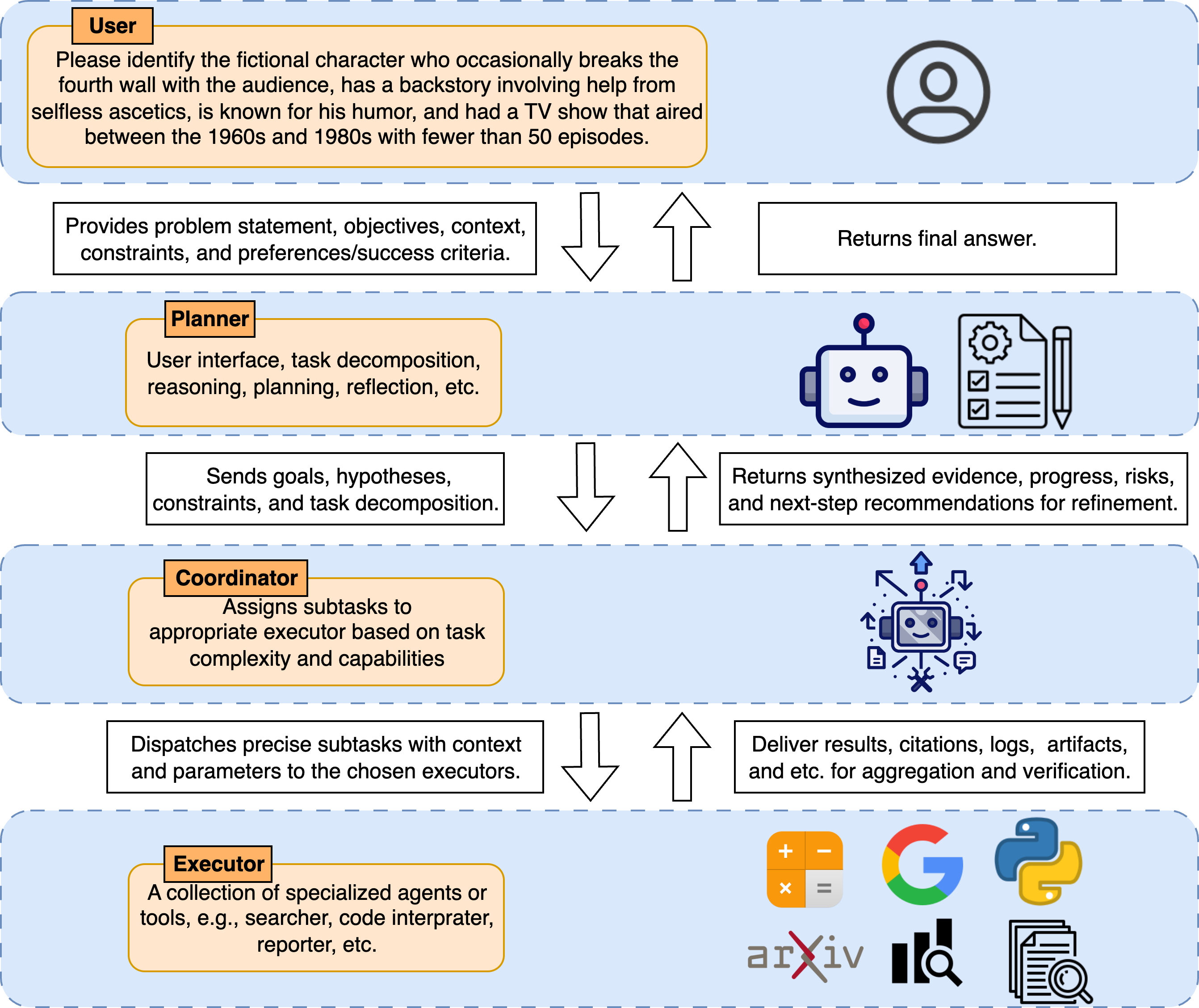

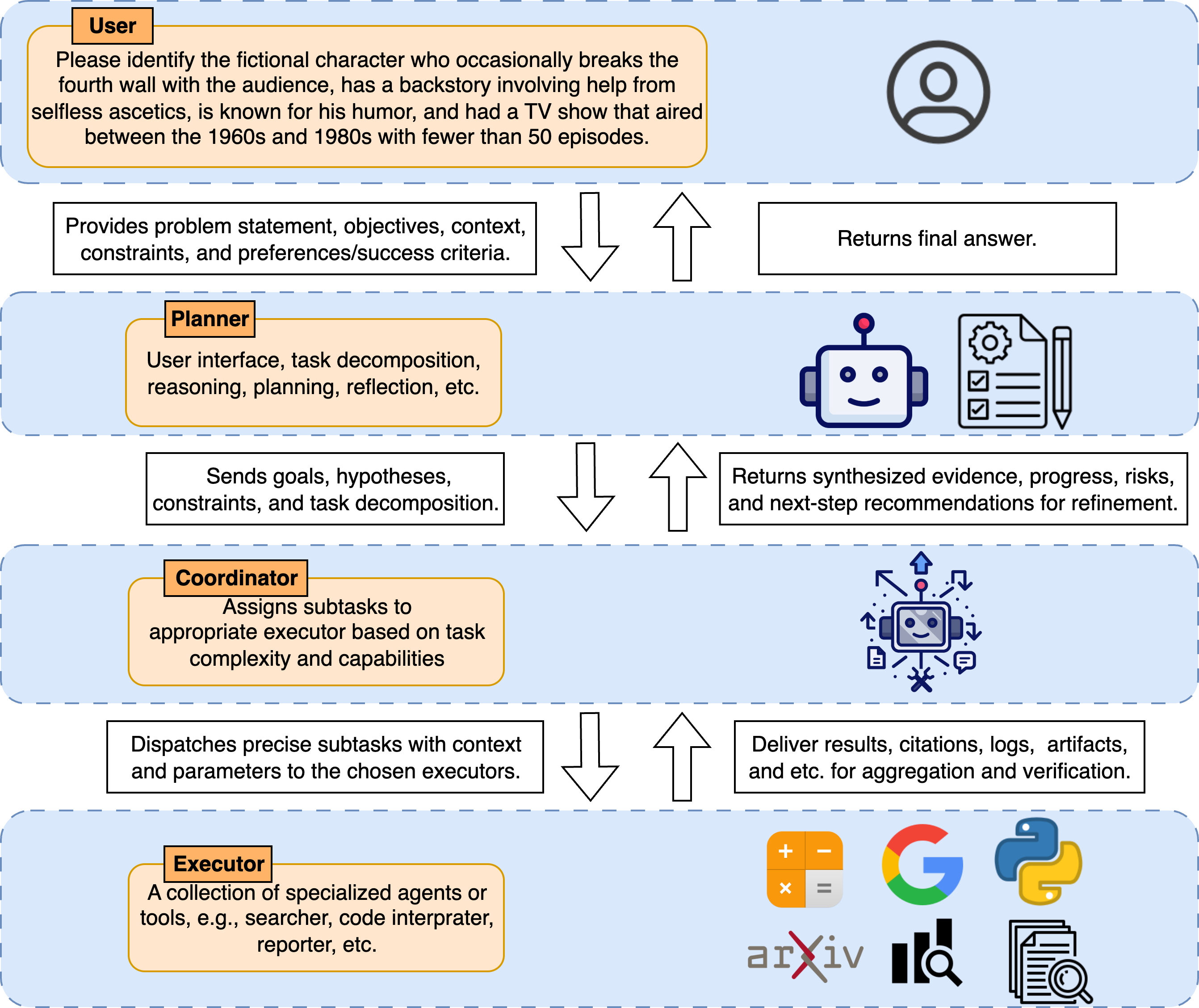

Deep research systems are envisioned as autonomous AI entities capable of executing complex, multi-step inquiries across digital information landscapes (Figure 1). These systems are architecturally framed with a hierarchical structure featuring a Planner, Coordinator, and a suite of Executors to manage strategic reasoning, task decomposition, and actionable follow-through.

Figure 1: Illustration of the hierarchical deep research system architecture.

Supervised Fine-Tuning (SFT) lays the foundation for these systems, but its limitations (imitation and exposure biases, and underuse of dynamic environment feedback) motivate RL. By optimizing trajectory-level policies, RL offers an advantage in complex task domains: it reduces dependence on human priors and improves robustness through exploration and principled credit assignment.
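The contrast between imitation and trajectory-level optimization can be written compactly. The formulation below is the standard textbook one, not notation taken from the survey: the symbols $\pi_\theta$ (policy), $\mathcal{D}$ (dataset), $y^{*}$ (demonstration), $\tau$ (a sampled trajectory including tool calls), and $R(\tau)$ (trajectory reward) are all assumptions introduced for illustration.

```latex
\begin{align}
\mathcal{L}_{\mathrm{SFT}}(\theta)
  &= -\,\mathbb{E}_{(x,\, y^{*}) \sim \mathcal{D}}
     \sum_{t=1}^{|y^{*}|} \log \pi_\theta\!\left(y^{*}_{t} \mid x,\, y^{*}_{<t}\right) \\
J_{\mathrm{RL}}(\theta)
  &= \mathbb{E}_{x \sim \mathcal{D},\; \tau \sim \pi_\theta(\cdot \mid x)}
     \left[ R(\tau) \right]
\end{align}
```

SFT conditions every step on the demonstration prefix $y^{*}_{<t}$, which is one way to see exposure bias: the model is never trained on its own earlier mistakes. The RL objective samples $\tau$ from the policy itself, so environment feedback enters through $R(\tau)$.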

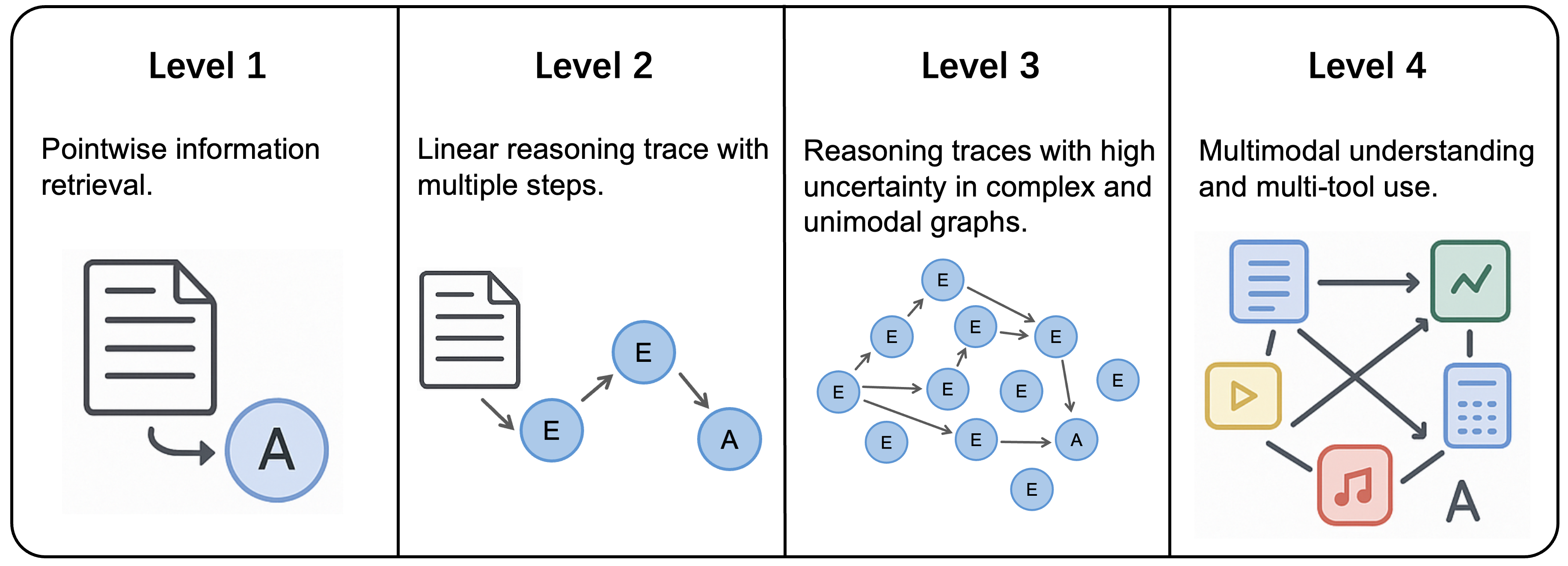

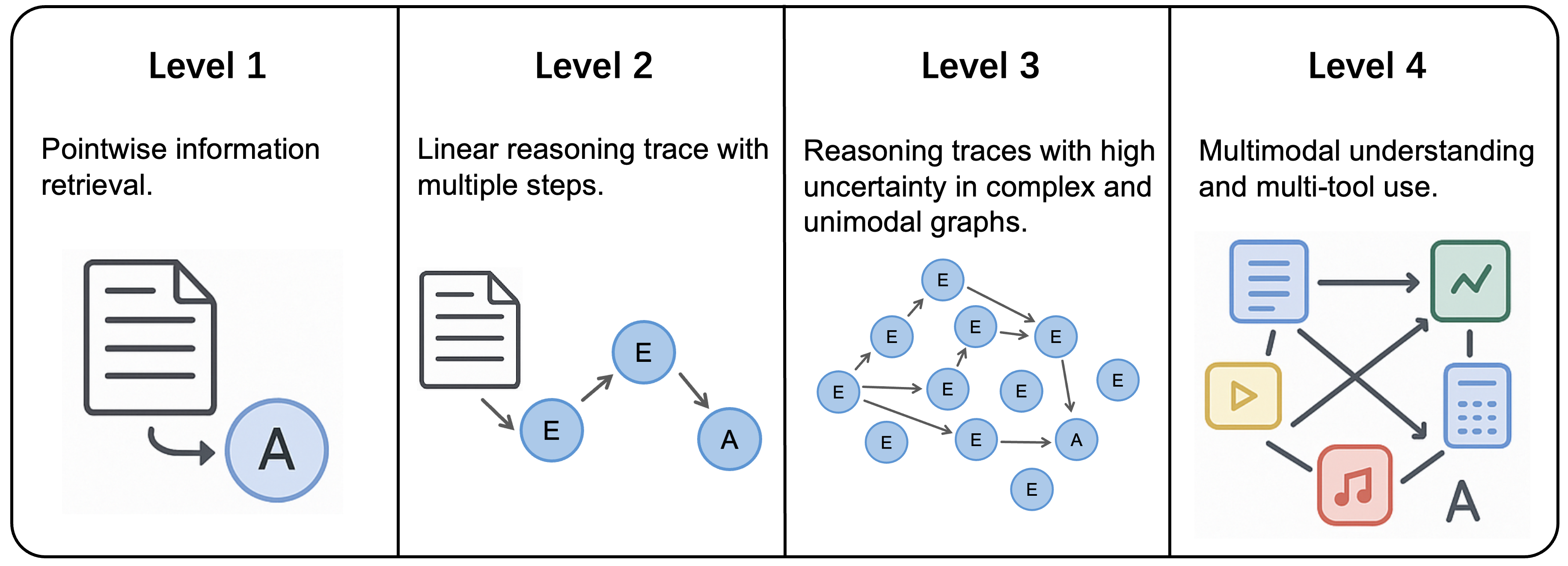

Figure 2: Illustration of QA Task Complexity Levels.

The authors organize the literature along three main axes: (i) data synthesis and curation; (ii) RL methods for agentic research, including stability, reward design, and multimodal integration; and (iii) RL training systems. These axes are analyzed to present a cohesive view of the current landscape and to extract practical insights for advancing the field.

Data Synthesis and Curation

The success of deep research systems is intricately tied to the quality of data used for training. Synthetic data generation, consequently, plays a pivotal role. Current research segments this domain into three primary strategies: cross-document composition, structure-driven path growth, and difficulty staging by transformation/rollouts. Each approach targets eliciting and refining model capabilities for complex, multi-step reasoning tasks (Table 1).
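One plausible reading of "difficulty staging by rollouts" is to bucket synthetic questions by how often a reference policy solves them. The sketch below assumes that reading. The function names, the three stage labels, and the 0.75/0.25 thresholds are hypothetical and do not come from the survey.

```python
from typing import Dict, List


def pass_rate(outcomes: List[bool]) -> float:
    """Fraction of rollouts that ended in a verified-correct answer."""
    if not outcomes:
        raise ValueError("need at least one rollout per question")
    return sum(outcomes) / len(outcomes)


def stage_by_pass_rate(
    rollouts: Dict[str, List[bool]],
    easy_min: float = 0.75,  # hypothetical threshold
    hard_max: float = 0.25,  # hypothetical threshold
) -> Dict[str, List[str]]:
    """Group question ids into easy / medium / hard by empirical pass rate."""
    stages: Dict[str, List[str]] = {"easy": [], "medium": [], "hard": []}
    for qid, outcomes in rollouts.items():
        rate = pass_rate(outcomes)
        if rate >= easy_min:
            stages["easy"].append(qid)
        elif rate <= hard_max:
            stages["hard"].append(qid)
        else:
            stages["medium"].append(qid)
    return stages
```

A curriculum could then draw from the "easy" bucket first and shift toward "hard" as training progresses. Questions with a pass rate of exactly 0 or 1 carry little learning signal under group-relative methods, so a pipeline might also filter or transform them.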

The paper distinguishes RL training data from SFT/DPO in its purpose of prioritizing end-to-end improvement from closed-loop, verifiable environment signals, as opposed to imitation (SFT) or relative preference alignment (DPO). RL data are designed to reward the system for trajectory-level performance, leveraging both outcome and step-level feedback. This reduces reliance on human priors and biases by permitting exploration and principled trade-offs over long horizons. The authors categorize QA tasks into four complexity levels (Figure 2) to guide dataset construction and curriculum design.

RL Methods for Agentic Research

Deep research systems operate in multi-step, tool-rich environments and therefore require advanced RL training pipelines (see the example works in the survey's training-regime table). Building on the DeepSeek-R1-style pipeline, recent work improves stability, efficiency, and scalability. Key themes include cold-start strategies, curriculum design, cost and latency control during training, token-level policy optimization (PPO/GRPO with token masking and KL anchors), guided exploration, and verifiable, outcome-first rewards that keep optimization stable without inducing tool avoidance or reward hacking, as illustrated in Figure 1.

Training Regimes

The training regime itself is fundamental to long-horizon learning. The standard recipe combines a cold-started policy (optionally via SFT/RSFT), templated rollouts with explicit tool tags and budgets, outcome and format rewards, and PPO/GRPO with KL penalties for stability. Beyond this baseline, research adds curriculum learning and search-necessity signals, and uses warm starts and dynamic or task-specific curricula to improve sample efficiency, exploration, and multi-objective trade-offs.
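The PPO/GRPO ingredients named above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the survey's or any library's implementation: the function names, the clip range of 0.2, and the KL coefficient of 0.01 are hypothetical, and the per-token KL term uses the common non-negative estimator `exp(d) - d - 1` with `d = logp_ref - logp_new`. The mask excludes tokens the policy did not generate (for example, tool outputs pasted into the context).

```python
import math
from typing import List, Sequence


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: standardize rewards within one prompt's rollout group."""
    if not rewards:
        raise ValueError("need at least one rollout in the group")
    mean = sum(rewards) / len(rewards)
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / len(rewards))
    return [(r - mean) / (std + eps) for r in rewards]


def masked_policy_loss(
    logp_new: Sequence[float],   # log-probs under the policy being updated
    logp_old: Sequence[float],   # log-probs under the rollout policy
    logp_ref: Sequence[float],   # log-probs under the frozen reference policy
    mask: Sequence[int],         # 1 = policy-generated token, 0 = tool/environment token
    advantage: float,
    clip: float = 0.2,           # hypothetical clip range
    kl_coef: float = 0.01,       # hypothetical KL weight
) -> float:
    """Clipped surrogate loss with a KL anchor, averaged over unmasked tokens."""
    total, count = 0.0, 0
    for ln, lo, lr, m in zip(logp_new, logp_old, logp_ref, mask):
        if not m:
            continue  # masked tokens contribute no gradient signal
        ratio = math.exp(ln - lo)
        clipped = max(min(ratio, 1.0 + clip), 1.0 - clip)
        surrogate = min(ratio * advantage, clipped * advantage)
        d = lr - ln
        kl = math.exp(d) - d - 1.0  # non-negative per-token KL estimate
        total += -(surrogate - kl_coef * kl)
        count += 1
    return total / max(count, 1)
```

In a real pipeline these quantities would be tensors and the loss would be differentiated by an autograd framework. The scalar version is only meant to make the roles of the mask, the clip, and the KL anchor explicit.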

Reward Design

Recent research develops methods for both outcome-level and step-level credit (see the survey's reward-design table). Verifiable outcome rewards anchor instruction alignment, while novel signals (gain-beyond-RAG, group-relative efficiency, knowledge-boundary checks) and fine-grained, step-level process rewards (tool execution, evidence utility) bias search and reasoning. These strategies improve performance on multi-step tasks, although the choice of reward ultimately affects stability and policy effectiveness. Open questions remain on composing and scheduling multiple objectives without inducing reward hacking, and on learning budget-aware, risk-sensitive policies.
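A minimal sketch of how outcome-level and step-level signals might be combined into one scalar reward follows. Everything here is an illustrative assumption: the `Step` fields, the function name, and the weights are hypothetical. The weights are chosen so that format and process bonuses together stay well below the outcome reward, which is one simple way to keep the reward outcome-first.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    tool_ok: bool        # the tool call executed without error
    evidence_used: bool  # the retrieved evidence was cited in later reasoning


def composite_reward(
    answer_correct: bool,
    format_ok: bool,
    steps: List[Step],
    w_outcome: float = 1.0,  # hypothetical weights
    w_format: float = 0.1,
    w_step: float = 0.2,
) -> float:
    """Outcome reward plus small format and step-level process bonuses."""
    if steps:
        step_scores = [0.5 * s.tool_ok + 0.5 * s.evidence_used for s in steps]
        process = sum(step_scores) / len(step_scores)
    else:
        process = 0.0
    return w_outcome * answer_correct + w_format * format_ok + w_step * process
```

With these weights, a wrong answer reached through flawless tool use still scores lower than a correct answer with no process credit. That ordering is the property to check when tuning weights against reward hacking.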

Multimodal Integration

Deep research systems extend to multimodal settings, which require handling tasks over diverse data types (see the survey's multimodal table). The survey describes models that build on vision-language models (VLMs) to unify perception and reasoning in token space, emphasizing action-initiated perception (crop/zoom, edit-reason cycles) for high-entropy tasks. These agents need observation engineering to encourage verifiable use of evidence and to identify modality preferences, pointing toward efficient reasoning over complex, heterogeneous inputs.
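Action-initiated perception means the agent chooses where to look instead of receiving a fixed view. The toy sketch below treats an image as a non-empty nested list of pixel values and exposes two hypothetical actions, `crop` and `zoom`. The names, the `(top, left, bottom, right)` box convention, and the nearest-neighbour upscaling are assumptions for illustration and are not taken from any system in the survey.

```python
from typing import List, Tuple

Image = List[List[int]]  # rows of pixel values; assumed non-empty and rectangular


def crop(image: Image, box: Tuple[int, int, int, int]) -> Image:
    """Return the sub-image for box = (top, left, bottom, right), end-exclusive."""
    top, left, bottom, right = box
    height, width = len(image), len(image[0])
    if not (0 <= top < bottom <= height and 0 <= left < right <= width):
        raise ValueError("box must lie inside the image and be non-empty")
    return [row[left:right] for row in image[top:bottom]]


def zoom(image: Image, factor: int) -> Image:
    """Nearest-neighbour upscaling: repeat every pixel and every row `factor` times."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    return [
        [px for px in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]
```

An agent loop would emit such an action, receive the cropped or zoomed region as a new observation, and reason over it before answering.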

Agent Architecture and Coordination

The hierarchical architecture separates planning from execution, enabling strategic tool use, task delegation, and division of labor through the Coordinator and Executors. The survey compares system architectures by their task-orchestration approach, noting differing choices in planning roles, tool structures, and human observability. These design choices inform scalable, reliable systems for real-world tasks and leave room to adapt to different task volumes.
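The Planner, Coordinator, and Executor split can be sketched as follows. This is a toy illustration, not an architecture taken from the survey: the fixed two-step plan, the tool names, and the `Coordinator` interface are all hypothetical, and a real Planner would be a learned policy.

```python
from typing import Callable, Dict, List, Tuple

Executor = Callable[[str], str]  # takes a sub-task description, returns a result


def plan(question: str) -> List[Tuple[str, str]]:
    """Planner: decompose a question into (tool, sub-task) pairs (fixed toy plan)."""
    return [
        ("search", f"find sources for: {question}"),
        ("summarize", f"summarize evidence for: {question}"),
    ]


class Coordinator:
    """Dispatch each sub-task to the Executor registered for its tool."""

    def __init__(self, executors: Dict[str, Executor]) -> None:
        self.executors = executors

    def run(self, subtasks: List[Tuple[str, str]]) -> List[str]:
        results = []
        for tool, task in subtasks:
            if tool not in self.executors:
                raise KeyError(f"no executor registered for tool '{tool}'")
            results.append(self.executors[tool](task))
        return results


if __name__ == "__main__":
    # Stub executors stand in for real search and summarization tools.
    executors = {
        "search": lambda task: f"[search] {task}",
        "summarize": lambda task: f"[summarize] {task}",
    }
    for line in Coordinator(executors).run(plan("What is GRPO?")):
        print(line)
```

Keeping the Planner a pure function of the question and the Executors plain callables makes each role independently replaceable, which is the practical benefit of separating planning from execution.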

Conclusion

This paper unpacks the RL foundations needed to train and deploy deep research systems. By addressing scalability, data curation, reward design, and coordination, it maps a path toward better performance in multi-step environments. Future work includes evaluating refined reward models, unifying multimodal reasoning, and further optimizing long-horizon agent behavior, along with clearer agent roles and decision-making frameworks for dynamic environments.