- The paper presents a systematic, large-scale benchmark of 11 optimizers for LLM pretraining, identifying methods like AdEMAMix and MARS as potent alternatives to AdamW.

- The paper employs rigorous hyperparameter tuning and controlled ablations on weight decay, learning rate schedules, and warmup durations to assess optimizer performance.

- The paper demonstrates that insights from dense model experiments transfer to MoE architectures, offering practical guidance for tuning optimizers in different LLM setups.

Benchmarking Optimizers for LLM Pretraining: A Comprehensive Evaluation

Introduction

This work presents a systematic, large-scale empirical study of optimization algorithms for LLM pretraining, addressing the lack of standardized, controlled benchmarks in the field. The authors evaluate 11 optimizers (including AdamW, AdEMAMix, MARS, SOAP, Muon/D-Muon, Signum, Lion, Sophia, Prodigy, and SF-AdamW) across a range of model sizes (124M–720M parameters), batch sizes, and training durations, with careful hyperparameter tuning and compute accounting. The paper also includes extensive ablations on critical training hyperparameters (e.g., weight decay, learning rate schedules, warmup, initialization, gradient clipping), and extends the analysis to Mixture-of-Experts (MoE) architectures. The codebase and all experimental configurations are open-sourced for reproducibility.

Experimental Design and Methodology

The benchmark is constructed around Llama-like transformer architectures with modern components (SwiGLU, RMSNorm, RoPE, weight tying), trained on a 100B-token subset of FineWeb. Four dense model sizes (124M, 210M, 583M, 720M) and a 520M MoE variant are considered. Batch sizes are varied from 16K to 2M tokens, and training durations are chosen to span both below and above the Chinchilla-optimal regime. All optimizers are tuned per model/batch/horizon, with grid searches over learning rate, betas, weight decay, warmup, gradient clipping, and scheduler parameters. Compute cost is normalized across methods.
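To make "below and above the Chinchilla-optimal regime" concrete, the sketch below converts a parameter count into a token budget and a step count. It assumes the common rule of thumb of roughly 20 training tokens per parameter, which is not a figure taken from the paper, and it uses the 256×512 batch configuration that appears later in this summary. The helper names are hypothetical.

```python
# Rough token-budget arithmetic. The 20 tokens/parameter ratio is the
# widely used Chinchilla rule of thumb, assumed here for illustration.
CHINCHILLA_TOKENS_PER_PARAM = 20


def chinchilla_tokens(n_params: int) -> int:
    """Approximate compute-optimal token budget for a model of n_params."""
    return CHINCHILLA_TOKENS_PER_PARAM * n_params


def steps_for_budget(total_tokens: int, batch_tokens: int) -> int:
    """Optimizer steps needed to consume total_tokens (rounded up)."""
    return -(-total_tokens // batch_tokens)


if __name__ == "__main__":
    batch_tokens = 256 * 512  # 131,072 tokens per step
    for n_params in (124_000_000, 210_000_000, 583_000_000, 720_000_000):
        budget = chinchilla_tokens(n_params)
        steps = steps_for_budget(budget, batch_tokens)
        print(f"{n_params / 1e6:.0f}M params: ~{budget / 1e9:.2f}B tokens, {steps} steps")
```

Under this assumption, a run is "short" when its token count falls well below the printed budget and "long" when it exceeds it.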

The optimizer suite covers:

- Adam-like: AdamW, ADOPT, AdEMAMix

- Sign-based: Lion, Signum

- Second-order/Preconditioned: Muon, D-Muon, SOAP, Sophia

- Schedule-free/Parameter-free: SF-AdamW, Prodigy

- Variance-reduced: MARS

Ablations are performed for each optimizer on weight decay, learning rate schedule (cosine, WSD, linear), warmup, and learning rate decay endpoint. The paper also tracks wall-clock time and gradient norm dynamics.

Main Results

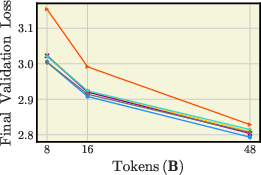

Optimizer Rankings Across Scales

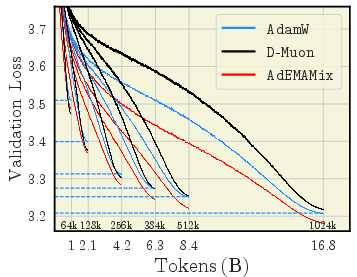

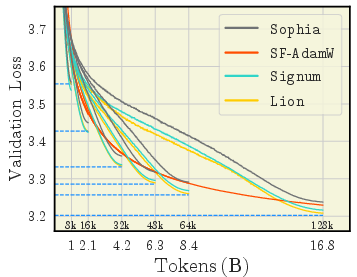

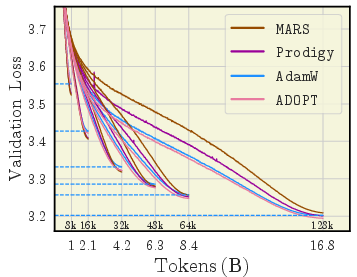

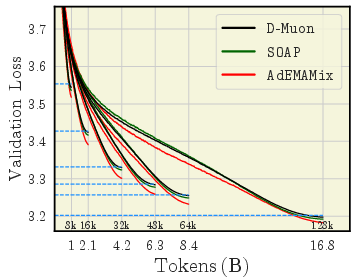

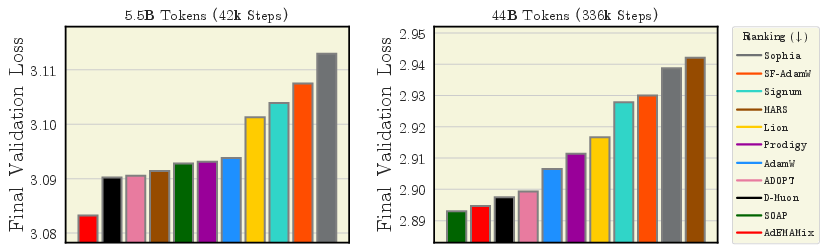

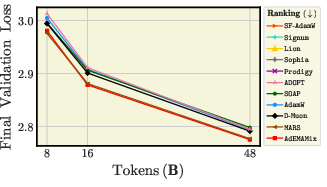

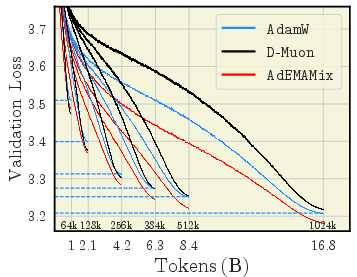

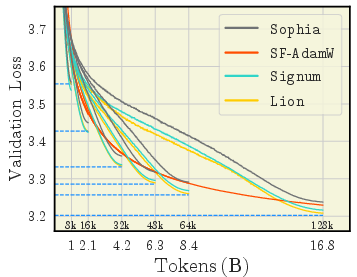

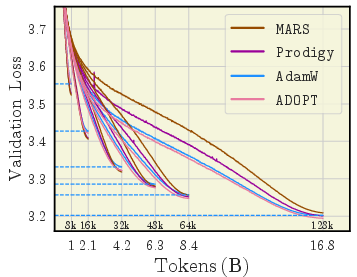

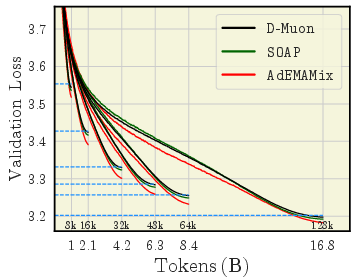

For small models (124M) and small batch sizes, AdamW remains competitive, but AdEMAMix, D-Muon, SOAP, and Prodigy can outperform it, especially in short runs. As batch size increases, sign-based methods (Signum, Lion) and MARS benefit substantially, closing the gap with or surpassing AdamW. For large models (720M) and large batches, AdEMAMix and MARS consistently dominate, with a significant margin over AdamW and other methods.

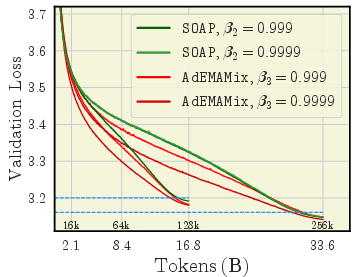

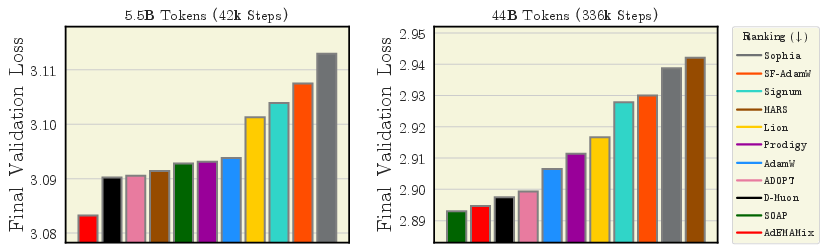

Figure 1: Ranking of optimizers for 720M Llama-based models. AdEMAMix and MARS achieve the lowest final validation loss, outperforming AdamW and other baselines.

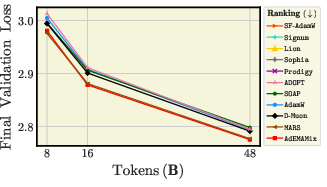

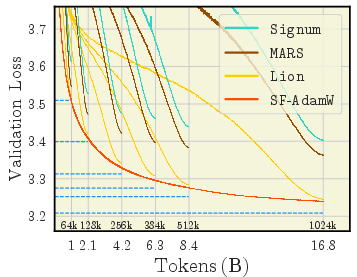

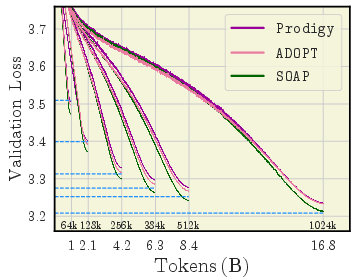

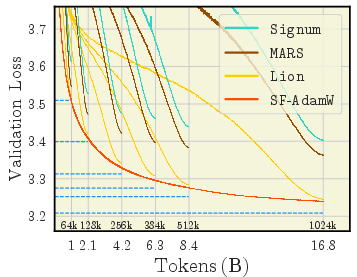

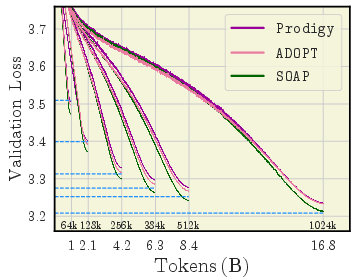

Training Dynamics and Batch Size Effects

Short training runs favor optimizers with aggressive weight decay and fast adaptation (e.g., D-Muon, AdEMAMix). As training duration increases, AdamW narrows the gap, but AdEMAMix remains superior, especially when betas are re-tuned for longer horizons. Increasing batch size disproportionately benefits sign-based and variance-reduced methods, with MARS, Prodigy, Lion, and Signum matching or exceeding AdamW at large batch sizes.

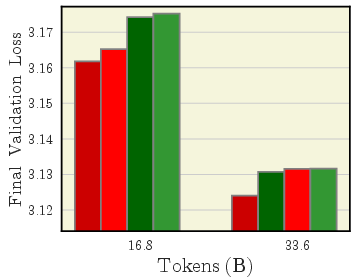

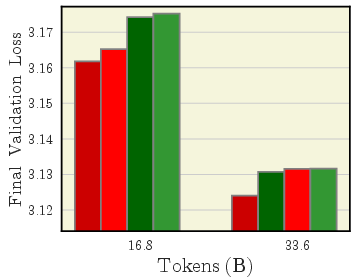

Figure 2: Comparing optimizers for training a 124M parameter LLM. Signum, MARS, Lion, and Prodigy benefit from increased batch size, outperforming AdamW in long runs.

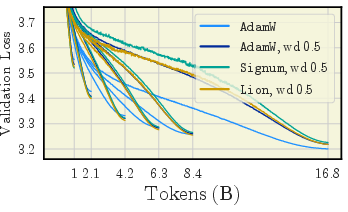

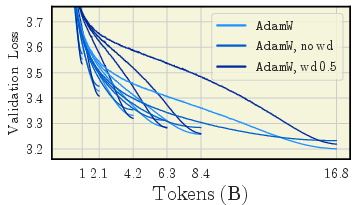

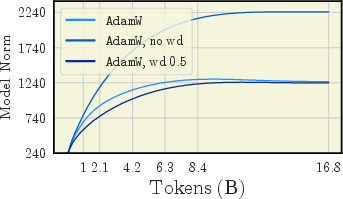

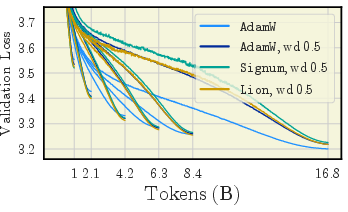

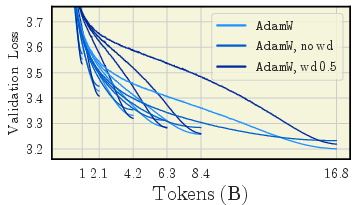

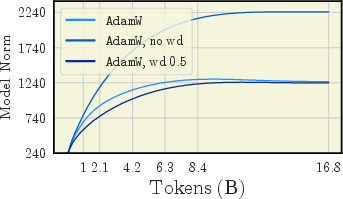

Weight Decay and Learning Rate Decay

Ablations reveal that large weight decay (e.g., 0.5) is optimal for short training, but moderate decay (0.1) is best for long runs. Omitting weight decay is consistently suboptimal. For learning rate schedules, decaying to 0.01× the maximum learning rate (or lower) is critical; the common practice of decaying only to 0.1× is suboptimal across all tested schedulers.
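The decay endpoint is easy to expose as an explicit parameter. The following is a minimal sketch of a cosine schedule with linear warmup, where `final_ratio` sets the fraction of the peak learning rate reached at the end of training. The function and argument names are illustrative and are not taken from the paper's codebase.

```python
import math


def cosine_lr(step, total_steps, max_lr, warmup_steps, final_ratio=0.01):
    """Linear warmup to max_lr, then cosine decay to final_ratio * max_lr."""
    if step < warmup_steps:
        # Linear warmup: reaches max_lr on the last warmup step.
        return max_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    min_lr = final_ratio * max_lr
    return min_lr + 0.5 * (max_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
```

Setting `final_ratio=0.1` reproduces the common default that the ablation finds suboptimal, so comparing the two endpoints requires changing only this one argument.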

Figure 3: Larger weight decay achieves significantly better results when training on fewer tokens. For long training, moderate decay is optimal.

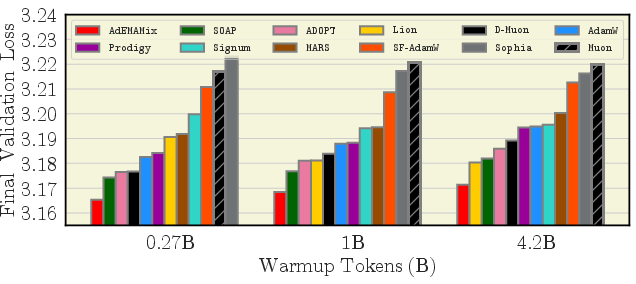

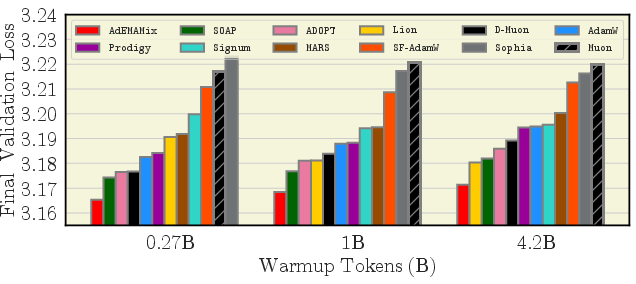

Warmup and Scheduler Interactions

Warmup duration is optimizer-dependent. SF-AdamW, Sophia, Signum, and Lion benefit from longer warmup, while AdamW and AdEMAMix prefer shorter warmup. Cosine scheduling is generally optimal, but WSD is preferred by Muon, and linear scheduling can be competitive for sign-based methods.
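For readers unfamiliar with WSD (warmup-stable-decay), the sketch below shows one possible form: linear warmup, a constant plateau, then a decay over the final fraction of training. The linear decay shape and the default `decay_fraction=0.2` are assumptions made for illustration; the paper's exact decay shape and fraction may differ.

```python
def wsd_lr(step, total_steps, max_lr, warmup_steps,
           decay_fraction=0.2, final_ratio=0.01):
    """Warmup-stable-decay schedule with a linear final decay (assumed shape)."""
    decay_steps = int(decay_fraction * total_steps)
    decay_start = total_steps - decay_steps
    if step < warmup_steps:
        # Warmup phase: ramp linearly up to max_lr.
        return max_lr * (step + 1) / warmup_steps
    if step < decay_start:
        # Stable phase: hold the peak learning rate.
        return max_lr
    # Decay phase: move linearly from max_lr to final_ratio * max_lr.
    progress = min(1.0, (step - decay_start) / max(1, decay_steps))
    min_lr = final_ratio * max_lr
    return max_lr - (max_lr - min_lr) * progress
```

Because warmup length is an explicit argument, the same function can be used to test longer warmup for sign-based methods and shorter warmup for AdamW-like ones.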

Figure 4: Warmup ablation. Sign-based optimizers and SF-AdamW benefit from increased warmup duration.

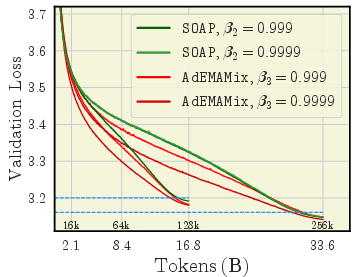

Hyperparameter Sensitivity and Transfer

Learning rate and beta parameters require careful tuning, especially for long training runs and large models. The optimal betas for AdamW-like methods increase with training duration (e.g., β₂=0.9999 for long runs). Prodigy’s effective learning rate closely tracks AdamW’s, suggesting its utility as a proxy for learning rate tuning.
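One way to see why larger betas suit longer runs is to unroll the exponential moving average that Adam-like methods maintain. The derivation below uses the standard second-moment recursion with $v_0 = 0$ and ignores bias correction; it is a general property of EMAs rather than a result from the paper.

```latex
v_t = \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2
    = (1-\beta_2) \sum_{k=0}^{t-1} \beta_2^{\,k}\, g_{t-k}^2,
\qquad
\sum_{k=0}^{\infty} k\,(1-\beta_2)\,\beta_2^{\,k} = \frac{\beta_2}{1-\beta_2} \approx \frac{1}{1-\beta_2}.
```

The effective averaging horizon is therefore about $1/(1-\beta_2)$ steps: roughly 1,000 steps for $\beta_2 = 0.999$ and 10,000 steps for $\beta_2 = 0.9999$. A horizon of 10,000 steps only makes sense when the run is substantially longer than that, which is consistent with higher betas paying off at longer training durations.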

Figure 5: Re-tuning beta parameters matters for longer training. Increasing β₃ for AdEMAMix is crucial for long runs.

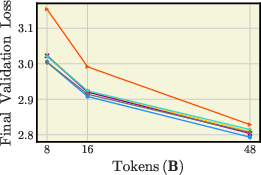

MoE Architectures

Optimizer rankings and best practices transfer smoothly from dense to MoE models. SOAP and AdEMAMix remain strong, with Prodigy and AdamW also competitive.

Figure 6: Ranking optimizers for 520M MoE models with a batch size of 256×512 tokens. Rankings mirror those for dense models.

All optimizers except SOAP exhibit similar wall-clock time scaling with model size. SOAP incurs a significant slowdown due to preconditioner computations.

Implementation and Practical Guidance

The paper provides detailed pseudocode and PyTorch implementations for all optimizers, including correct decoupled weight decay for sign-based methods (Signum, Lion). For Muon and D-Muon, the application of weight decay to all parameter groups is essential for robust performance. For AdEMAMix, slow EMA and α-scheduling are critical for stability and scaling. For Prodigy and SF-AdamW, bias correction and careful β₂ tuning are necessary.
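To illustrate what decoupled weight decay means for sign-based methods, here is a minimal pure-Python sketch of one Signum step and one Lion step. It follows the commonly published forms of these updates and is not the paper's implementation; the function names, the EMA form of the Signum momentum, and the default hyperparameters are assumptions.

```python
def sign(x):
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def signum_step(params, grads, momentum, lr, beta=0.9, weight_decay=0.1):
    """One Signum step: sign of an EMA momentum, with decoupled weight decay."""
    new_m = [beta * m + (1 - beta) * g for m, g in zip(momentum, grads)]
    # Decoupled decay: shrink the weights directly by lr * weight_decay,
    # instead of adding weight_decay * p to the gradient before taking sign().
    new_p = [p * (1 - lr * weight_decay) - lr * sign(m)
             for p, m in zip(params, new_m)]
    return new_p, new_m


def lion_step(params, grads, momentum, lr, beta1=0.9, beta2=0.99, weight_decay=0.1):
    """One Lion step: sign of an interpolated direction, then momentum update."""
    # Update direction interpolates the old momentum and the current gradient.
    direction = [beta1 * m + (1 - beta1) * g for m, g in zip(momentum, grads)]
    new_p = [p * (1 - lr * weight_decay) - lr * sign(c)
             for p, c in zip(params, direction)]
    # The stored momentum uses a second, slower coefficient.
    new_m = [beta2 * m + (1 - beta2) * g for m, g in zip(momentum, grads)]
    return new_p, new_m
```

If the decay term were folded into the gradient instead, the sign operation would discard its magnitude, so the regularization strength would no longer scale with `weight_decay`. Applying the shrinkage directly to the weights avoids this.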

Key practical recommendations:

- Always tune weight decay and learning rate decay endpoint; default values from popular codebases are often suboptimal.

- Re-tune betas for longer training runs; higher β₂ is generally better for AdamW-like methods.

- Use large batch sizes to unlock the potential of sign-based and variance-reduced optimizers.

- For schedule-free optimizers, tune warmup and betas carefully; do not disable gradient clipping for SF-AdamW.

- For Muon/D-Muon, ensure weight decay is applied to all parameter groups.

- For SOAP, be aware of wall-clock overhead at large model sizes.

Theoretical and Empirical Implications

The results challenge the default reliance on AdamW for LLM pretraining, demonstrating that newer optimizers (AdEMAMix, MARS, D-Muon) can achieve better loss and scaling, especially when hyperparameters are properly tuned. The paper also highlights the optimizer-dependence of best practices for weight decay, learning rate scheduling, and warmup, and exposes the sensitivity of some methods (e.g., Sophia) to training horizon and batch size.

The findings suggest that optimizer selection and tuning remain a critical, under-explored axis for improving LLM pretraining efficiency and final model quality. The transferability of results to MoE architectures and the open-sourcing of the benchmark framework provide a foundation for future research and industry adoption.

Limitations and Future Directions

The benchmark is limited to models up to 720M parameters and does not directly evaluate downstream task performance, though loss scaling is generally predictive. Some optimizers (e.g., Shampoo, Adan, Scion) and memory-efficient variants are not included. The paper does not address distributed training or sharding framework compatibility, which may affect practical deployment at larger scales.

Future work should extend the benchmark to trillion-parameter models, include downstream evaluation, and explore optimizer performance in distributed and low-precision settings. The development of unified benchmarks for memory- and communication-efficient optimizers is also a priority.

Conclusion

This work establishes a rigorous, reproducible benchmark for optimizer selection in LLM pretraining, providing actionable insights for both practitioners and researchers. The results demonstrate that with careful tuning, several modern optimizers can outperform AdamW, especially at scale and with large batch sizes. The open-source codebase and comprehensive ablations set a new standard for optimizer evaluation in the LLM community.