- The paper introduces TLinFormer, a linear attention mechanism that keeps the full context accessible while reducing the quadratic complexity of self-attention to linear.

- It employs unique neuron connectivity and context path encoding, achieving exact Softmax attention with strictly linear computational cost.

- Experimental results show competitive perplexity and a reduced memory footprint, which improves efficiency on long-sequence tasks.

"Rethinking Transformer Connectivity: TLinFormer, A Path to Exact, Full Context-Aware Linear Attention"

Introduction

The Transformer architecture is central to modern AI, largely because of its self-attention mechanism, which dynamically computes context representations. However, the cost of self-attention grows quadratically with sequence length, which limits its scalability for long-sequence tasks. This paper introduces TLinFormer, a novel linear attention mechanism that keeps attention computations exact and ensures that the complete historical context remains accessible.
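To make the quadratic cost concrete, the following is a minimal sketch of standard exact Softmax attention in plain Python. It is not TLinFormer; the function names `softmax` and `full_attention` are illustrative choices, and the score counter is there only to show that N queries against N keys produce N × N scores.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def full_attention(queries, keys, values):
    """Standard exact softmax attention (illustrative, not TLinFormer).

    Returns the outputs and the number of query-key scores computed.
    """
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    outputs = []
    n_scores = 0
    for q in queries:
        # One score per key: this inner loop is what makes the cost N * N.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
        n_scores += len(scores)
        weights = softmax(scores)
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs, n_scores
```

Doubling the sequence length in this sketch quadruples `n_scores`, which is the scaling behavior the paper sets out to avoid.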

Current Limitations and Alternatives

Efficient Transformer variants address the self-attention bottleneck with sparse attention (e.g., Longformer, BigBird) or kernel methods. These approaches often sacrifice model quality, either because their sparse patterns drop part of the context or because their kernel functions are data-agnostic. TLinFormer tackles these limitations directly, offering strictly linear complexity without approximating attention.
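As a rough illustration of the trade-off in sparse attention, the sketch below counts how many query-key pairs a causal sliding window attends to, compared with full causal attention. The local window is only one ingredient of models such as Longformer and BigBird, which also add global (and, in BigBird, random) connections; the function names and the `window` parameter are assumptions made for this example.

```python
def full_causal_pairs(n):
    """Query-key pairs in full causal attention over n tokens."""
    return n * (n + 1) // 2

def sliding_window_pairs(n, window):
    """Query-key pairs when each token sees at most `window` most recent tokens."""
    return sum(min(i + 1, window) for i in range(n))

def is_visible(i, j, window):
    """True if token i can attend to token j under a causal sliding window."""
    return j <= i and i - j < window
```

With a fixed window the pair count grows linearly in `n`, but `is_visible(n - 1, 0, window)` becomes false once `n` exceeds `window`: the earliest tokens fall out of direct reach, which is the kind of context loss the paper aims to avoid.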

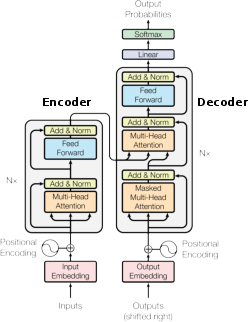

TLinFormer's architecture enables exact Softmax attention calculations while maintaining linear computational complexity. This is achieved through a novel connectivity structure built on unique neuron connection patterns.

This architecture (Figure 1) ensures full context awareness without approximating the attention mechanism, using architectural strategies that differ from traditional sparse or kernel-based approaches.

Complexity and Cache Efficiency

TLinFormer exhibits linear complexity in both Cache Miss and Cache Hit scenarios:

- Cache Miss: Every forward pass is computed from scratch, with complexity that stays linear in sequence length.

- Cache Hit: Exploits precomputed results to drastically reduce computation when generating subsequent tokens.
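The distinction between the two scenarios can be illustrated with a generic key-value cache for ordinary causal attention. This is a sketch of the general caching idea only, not TLinFormer's cache: the class `CachedAttention`, its methods, and the identity "projection" are all hypothetical, and in this plain version the per-step attention cost still grows with the number of cached tokens.

```python
import math

class CachedAttention:
    """Generic KV cache for standard causal attention (illustrative only)."""

    def __init__(self):
        self.keys = []
        self.values = []
        self.projections_computed = 0  # counts key/value computations

    def _project(self, token):
        # Stand-in for learned key/value projections (identity here).
        self.projections_computed += 1
        return list(token), list(token)

    def prefill(self, tokens):
        """Cache miss: discard the cache and recompute every key and value."""
        self.keys, self.values = [], []
        for t in tokens:
            k, v = self._project(t)
            self.keys.append(k)
            self.values.append(v)

    def step(self, token):
        """Cache hit: project only the new token, then attend over the cache."""
        k, v = self._project(token)
        self.keys.append(k)
        self.values.append(v)
        d = len(token)
        scores = [
            sum(a * b for a, b in zip(token, key)) / math.sqrt(d)
            for key in self.keys
        ]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        return [
            sum((e / z) * val[j] for e, val in zip(exps, self.values))
            for j in range(d)
        ]
```

After `prefill` on a prompt, each call to `step` adds exactly one projection instead of recomputing the whole history, which is the saving a cache hit provides.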

Experimental Results

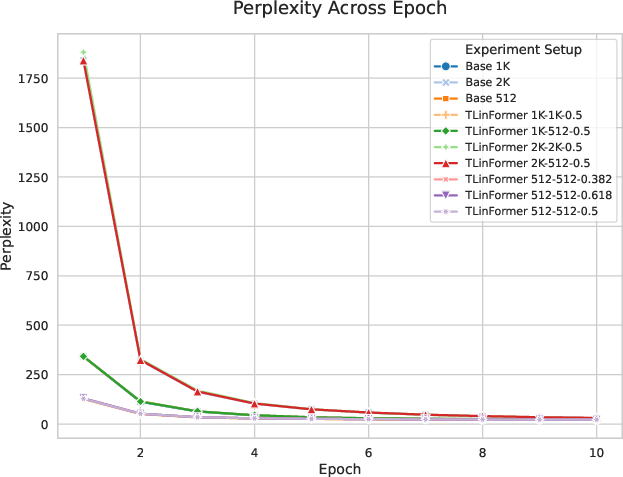

The experiments validate TLinFormer's efficiency, showing a significant reduction in memory footprint and inference latency compared to traditional Transformer models (Figure 2).

Figure 3: Perplexity (PPL) of each model over training epochs.

Results demonstrate that TLinFormer achieves competitive perplexity scores while maintaining efficiency. Notably, it supports significantly larger sequence lengths with reduced inference memory, making it suitable for long-context tasks.
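For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood per token, so lower is better. The helper below is a minimal sketch of that standard definition; the function name and its input format (a list of natural-log token probabilities) are assumptions for illustration and say nothing about the paper's evaluation code.

```python
import math

def perplexity(token_log_probs):
    """Perplexity from natural-log probabilities of each target token."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)
```

A model that assigns probability 0.25 to every target token has a perplexity of 4, meaning it is as uncertain as a uniform choice among four options.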

Discussion

TLinFormer's "forced compression" approach represents a step towards more intelligent models because it compels the network to compress and abstract information more deeply. This architecture could lead to more efficient, higher-capacity models that operate effectively on large inputs.

Conclusion

TLinFormer provides an innovative framework that resolves the efficiency and performance trade-offs in existing Transformer models. By adhering to connectionist principles and optimizing information flow, TLinFormer sets a strong precedent for future research into scalable and efficient neural architectures.

Overall, TLinFormer shows significant potential for advancing the capabilities of AI models, especially in handling long sequences. Future work will involve scaling the architecture further and exploring its applications across diverse AI tasks.