Gated Linear Attention Transformers with Hardware-Efficient Training

Overview

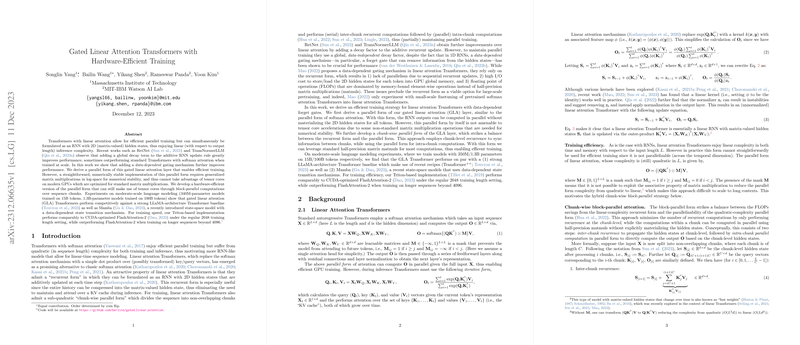

The paper "Gated Linear Attention Transformers with Hardware-Efficient Training" studies Transformer architectures that use linear attention in place of softmax attention to reduce computational cost. It introduces FlashLinearAttention, a hardware-efficient algorithm for linear attention that trades off memory movement against parallelizability, and builds on it to propose the Gated Linear Attention (GLA) Transformer. The GLA Transformer is benchmarked against softmax attention-based Transformers and other linear attention variants, showing competitive training speed and model accuracy.

Contributions

The primary contributions of the paper include:

- FlashLinearAttention Algorithm: The paper introduces FlashLinearAttention, a novel linear attention algorithm optimized for hardware efficiency. It addresses the inefficiencies of prior linear attention methods by avoiding excessive memory movements and better utilizing parallel computation resources.

- Data-Dependent Gating Mechanism: The paper extends linear attention with data-dependent gates, creating Gated Linear Attention (GLA). This mechanism replaces the fixed global decay rate used in earlier linear attention models with a more expressive, data-aware variant that improves model flexibility and performance.

- Empirical Benchmarking: Extensive experiments compare the GLA Transformer against existing models such as LLaMA, RetNet, and Mamba across a range of benchmarks. The results indicate that GLA Transformers match or exceed these baselines on language modeling tasks and exhibit strong length generalization.

Technical Details

FlashLinearAttention

FlashLinearAttention achieves hardware efficiency through two key strategies:

- Tiling and Memory Hierarchy Awareness: The algorithm breaks down computations into tiles that fit into fast, on-chip memory (SRAM), significantly reducing the reliance on slower global memory (HBM).

- Parallel and Sequential I/O Operations: Depending on memory constraints, it employs either a materialization approach, which holds intermediate states in HBM for increased parallelism, or a non-materialization approach, which recomputes states to save memory at the cost of additional computation.
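The chunking idea behind these two strategies can be illustrated with a minimal NumPy sketch. It assumes plain, unnormalized causal linear attention (state update S_t = S_{t-1} + k_t^T v_t, output o_t = q_t S_t), and the function names and `chunk_size` parameter are hypothetical. It does not model SRAM or HBM; it only shows that processing the sequence one tile at a time, carrying a running state between tiles, reproduces the token-by-token recurrence.

```python
import numpy as np

def linear_attention_recurrent(Q, K, V):
    """Reference recurrence: S_t = S_{t-1} + k_t^T v_t, o_t = q_t S_t."""
    T, d_k = Q.shape
    d_v = V.shape[1]
    S = np.zeros((d_k, d_v))
    O = np.zeros((T, d_v))
    for t in range(T):
        S = S + np.outer(K[t], V[t])
        O[t] = Q[t] @ S
    return O

def linear_attention_chunkwise(Q, K, V, chunk_size):
    """Chunkwise form (hypothetical helper): matmuls inside each chunk,
    a running state S carried sequentially across chunks."""
    T, d_k = Q.shape
    d_v = V.shape[1]
    S = np.zeros((d_k, d_v))  # summary of all previous chunks
    O = np.zeros((T, d_v))
    for start in range(0, T, chunk_size):
        end = min(start + chunk_size, T)
        Qc, Kc, Vc = Q[start:end], K[start:end], V[start:end]
        n = end - start
        causal_mask = np.tril(np.ones((n, n)))
        inter = Qc @ S                            # contribution of earlier chunks
        intra = ((Qc @ Kc.T) * causal_mask) @ Vc  # causal attention inside the chunk
        O[start:end] = inter + intra
        S = S + Kc.T @ Vc                         # fold this chunk into the state
    return O
```

In this sketch the loop over chunks is sequential, which loosely corresponds to keeping only one running state at a time. Storing the per-chunk states instead would allow the chunks to be processed in parallel, at the cost of extra memory.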

Gated Linear Attention (GLA)

GLA introduces a gating mechanism that dynamically adjusts based on the input data:

- Matrix-Valued Gates: Instead of using a fixed decay factor, GLA uses data-dependent gates calculated through a low-rank linear transformation followed by a sigmoid function. This allows finer control over the retention of information across time steps.

- Parallel Computation Form: The paper also establishes a parallel form for GLA, demonstrating how efficient chunkwise parallel computation can be achieved despite the complexity added by the gates.
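As a rough illustration of the two points above, the following NumPy sketch assumes the gate acts as a per-key-dimension decay vector alpha_t in (0, 1), so that the state evolves as S_t = diag(alpha_t) S_{t-1} + k_t^T v_t with output o_t = q_t S_t. The weight names `W1`, `W2`, `b` and the temperature `tau` are assumptions made for illustration and are not taken from this summary. The parallel form here is the naive one: it divides by cumulative gate products and is only suitable for short sequences, since those products can underflow.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gla_gates(X, W1, W2, b, tau=16.0):
    """Data-dependent gates: low-rank projection (W1 then W2), then sigmoid.
    tau is an assumed temperature that pushes gate values toward 1."""
    return sigmoid(X @ W1 @ W2 + b) ** (1.0 / tau)

def gla_recurrent(Q, K, V, A):
    """Recurrent form: S_t = diag(A[t]) S_{t-1} + k_t^T v_t, o_t = q_t S_t."""
    T, d_k = Q.shape
    d_v = V.shape[1]
    S = np.zeros((d_k, d_v))
    O = np.zeros((T, d_v))
    for t in range(T):
        S = A[t][:, None] * S + np.outer(K[t], V[t])
        O[t] = Q[t] @ S
    return O

def gla_parallel(Q, K, V, A):
    """Naive parallel form using cumulative gate products B[t] = prod_{j<=t} A[j].
    Unrolling the recurrence gives o_t = sum_{s<=t} ((q_t * B[t]) . (k_s / B[s])) v_s."""
    B = np.cumprod(A, axis=0)
    scores = np.tril((Q * B) @ (K / B).T)  # causal mask keeps s <= t
    return scores @ V
```

Both functions compute the same outputs on short inputs. The parallel form replaces the loop over time steps with matrix products, which is the property that makes chunkwise parallel training possible.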

Empirical Results

The empirical evaluation of the GLA Transformer encompasses several dimensions:

- Synthetic Tasks: The Multi-Query Associative Recall (MQAR) task shows that GLA outperforms scalar decay-based models like RetNet, validating the effectiveness of the data-dependent gating mechanism.

- Language Modeling: On language modeling benchmarks, GLA Transformers exhibit competitive perplexity and accuracy, closely matching or outperforming the state of the art, including the LLaMA architecture.

- Training Efficiency: GLA Transformers offer superior training throughput compared to Mamba and traditional Transformers, particularly when leveraging the materialization strategy for handling longer sequences.

Future Directions

The findings in this paper point towards several future research avenues:

- Scaling Up: Given the promising empirical results at moderate scales, the next step involves scaling GLA Transformers to larger models and datasets to explore their potential at industry-relevant scales.

- Cross-Modal Applications: Extending GLA mechanisms to other domains, such as vision and audio, where long-range dependencies are critical, could further validate its versatility and efficiency.

- Further Optimization: Continued enhancements in hardware-aware algorithms, potentially integrating emerging memory technologies or specialized computation units, could further improve the efficiency and performance of GLA Transformers.

Conclusion

The paper offers a significant step forward in the development of efficient Transformer architectures by integrating gating mechanisms into linear attention and optimizing their implementation for hardware. The GLA Transformer, underpinned by the FlashLinearAttention algorithm, presents a compelling alternative to conventional models, balancing computational efficiency and modeling power. This work opens new pathways for deploying large-scale neural models in resource-constrained environments while maintaining high performance.