- The paper introduces a semi-centralized multi-agent system (MAS) that uses structured agent-to-agent (A2A) communication via an MCP server to overcome the limitations of centralized planning.

- It reports a 9.09 percentage-point accuracy improvement over the OWL system on the GAIA benchmark, attributing the gain mainly to collaborative refinement and reduced context redundancy.

- The architecture reduces token overhead through thread-scoped messaging and supports scalable, modular integration of diverse agent types for real-world applications, although communication latency remains a source of errors.

Anemoi: A Semi-Centralized Multi-Agent System Leveraging Agent-to-Agent Communication via MCP Server

Introduction and Motivation

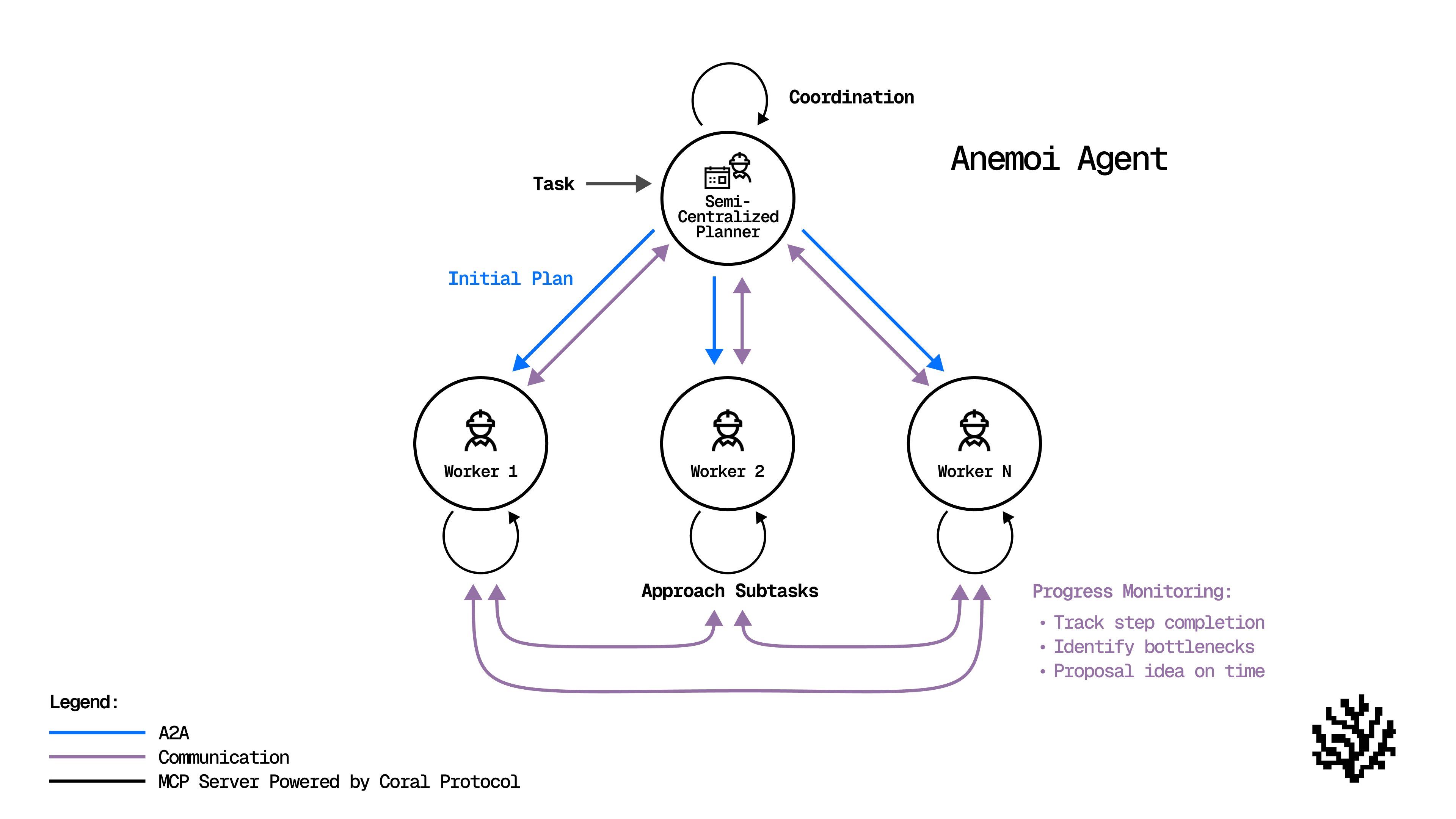

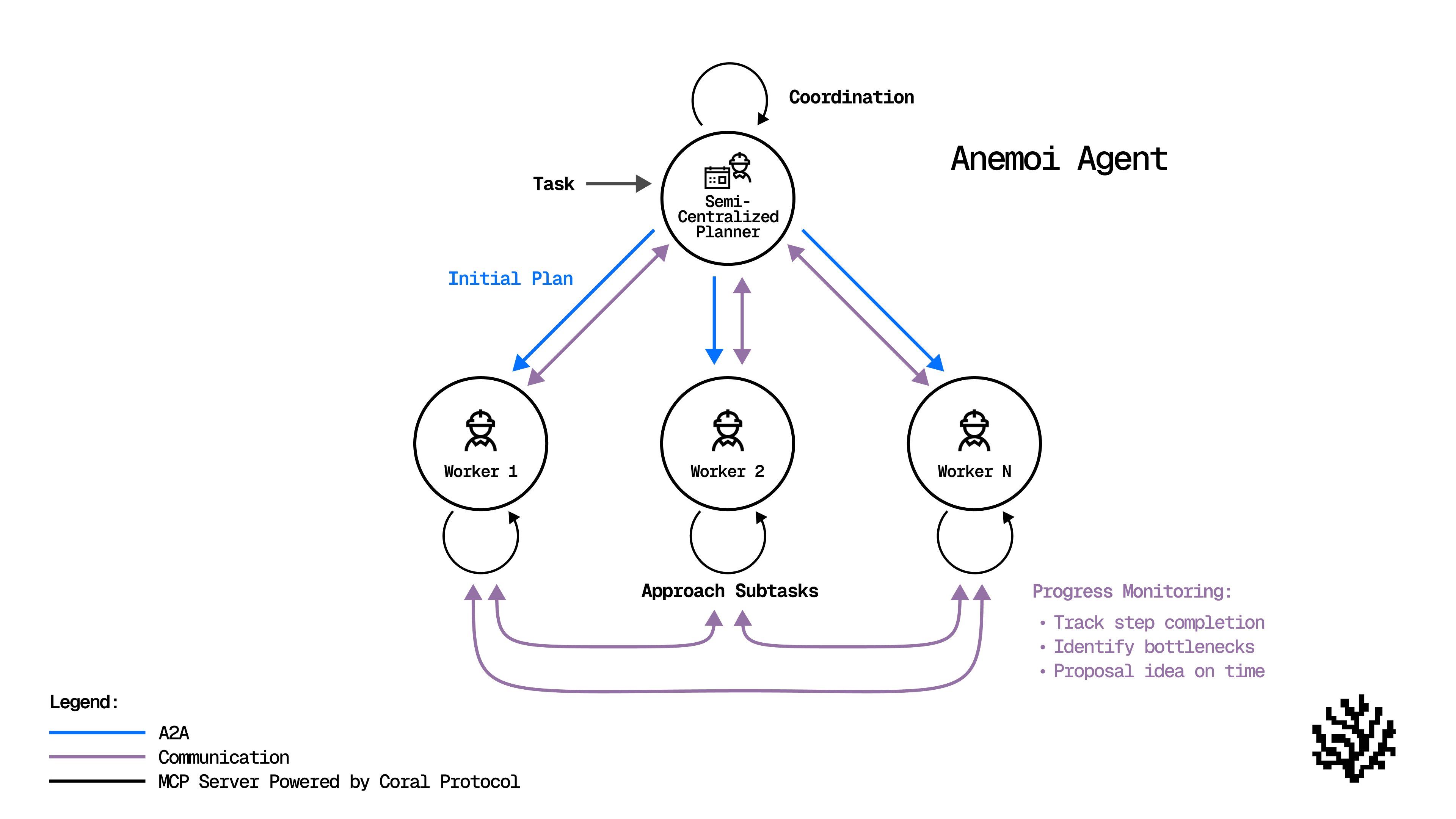

The Anemoi framework introduces a semi-centralized multi-agent system (MAS) architecture that departs from the prevailing paradigm in generalist MAS, which pairs context engineering with centralized coordination. Traditional systems rely on a strong planner agent to coordinate worker agents through unidirectional prompt passing. This leads to two major limitations: (1) performance degrades when the planner is powered by a smaller LLM, and (2) inter-agent collaboration is inefficient, because prompt concatenation and context injection introduce redundancy and information loss. Anemoi addresses these issues by enabling structured, direct agent-to-agent (A2A) communication via the MCP server from Coral Protocol, allowing all agents to monitor progress, assess results, and propose refinements in real time.

Figure 1: Architecture of Anemoi, a semi-centralized multi-agent system based on the A2A communication MCP server from Coral Protocol.

System Architecture and Communication Protocol

Anemoi's architecture is built around a dedicated MCP server that facilitates thread-based communication among agents. Each agent connects to the MCP server, which provides primitives for agent discovery, thread management, and message exchange. Threads serve as contextual compartments, ensuring that messages remain within their respective conversations and supporting directed queries and task delegation.
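The three primitives described above (agent discovery, thread management, and message exchange) can be illustrated with a minimal in-memory sketch. This is not the Coral Protocol MCP server API: the class `ThreadServer`, its method names, and the `mentions` field are all hypothetical and chosen only to show how threads can act as contextual compartments.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Message:
    sender: str
    content: str
    mentions: List[str] = field(default_factory=list)  # agents addressed directly


@dataclass
class Thread:
    thread_id: str
    participants: Set[str]
    messages: List[Message] = field(default_factory=list)


class ThreadServer:
    """Hypothetical in-memory stand-in for a thread-based communication server."""

    def __init__(self) -> None:
        self._agents: Dict[str, str] = {}    # agent name -> short description
        self._threads: Dict[str, Thread] = {}

    # Agent discovery
    def register_agent(self, name: str, description: str) -> None:
        self._agents[name] = description

    def list_agents(self) -> Dict[str, str]:
        return dict(self._agents)

    # Thread management
    def create_thread(self, thread_id: str, participants: List[str]) -> Thread:
        unknown = set(participants) - set(self._agents)
        if unknown:
            raise ValueError(f"unregistered agents: {sorted(unknown)}")
        thread = Thread(thread_id, set(participants))
        self._threads[thread_id] = thread
        return thread

    # Message exchange
    def send_message(self, thread_id: str, sender: str, content: str,
                     mentions: Optional[List[str]] = None) -> None:
        thread = self._threads[thread_id]
        if sender not in thread.participants:
            raise PermissionError(f"{sender} is not a participant of {thread_id}")
        mentioned = list(mentions or [])
        outside = set(mentioned) - thread.participants
        if outside:
            raise ValueError(f"mentioned agents not in thread: {sorted(outside)}")
        thread.messages.append(Message(sender, content, mentioned))

    def inbox(self, agent: str) -> List[Tuple[str, Message]]:
        """Messages that mention `agent`, limited to threads it participates in."""
        return [
            (thread.thread_id, message)
            for thread in self._threads.values()
            if agent in thread.participants
            for message in thread.messages
            if agent in message.mentions
        ]


server = ThreadServer()
for name in ("planner", "web", "critique"):
    server.register_agent(name, f"{name} agent")

server.create_thread("task-1", ["planner", "web"])
server.send_message("task-1", "planner",
                    "Search for the paper's publication year.", mentions=["web"])
```

In this sketch the web agent sees the delegated query in its inbox, while the critique agent, which is not a participant of `task-1`, sees nothing and cannot post to the thread. That is the isolation property the paragraph attributes to threads.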

The agent composition includes:

- Planner Agent: Generates the initial plan and initiates coordination.

- Critique Agent: Evaluates contributions for validity and certainty.

- Answer-Finding Agent: Compiles and submits the final response.

- Web Agent: Executes web searches and simulates browser actions.

- Document Processing Agent: Handles diverse document formats.

- Reasoning/Coding Agent: Specializes in reasoning, coding, and offline data processing.

All agents are integrated with the MCP toolkit, enabling them to monitor progress, track step completion, and propose new ideas throughout execution. The communication pattern supports dynamic plan refinement and consensus-based answer submission, reducing reliance on a single planner and minimizing redundant context passing.
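One way to picture consensus-based answer submission with plan refinement is a simple voting step. The source does not specify the consensus rule, so the sketch below assumes a configurable approval quorum (unanimity by default); the names `Vote` and `decide` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple, Union


@dataclass
class Vote:
    agent: str
    approve: bool
    suggestion: Optional[str] = None  # proposed refinement when rejecting


def decide(candidate: str, votes: List[Vote],
           quorum: float = 1.0) -> Tuple[str, Union[str, List[str]]]:
    """Return ("submit", candidate) if the approval share meets the quorum,
    otherwise ("refine", suggestions from the rejecting agents)."""
    if not votes:
        raise ValueError("at least one vote is required")
    approvals = sum(1 for vote in votes if vote.approve)
    if approvals / len(votes) >= quorum:
        return ("submit", candidate)
    suggestions = [v.suggestion for v in votes if not v.approve and v.suggestion]
    return ("refine", suggestions)


# Assumed scenario: the critique agent rejects the candidate and proposes a new step.
round_one = decide("1997", [
    Vote("web", True),
    Vote("reasoning", True),
    Vote("critique", False, "Cross-check the year against a second source."),
])

# After the refinement is carried out, every agent approves.
round_two = decide("1998", [
    Vote("web", True),
    Vote("reasoning", True),
    Vote("critique", True),
])
```

Under these assumptions a single rejection sends the task back for refinement instead of letting one agent submit alone, which mirrors the reduced reliance on a single planner described above.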

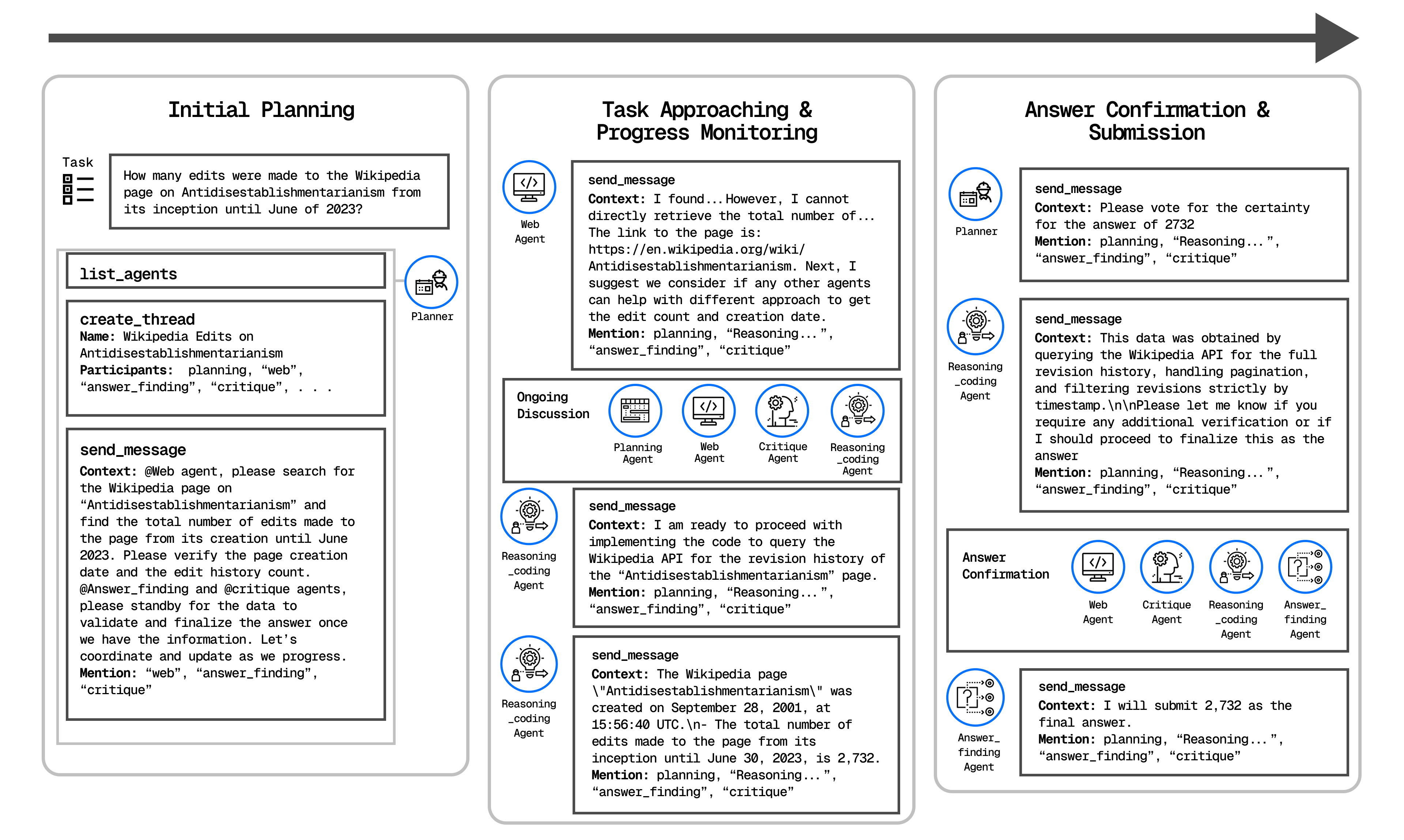

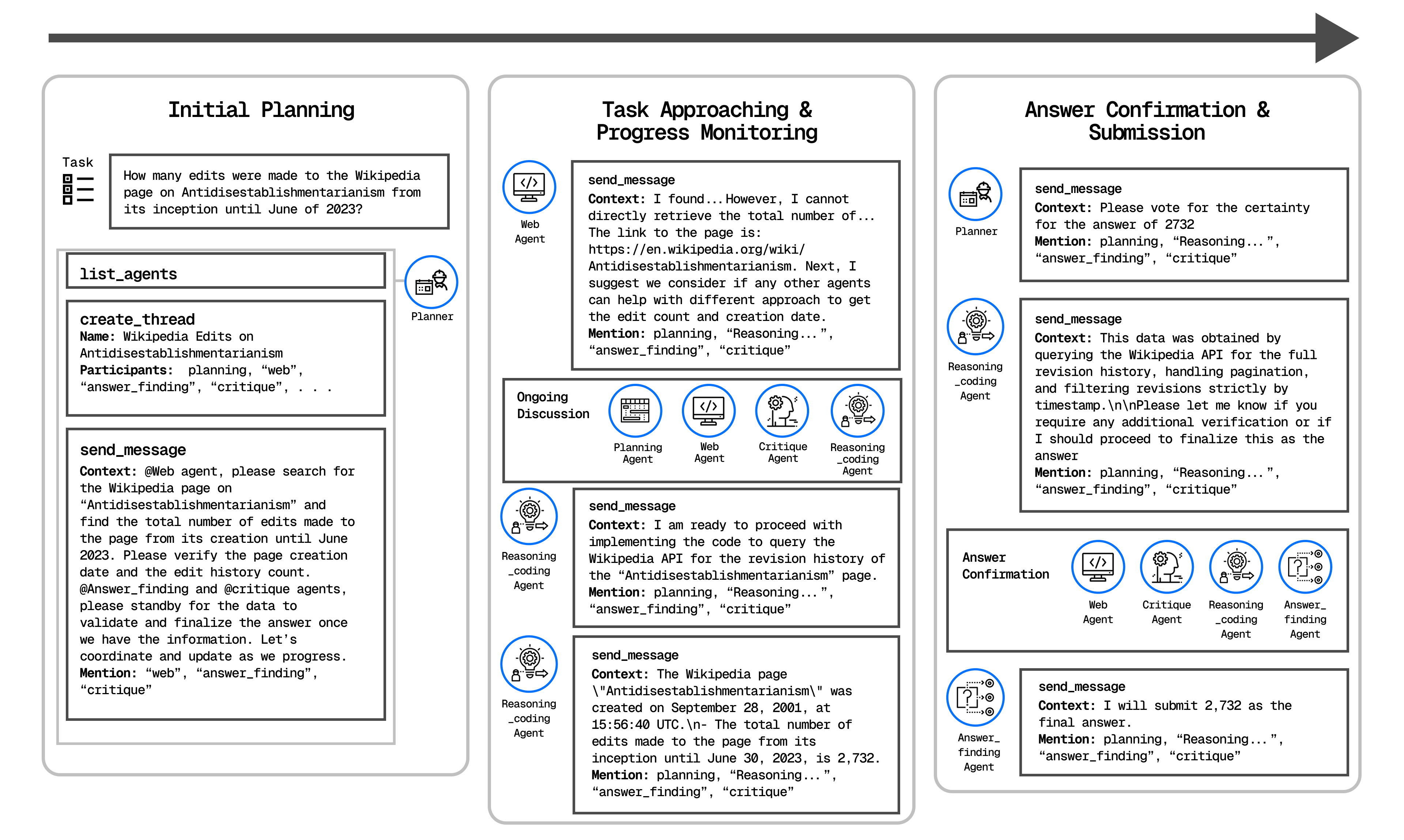

Figure 2: Overview of Anemoi. The system includes a planning agent that creates the initial plan and a set of agents with different capabilities. The A2A communication MCP server enables all agents to monitor progress together.

Experimental Evaluation

Benchmark and Baselines

Anemoi was evaluated on the GAIA benchmark, which comprises real-world, multi-step tasks requiring web search, multi-modal file processing, and coding capabilities. The experimental setup ensured parity with the strongest open-source baseline, OWL, by using identical worker agent configurations and toolkits. The planner agent in Anemoi was powered by GPT-4.1-mini, while worker agents used GPT-4o. This configuration was chosen to highlight the robustness of the semi-centralized paradigm under weaker planner models.

Anemoi achieved an accuracy of 52.73% on the GAIA validation set (pass@3), outperforming OWL (43.63%) by 9.09 percentage points under identical LLM settings. Notably, Anemoi also surpassed several proprietary and open-source frameworks that employed stronger LLMs, which suggests that the A2A communication paradigm mitigates the limitations of context-engineering-based coordination.
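Subtracting the two reported accuracies gives 9.10 rather than 9.09. The short check below shows that the figures are consistent if they come from whole-question counts. It assumes the GAIA validation set contains 165 questions, which is not stated in the text, and the implied counts are derived from the reported percentages rather than taken from the paper.

```python
GAIA_VALIDATION_SIZE = 165  # assumption: not stated in the text above

# Recover the whole-question counts implied by the reported accuracies.
anemoi_correct = round(0.5273 * GAIA_VALIDATION_SIZE)
owl_correct = round(0.4363 * GAIA_VALIDATION_SIZE)

# Gap in percentage points, computed from counts instead of rounded percentages.
gap_points = 100 * (anemoi_correct - owl_correct) / GAIA_VALIDATION_SIZE

print(anemoi_correct, owl_correct, round(gap_points, 2))
```

Under that assumption the gap computed from counts rounds to 9.09 percentage points, so the small mismatch with the subtraction would come from how the individual percentages were rounded or truncated.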

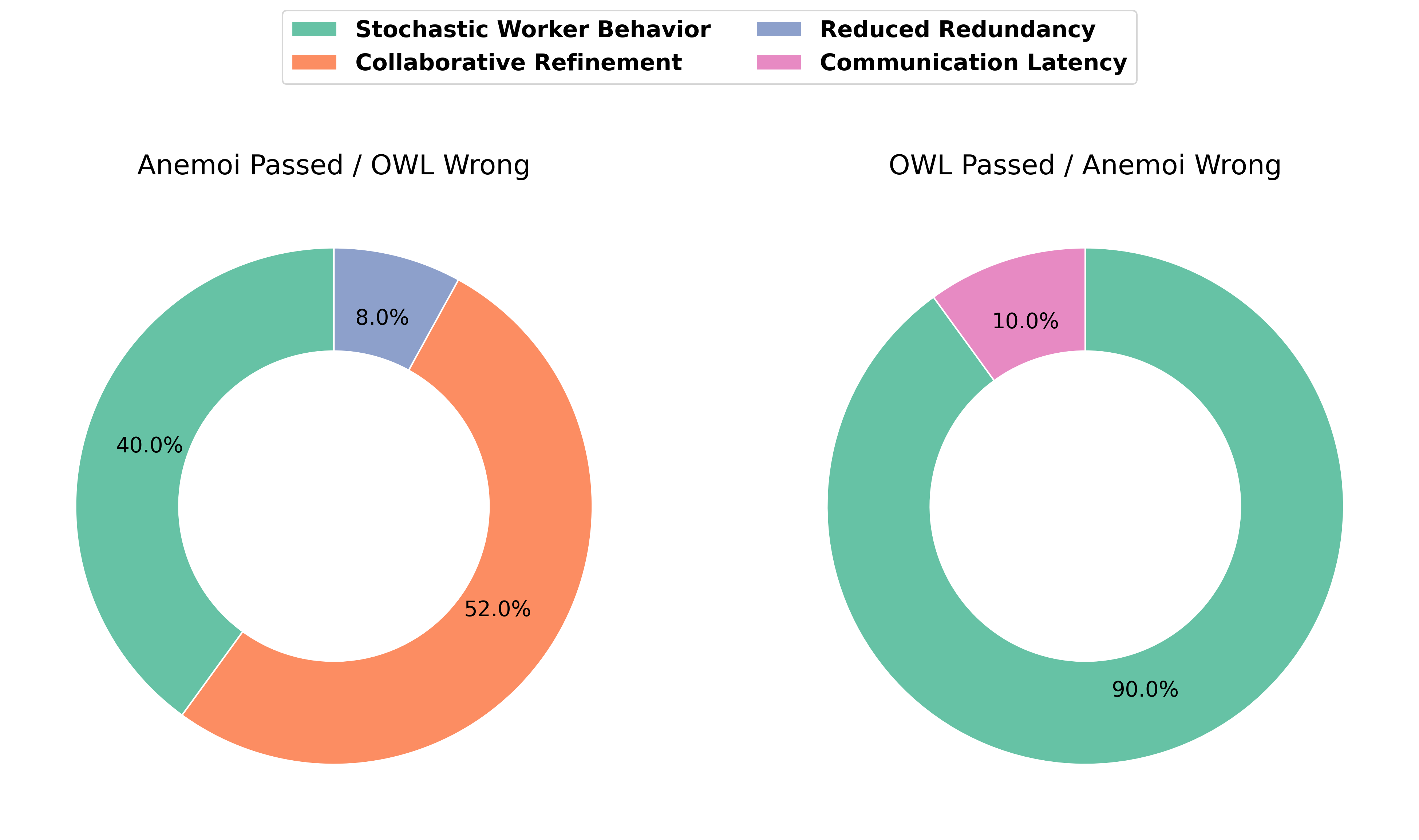

Comparative Task Attribution and Error Analysis

Task Attribution Analysis

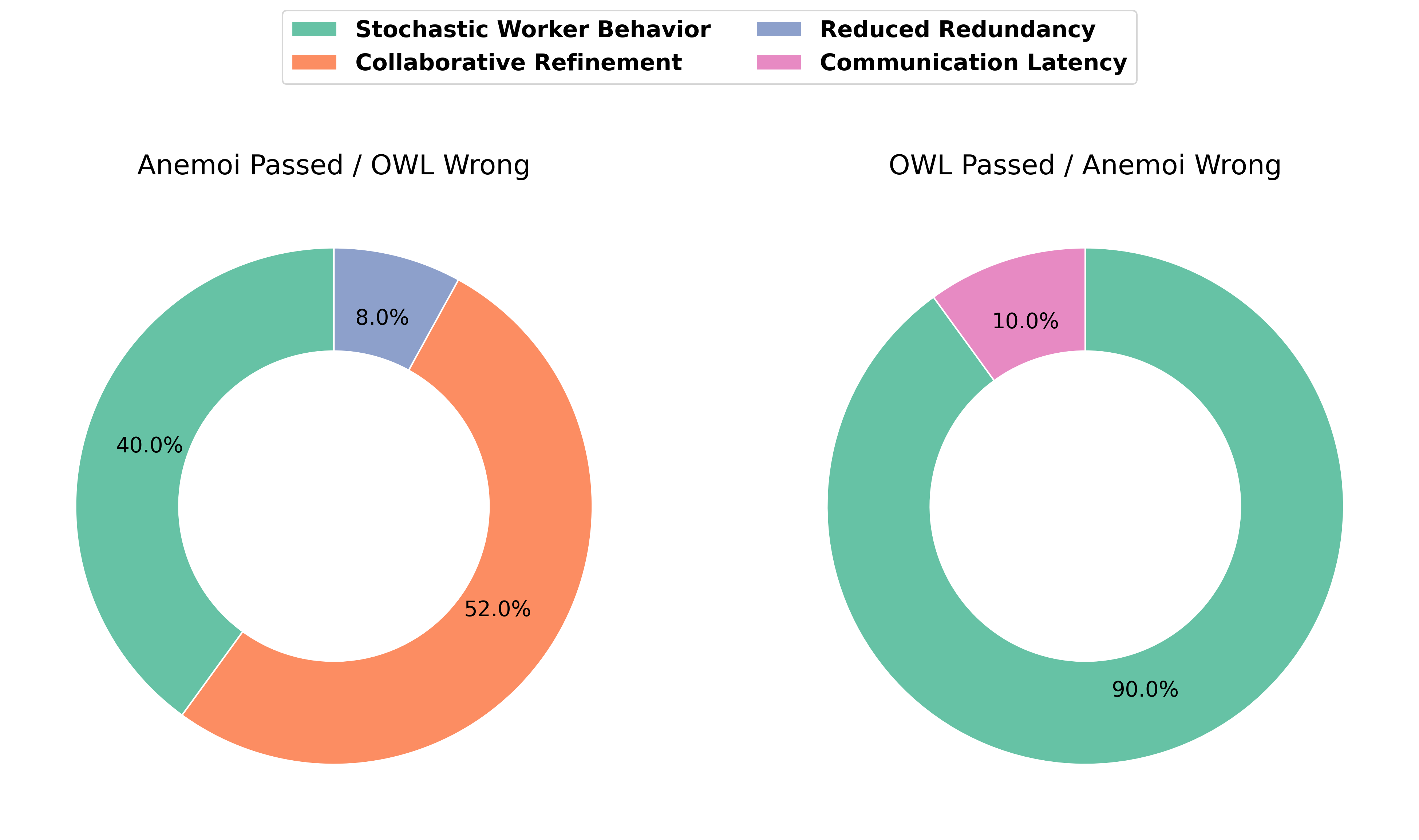

A detailed comparison of task attribution between Anemoi and OWL revealed that Anemoi's additional successes were primarily due to collaborative refinement (52%) and reduced context redundancy (8%), with the remainder attributed to stochastic worker behavior (40%). Conversely, OWL's successes over Anemoi were predominantly due to stochastic worker behavior (90%) and, to a lesser extent, communication latency (10%).

Figure 3: Comparison of task attribution categories between Anemoi and OWL. The donut chart illustrates the distribution of reasons why Anemoi succeeded where OWL failed, and vice versa.

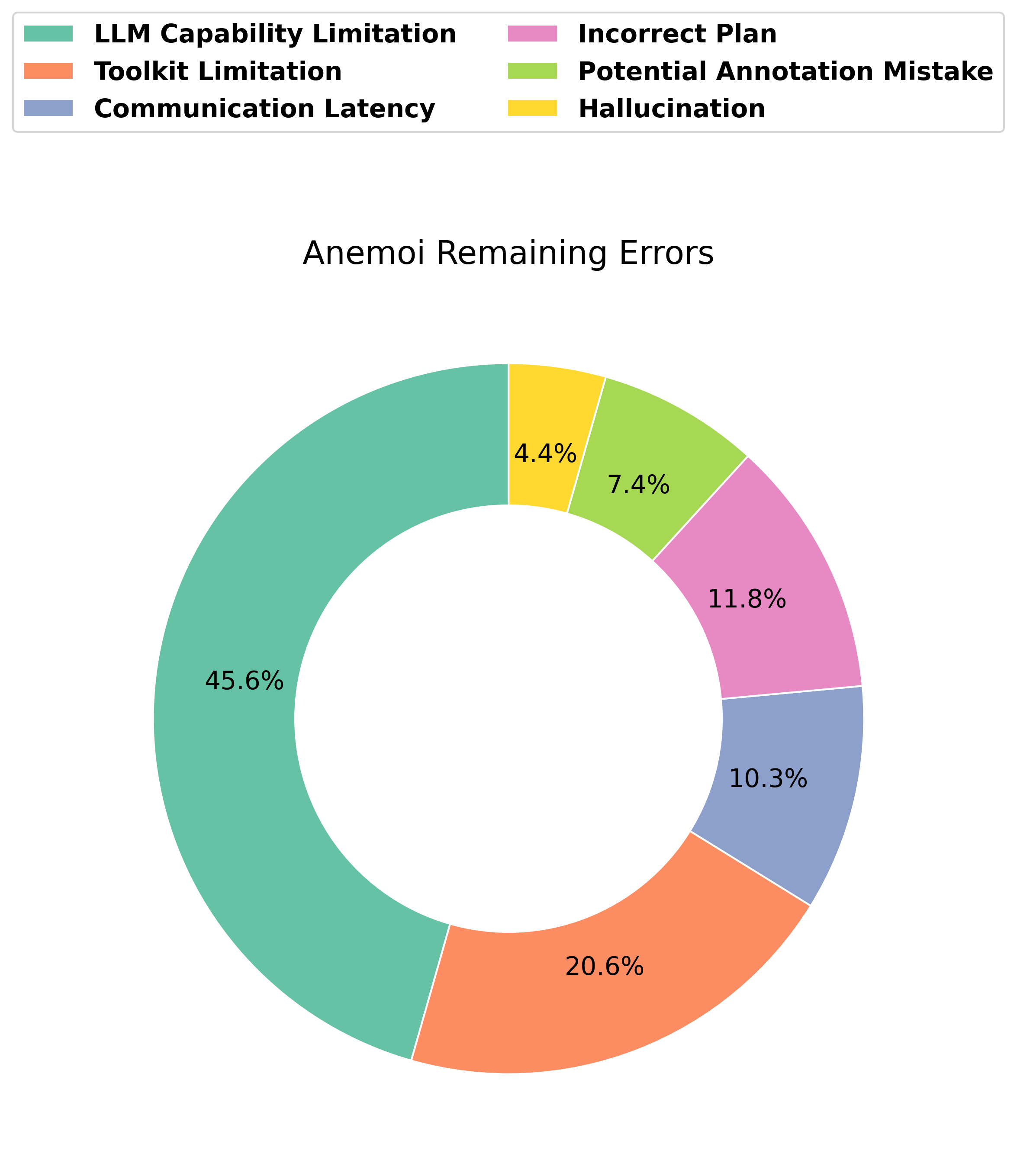

Error Analysis

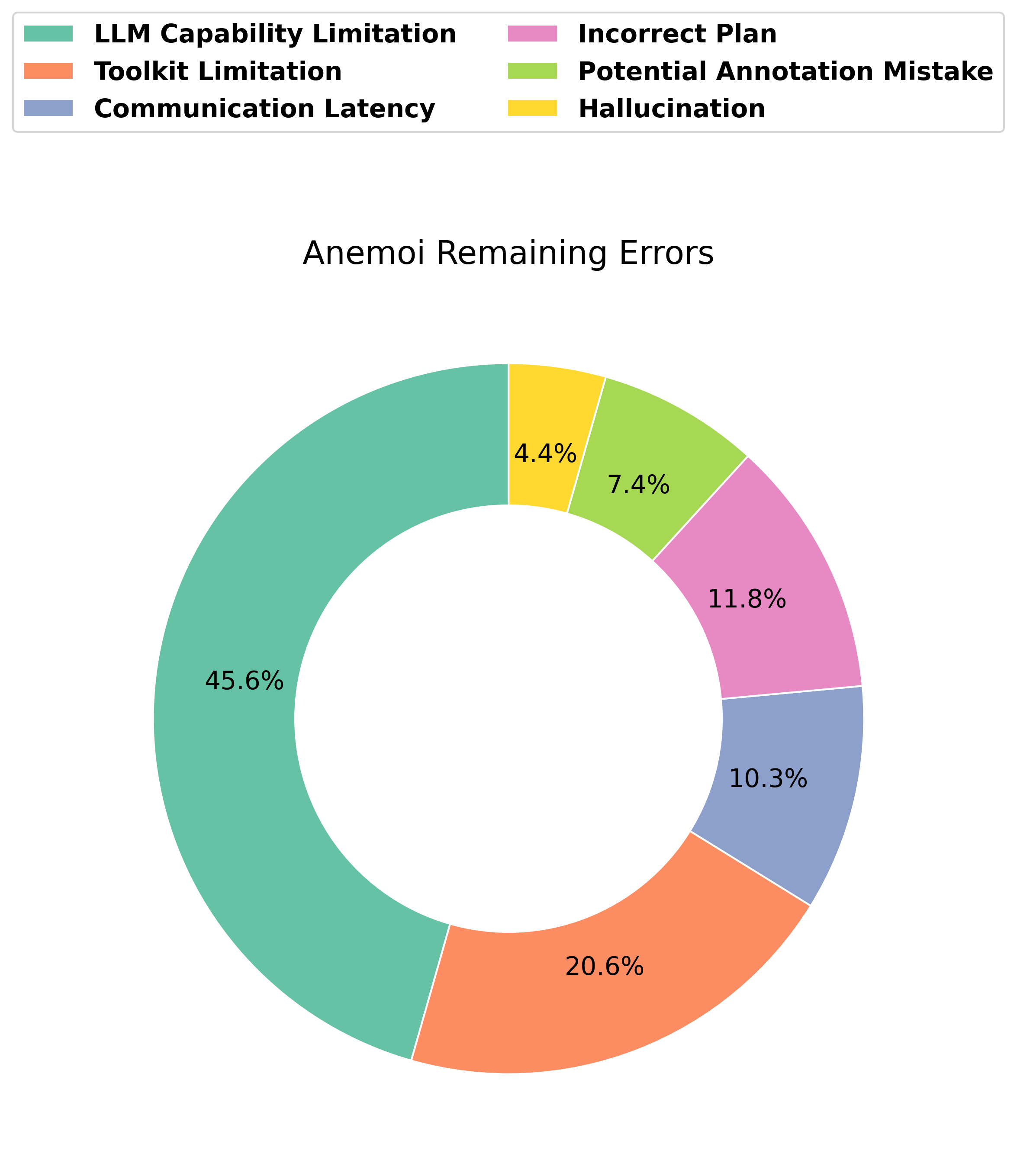

Anemoi's remaining errors were analyzed, with the largest fraction attributed to LLM capability limitations (45.6%), followed by toolkit limitations (20.6%), incorrect plans (11.8%), communication latency (10.3%), annotation mistakes (7.4%), and LLM hallucinations (4.4%). The error profile underscores the importance of further improving agent toolkits and LLM reliability, as well as optimizing communication latency in agent orchestration.

Figure 4: Remaining errors of Anemoi.

Implementation Considerations and Trade-offs

The Anemoi system demonstrates that semi-centralized coordination via A2A communication can sustain high performance even with weaker planner models, provided that worker agents are sufficiently capable. The thread-based MCP server architecture offers contextual isolation and efficient message routing, reducing token overhead and improving scalability. However, the system's performance is still bounded by the capabilities of the underlying LLMs and toolkits, and communication latency can impact task completion in time-sensitive scenarios.
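The token-overhead argument can be made concrete with a toy comparison between injecting the full history into one prompt and reading only the relevant thread. The messages, the thread names, and the whitespace word count used as a token proxy are all illustrative assumptions, not measurements from the paper.

```python
from typing import Dict, List


def approx_tokens(text: str) -> int:
    """Crude proxy: whitespace-separated words, not a real tokenizer."""
    return len(text.split())


def concatenated_prompt_cost(all_messages: List[str]) -> int:
    """Centralized style: the whole history is injected into a single prompt."""
    return sum(approx_tokens(message) for message in all_messages)


def thread_scoped_cost(threads: Dict[str, List[str]], thread_id: str) -> int:
    """Thread style: an agent reads only the messages of the relevant thread."""
    return sum(approx_tokens(message) for message in threads[thread_id])


# Hypothetical conversation split across two threads.
threads = {
    "web-search": ["find the release year", "the release year is 2019"],
    "document": ["parse the attached spreadsheet", "column B holds the totals"],
}
all_messages = [message for messages in threads.values() for message in messages]

full_cost = concatenated_prompt_cost(all_messages)
scoped_cost = thread_scoped_cost(threads, "web-search")
print(full_cost, scoped_cost)
```

In this toy case the thread-scoped read costs half of the concatenated prompt. The actual saving depends on how work is divided across threads and on the tokenizer in use.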

Resource requirements are moderate, as the MCP server can be deployed on standard cloud infrastructure, and agent orchestration scales linearly with the number of agents. The modular design facilitates integration of new agent types and toolkits, supporting extensibility for domain-specific applications.

Implications and Future Directions

The Anemoi framework advances the state of MAS by demonstrating that direct, structured inter-agent communication can overcome the bottlenecks of centralized planning and context engineering. The empirical results suggest that future MAS architectures should prioritize adaptive, consensus-driven coordination and minimize reliance on prompt concatenation. Further research should focus on enhancing agent toolkits, improving LLM reliability, and optimizing communication protocols to reduce latency.

Potential future developments include:

- Integration of more diverse agent types (e.g., multimodal reasoning, external API agents).

- Exploration of decentralized consensus mechanisms for fully distributed MAS.

- Application of Anemoi in real-world domains such as autonomous research, enterprise automation, and collaborative robotics.

Conclusion

Anemoi introduces a robust semi-centralized MAS architecture leveraging A2A communication via the MCP server, enabling scalable, adaptive, and cost-efficient agent coordination. The system achieves strong empirical performance on the GAIA benchmark, particularly under weaker planner models, and provides a blueprint for future MAS designs that emphasize direct inter-agent collaboration and dynamic plan refinement. The results highlight the practical and theoretical advantages of structured agent communication, marking a significant step toward scalable, generalist multi-agent AI systems.