- The paper introduces A_min, an algorithm that dynamically refines lower-bound estimates of output length to optimize LLM inference scheduling under adversarial output-length uncertainty.

- It rigorously compares the conservative A_max and adaptive A_min methods, demonstrating that A_min achieves a logarithmic competitive ratio even with wide prediction intervals.

- Numerical experiments validate that A_min approaches optimal latency and better utilizes GPU memory, enabling practical integration into large-scale LLM serving systems.

Adaptively Robust LLM Inference Optimization under Prediction Uncertainty

The paper addresses the operational challenge of scheduling LLM inference requests under output length uncertainty, a critical issue for minimizing latency and energy consumption in large-scale deployments. LLM inference consists of a prefill phase (processing the input prompt) and a decode phase (autoregressive token generation), and the output length is unknown when a request arrives. Because every generated token adds to the request's KV cache, this uncertainty directly affects both GPU KV cache memory usage and total processing time, which makes efficient scheduling nontrivial. The authors formalize the problem as an online, batch-based, resource-constrained scheduling task in which only an interval prediction $[\ell, u]$ of each output length is available, and the true output length $o_i$ is chosen adversarially within this interval.
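To make the memory and latency accounting concrete, here is a minimal sketch of a schedule checker. The modelling choices are assumptions for illustration, not necessarily the paper's exact formulation: time is discrete, every request has the same prompt size `prompt`, a request that starts after step `a` decodes one token per step, holds `prompt + k` KV cache entries during its k-th decode step, and completes at step `a + o_i`. The helper `check_schedule` is hypothetical; it raises if the KV cache budget `mem` is exceeded and otherwise returns the total latency (sum of completion times).

```python
def check_schedule(starts: list[int], out_lens: list[int], prompt: int, mem: int) -> int:
    """Hypothetical checker for a non-preemptive schedule (assumed memory model).

    starts[i]:   step after which request i begins decoding
    out_lens[i]: true output length o_i
    prompt:      prompt size, assumed equal for all requests
    mem:         KV cache budget M
    """
    horizon = max(a + o for a, o in zip(starts, out_lens))
    for t in range(1, horizon + 1):
        # Request i is in its k-th decode step at time t when k = t - a_i and 1 <= k <= o_i;
        # under the assumed model it then holds prompt + k KV cache entries.
        used = sum(prompt + (t - a) for a, o in zip(starts, out_lens) if 1 <= t - a <= o)
        if used > mem:
            raise ValueError(f"KV cache overflow at step {t}: {used} > {mem}")
    # Latency objective: sum of completion times a_i + o_i.
    return sum(a + o for a, o in zip(starts, out_lens))


print(check_schedule([0, 2], [2, 2], prompt=1, mem=4))  # the two requests run back to back
```

Starting both requests at step 0 instead raises an overflow at step 2 (3 + 3 > 4), which illustrates why a scheduler has to reason about output lengths it cannot observe in advance.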

Benchmark Algorithms and Competitive Analysis

Two primary algorithms are proposed and analyzed:

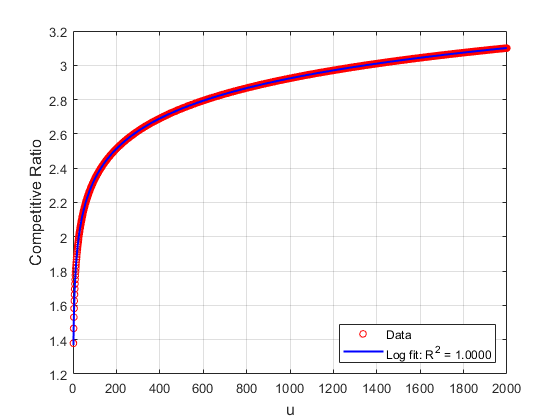

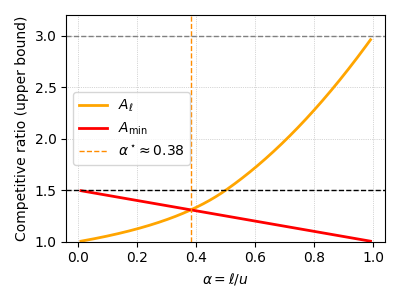

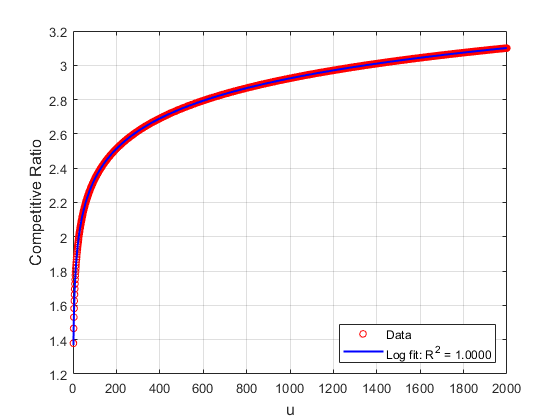

1. Conservative Max-Length Algorithm (A_max)

A_max schedules every request as if its output length were the worst-case value $u$. This guarantees that memory never overflows but often leaves much of the available memory unused. The competitive ratio of A_max is shown to be upper bounded by $\frac{\alpha^{-1}(1+\alpha^{-1})}{2}$ and lower bounded by $\frac{\alpha^{-1}(1+\alpha^{-1/2})}{2}$, where $\alpha = \ell/u$ quantifies prediction accuracy. As $\alpha \to 0$ (i.e., as the prediction intervals widen), the competitive ratio grows without bound: even the lower bound scales as $\alpha^{-3/2}$, indicating poor robustness.

Figure 1: Competitive ratio of A_max as a function of α, illustrating rapid degradation as prediction intervals widen.
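The two bounds on A_max are easy to evaluate numerically. The short script below uses a hypothetical helper, `a_max_ratio_bounds`, to tabulate them for a few values of α next to log(1/α), the growth rate of A_min's guarantee. Because the O(·) bound for A_min hides constants, the log column only contrasts growth rates; it is not A_min's actual ratio.

```python
import math


def a_max_ratio_bounds(alpha: float) -> tuple[float, float]:
    """Return (lower, upper) bounds on A_max's competitive ratio:
    alpha^-1 (1 + alpha^-1/2) / 2  <=  CR  <=  alpha^-1 (1 + alpha^-1) / 2."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha = l/u must lie in (0, 1]")
    inv = 1.0 / alpha
    lower = inv * (1 + math.sqrt(inv)) / 2
    upper = inv * (1 + inv) / 2
    return lower, upper


for alpha in (1.0, 0.5, 0.1, 0.01, 0.001):
    lo, hi = a_max_ratio_bounds(alpha)
    print(f"alpha={alpha:<6} lower={lo:>12.1f} upper={hi:>12.1f} "
          f"log(1/alpha)={math.log(1 / alpha):.2f}")
```

At α = 0.001 (for example ℓ = 1 and u = 1000, the rough-prediction interval used in the experiments), the upper bound is 500,500, while log(1/α) is only about 6.9.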

2. Adaptive Min-Length Algorithm (A_min)

A_min initializes each request's output length estimate with the lower bound $\ell$ and refines this estimate as tokens are generated. When memory overflow is imminent, jobs are evicted in ascending order of their accumulated lower bounds, and the lower bounds of the evicted jobs are updated to reflect the tokens already generated. The algorithm uses only the lower bound and never relies on the upper bound $u$, which is typically harder to predict accurately.

Theoretical analysis shows that A_min achieves a competitive ratio of $O(\log(\alpha^{-1}))$, a significant improvement over A_max, especially for wide prediction intervals. The bound is derived via a Rayleigh quotient involving the output length distribution, and the spectral radius of the associated matrix is shown to scale logarithmically with $u$ (for fixed $\ell$, $\log(\alpha^{-1}) = \log(u/\ell)$).
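The eviction-and-refinement loop described above can be sketched as a small discrete-time simulation. This is a minimal sketch under assumptions of our own, not the authors' implementation: all requests arrive at t = 0 and share one predicted lower bound `lower`, every request has the same prompt size, a job holds `prompt + k` KV entries while producing its k-th token, an evicted job loses its generated tokens, and waiting jobs are admitted smallest-estimate-first whenever a projection that assumes each job stops at its current estimate fits in memory. The admission rule and the names `Job`, `fits`, and `a_min_sketch` are illustrative.

```python
from dataclasses import dataclass

# Hypothetical sketch of an A_min-style policy; the memory model and the
# admission rule are assumptions made for illustration.


@dataclass
class Job:
    jid: int
    out_len: int   # true output length o_i; only observable when the job finishes
    est: int       # current lower-bound estimate, initialised to the predicted l
    done: int = 0  # tokens generated in the current run (lost on eviction)


def fits(jobs, prompt, mem):
    """Projection check: if every job stops exactly at its estimate,
    does KV cache usage stay within mem at every future step?"""
    horizon = max(j.est - j.done for j in jobs)
    for tau in range(1, horizon + 1):
        used = sum(prompt + j.done + tau for j in jobs if j.done + tau <= j.est)
        if used > mem:
            return False
    return True


def a_min_sketch(out_lens, lower, prompt, mem):
    """Simulate the policy and return total latency (sum of completion times)."""
    if lower < 1 or any(o < lower or prompt + o > mem for o in out_lens):
        raise ValueError("need 1 <= lower <= o_i and prompt + o_i <= mem for every job")
    waiting = [Job(i, o, lower) for i, o in enumerate(out_lens)]
    running, t, total = [], 0, 0
    while waiting or running:
        # Admission (assumed rule): smallest estimate first, admit if the projection fits.
        waiting.sort(key=lambda j: j.est)
        still_waiting = []
        for j in waiting:
            if fits(running + [j], prompt, mem):
                running.append(j)
            else:
                still_waiting.append(j)
        waiting = still_waiting
        # One decode step: every running job emits one token.
        t += 1
        for j in running:
            j.done += 1
        total += t * sum(1 for j in running if j.done == j.out_len)
        running = [j for j in running if j.done < j.out_len]
        # Refinement: a job still running after `done` tokens must have o_i > done.
        for j in running:
            j.est = max(j.est, j.done + 1)
        # Eviction: if the next step would overflow, evict in ascending order of the
        # accumulated lower bound (stable sort, so ties keep list order).
        running.sort(key=lambda j: j.est)
        while sum(prompt + j.done + 1 for j in running) > mem:
            victim = running.pop(0)
            victim.done = 0  # its KV cache is freed, so generation restarts later
            waiting.append(victim)
    return total


print(a_min_sketch([2, 2], lower=1, prompt=1, mem=4))
```

In this toy run both jobs are admitted because each is initially assumed to need a single token. Both outgrow that estimate after the first step, so the first job in list order (the estimates are tied) is evicted and rerun after the other finishes, giving a total latency of 6. Each eviction is preceded by a refinement, so the lower bounds only ever increase toward the true lengths.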

Extensions: Distributional Analysis and Algorithm Selection

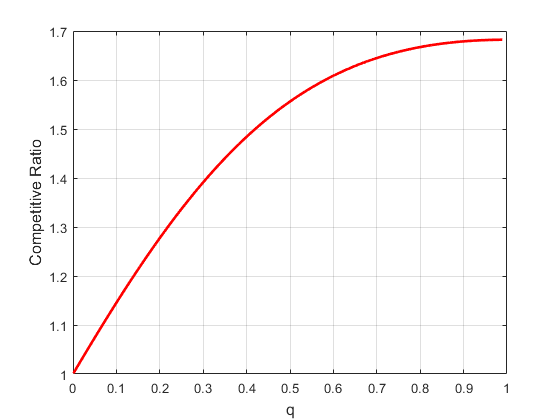

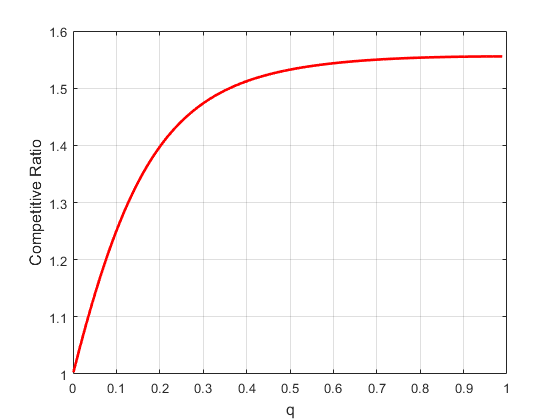

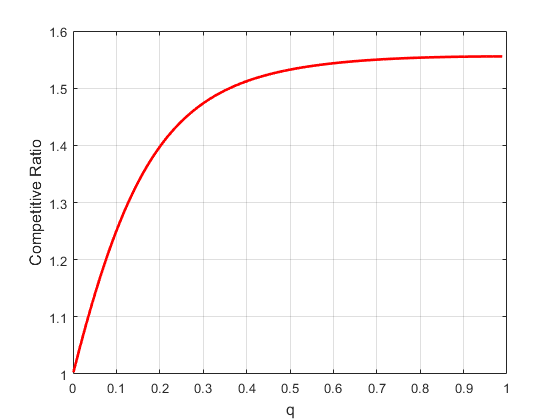

The paper further analyzes A_min under specific output length distributions, including the geometric distribution G(p) and the linearly weighted geometric distribution LG(p).

Figure 3: Competitive ratio as a function of parameter q: (Left) geometric distribution G(p); (Right) linearly weighted geometric distribution LG(p).

This distributional analysis enables adaptive algorithm selection based on empirical workload characteristics, further improving robustness.

Numerical Experiments

Experiments on the LMSYS-Chat-1M dataset validate the theoretical findings. Three prediction scenarios are considered:

- Rough Prediction ([1,1000]): Every request receives the same interval [1, 1000]. A_max performs poorly because of its extreme conservatism, while A_min matches the hindsight-optimal scheduler.

- Non-Overlapping Classification: Each request is assigned to one of several non-overlapping length intervals. As these intervals become more accurate, both algorithms improve, but A_min consistently approaches optimal latency.

- Overlapping Interval Prediction: With individualized intervals, A_min maintains low latency even as prediction accuracy degrades, whereas A_max deteriorates rapidly.

These results confirm the robustness and adaptiveness of A_min across a range of practical settings.
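The three scenarios differ only in how each request's interval $[\ell_i, u_i]$ is produced. The exact construction is not given here, so the helpers below are hypothetical: the bin edges and the relative width `x` are illustrative parameters, and both `class_interval` and `individual_interval` use the true length `o` as a stand-in for an ideal predictor. The `alpha` helper computes the per-request accuracy α = ℓ/u.

```python
import math


def rough_interval(max_len: int = 1000) -> tuple[int, int]:
    """Scenario 1: every request receives the same wide interval [1, max_len]."""
    return (1, max_len)


def class_interval(o: int, bins: list[tuple[int, int]]) -> tuple[int, int]:
    """Scenario 2 (hypothetical bins): the non-overlapping class that contains o."""
    for lo, hi in bins:
        if lo <= o <= hi:
            return (lo, hi)
    raise ValueError(f"output length {o} is not covered by any bin")


def individual_interval(o: int, x: float) -> tuple[int, int]:
    """Scenario 3 (hypothetical): a per-request interval of relative width x around o.
    Intervals of different requests may overlap; a larger x means lower accuracy."""
    return (max(1, math.floor((1 - x) * o)), math.ceil((1 + x) * o))


def alpha(interval: tuple[int, int]) -> float:
    """Prediction accuracy alpha = l / u for a single interval."""
    lo, hi = interval
    return lo / hi


bins = [(1, 100), (101, 1000)]  # illustrative classes, not the experimental setup
for o in (42, 300):
    print(o, rough_interval(), class_interval(o, bins), individual_interval(o, 0.5))
```

Narrowing the bins or shrinking `x` raises α toward 1, which is the direction in which both algorithms improve in the experiments above.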

Implementation Considerations

- Computational Complexity: A_min runs in $O(M \log M)$ time per time step, where $M$ is the KV cache size.

- Memory Management: The algorithm is compatible with non-preemptive, batch-based LLM serving architectures and can be integrated with existing inference pipelines.

- Prediction Model Integration: Only lower bound predictions are required, which can be efficiently generated via lightweight ML models or heuristics.

- Deployment: The adaptive eviction and batch formation logic can be implemented as a scheduling layer atop standard LLM inference servers, with minimal modification to the underlying model code.

Theoretical and Practical Implications

The paper establishes that robust, adaptive scheduling can dramatically improve LLM inference efficiency under prediction uncertainty, with provable guarantees. The logarithmic competitive ratio of A_min is a strong result, especially given the adversarial setting. The distributional analysis and adaptive algorithm selection framework provide a pathway for further improvements in real-world systems, where output length distributions are often non-uniform and can be empirically estimated.

The memory-preserving combinatorial proof technique introduced for analyzing latency scaling under memory constraints is broadly applicable to other resource-constrained scheduling problems in AI systems.

Future Directions

Potential avenues for future research include:

- Extending the framework to heterogeneous prompt sizes and multi-worker settings.

- Incorporating multi-metric objectives (e.g., throughput, energy, SLO compliance).

- Leveraging richer prediction models (e.g., quantile regression, uncertainty-aware neural predictors).

- Exploring online learning approaches for dynamic adaptation to changing workload distributions.

Conclusion

This work rigorously advances the theory and practice of LLM inference scheduling under output length uncertainty. The adaptive algorithm A_min achieves robust, near-optimal latency across a wide range of prediction qualities and output distributions while requiring only lower-bound estimates. The results have direct implications for the design of scalable, efficient LLM serving systems, and the analytical techniques developed herein are relevant to a broad class of online scheduling and resource allocation problems in AI operations.