- The paper presents a counterbalanced user study revealing that directive prompts correlate with higher satisfaction when using codebase assistants.

- It identifies key challenges including missing functionality, transparency issues, and difficulties with complex coding tasks.

- The study proposes design improvements such as dynamic context utilization and real-time auditing to enhance developer control.

Exploring the Challenges and Opportunities of AI-assisted Codebase Generation

Introduction

Codebase-level assistants (CBAs), which build on the capabilities of large language models (LLMs), mark a significant shift in AI-powered programming tools. Unlike snippet-level generation tools, CBAs generate entire codebases from natural language inputs, covering far more of the software development process. However, their adoption remains limited compared with snippet-level assistants, primarily because of unresolved usability issues and difficulty integrating them into existing developer workflows.

This paper examines these gaps by analyzing interaction patterns and satisfaction levels among developers using CBAs. It explores participants' experiences during a study involving coding tasks with popular CBAs such as GitHub Copilot and GPT-Engineer.

Methodology

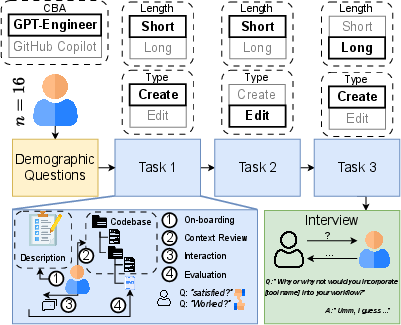

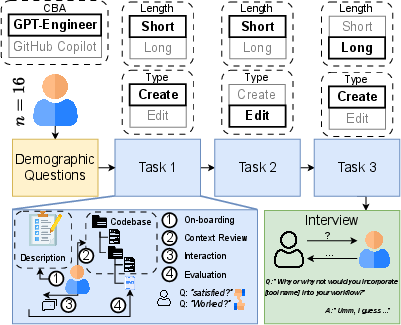

A counterbalanced user study with 16 participants, comprising students and professional developers, was conducted to explore how they interact with CBAs. The study used tasks of varying length and complexity to gather insights into usability, problem-solving approaches, and satisfaction with CBAs. Post-task interviews provided qualitative data to complement the observations.

Figure 1: An overview of our methodology. Each participant was assigned to a CBA and completed three coding tasks.
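
To make the idea of counterbalancing concrete, the sketch below rotates task orders across participants so that no task always appears first. It is only an illustration: the summary does not describe the study's actual assignment scheme, so the cyclic rotation, the alternating assistant assignment, the participant IDs, and the task names (`task_A`, `task_B`, `task_C`) are all assumptions.

```python
def counterbalance(participants, tasks, assistants):
    """Assign each participant one assistant and a rotated task order.

    Hypothetical scheme: assistants alternate between participants, and
    task orders follow a cyclic Latin square (each row is the task list
    rotated left by one more position).
    """
    tasks = list(tasks)
    n = len(tasks)
    orders = [tasks[i:] + tasks[:i] for i in range(n)]
    plan = {}
    for idx, pid in enumerate(participants):
        plan[pid] = {
            "assistant": assistants[idx % len(assistants)],
            # Advance to the next order after every assistant has used this one.
            "task_order": orders[(idx // len(assistants)) % n],
        }
    return plan


# Placeholder inputs: 16 participants, three tasks, two assistants.
participants = [f"P{i}" for i in range(1, 17)]
tasks = ["task_A", "task_B", "task_C"]
assistants = ["GitHub Copilot", "GPT-Engineer"]
plan = counterbalance(participants, tasks, assistants)
```

With 16 participants and three orders the groups cannot be perfectly equal, so a real design would need to decide how to handle the remainder.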

User Interaction and Prompting Patterns

Prompting Styles and Content

Participants' prompts centered mainly on functional requirements, but varied widely in how much detail they included and in how they described interaction flow. A notable finding was the positive correlation between directive prompt styles and user satisfaction, suggesting that CBAs currently work best with unambiguous, command-like instructions.

Satisfaction Metrics

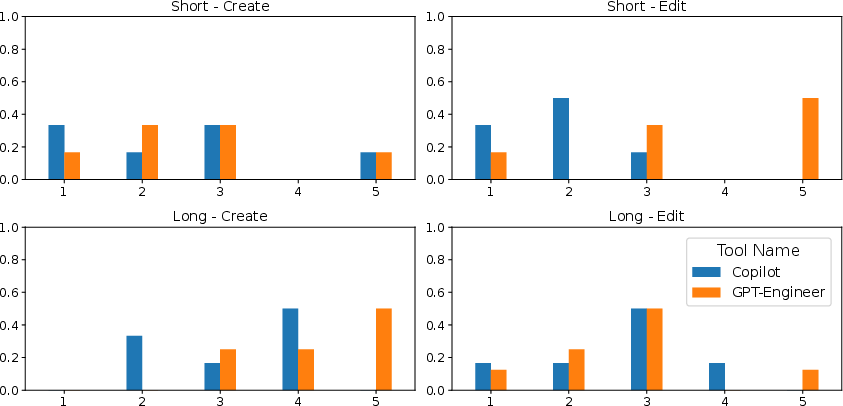

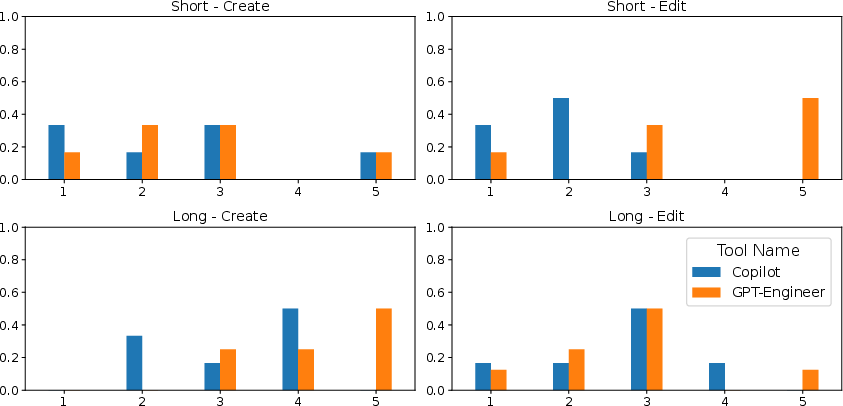

The study revealed a moderate level of satisfaction with CBA-generated code, with variation across task types and lengths. Unmet functional requirements and code that could not be fully executed were common sources of dissatisfaction, pointing to a gap between CBA output and user expectations.

Figure 2: Distribution of satisfaction scores by task type, task length, and CBA. The X-axis represents satisfaction scores; the Y-axis shows the fraction of participants assigning each score.
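
The Y-axis quantity in Figure 2, the fraction of participants assigning each score, can be computed as in the minimal sketch below. The function name `score_fractions` and the example scores are made up for illustration; they are not data from the study, and the summary does not state the rating scale used.

```python
from collections import Counter


def score_fractions(scores):
    """Return the fraction of ratings at each score, keyed by score."""
    counts = Counter(scores)
    total = len(scores)
    return {score: counts[score] / total for score in sorted(counts)}


# Placeholder ratings, not results from the study.
example_scores = [1, 2, 2, 4]
fractions = score_fractions(example_scores)
```

Applying the same function separately to each task type, task length, or CBA would produce one distribution per panel.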

Challenges in Using CBAs

Participants encountered missing functionality, inadequate communication from the assistant, and disregard for the context they had provided, all of which impeded integration into their workflows. A key concern was the lack of transparency in how CBAs make decisions, which often led to unexpected or incorrect output.

Barriers to Adoption

Usability and Adoption Barriers

Participants identified significant barriers to CBA adoption: limited capability on complex tasks, the added effort of managing interactions, and legal or privacy concerns about generated code. They also highlighted the unpredictability of AI behavior as a critical usability challenge, one that stems from unclear prompts and results in inconsistent outputs.

Capabilities and Design Opportunities

Current CBA tools vary in what they offer, but most fall short of the developer needs identified in the study. CBAs could improve by offering richer prompting guidance, supporting a collaborative design process, strengthening output verification, and proactively incorporating features.

Design Recommendations

The paper proposes refining CBAs through dynamic context utilization, hierarchical code construction, and real-time auditing frameworks to improve alignment with user needs. CBAs should also strengthen user control with features that preserve users' agency over code modifications, much as in a collaborative programming environment.

Conclusion

This exploration highlights the opportunities for advancing CBA capabilities to better align with developers' needs. By addressing identified gaps in usability, transparency, and control, CBAs can potentially transform into reliable, user-centric tools that seamlessly integrate into professional programming environments, thereby enhancing developer productivity and satisfaction.