Beyond Accuracy: How AI Metacognitive Sensitivity improves AI-assisted Decision Making

Abstract: In settings where human decision-making relies on AI input, both the predictive accuracy of the AI system and the reliability of its confidence estimates influence decision quality. We highlight the role of AI metacognitive sensitivity -- its ability to assign confidence scores that accurately distinguish correct from incorrect predictions -- and introduce a theoretical framework for assessing the joint impact of AI's predictive accuracy and metacognitive sensitivity in hybrid decision-making settings. Our analysis identifies conditions under which an AI with lower predictive accuracy but higher metacognitive sensitivity can enhance the overall accuracy of human decision making. Finally, a behavioral experiment confirms that greater AI metacognitive sensitivity improves human decision performance. Together, these findings underscore the importance of evaluating AI assistance not only by accuracy but also by metacognitive sensitivity, and of optimizing both to achieve superior decision outcomes.


Explain it Like I'm 14

What is this paper about?

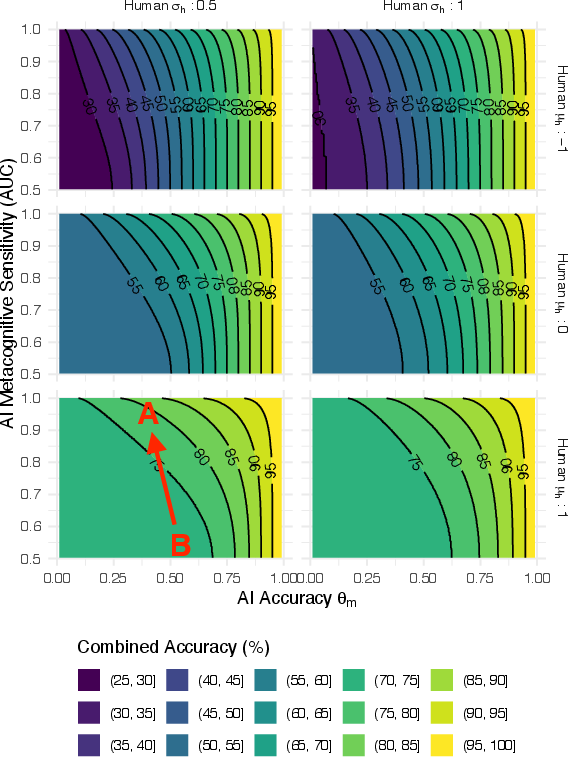

This paper looks at how people can make better decisions when they get help from AI. It doesn’t just ask “How often is the AI right?” It also asks “How well does the AI know when it’s right or wrong?” That second part is called the AI’s metacognitive sensitivity: basically, how good the AI is at giving high confidence when it’s correct and low confidence when it’s wrong.

What questions are the researchers trying to answer?

The paper focuses on three simple questions:

- When people use AI advice, does it help more if the AI is very accurate, or if it’s very good at judging its own confidence?

- Can an AI that’s a little less accurate still lead to better teamwork with a human if it’s better at signaling when to trust it?

- Do these ideas hold up not just in theory but also in real human experiments?

How did they study this?

The big idea (theory)

Imagine you have a friend who gives you answers and also tells you how sure they are. Two things matter:

- Accuracy: How often your friend is right.

- Metacognitive sensitivity: How well your friend’s “I’m sure/I’m not sure” matches reality. A sensitive friend is very confident when right and cautious when wrong.

The researchers built a mathematical model (using a tool called signal detection theory) to describe this. They treated the AI’s confidence like two overlapping “hills”:

- One hill for confidence when the AI is correct

- One hill for confidence when the AI is incorrect

If the hills are far apart and barely overlap, the AI has high metacognitive sensitivity: its confidence tells you which of its answers to trust. Their model shows how a human should switch between “go with my own answer” and “follow the AI” based on the AI’s confidence. If the AI sounds very sure, follow it; if it sounds unsure, stick with your own judgment.
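To make the two hills concrete, here is a small sketch. It assumes (an illustrative choice, not necessarily the paper’s exact setup) that the AI’s confidence follows a bell curve centered at d when the AI is right and at 0 when it is wrong, so d is the gap between the hills. The helper names `p_ai_correct` and `should_follow` are hypothetical. Bayes’ rule turns a confidence score into the chance the AI is right, and the switching rule compares that chance with how often the human is right alone.

```python
import math


def p_ai_correct(conf: float, ai_acc: float, d: float) -> float:
    """Probability that the AI's answer is right, given the confidence it reported.

    Assumed two-hill model (equal-variance Gaussians, a common signal detection
    choice): confidence ~ Normal(d, 1) when the AI is correct and Normal(0, 1)
    when it is wrong. d is the gap between the hills (metacognitive sensitivity).
    """
    # Ratio of the two hill heights at this confidence: N(conf; d, 1) / N(conf; 0, 1).
    likelihood_ratio = math.exp(d * conf - d * d / 2)
    prior_odds = ai_acc / (1 - ai_acc)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


def should_follow(conf: float, ai_acc: float, d: float, human_acc: float) -> bool:
    """Hypothetical rule: switch to the AI only if it is likelier to be right than you are."""
    return p_ai_correct(conf, ai_acc, d) > human_acc


# Same 66% accuracy, two different gaps between the hills.
for d in (0.5, 3.0):
    probs = [round(p_ai_correct(c, ai_acc=0.66, d=d), 2) for c in (0.0, 1.5, 3.0)]
    print(f"gap d={d}: P(AI correct) at confidence 0, 1.5, 3 -> {probs}")
```

With the small gap the probabilities move only modestly and all stay above one half, so the confidence score says little. With the large gap they swing from near 0 to near 1, which is what makes the “follow it or not” decision easy.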

Key idea: Even if an AI isn’t the most accurate, it can still boost the human’s final accuracy if its confidence signals are reliable. The model spells out the conditions under which a less accurate but more sensitive AI can beat a more accurate but less sensitive one.
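This “inversion” can be reproduced in a toy version of the model. The sketch below is not the paper’s analysis: it assumes a two-choice task, human and AI mistakes that are independent, a human who is right 60% of the time alone, and the Gaussian two-hill confidence model, then searches for the best “follow the AI above this confidence” threshold. The accuracies 66% and 55% echo the experiment’s assistants, but the gaps d are made-up values and all function names are hypothetical.

```python
import math


def phi_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


def team_accuracy(ai_acc: float, d: float, human_acc: float, t: float) -> float:
    """Accuracy of a human who follows the AI only when its confidence exceeds t.

    Assumptions (hypothetical, for illustration): a two-choice task, independent
    human and AI errors, AI confidence ~ Normal(d, 1) when correct, Normal(0, 1) when wrong.
    """
    follow_if_right = 1 - phi_cdf(t - d)  # P(confidence > t | AI correct)
    follow_if_wrong = 1 - phi_cdf(t)      # P(confidence > t | AI wrong)
    p_follow_and_right = ai_acc * follow_if_right
    p_keep_own = ai_acc * (1 - follow_if_right) + (1 - ai_acc) * (1 - follow_if_wrong)
    # When the human keeps their own answer, it is right with probability human_acc.
    return p_follow_and_right + p_keep_own * human_acc


def best_team_accuracy(ai_acc: float, d: float, human_acc: float) -> float:
    """Try thresholds from -5 to 5 in steps of 0.01 and keep the best result."""
    return max(team_accuracy(ai_acc, d, human_acc, k / 100) for k in range(-500, 501))


human = 0.60  # assumed accuracy of the human working alone
accurate_but_vague = best_team_accuracy(ai_acc=0.66, d=0.5, human_acc=human)
less_accurate_but_sensitive = best_team_accuracy(ai_acc=0.55, d=3.0, human_acc=human)
print(f"66% AI, gap 0.5 -> team accuracy {accurate_but_vague:.3f}")
print(f"55% AI, gap 3.0 -> team accuracy {less_accurate_but_sensitive:.3f}")
```

Under these assumed numbers the 55% assistant leads to the higher team accuracy (roughly 0.79 versus 0.68), because its confidence tells the human exactly when to switch.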

The experiment (real people)

The team ran an online study with 110 participants. In each round, people watched a short animation with colored dots and guessed which color had the most dots. Then they saw an AI’s answer plus the AI’s confidence. They could change their answer after seeing the AI’s advice.

Participants were paired with different AI assistants:

- Four AIs had the same accuracy (66%) but different metacognitive sensitivity (from low to very high).

- One AI had lower accuracy (55%) but extremely high metacognitive sensitivity.

This setup tested whether “being better at confidence” could beat “being more accurate” when helping a human.
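One way to build assistants like these in a simulation is to fix how often the AI is right and vary only the gap between its two confidence hills. The sketch below assumes the Gaussian two-hill picture from the theory section; it is not how the study generated its AI advice, and the gap values and function names are hypothetical. It measures sensitivity with the type-2 AUROC (the chance that a randomly picked correct answer got higher confidence than a randomly picked wrong one), a standard measure that may differ from the one the paper uses.

```python
import random


def simulate_assistant(acc: float, d: float, n: int, seed: int = 0) -> list[tuple[bool, float]]:
    """Draw (is_correct, confidence) pairs for a hypothetical AI assistant.

    Accuracy is fixed by acc; sensitivity is set by d, the gap between the
    confidence distributions on correct (Normal(d, 1)) and wrong (Normal(0, 1)) trials.
    """
    rng = random.Random(seed)
    trials = []
    for _ in range(n):
        correct = rng.random() < acc
        conf = rng.gauss(d if correct else 0.0, 1.0)
        trials.append((correct, conf))
    return trials


def type2_auroc(trials: list[tuple[bool, float]]) -> float:
    """P(confidence on a correct trial > confidence on a wrong trial).

    Uses the rank-sum (Mann-Whitney U) formula; ties are ignored because
    continuous confidences almost never tie.
    """
    ranked = sorted(trials, key=lambda trial: trial[1])
    n_right = sum(1 for correct, _ in trials if correct)
    n_wrong = len(trials) - n_right
    rank_sum = sum(rank for rank, (correct, _) in enumerate(ranked, start=1) if correct)
    u = rank_sum - n_right * (n_right + 1) / 2
    return u / (n_right * n_wrong)


for d in (0.5, 1.0, 2.0, 3.0):
    trials = simulate_assistant(acc=0.66, d=d, n=20_000)
    accuracy = sum(correct for correct, _ in trials) / len(trials)
    print(f"gap d={d}: accuracy {accuracy:.3f}, type-2 AUROC {type2_auroc(trials):.3f}")
```

Accuracy stays near 66% for every assistant while the AUROC climbs toward 1 as the gap grows, which is the kind of “same accuracy, different sensitivity” contrast the four matched assistants were meant to create.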

What did they find, and why does it matter?

Here are the main findings:

- When AI accuracy was held the same, higher metacognitive sensitivity led to better human-AI results. In other words, people did better when the AI was good at signaling when it was likely right vs. wrong.

- “Complementarity” increased with sensitivity. Complementarity means the human-AI team did better together than either could alone. The more sensitive the AI, the more often and the more strongly this happened.

- The “inversion” effect showed up: An AI with lower accuracy but very high metacognitive sensitivity helped people more than some higher-accuracy AIs that were worse at judging their own confidence.
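Complementarity can be written as a single number: team accuracy minus the better of the human alone and the AI alone. The sketch below computes it in the same toy model, assuming a 60% human, a 66% AI, independent mistakes, and Gaussian confidence hills with made-up gaps. Instead of searching, it uses the threshold where the chance the AI is right equals the human’s own accuracy, which follows from Bayes’ rule under these assumptions. Function names are hypothetical.

```python
import math


def phi_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


def optimal_threshold(ai_acc: float, d: float, human_acc: float) -> float:
    """Confidence above which P(AI correct | confidence) exceeds human_acc.

    Solves ai_acc * (1 - human_acc) * exp(d*t - d^2/2) = (1 - ai_acc) * human_acc for t.
    """
    ratio = ((1 - ai_acc) * human_acc) / (ai_acc * (1 - human_acc))
    return (math.log(ratio) + d * d / 2) / d


def team_accuracy(ai_acc: float, d: float, human_acc: float) -> float:
    """Team accuracy when the human follows the AI above the optimal threshold."""
    t = optimal_threshold(ai_acc, d, human_acc)
    follow_if_right = 1 - phi_cdf(t - d)  # P(confidence > t | AI correct)
    follow_if_wrong = 1 - phi_cdf(t)      # P(confidence > t | AI wrong)
    keep_own = ai_acc * (1 - follow_if_right) + (1 - ai_acc) * (1 - follow_if_wrong)
    return ai_acc * follow_if_right + keep_own * human_acc


def complementarity(ai_acc: float, d: float, human_acc: float) -> float:
    """How much the team beats the better of the two working alone."""
    return team_accuracy(ai_acc, d, human_acc) - max(ai_acc, human_acc)


for d in (0.5, 1.0, 2.0, 3.0):
    print(f"gap d={d}: complementarity {complementarity(0.66, d, 0.60):+.3f}")
```

In this toy setting complementarity is positive for every gap and grows as the hills move apart, matching the direction of the finding above.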

Why this matters: In real life, people don’t just need a right answer; they need to know when to trust the AI and when to rely on themselves. Clear, honest confidence signals make that possible.
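“Knowing when to trust the AI” can be split into two kinds of mistakes: following the AI when it is wrong (over-reliance) and ignoring it when it is right (under-reliance). The sketch below computes both rates for a human who uses the best confidence threshold, under the same illustrative assumptions as before (60% human, 66% AI, Gaussian confidence hills with made-up gaps). These definitions and the function name are choices made for this sketch, not necessarily the paper’s.

```python
import math


def phi_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


def reliance_errors(ai_acc: float, d: float, human_acc: float) -> tuple[float, float]:
    """Return (over-reliance, under-reliance) for a human using the best threshold.

    Over-reliance: P(follow the AI | AI is wrong).
    Under-reliance: P(ignore the AI | AI is right).
    Assumes confidence ~ Normal(d, 1) if the AI is right and Normal(0, 1) if wrong.
    """
    ratio = ((1 - ai_acc) * human_acc) / (ai_acc * (1 - human_acc))
    t = (math.log(ratio) + d * d / 2) / d  # follow the AI when confidence > t
    over = 1 - phi_cdf(t)   # wrong answers whose confidence still clears t
    under = phi_cdf(t - d)  # right answers whose confidence falls below t
    return over, under


for d in (0.5, 3.0):
    over, under = reliance_errors(ai_acc=0.66, d=d, human_acc=0.60)
    print(f"gap d={d}: over-reliance {over:.1%}, under-reliance {under:.1%}")
```

With the small gap, even a careful human ends up following most of the AI’s wrong answers; with the large gap, both kinds of mistake become rare.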

What does this mean for the future?

- Don’t judge AI only by accuracy. Also measure how well it knows when it’s right or wrong (metacognitive sensitivity). This can make human-AI teams more effective.

- In systems where speed or cost matters, a highly sensitive AI can act like a “smart router.” If it’s confident, use its answer; if it’s unsure, escalate to a stronger or slower tool, or ask another expert. This saves time while keeping quality high.

- Better sensitivity can reduce over-reliance and under-reliance on AI. If an AI honestly signals uncertainty, people know when to question it—and when to trust it.

- For LLMs used in everyday advice (legal, medical, financial), improving metacognitive sensitivity could make them safer and more dependable, especially when their confidence is communicated clearly.
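The “smart router” idea from the list above can be sketched with the same two-hill model. Everything here is hypothetical: a fast model that is right 70% of the time, a stronger tool that is right 90% of the time and is assumed to make mistakes unrelated to the fast model’s, and a fixed escalation threshold. The sketch reports overall accuracy and how often items get escalated.

```python
import math


def phi_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


def route_stats(fast_acc: float, d: float, strong_acc: float, t: float) -> tuple[float, float]:
    """Return (overall accuracy, escalation rate) for a hypothetical confidence router.

    The fast model answers; if its confidence is below t the item is escalated to a
    stronger tool assumed to be right with probability strong_acc regardless of what
    the fast model did. Fast-model confidence ~ Normal(d, 1) if right, Normal(0, 1) if wrong.
    """
    keep_if_right = 1 - phi_cdf(t - d)  # confident and correct: answer kept
    keep_if_wrong = 1 - phi_cdf(t)      # confident but wrong: error slips through
    escalated = fast_acc * (1 - keep_if_right) + (1 - fast_acc) * (1 - keep_if_wrong)
    accuracy = fast_acc * keep_if_right + escalated * strong_acc
    return accuracy, escalated


for d in (0.5, 3.0):
    acc, esc = route_stats(fast_acc=0.70, d=d, strong_acc=0.90, t=1.5)
    print(f"gap d={d}: accuracy {acc:.3f}, escalated {esc:.1%}")
```

With these made-up numbers the sensitive fast model escalates about a third of the items and ends up more accurate than the less sensitive one, which escalates most of them. The assumption that the stronger tool’s mistakes are unrelated to the fast model’s is optimistic, so a real system would need to check it.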

In short, the paper shows that to get the best results from human-AI collaboration, we should build and choose AIs that are not just accurate, but also good at telling us how confident they are—and make sure that confidence really separates their correct answers from their mistakes.
