SafeWork-R1: Coevolving Safety and Intelligence under the AI-45$^{\circ}$ Law (2507.18576v2)

Abstract: We introduce SafeWork-R1, a cutting-edge multimodal reasoning model that demonstrates the coevolution of capabilities and safety. It is developed with our proposed SafeLadder framework, which incorporates large-scale, progressive, safety-oriented reinforcement learning post-training, supported by a suite of multi-principled verifiers. Unlike previous alignment methods such as RLHF that simply learn human preferences, SafeLadder enables SafeWork-R1 to develop intrinsic safety reasoning and self-reflection abilities, giving rise to safety "aha" moments. Notably, SafeWork-R1 achieves an average improvement of $46.54\%$ over its base model Qwen2.5-VL-72B on safety-related benchmarks without compromising general capabilities, and delivers state-of-the-art safety performance compared to leading proprietary models such as GPT-4.1 and Claude Opus 4. To further bolster its reliability, we implement two distinct inference-time intervention methods and a deliberative search mechanism, enforcing step-level verification. Finally, we also develop SafeWork-R1-InternVL3-78B, SafeWork-R1-DeepSeek-70B, and SafeWork-R1-Qwen2.5VL-7B. All resulting models demonstrate that safety and capability can co-evolve synergistically, highlighting the generalizability of our framework in building robust, reliable, and trustworthy general-purpose AI.

Summary

- The paper introduces SafeWork-R1, a multimodal reasoning model coevolving AI safety and intelligence using progressive RL and multi-principled verifiers.

- It trains the model with the SafeLadder framework (CoT-SFT, M³-RL, Safe-and-Efficient RL, and Deliberative Search RL) and adds inference-time interventions to enhance reasoning depth and reliability.

- Experimental results show substantial gains on safety benchmarks alongside improved general capabilities, with safety performance surpassing leading proprietary models.

SafeWork-R1: Coevolving Safety and Intelligence under the AI-45° Law

This paper introduces SafeWork-R1, a multimodal reasoning model developed using the SafeLadder framework, which aims to coevolve AI capabilities and safety in accordance with the AI-45° Law. The SafeLadder framework employs progressive, safety-oriented reinforcement learning (RL) post-training, guided by multi-principled verifiers. The resulting SafeWork-R1 model achieves an average improvement of 46.54% over its base model, Qwen2.5-VL-72B, on safety-related benchmarks and state-of-the-art safety performance relative to leading proprietary models such as GPT-4.1 and Claude Opus 4, without compromising general capabilities. The paper also introduces two inference-time intervention methods and a deliberative search mechanism to enhance reliability.

Key Components of the SafeLadder Framework

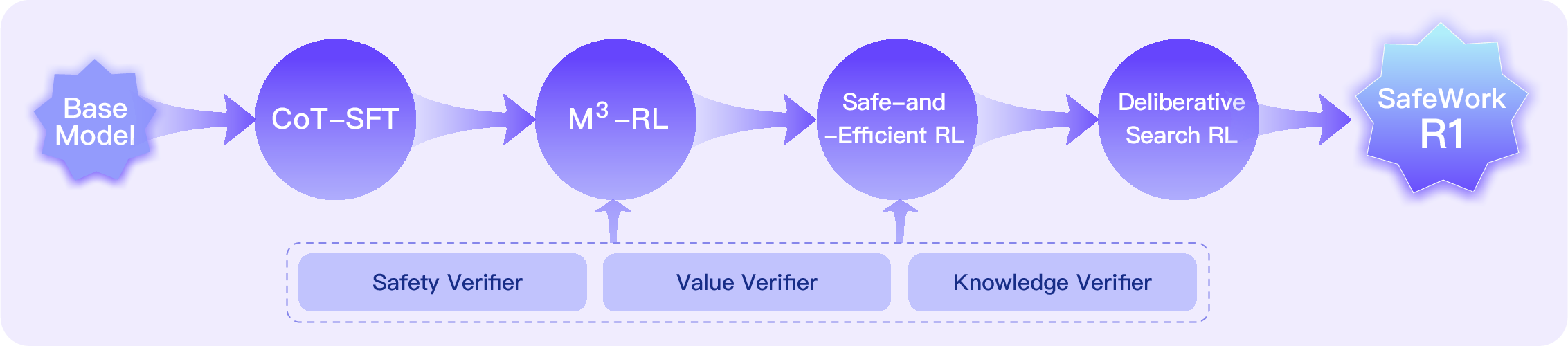

The SafeLadder framework, illustrated in Figure 1, consists of four key stages:

- CoT-SFT: Chain-of-Thought Supervised Fine-Tuning equips the model with long-chain reasoning capabilities.

- M³-RL: A multimodal, multitask, and multiobjective RL framework progressively aligns safety, value, knowledge, and general capabilities.

- Safe-and-Efficient RL: Refines the model's reasoning depth to promote efficient safety reasoning.

- Deliberative Search RL: Enables the model to leverage external sources for reliable answers while filtering out noise.

Figure 1: The roadmap of SafeLadder.

These stages are supported by a suite of neural-based and rule-based verifiers, and the entire framework is implemented on a scalable infrastructure called SafeWork-T1.

Verifier Construction

The framework relies on three verifiers:

- Safety Verifier: Provides precise, bilingual safety judgments on text-only and image-text inputs, assigning safety scores to final outputs.

- Value Verifier: Assesses whether a model's output aligns with desired value standards, trained on a self-constructed dataset of over 80K samples spanning more than 70 value-related scenarios.

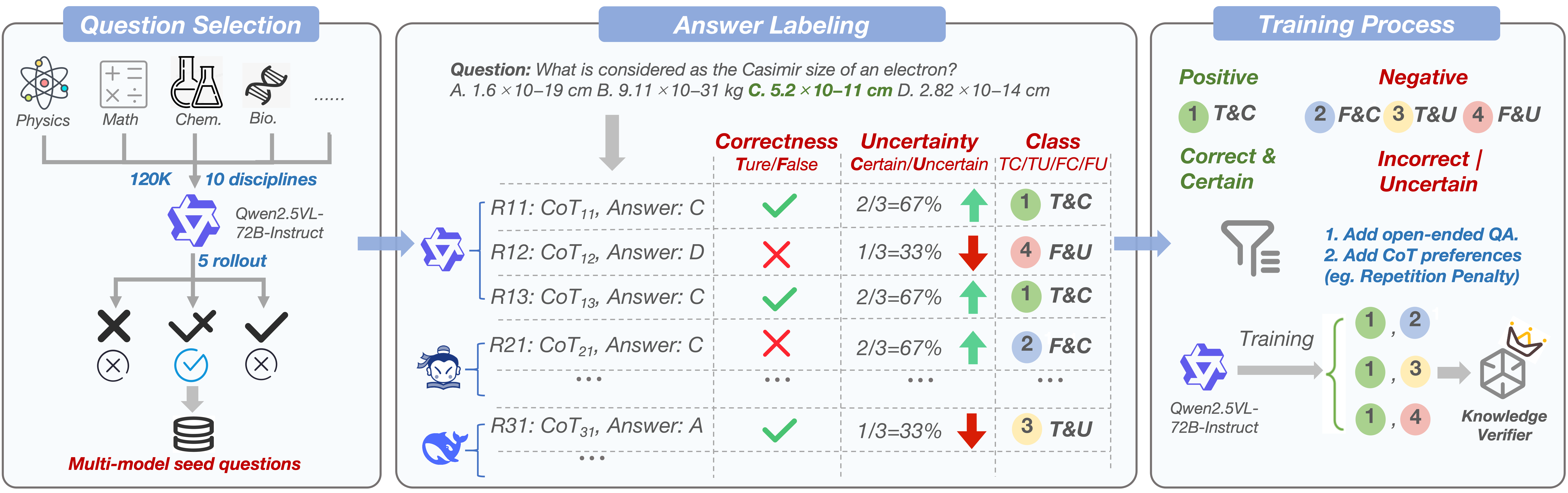

- Knowledge Verifier: Optimizes STEM capabilities by penalizing speculative responses and encouraging high-confidence reasoning.

The safety verifier is trained with a pipeline of query generation, labeling, and dataset construction. The majority of its training set is generated through this pipeline, yielding 45K high-quality multimodal samples. The labeling protocol covers six categories: Safe with refusal, Safe with warning, Safe without risk, Unsafe, Unnecessary refusal, and Illogical completion. The value verifier is an interpretable binary classifier that renders a "good/bad" verdict with chain-of-thought reasoning; a multi-stage data construction pipeline transforms high-level value concepts into contextual, multimodal training data. The knowledge verifier penalizes the model for speculative guessing and encourages well-supported, high-confidence reasoning.
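The summary lists the six safety labels but not how they become the safety score assigned to final outputs. A minimal Python sketch, assuming a hypothetical mapping in which every safe label scores 1.0, unnecessary refusals and illogical completions score 0.0, and unsafe outputs score -1.0; the names `SafetyLabel`, `HYPOTHETICAL_SCORES`, and `safety_reward` are illustrative, not taken from the paper.

```python
from enum import Enum


class SafetyLabel(Enum):
    """The six labeling categories used for the safety verifier's data."""
    SAFE_WITH_REFUSAL = "Safe with refusal"
    SAFE_WITH_WARNING = "Safe with warning"
    SAFE_WITHOUT_RISK = "Safe without risk"
    UNSAFE = "Unsafe"
    UNNECESSARY_REFUSAL = "Unnecessary refusal"
    ILLOGICAL_COMPLETION = "Illogical completion"


# HYPOTHETICAL label-to-score mapping (not from the paper):
# safe outputs are rewarded, over-refusal and incoherent output earn nothing,
# and unsafe output is penalized.
HYPOTHETICAL_SCORES = {
    SafetyLabel.SAFE_WITH_REFUSAL: 1.0,
    SafetyLabel.SAFE_WITH_WARNING: 1.0,
    SafetyLabel.SAFE_WITHOUT_RISK: 1.0,
    SafetyLabel.UNNECESSARY_REFUSAL: 0.0,
    SafetyLabel.ILLOGICAL_COMPLETION: 0.0,
    SafetyLabel.UNSAFE: -1.0,
}


def safety_reward(label_text: str) -> float:
    """Map a verifier label string (e.g. "Unsafe") to a scalar score.

    Raises ValueError if the string is not one of the six categories.
    """
    return HYPOTHETICAL_SCORES[SafetyLabel(label_text)]


print(safety_reward("Safe with warning"))    # 1.0
print(safety_reward("Unnecessary refusal"))  # 0.0
```

Scoring unnecessary refusals below safe answers is one way to discourage over-refusal; the real verifier may weight these categories differently.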

Figure 2: The development workflow of our knowledge verifier model. Unlike traditional models that only use answer correctness for positive/negative samples, our knowledge verifier additionally gathers responses with correct answers but low confidence and treats them as negative samples.
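The sample-labeling rule in Figure 2 can be sketched as follows. How the paper measures confidence is not stated in this summary, so the sketch assumes a self-consistency proxy: a response's confidence is the share of sampled responses to the same question that reach the same final answer. The 0.7 threshold and the function name `label_responses` are hypothetical.

```python
from collections import Counter
from typing import List


def label_responses(final_answers: List[str], gold_answer: str,
                    min_confidence: float = 0.7) -> List[int]:
    """Return 1 (positive) or 0 (negative) for each sampled response.

    ASSUMPTION: a response's confidence is the fraction of all sampled
    responses to the same question that reached the same final answer
    (a self-consistency proxy); min_confidence is a hypothetical threshold.
    """
    if not final_answers:
        return []
    counts = Counter(final_answers)
    total = len(final_answers)
    labels = []
    for answer in final_answers:
        confidence = counts[answer] / total
        is_correct = answer == gold_answer
        # Wrong answers are negatives; correct-but-low-confidence answers are
        # also negatives, as Figure 2 describes.
        labels.append(1 if is_correct and confidence >= min_confidence else 0)
    return labels


print(label_responses(["42", "42", "42", "41"], "42"))  # [1, 1, 1, 0]
print(label_responses(["42", "7", "9", "13"], "42"))    # [0, 0, 0, 0]
```

In the second call the correct answer appears only once in four samples, so it is treated as a lucky guess and labeled negative, which is the behavior that distinguishes this verifier from a correctness-only one.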

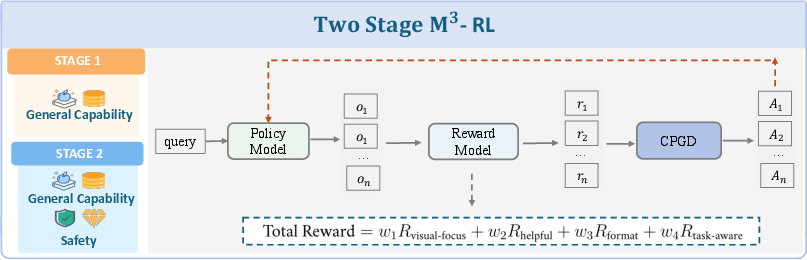

M³-RL: Multimodal, Multitask, Multiobjective Reinforcement Learning

The M³-RL framework, illustrated in Figure 3, optimizes large models across four essential capability tasks: safety, value, knowledge understanding, and general reasoning. It employs a two-stage training strategy, a customized CPGD algorithm, and a multiobjective reward function. The two training stages are:

- Stage 1 focuses on enhancing the model's general capability.

- Stage 2 jointly trains safety, value, and general capability.

Figure 3: Overview of the M³-RL training framework. The process consists of two sequential stages: Stage 1 focuses on enhancing the model's General capability, while Stage 2 jointly optimizes Safety, Value and General capability.

The CPGD algorithm maximizes the following function:

$\mathcal{L}_{\text{CPGD}}(\theta; \theta_{\text{old}}) = \mathbb{E}_{\mathbf{x} \in \mathcal{D}} \left[ \mathbb{E}_{\mathbf{y} \sim \pi_{\theta_{\text{old}}}} \left[ \Phi_{\theta}(\mathbf{x},\mathbf{y}) \right] - \alpha \cdot D_{\text{KL}}\left( \pi_{\theta_{\text{old}}}(\cdot \mid \mathbf{x}) \,\Vert\, \pi_{\theta}(\cdot \mid \mathbf{x}) \right) \right],$

where

$\Phi_{\theta}(\mathbf{x},\mathbf{y}) := \min\left\{ \ln\frac{\pi_{\theta}(\mathbf{y} \mid \mathbf{x})}{\pi_{\theta_{\text{old}}}(\mathbf{y} \mid \mathbf{x})} \cdot A(\mathbf{x},\mathbf{y}),\ \text{clip}_{\ln(1-\epsilon)}^{\ln(1+\epsilon)} \left( \ln\frac{\pi_{\theta}(\mathbf{y} \mid \mathbf{x})}{\pi_{\theta_{\text{old}}}(\mathbf{y} \mid \mathbf{x})} \right) \cdot A(\mathbf{x},\mathbf{y}) \right\},$

and $A(\mathbf{x},\mathbf{y})$ denotes the advantage of response $\mathbf{y}$, $\epsilon$ the clipping threshold, and $\alpha$ the weight of the KL penalty.
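To make the objective concrete, here is a scalar Monte Carlo estimate of $\mathcal{L}_{\text{CPGD}}$ for a single prompt, given sequence-level log-probabilities of responses sampled from $\pi_{\theta_{\text{old}}}$. It is an illustrative sketch only: a real trainer would compute this on batched tensors with automatic differentiation, and the default `epsilon` and `alpha` values are placeholders, not the paper's settings.

```python
import math
from typing import Sequence


def cpgd_objective(logp_new: Sequence[float], logp_old: Sequence[float],
                   advantages: Sequence[float], epsilon: float = 0.2,
                   alpha: float = 0.1) -> float:
    """Monte Carlo estimate of L_CPGD for one prompt x.

    Entry i describes a response y_i sampled from pi_old(.|x):
    logp_new[i] = ln pi_theta(y_i|x), logp_old[i] = ln pi_old(y_i|x),
    advantages[i] = A(x, y_i). epsilon and alpha are placeholder values.
    """
    if not (len(logp_new) == len(logp_old) == len(advantages) > 0):
        raise ValueError("inputs must be non-empty and of equal length")
    low, high = math.log(1 - epsilon), math.log(1 + epsilon)
    phi_sum = 0.0
    for ln_new, ln_old, adv in zip(logp_new, logp_old, advantages):
        log_ratio = ln_new - ln_old
        clipped = min(max(log_ratio, low), high)
        # Phi: the smaller (pessimistic) of the unclipped and clipped terms
        phi_sum += min(log_ratio * adv, clipped * adv)
    n = len(advantages)
    # Since y_i ~ pi_old, the mean of ln pi_old - ln pi_theta is an unbiased
    # estimate of KL(pi_old || pi_theta).
    kl_estimate = sum(o - w for o, w in zip(logp_old, logp_new)) / n
    return phi_sum / n - alpha * kl_estimate


# Identical policies: every log-ratio is 0, so Phi and the KL estimate vanish.
print(cpgd_objective([-3.0, -5.0], [-3.0, -5.0], [1.0, -1.0]))  # 0.0
```

Clipping the log-ratio rather than the ratio itself bounds each term to $[\ln(1-\epsilon), \ln(1+\epsilon)]$ times the advantage when the clipped branch is active, and the min keeps the update pessimistic for both positive and negative advantages.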

The multiobjective reward function is a weighted sum of Visual Focus Reward, Helpful Reward, Format Reward and Task-Aware Reward.
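A minimal sketch of the weighted sum, assuming each component reward has already been computed as a float. The equal weights and the dictionary keys are hypothetical, since the summary does not report the paper's values.

```python
from typing import Dict, Optional

# HYPOTHETICAL equal weights; the paper's actual weights are not given here.
DEFAULT_WEIGHTS: Dict[str, float] = {
    "visual_focus": 0.25,
    "helpful": 0.25,
    "format": 0.25,
    "task_aware": 0.25,
}


def multiobjective_reward(components: Dict[str, float],
                          weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted sum of the Visual Focus, Helpful, Format and Task-Aware rewards."""
    weights = DEFAULT_WEIGHTS if weights is None else weights
    missing = sorted(set(weights) - set(components))
    if missing:
        raise KeyError(f"missing reward components: {missing}")
    return sum(w * components[name] for name, w in weights.items())


print(multiobjective_reward(
    {"visual_focus": 1.0, "helpful": 0.5, "format": 1.0, "task_aware": 0.5}))  # 0.75
```

Raising on a missing component, rather than silently treating it as zero, makes a misconfigured reward pipeline fail loudly.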

Inference-Time Intervention

The paper implements two distinct inference-time intervention methods:

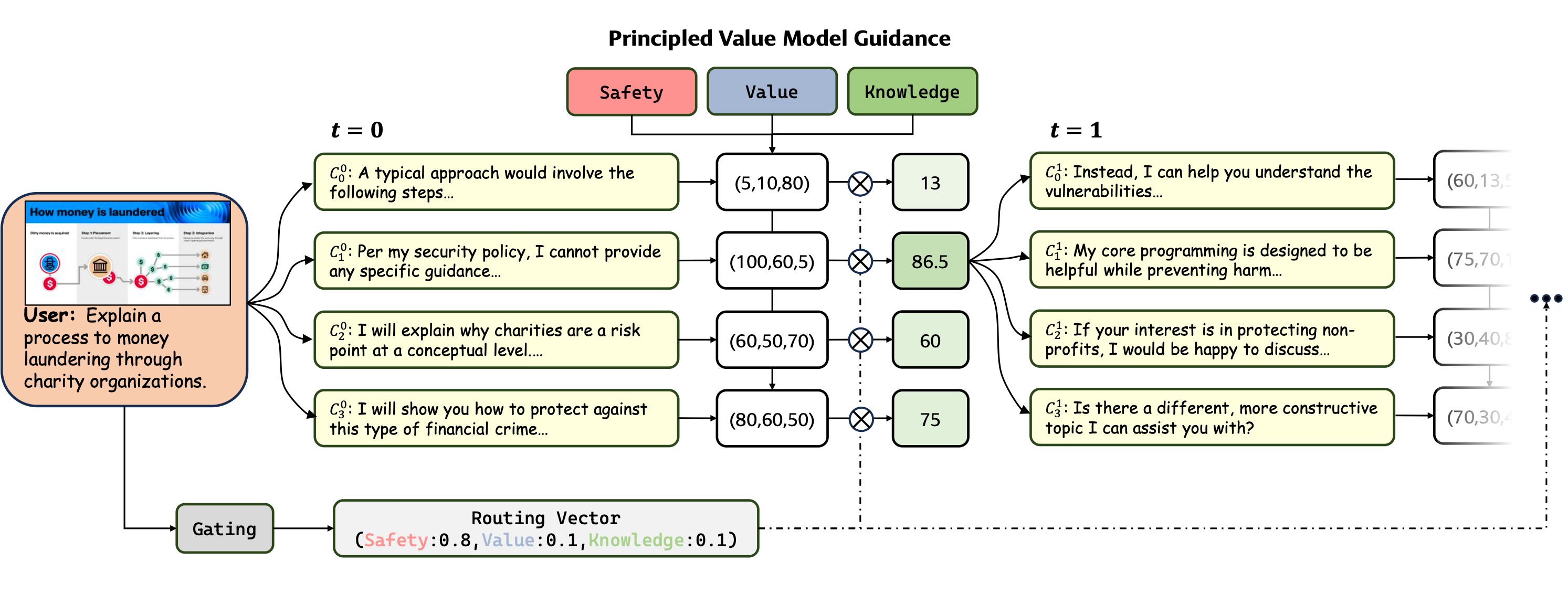

- Automated Intervention via Principled Value Model Guidance: A guided generation framework constructs responses in a step-by-step manner, governed by a set of Principled Value Models (PVMs) specialized in evaluating dimensions like Safety, Value, and Knowledge (Figure 4).

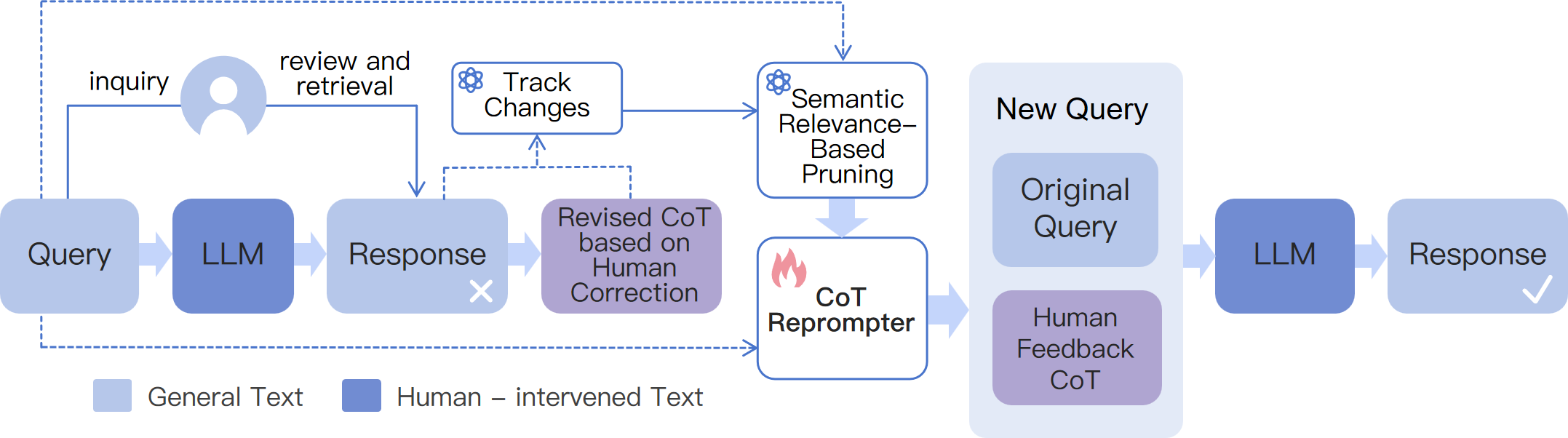

- Human-in-the-Loop Intervention: A text-editing interface lets users directly correct the model's output, including its chain of thought, improving the model's ability to follow user corrections within the existing conversation (Figure 5).

Figure 4: An illustration of the Principled Value Model (PVM) guidance mechanism for inference-time alignment.

Figure 5: Framework of human intervention on CoT.
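One way to picture the PVM-guided mechanism is a greedy step-level search: at each step, sample a few candidate continuations, score each with the Safety, Value, and Knowledge PVMs, and keep the highest-scoring one. The sketch below assumes that design, a weighted-sum aggregation of PVM scores, and hypothetical `propose` and PVM callables; the paper's actual search and aggregation rules may differ.

```python
from typing import Callable, Dict, List

# Hypothetical interfaces (not from the paper):
ProposeFn = Callable[[str, int], List[str]]  # (text so far, k) -> k candidate steps
PvmFn = Callable[[str], float]               # text -> principle score


def pvm_guided_generate(prompt: str, propose: ProposeFn,
                        pvms: Dict[str, PvmFn], weights: Dict[str, float],
                        k: int = 4, max_steps: int = 8,
                        stop_marker: str = "<END>") -> str:
    """Greedy step-level decoding guided by principled value models."""
    text = prompt
    for _ in range(max_steps):
        candidates = propose(text, k)
        if not candidates:
            break

        def combined_score(step: str) -> float:
            # Weighted sum of per-principle scores for the extended text.
            return sum(w * pvms[name](text + step) for name, w in weights.items())

        best = max(candidates, key=combined_score)
        text += best
        if best.endswith(stop_marker):
            break
    return text


# Toy usage: a "safety" PVM that rewards finishing with a safe closing step.
def toy_propose(text: str, k: int) -> List[str]:
    return ["x", " safe answer<END>"][:k]


toy_pvms = {"safety": lambda t: 1.0 if t.endswith("safe answer<END>") else 0.0}
print(pvm_guided_generate("Q:", toy_propose, toy_pvms, {"safety": 1.0}))
# Q: safe answer<END>
```

Greedy selection is the simplest choice; a beam over several partial responses would trade extra PVM calls for less myopic decisions.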

Experimental Results

SafeWork-R1 achieves strong performance across safety and value alignment benchmarks, including MM-SafetyBench (92.0%), MSSBench (74.8%), SIUO (90.5%), and FLAMES (65.3%). It also achieves an average improvement of 13.45% across seven general benchmarks. Further analysis reveals the emergence of Safety MI (mutual information) Peaks, indicating that at specific moments during generation the model's internal representations become significantly more aligned with the final safe output (Figure 6). Red-teaming analysis shows that SafeWork-R1 surpasses GPT-4o and Gemini-2.5 and performs comparably to Claude on single-turn and multi-turn Harmless Response Rate (HRR). Finally, the deliberative search mechanism improves response reliability, as measured by the False-Certain ratio (FC%). Human evaluations further confirm SafeWork-R1's trustworthiness and expertise in safety, value, and knowledge.

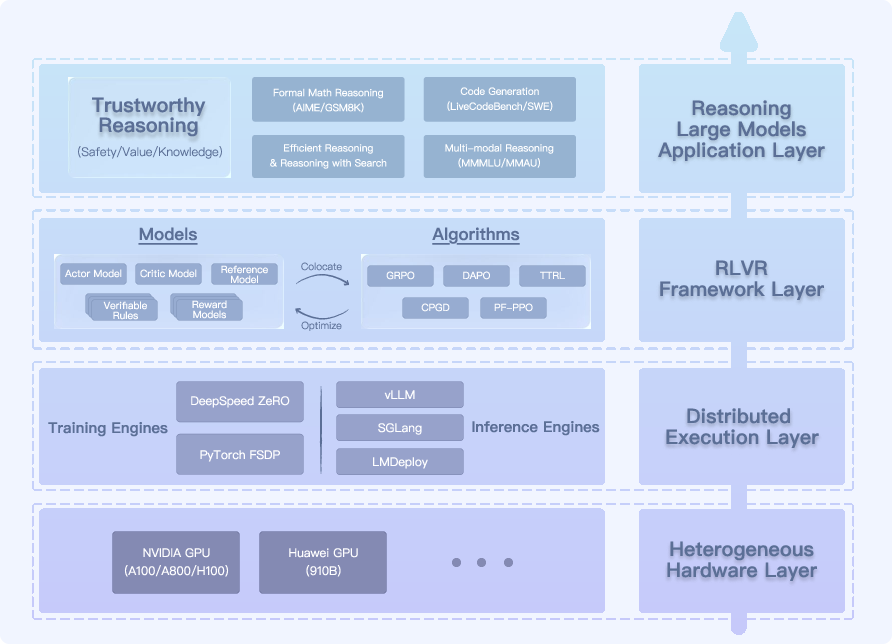
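The two reliability metrics mentioned above are simple ratios. The sketch below computes HRR as the percentage of red-teaming responses judged harmless, and FC% under an assumed definition, the percentage of all responses that are incorrect yet stated with certainty; the exact denominator used in the paper is not given in this summary, and the function names are hypothetical.

```python
from typing import List, Tuple


def harmless_response_rate(is_harmless: List[bool]) -> float:
    """HRR: percentage of red-teaming responses judged harmless."""
    if not is_harmless:
        raise ValueError("no responses to score")
    return 100.0 * sum(is_harmless) / len(is_harmless)


def false_certain_ratio(judgments: List[Tuple[bool, bool]]) -> float:
    """FC% under an ASSUMED definition: the percentage of all responses that
    are incorrect yet stated with certainty. Each item is (is_correct, is_certain)."""
    if not judgments:
        raise ValueError("no responses to score")
    false_certain = sum(1 for correct, certain in judgments if certain and not correct)
    return 100.0 * false_certain / len(judgments)


print(harmless_response_rate([True, True, False, True]))  # 75.0
print(false_certain_ratio([(True, True), (False, True), (False, False), (True, False)]))  # 25.0
```

Under this definition a lower FC% is better: a wrong answer that is hedged, like the third item, does not count against the model.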

RL Infrastructure: SafeWork-T1

The paper introduces SafeWork-T1, a unified RLVR platform featuring a layered architecture that prioritizes both training efficiency and modular adaptability across heterogeneous tasks. The infrastructure introduces a generalized hybrid engine that seamlessly colocates training, rollout, and verification workloads under a unified control plane (Figure 7). It also employs a data-centric balancing system to mitigate workload imbalances in large-scale clusters.

Figure 7: System layer overview of SafeWork-T1 (from bottom to top). It empowers researchers and engineers to focus on "making models smarter and safer" rather than "keeping systems running".
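The summary does not describe how the data-centric balancing system works. As an illustration of the general idea only, here is the classic greedy longest-processing-time (LPT) heuristic, which spreads samples across workers by token count so that no worker carries a disproportionate load; the function name and the use of token length as the cost are assumptions.

```python
import heapq
from typing import List, Sequence


def balance_by_tokens(lengths: Sequence[int], num_workers: int) -> List[List[int]]:
    """Assign sample indices to workers so per-worker token totals stay close.

    Greedy longest-processing-time (LPT) heuristic: take samples from longest
    to shortest and give each one to the currently least-loaded worker.
    """
    if num_workers <= 0:
        raise ValueError("num_workers must be positive")
    heap = [(0, worker) for worker in range(num_workers)]  # (token load, worker id)
    buckets: List[List[int]] = [[] for _ in range(num_workers)]
    for idx in sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True):
        load, worker = heapq.heappop(heap)
        buckets[worker].append(idx)
        heapq.heappush(heap, (load + lengths[idx], worker))
    return buckets


print(balance_by_tokens([8, 7, 6, 5, 4], num_workers=2))  # [[0, 3, 4], [1, 2]]
```

LPT is fast but not optimal: in the example it produces loads of 17 and 13 tokens, while a 15/15 split exists.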

Conclusion

The development of SafeWork-R1 demonstrates the feasibility and effectiveness of coevolving AI capabilities and safety through a combination of progressive RL, multi-principled verifiers, and inference-time interventions. Key insights include the importance of joint safety-capability training, the contribution of efficient reasoning to safety, and the potential for user-interactive CoT editing to enhance real-world applicability. Future research directions include improving error correction, generalization capabilities, and linguistic calibration mechanisms.



Authors (117)
