- The paper introduces a latent-space ideation framework that enhances LLM creativity by exploring continuous embedding spaces using interpolation, extrapolation, and noise-based perturbation.

- It employs a cross-modal projection mechanism to convert latent vectors into token embeddings, enabling the decoder LLM to produce coherent and innovative textual ideas.

- In the empirical evaluation, a single application of the method expands the idea population fivefold and improves both the originality and the fluency of the outputs.

Latent Space Exploration for LLM-Based Novelty Discovery

This paper introduces a novel framework designed to enhance the creativity of LLMs by leveraging latent space exploration for innovative idea generation. The method addresses the limitations of LLMs, which often struggle to produce novel and relevant outputs due to their tendency to replicate patterns observed during training. The proposed framework offers a model-agnostic approach that navigates the continuous embedding space of ideas, thereby enabling controlled and scalable creativity across various domains and tasks.

Background and Motivation

LLMs excel at generating fluent and contextually relevant ideas but often lack genuine originality. While increasing randomness or instructing an LLM to "be creative" yields limited improvements, augmenting LLMs with heuristic seeding and structured idea transformations has shown promise. However, these methods typically rely on hand-crafted rules and domain-specific representations, limiting their scalability. The authors address this limitation by proposing a latent-space ideation framework that automatically explores variations of seed ideas within a continuous latent "idea space." The key insight is that generative models implicitly organize concepts in a high-dimensional latent space, where semantic relationships and potential combinations are encoded as vector operations. By navigating this space, the framework uncovers imaginative combinations that would be difficult to achieve through direct prompting.

Latent-Space Ideation Framework

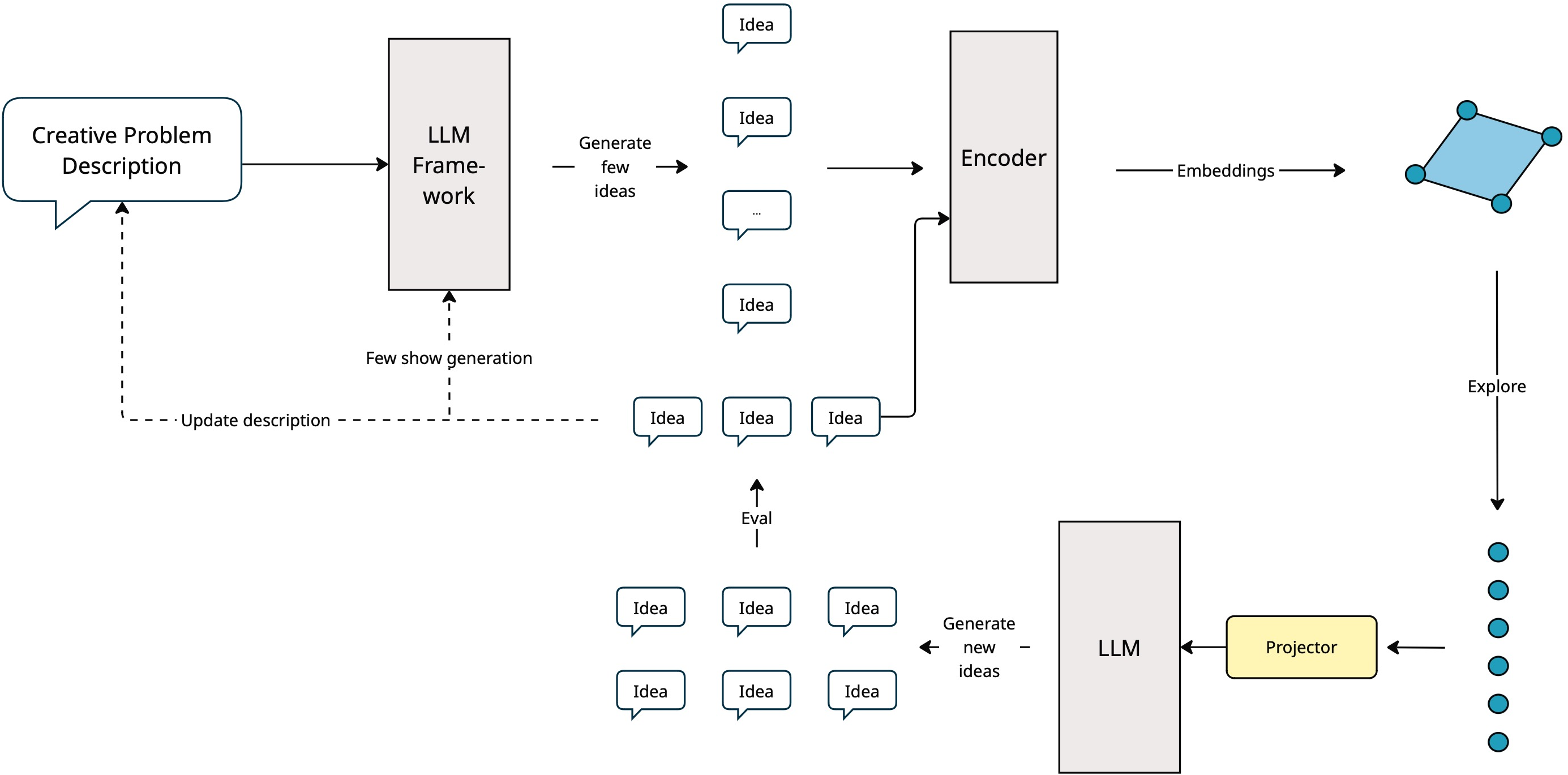

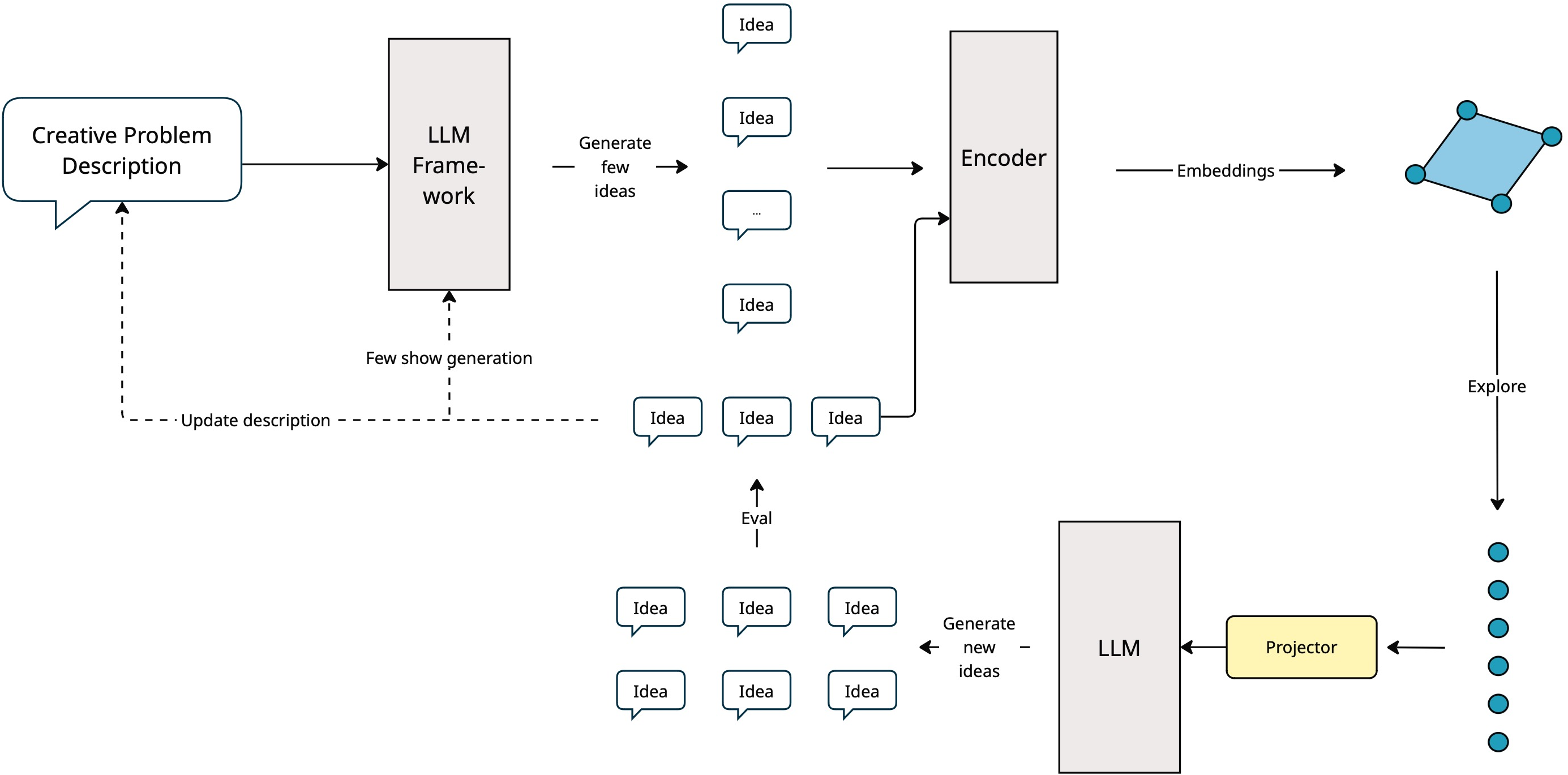

The framework consists of modular components that transform an initial problem description into diverse and expanded ideas. The architecture includes a semantic encoder, a latent explorer, a cross-modal projector, a decoder LLM, and an evaluator LLM.

Figure 1: Overview of the latent-space ideation framework, which includes encoding seed ideas, exploring the latent space, projecting into the token embedding space, decoding novel ideas, and evaluating the generated outputs.

The process begins with optional seed generation using an LLM, followed by encoding seed ideas into latent vectors using a text encoder. The latent space is explored through interpolation, extrapolation, or noise-based perturbation to generate new candidate embeddings. A learned projector maps these latent vectors into the token embedding space of a decoder LLM, which generates natural-language idea descriptions. Finally, an evaluator LLM scores the generated ideas based on originality and relevancy. High-scoring ideas can be fed back into the latent space to enable iterative refinement.
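The flow described above can be sketched as one round of a loop. This is a minimal illustration, not the authors' implementation: `ideation_round` and every `toy_*` function are hypothetical stand-ins for the encoder, latent explorer, projector plus decoder, and evaluator, and the choice to explore every pair of seeds is an assumption made only to keep the example small.

```python
import itertools
from typing import Callable, Sequence

Vector = list[float]


def ideation_round(
    seeds: Sequence[str],
    encode: Callable[[str], Vector],
    explore: Callable[[Vector, Vector], Vector],
    decode: Callable[[Vector], str],
    score: Callable[[str], float],
    min_score: float,
) -> list[str]:
    """Run one pass: encode seeds, explore pairs, decode, keep high scorers."""
    latents = [encode(seed) for seed in seeds]
    kept = []
    for e_i, e_j in itertools.combinations(latents, 2):
        # Projection into the decoder's embedding space is folded into `decode`.
        candidate = decode(explore(e_i, e_j))
        if score(candidate) >= min_score:
            kept.append(candidate)
    return kept


# Toy stand-ins (hypothetical) so the sketch runs without any model.
def toy_encode(text: str) -> Vector:
    return [float(len(text)), float(text.count(" "))]


def toy_explore(a: Vector, b: Vector) -> Vector:
    return [0.5 * x + 0.5 * y for x, y in zip(a, b)]


def toy_decode(vec: Vector) -> str:
    return f"idea near {vec}"


def toy_score(text: str) -> float:
    return 5.0  # a real evaluator LLM would rate originality and relevance


seeds = ["solar kite", "folding bike", "quiet drone"]
new_ideas = ideation_round(
    seeds, toy_encode, toy_explore, toy_decode, toy_score, min_score=4.0
)
population = seeds + new_ideas  # high-scoring ideas can seed the next round
```

Passing the components as callables mirrors the modular design: any encoder, exploration strategy, or evaluator with a matching signature can be swapped in without changing the loop.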

Exploration Strategies

The paper considers three strategies for exploring the latent space around the set of known embeddings:

- Interpolation: Samples a point between two embeddings $e_i$ and $e_j$ using a parameter $\lambda \in [0,1]$: $e_{\text{new}} = \lambda e_i + (1-\lambda) e_j$.

- Extrapolation: Extends beyond the known embedding space by setting $\lambda \notin [0,1]$ in the same formula, which explores novel semantic directions.

- Noise-based Perturbation: Introduces isotropic Gaussian noise to an existing embedding: $e_{\text{new}} = e_i + \epsilon$, where $\epsilon \sim \mathcal{N}(0, \sigma^2 I)$.

The empirical evaluation focuses on interpolation, but the framework can accommodate any continuous metaheuristic or sampling scheme for richer exploration strategies.
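The three strategies reduce to a few lines of vector arithmetic. The sketch below uses plain Python lists as embeddings. The function names `combine` and `perturb` and the example vectors are illustrative assumptions, not part of the paper.

```python
import random

Vector = list[float]


def combine(e_i: Vector, e_j: Vector, lam: float) -> Vector:
    """Return lam * e_i + (1 - lam) * e_j.

    lam in [0, 1] interpolates; lam outside [0, 1] extrapolates.
    """
    if len(e_i) != len(e_j):
        raise ValueError("embeddings must have the same dimension")
    return [lam * a + (1.0 - lam) * b for a, b in zip(e_i, e_j)]


def perturb(e_i: Vector, sigma: float, rng: random.Random) -> Vector:
    """Add isotropic Gaussian noise eps ~ N(0, sigma^2 I) to e_i."""
    return [a + rng.gauss(0.0, sigma) for a in e_i]


e_i = [1.0, 0.0, 2.0]
e_j = [0.0, 1.0, 4.0]

midpoint = combine(e_i, e_j, 0.5)  # interpolation: [0.5, 0.5, 3.0]
beyond = combine(e_i, e_j, 1.5)    # extrapolation past e_i: [1.5, -0.5, 1.0]
noisy = perturb(e_i, sigma=0.1, rng=random.Random(0))
```

Interpolation and extrapolation share one function because they differ only in the range of the mixing weight. Perturbation needs a single parent embedding and a noise scale.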

Cross-Modal Projection and Decoding

The framework maps new vectors $e_{\text{new}} \in \mathbb{R}^d$ into the token embedding space of a decoder LLM using a learned projector $W_p: \mathbb{R}^d \to \mathbb{R}^m$, where $m$ matches the LLM's token embedding dimension. The output $h_X = W_p(e_{\text{new}})$ is inserted as a special token embedding [X] into the input sequence, acting as a latent-conditioned prompt, similar to continuous prefix-tuning. The decoder LLM then generates a textual description $y_{\text{new}} = \mathrm{Dec}(h_X)$, treating $h_X$ as a learned virtual token and expanding it into coherent text.
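To make the shapes concrete, here is a rough sketch of projecting a latent vector and splicing the result into a prompt's embedding sequence. The two-layer ReLU network, the toy dimensions, and the random, untrained weights are all assumptions for illustration. The projector used in the paper is a learned MLP whose architecture is not detailed here, and the decoder call itself is omitted.

```python
import random

Vector = list[float]
Layer = tuple[list[Vector], Vector]  # (weight rows, bias)


def make_linear(n_in: int, n_out: int, rng: random.Random) -> Layer:
    """Create a randomly initialised linear layer (untrained, illustration only)."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def linear(x: Vector, layer: Layer) -> Vector:
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def project(e_new: Vector, hidden_layer: Layer, output_layer: Layer) -> Vector:
    """Map a latent vector in R^d to a token-embedding-sized vector in R^m."""
    return linear(relu(linear(e_new, hidden_layer)), output_layer)


def insert_virtual_token(
    prompt_embeddings: list[Vector], h_x: Vector, position: int
) -> list[Vector]:
    """Place h_x at the slot reserved for the special token [X]."""
    return prompt_embeddings[:position] + [h_x] + prompt_embeddings[position:]


rng = random.Random(0)
d, hidden, m = 4, 8, 6  # toy sizes; real models use thousands of dimensions
hidden_layer = make_linear(d, hidden, rng)
output_layer = make_linear(hidden, m, rng)

e_new = [0.2, -0.1, 0.4, 0.0]
h_x = project(e_new, hidden_layer, output_layer)

prompt_embeddings = [[0.0] * m for _ in range(3)]  # stand-in for embedded prompt tokens
decoder_input = insert_virtual_token(prompt_embeddings, h_x, position=1)
```

The resulting sequence would be passed to the decoder as input embeddings rather than token ids, which is what lets a vector with no corresponding vocabulary entry condition the generation.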

Experimental Results

The method was evaluated on the benchmark of Lu et al. (2024), using 10 ideation tasks from each category. Mistral 7B (Jiang et al., 2023) served as the idea generator, SFR-Embedding-Mistral [SFRAIResearch2024] as the encoder, and the MLP projector of Cheng et al. (2024) as the cross-modal projector. GPT-4o acted as the judge and had no influence on idea generation. Interpolation was the exploration strategy, with $\lambda$ drawn from $[0.45, 0.55]$, so each new embedding lies close to the midpoint of its two parent embeddings. The baseline was the LLM Discussion method (Lu et al., 2024), extended with additional ideas generated by the proposed method. The method was applied in a single iteration that increased the population size fivefold, with new ideas sampled and evaluated at each stage. Generated ideas were retained only if they were judged relevant and received an originality score of at least 4. The results indicate that the method improves both Originality and Fluency with each iteration, suggesting that latent space exploration can facilitate the generation of highly creative ideas.
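The sampling and filtering steps of this setup can be illustrated as follows. This is a sketch under stated assumptions: the source gives only the interval for the mixing weight, so uniform sampling is assumed, and the `ScoredIdea` record, its field names, and the example scores are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class ScoredIdea:
    text: str
    relevant: bool    # evaluator's relevance verdict
    originality: int  # evaluator's originality score


def sample_lambda(rng: random.Random, low: float = 0.45, high: float = 0.55) -> float:
    """Draw a mixing weight; uniform sampling is an assumption of this sketch."""
    return rng.uniform(low, high)


def filter_ideas(ideas: list[ScoredIdea], min_originality: int = 4) -> list[ScoredIdea]:
    """Keep ideas judged relevant with originality at or above the threshold."""
    return [i for i in ideas if i.relevant and i.originality >= min_originality]


rng = random.Random(0)
lam = sample_lambda(rng)

scored = [
    ScoredIdea("idea A", relevant=True, originality=5),
    ScoredIdea("idea B", relevant=True, originality=3),
    ScoredIdea("idea C", relevant=False, originality=5),
    ScoredIdea("idea D", relevant=True, originality=4),
]
kept = filter_ideas(scored)
print([idea.text for idea in kept])  # ['idea A', 'idea D']
```

Both conditions must hold for an idea to survive: an original but irrelevant idea is dropped, as is a relevant idea that scores below the threshold.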

Discussion and Conclusion

The paper introduces a latent-space ideation framework that enhances AI-assisted creative idea generation by moving beyond traditional prompt engineering and heuristic-based approaches. By encoding ideas into a continuous latent space and systematically exploring this space through operations like interpolation, the framework generates original and fluent ideas. The framework's compositionality and adaptability enable it to initiate the ideation process from a concise textual brief or user-provided seed ideas, recursively exploring latent neighbors to expand the idea space. The experiments demonstrate that latent-space exploration enhances the originality and fluency of generated ideas across various tasks.

Future Directions

Future research will focus on developing more sophisticated latent space exploration strategies, such as swarm-based optimization algorithms, to increase the efficiency of idea generation and improve flexibility. Integrating more advanced human-in-the-loop feedback mechanisms and dynamic adjustment of exploration parameters could further refine the iterative ideation process. The authors also recognize the need for more efficient and nuanced scoring functions to evaluate generated ideas. Moving beyond reliance on GPT-4o as a judge, they propose exploring specialized evaluators or incorporating more objective, domain-specific metrics to streamline the feedback loop.