- The paper introduces DyG-RAG, a dynamic event graph framework that improves the temporal reasoning of LLMs.

- It employs Dynamic Event Unit (DEU) extraction and time-aware vector search to enable multi-hop retrieval and coherent event timeline generation.

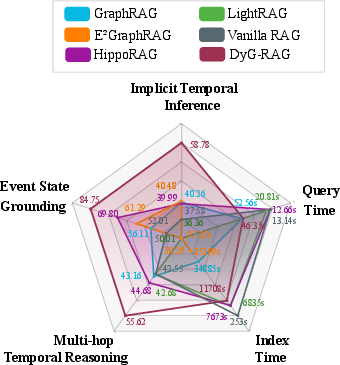

- By structuring and grounding temporal knowledge, the approach achieves higher accuracy and recall on temporal QA benchmarks.

DyG-RAG: Dynamic Graph Retrieval-Augmented Generation with Event-Centric Reasoning

Introduction

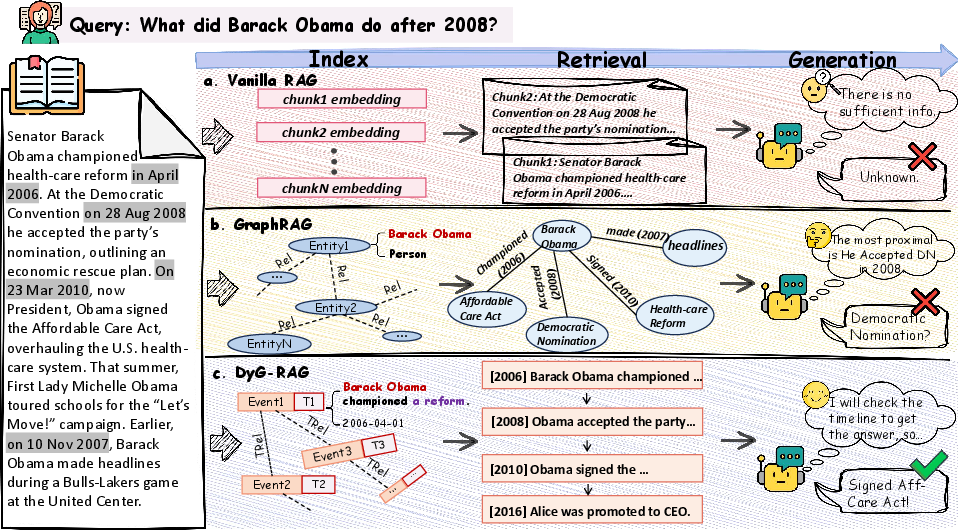

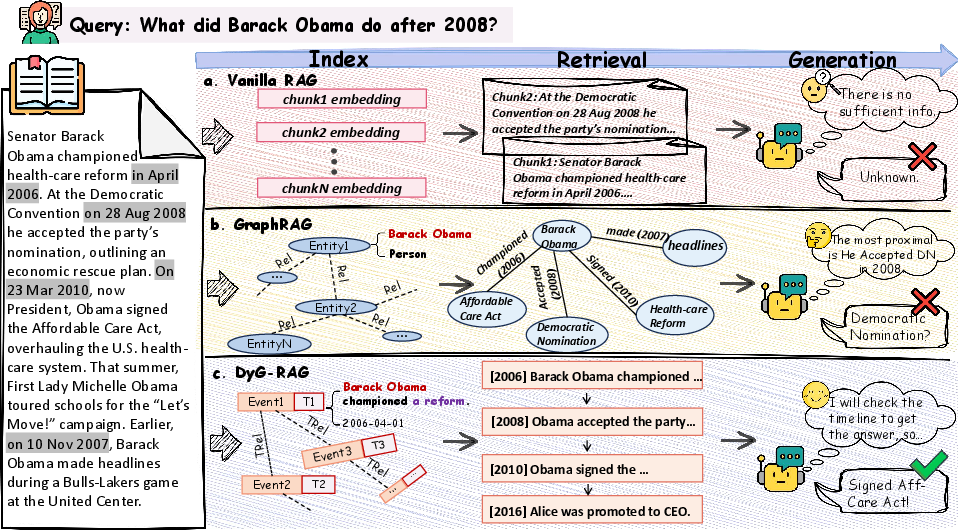

The paper introduces DyG-RAG, a dynamic graph retrieval-augmented generation framework designed to enhance temporal reasoning in LLMs. Existing Graph RAG methods typically do not model temporal dynamics adequately: they often treat retrieved content as isolated, static items and ignore how facts and context evolve over time. DyG-RAG addresses this by representing knowledge as a dynamic event graph, enabling more accurate and interpretable reasoning about the temporal knowledge embedded in unstructured text.

Framework Overview

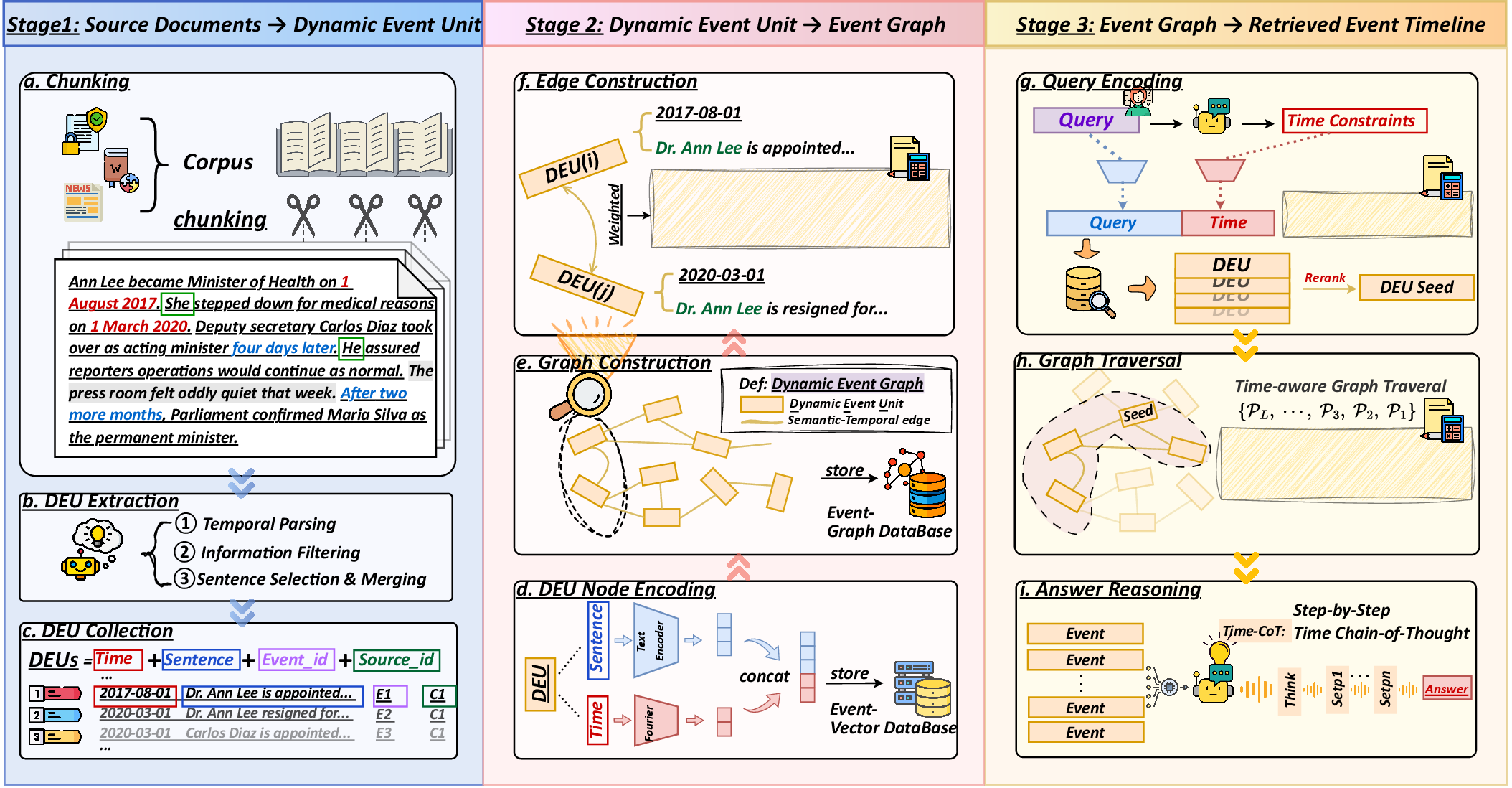

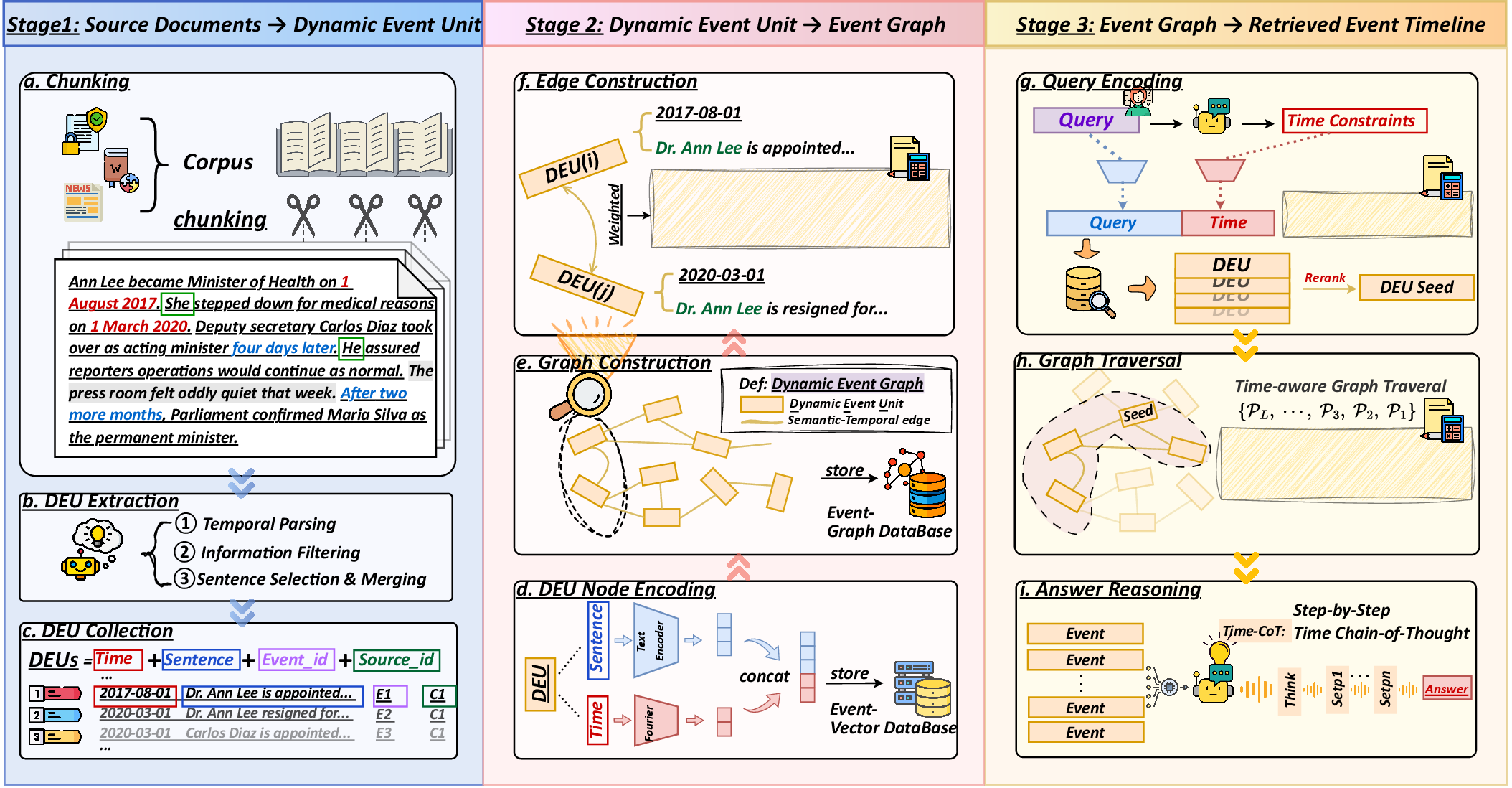

DyG-RAG's framework comprises three stages:

- Dynamic Event Unit (DEU) Extraction: Source documents are parsed into structured DEUs that encode semantic content and precise temporal anchors. These DEUs are the foundational units of the dynamic event graph.

- Event Graph Construction: DEUs are organized into a dynamic Event Graph, where nodes represent DEUs and edges encode temporal-semantic relationships. This structure facilitates multi-hop reasoning by enabling traversal of temporally and semantically linked events.

- Event Timeline Retrieval and Temporal Reasoning: On receiving a query, the system performs bi-encoder query encoding, DEU seed retrieval, and time-aware graph traversal. This process produces a temporally coherent event sequence that serves as the basis for reasoning about the answer. DyG-RAG introduces a Time Chain-of-Thought (Time-CoT) strategy to guide LLMs in generating temporally grounded answers.

Figure 1: Overall framework of DyG-RAG. The framework includes DEU parsing, dynamic event graph construction, and time-aware graph traversal for query resolution.
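The last stage in the list above ends with time-aware graph traversal from seed DEUs. The sketch below is a minimal, hypothetical illustration of that step, assuming an in-memory graph stored as Python dicts, a breadth-first expansion bounded by a hop limit (`max_hops`) and an optional date window (`window`), and a final chronological sort. The function name `timeline_from_seeds`, its parameters, and the toy data are assumptions, not the paper's implementation.

```python
from collections import deque
from datetime import date

# Hypothetical sketch: expand seed DEUs over an event graph and return a timeline.
def timeline_from_seeds(nodes, edges, seeds, max_hops=2, window=None):
    """nodes: id -> (date, text); edges: id -> set of neighbour ids;
    seeds: ids from seed retrieval; window: optional (start, end) dates."""

    def in_window(nid):
        # Nodes outside the optional time window are never visited.
        if window is None:
            return True
        start, end = window
        return start <= nodes[nid][0] <= end

    visited = set()
    queue = deque()
    for s in seeds:
        if s in nodes and s not in visited and in_window(s):
            visited.add(s)
            queue.append((s, 0))

    # Breadth-first traversal bounded by max_hops.
    while queue:
        nid, hops = queue.popleft()
        if hops >= max_hops:
            continue
        for nb in edges.get(nid, ()):
            if nb in nodes and nb not in visited and in_window(nb):
                visited.add(nb)
                queue.append((nb, hops + 1))

    # Chronological order gives the LLM a coherent event sequence.
    return sorted((nodes[n] for n in visited), key=lambda item: item[0])


# Toy example (invented data for illustration only).
nodes = {
    "e1": (date(2019, 1, 1), "A becomes CEO of X"),
    "e2": (date(2021, 6, 1), "A leaves X"),
    "e3": (date(2021, 7, 1), "B becomes CEO of X"),
    "e4": (date(2010, 1, 1), "X is founded"),
}
edges = {"e1": {"e2", "e4"}, "e2": {"e1", "e3"}, "e3": {"e2"}, "e4": {"e1"}}

for d, text in timeline_from_seeds(nodes, edges, seeds=["e2"], max_hops=1):
    print(d.isoformat(), text)
```

With `max_hops=1`, only the direct neighbours of the seed are added, so the founding event stays out of the timeline; raising the hop limit or widening the window trades context for noise.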

Dynamic Event Units and Event Graph

Dynamic Event Units (DEUs) are self-contained statements, each describing an event or state anchored to a specific time. They are extracted from source documents through temporal parsing and information filtering, which supports precise time-aware retrieval.
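To make the notion of a DEU concrete, the sketch below uses a hypothetical `DEU` record and a deliberately simple rule-based pipeline: sentences are split on punctuation, a regex-based `parse_anchor` looks for an ISO date or a bare year, and sentences without an anchor are filtered out. This is an assumption-laden stand-in, not the paper's extraction method. A real parser would also need to make statements self-contained (for example, resolve pronouns such as "she") and handle intervals and relative dates.

```python
import re
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

@dataclass(frozen=True)
class DEU:
    """Hypothetical DEU record: a statement plus its temporal anchor."""
    text: str
    anchor: date  # simplification: a single day; real anchors may be intervals

_ISO_DATE = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")
_YEAR = re.compile(r"\b(1[89]\d{2}|20\d{2})\b")

def parse_anchor(sentence: str) -> Optional[date]:
    """Toy temporal parser: ISO dates first, then a bare year (mapped to Jan 1)."""
    m = _ISO_DATE.search(sentence)
    if m:
        try:
            return date(*map(int, m.groups()))
        except ValueError:
            pass  # malformed date such as 2021-13-40; fall back to the year rule
    m = _YEAR.search(sentence)
    return date(int(m.group(1)), 1, 1) if m else None

def extract_deus(document: str) -> List[DEU]:
    units = []
    # Naive sentence split; a real system would use an LLM or a proper parser.
    for sentence in re.split(r"(?<=[.!?])\s+", document.strip()):
        anchor = parse_anchor(sentence)
        if anchor is None:
            continue  # information filtering: drop statements with no time anchor
        units.append(DEU(sentence, anchor))
    return units

for unit in extract_deus("Alice joined Acme in 2015. She likes coffee. On 2021-06-01 she left Acme."):
    print(unit.anchor.isoformat(), "|", unit.text)
```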

Event graph construction encodes DEUs into dense vector representations that combine semantic and temporal information. Edges reflect both semantic similarity and temporal proximity, linking related events so that the graph captures their narrative flow.
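The edge rule can be sketched as below. This is a minimal, hypothetical illustration: it assumes each DEU already has a date and an embedding, links two DEUs only when their cosine similarity reaches `sim_threshold` and their dates lie within `max_days` of each other, and compares all pairs by brute force. The AND-combination of the two criteria, the function `build_edges`, and both threshold values are assumptions, not the paper's exact criteria.

```python
import math
from datetime import date

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def build_edges(units, sim_threshold=0.8, max_days=365):
    """Hypothetical edge rule. units: list of (id, date, embedding).

    Returns an undirected adjacency dict: id -> set of neighbour ids.
    """
    edges = {uid: set() for uid, _, _ in units}
    for i, (id_i, t_i, e_i) in enumerate(units):
        for id_j, t_j, e_j in units[i + 1:]:
            close_in_time = abs((t_i - t_j).days) <= max_days
            if close_in_time and cosine(e_i, e_j) >= sim_threshold:
                edges[id_i].add(id_j)
                edges[id_j].add(id_i)
    return edges

# Toy 2-d "embeddings", invented for illustration.
units = [
    ("a", date(2020, 1, 1), [1.0, 0.0]),
    ("b", date(2020, 6, 1), [0.9, 0.1]),  # similar to "a" and within a year
    ("c", date(2010, 1, 1), [1.0, 0.0]),  # similar to "a" but a decade earlier
    ("d", date(2020, 2, 1), [0.0, 1.0]),  # close in time but semantically unrelated
]
print(build_edges(units))
```

The pairwise loop is O(n²); at scale one would more likely query an approximate nearest-neighbour index for candidate pairs and apply the temporal check afterwards.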

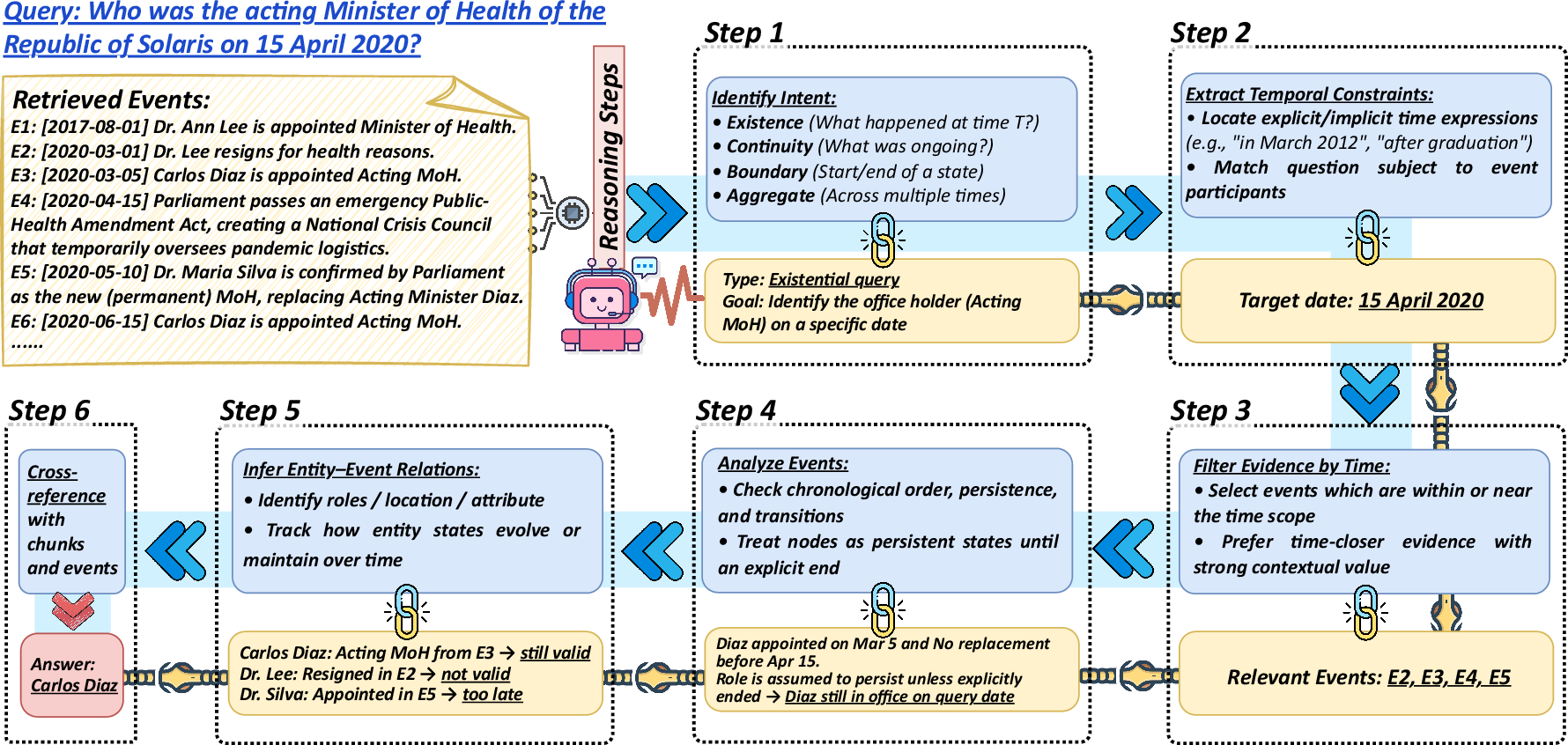

Retrieval Process and Time-CoT Strategy

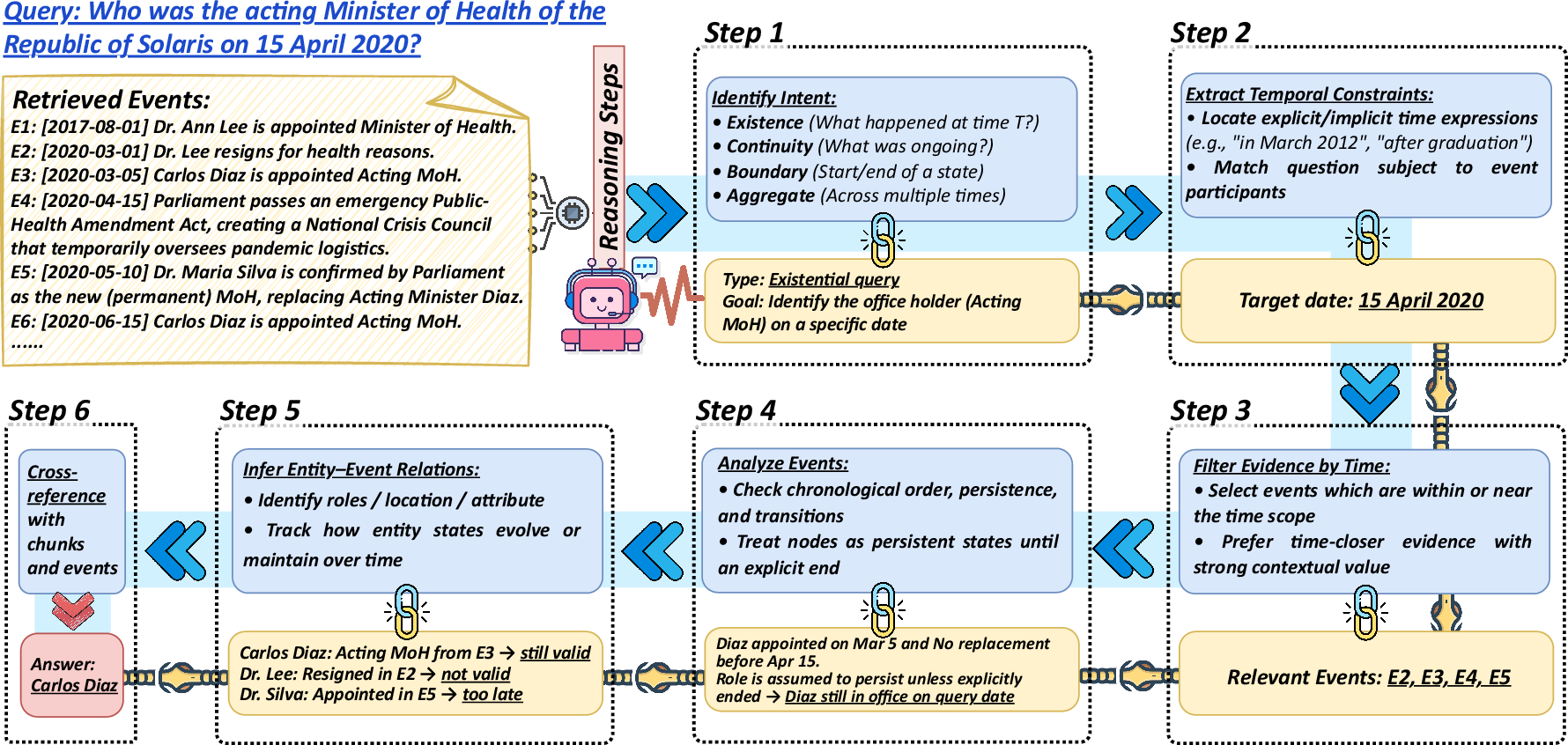

The retrieval process begins by encoding the query into a joint semantic-temporal embedding. Time-aware vector search retrieves candidate event nodes, which a cross-encoder reranker then refines. The Time-CoT strategy makes temporal relations explicit in the LLM prompt, guiding the model through a structured temporal reasoning process to synthesize a coherent answer.
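One way to realize a joint semantic-temporal embedding and time-aware search is sketched below. It is a hypothetical construction, not the paper's encoder: the semantic vector is L2-normalized and scaled by sqrt(alpha), and the timestamp is mapped to a point on a circle (`time_features`, with an assumed 200-year period `PERIOD_DAYS`) scaled by sqrt(1 - alpha). Because cos(a)cos(b) + sin(a)sin(b) = cos(a - b), the dot product of two such vectors equals alpha times the semantic cosine plus (1 - alpha) times the cosine of the time-angle gap, so a single nearest-neighbour search rewards both topical and temporal closeness. The cross-encoder reranking step is omitted, and all names here are illustrative.

```python
import math
from datetime import date

PERIOD_DAYS = 365.25 * 200  # assumed period; gaps under ~100 years lower the score monotonically

def time_features(t):
    """Map a date onto the unit circle (hypothetical temporal encoding)."""
    theta = 2 * math.pi * t.toordinal() / PERIOD_DAYS
    return [math.cos(theta), math.sin(theta)]

def joint_embedding(semantic, t, alpha=0.7):
    """Concatenate a weighted, normalized semantic vector with weighted time features.

    The result has unit norm, and dot(q, d) = alpha * cos_sem + (1 - alpha) * cos(angle gap).
    """
    norm = math.sqrt(sum(x * x for x in semantic)) or 1.0
    sem = [math.sqrt(alpha) * x / norm for x in semantic]
    tf = [math.sqrt(1 - alpha) * x for x in time_features(t)]
    return sem + tf

def time_aware_search(query_vec, index, k=3):
    """Brute-force top-k by dot product. index: list of (node_id, joint_vector)."""
    scored = [(sum(a * b for a, b in zip(query_vec, vec)), nid) for nid, vec in index]
    scored.sort(reverse=True)
    return [nid for _, nid in scored[:k]]

# Toy index, invented for illustration.
index = [
    ("near", joint_embedding([1.0, 0.0], date(2021, 3, 1))),
    ("far", joint_embedding([1.0, 0.0], date(1960, 1, 1))),
    ("off_topic", joint_embedding([0.0, 1.0], date(2021, 1, 1))),
]
query = joint_embedding([1.0, 0.0], date(2021, 1, 1))
print(time_aware_search(query, index, k=2))
```

The weight `alpha` trades topical relevance against temporal closeness; in a full pipeline, the top hits would then be reranked and used as seeds for graph traversal.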

Figure 2: Illustration of Time-CoT, guiding LLMs in a structured temporal reasoning process.
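A Time-CoT style prompt can be assembled from a retrieved timeline roughly as follows. The template wording, the four reasoning steps, and the function `build_time_cot_prompt` are hypothetical; they only illustrate the idea of making temporal order and reasoning steps explicit in the prompt and do not reproduce the paper's actual Time-CoT prompt.

```python
from datetime import date

def build_time_cot_prompt(question, timeline):
    """Hypothetical Time-CoT prompt builder. timeline: list of (date, event_text)."""
    # Present events in chronological order with explicit ISO dates.
    events = sorted(timeline, key=lambda e: e[0])
    event_lines = [f"[{d.isoformat()}] {text}" for d, text in events]
    # Illustrative temporal reasoning steps (assumed, not taken from the paper).
    steps = [
        "1. Identify the time point or interval the question refers to.",
        "2. Select the events that fall within or before that time.",
        "3. Order the relevant events and determine which state held at the target time.",
        "4. Answer using only the selected events; say so if the timeline is insufficient.",
    ]
    return (
        "Events (chronological):\n" + "\n".join(event_lines)
        + "\n\nQuestion: " + question
        + "\n\nReason step by step about time:\n" + "\n".join(steps)
        + "\nAnswer:"
    )

print(build_time_cot_prompt(
    "Who was CEO of X in 2020?",
    [(date(2021, 7, 1), "B becomes CEO of X"), (date(2019, 1, 1), "A becomes CEO of X")],
))
```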

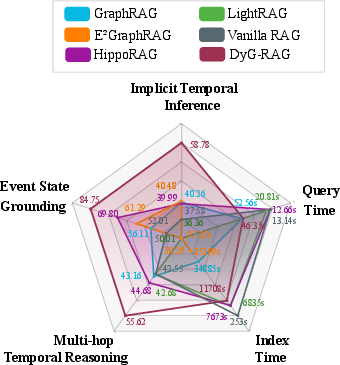

DyG-RAG shows significant improvements over existing methods on temporal QA benchmarks, achieving higher accuracy and recall by capturing and reasoning about the temporal relationships in the data.

Figure 3: Comparison and evaluation of representative RAG methods and DyG-RAG, highlighting superior event state grounding and multi-hop reasoning capabilities.

Conclusion

DyG-RAG represents a significant step forward in equipping LLMs with temporal reasoning capabilities. By structuring knowledge as a dynamic event graph, it enables precise, time-aware retrieval and reasoning. The introduction of DEUs and the Time-CoT strategy illustrates how structured temporal processing can yield more accurate and contextually relevant responses in time-sensitive tasks. Future work could focus on further optimizing retrieval efficiency and extending the approach to broader domains.
