- The paper argues that neural module repetition, inspired by the minicolumn hypothesis, can improve energy efficiency and scalability in AI systems.

- The paper reviews evidence that shared-parameter designs improve generalization across tasks, supporting robust performance in dynamic environments.

- The paper surveys experimental implementations in robotics and distributed control that illustrate practical applications of repeated neural modules.

Overview of the Paper

"The Generalist Brain Module: Module Repetition in Neural Networks in Light of the Minicolumn Hypothesis" investigates neural module repetition within artificial intelligence, focusing on the minicolumn hypothesis. The paper connects this hypothesis to collective intelligence, providing a thorough review of historical, theoretical, and methodological perspectives on repeated neural modules. This exploration aims to bridge the gap between cortical column architectures and repeated neural modules within AI systems, highlighting potential solutions to AI challenges such as energy efficiency, scalability, and generalization.

Theoretical Foundations

Minicolumn Hypothesis

The minicolumn hypothesis serves as the organizing principle for the paper's treatment of module repetition. Mountcastle described the neocortex as a network of repeated modules (cortical columns), introducing the idea that brain function arises from many distributed, replicated units. The Thousand Brains Theory, a more recent extension of this idea, treats each column as an individual agent that learns a complete model of objects through sensorimotor interaction, which offers a framework for sensorimotor learning.
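To make the "columns as individual agents" idea concrete, here is a toy sketch loosely inspired by the Thousand Brains Theory's notion that columns reach consensus by voting. It is an illustration under stated assumptions, not the model from the theory or the paper: every column shares one hypothetical object library, each senses a single feature, proposes the objects consistent with it, and the network keeps the objects with the most votes. The object names, features, and functions are all hypothetical.

```python
from collections import Counter

# Hypothetical object library shared by every column (the "repeated module").
OBJECT_MODELS = {
    "mug": {"handle", "curved_rim", "flat_base"},
    "bowl": {"curved_rim", "flat_base"},
    "pen": {"clip", "tip"},
}

def column_hypotheses(feature, models=OBJECT_MODELS):
    """One column: return the objects consistent with the single feature it senses."""
    return {name for name, feats in models.items() if feature in feats}

def vote(features_per_column, models=OBJECT_MODELS):
    """Each column proposes candidates independently; keep the most-voted objects."""
    votes = Counter()
    for feature in features_per_column:
        votes.update(column_hypotheses(feature, models))
    if not votes:
        return set()
    best = max(votes.values())
    return {name for name, count in votes.items() if count == best}

print(vote(["curved_rim"]))                          # ambiguous: mug and bowl tie
print(vote(["curved_rim", "handle", "flat_base"]))   # three columns agree on mug
```

A single column cannot disambiguate a mug from a bowl, but several identical columns sensing different parts of the object can.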

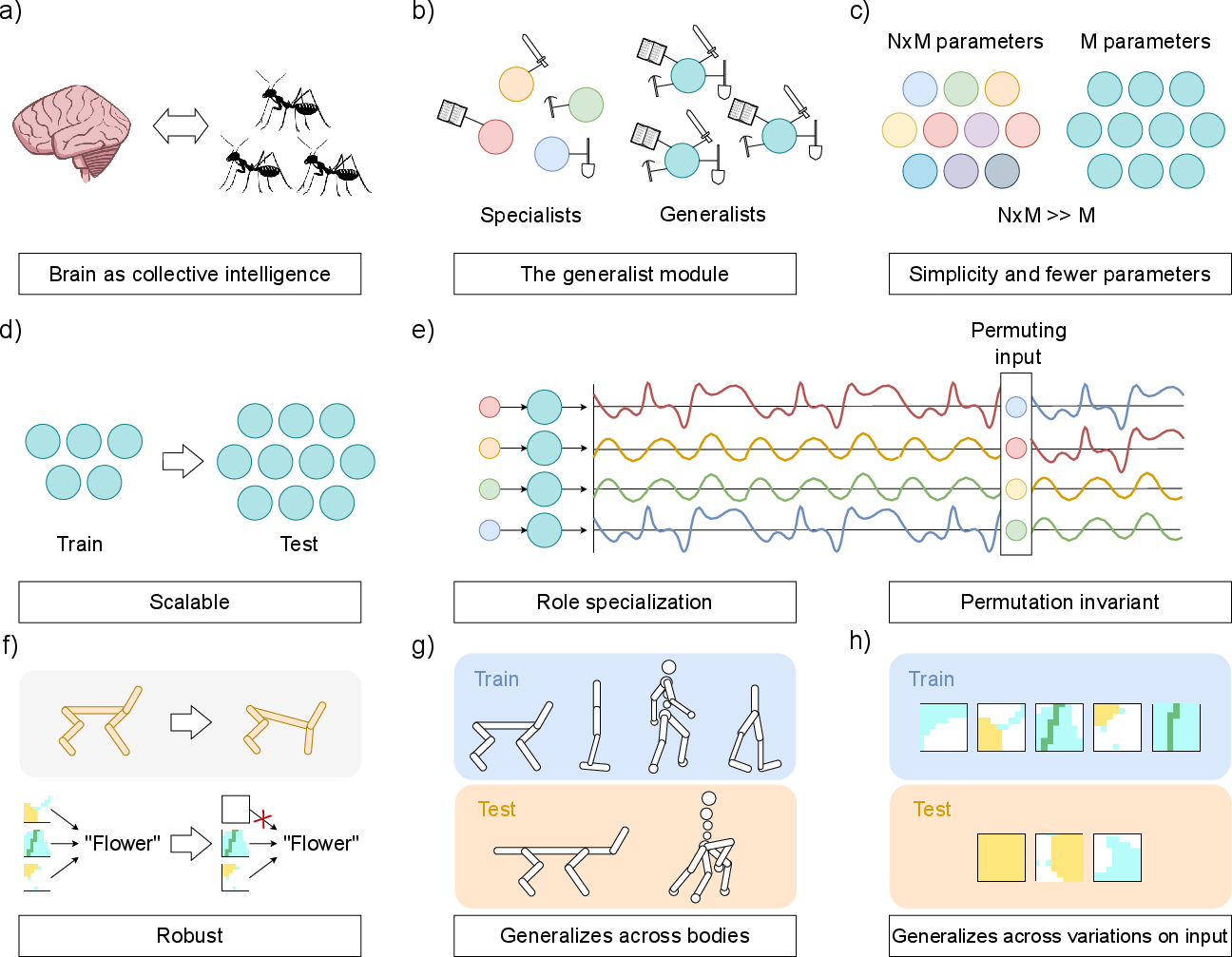

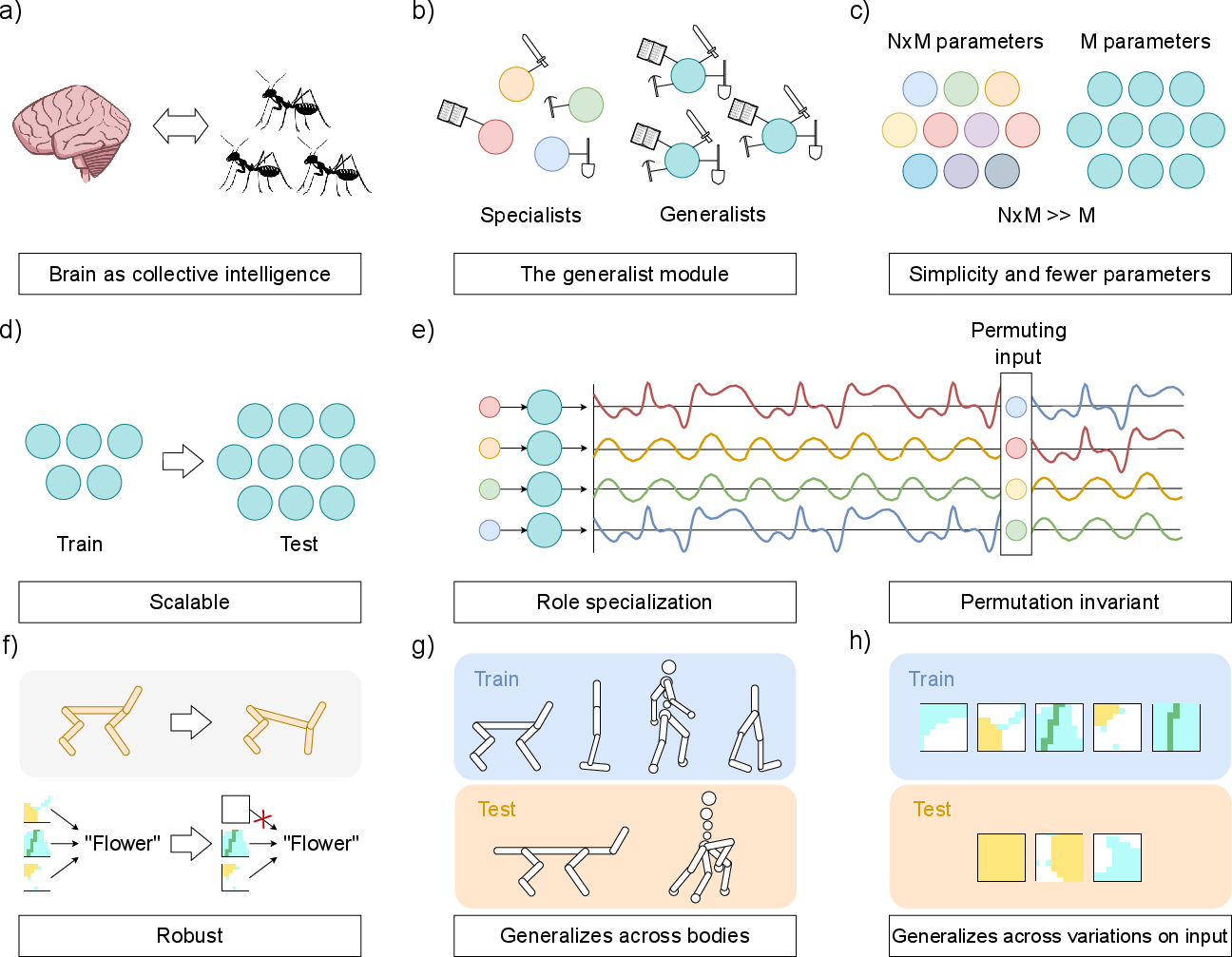

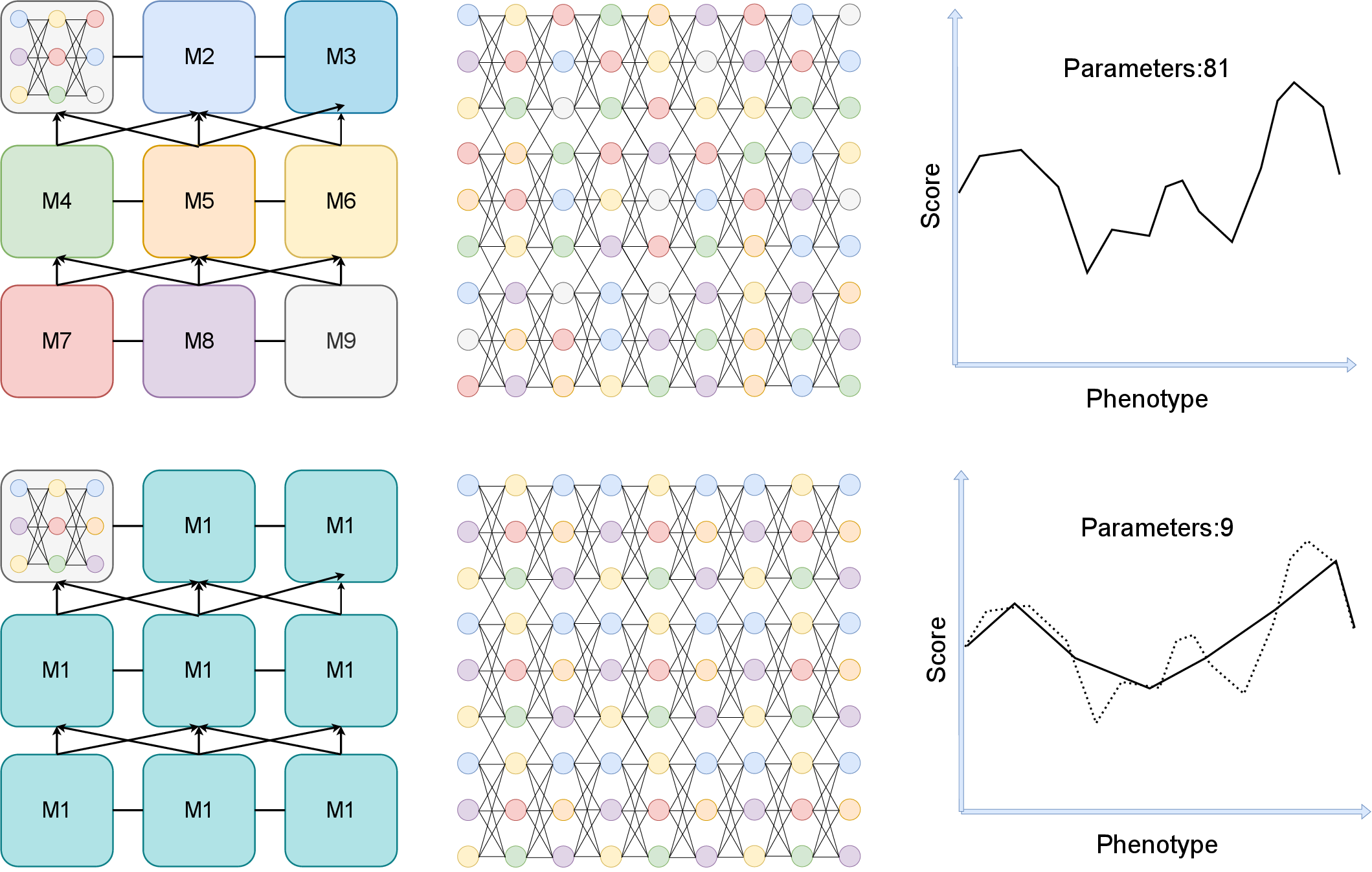

Figure 1: Key theoretical and empirical insights on the generalist module, exhibiting distributed architectures and potential for robust multi-task generalization.

Collective Intelligence

The paper argues that the minicolumn hypothesis aligns with principles of collective intelligence, in which distributed systems exhibit emergent intelligent behavior. Swarm intelligence, in which simple, homogeneous units interact only locally, offers adaptability, robustness, parallel execution, and scalability. Viewing cortical modules as such a swarm suggests that AI systems built from many identical, locally interacting units could inherit these same properties.
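A minimal sketch of the swarm idea, assuming the simplest possible local rule (neighbour averaging on a ring, a standard consensus example rather than anything taken from the paper): every unit runs the same rule and sees only its two neighbours, yet the whole group converges to the global mean. Removing a unit and closing the ring still yields consensus, a small-scale picture of robustness. The function names are hypothetical.

```python
def local_step(values):
    """One synchronous update: each unit applies the same rule, replacing its value
    with the mean of itself and its two ring neighbours."""
    n = len(values)
    if n < 3:
        raise ValueError("the ring rule assumes at least 3 units")
    return [(values[i - 1] + values[i] + values[(i + 1) % n]) / 3 for i in range(n)]

def run_swarm(values, steps=200):
    """Repeat the local rule; no unit ever sees the global state."""
    for _ in range(steps):
        values = local_step(values)
    return values

initial = [0.0, 4.0, 8.0, 2.0, 6.0]
print([round(v, 3) for v in run_swarm(initial)])      # [4.0, 4.0, 4.0, 4.0, 4.0]
print([round(v, 3) for v in run_swarm(initial[:4])])  # one unit removed: [3.5, 3.5, 3.5, 3.5]
```

Because each unit gives equal weight to itself and its neighbours, the sum is preserved at every step, so the emergent consensus is exactly the global mean even though no unit computes it.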

Advantages and Challenges of Module Repetition

Parameter Reduction

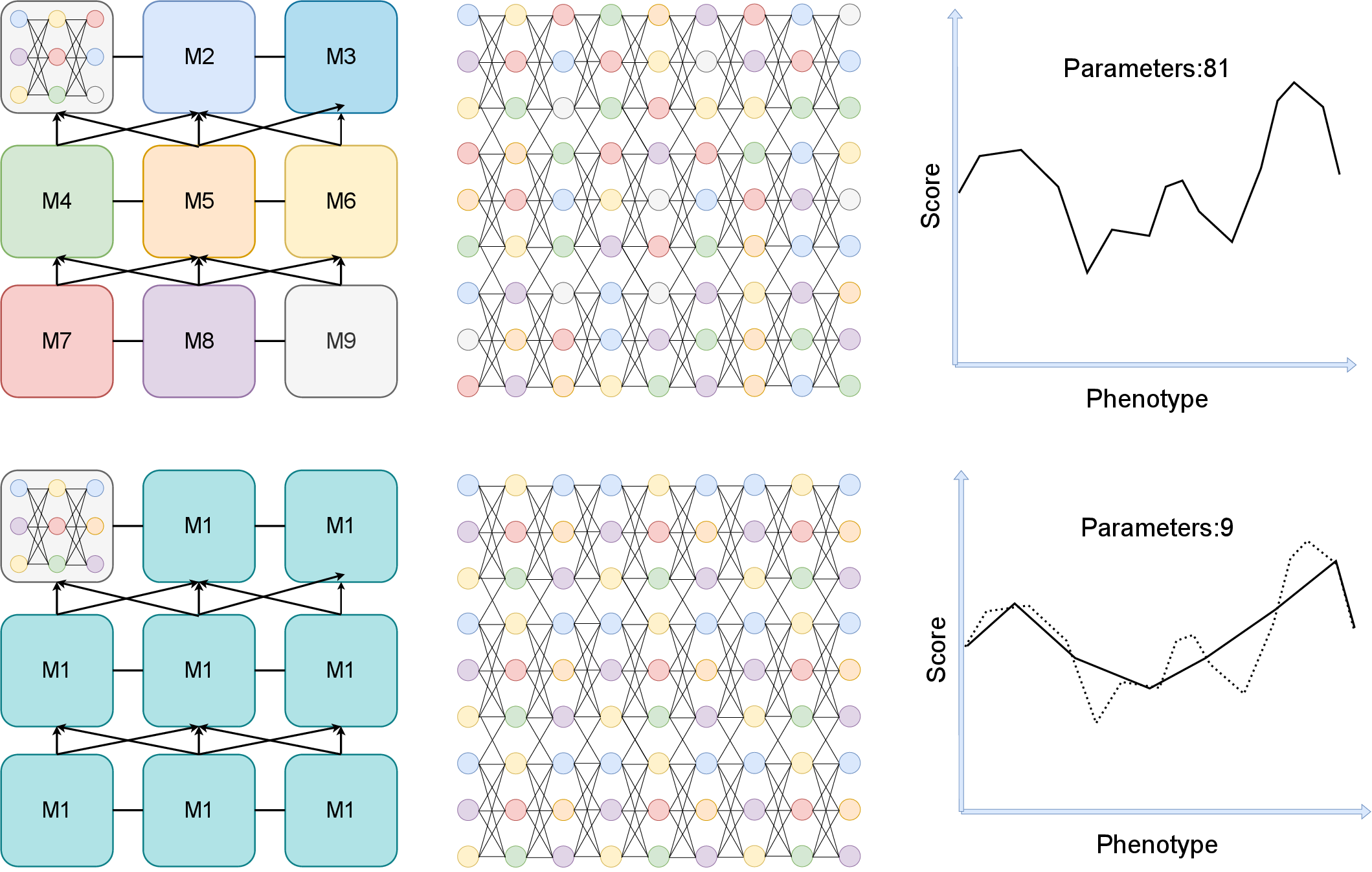

Repeating one module, so that every copy shares the same weights, reduces the number of free parameters in a network and therefore the dimensionality of the space the optimizer must search. This reduction supports scalability: the network can grow by adding copies without adding parameters, yielding simpler designs that keep their functional capabilities. Such architectures could challenge conventional AI architectures, which often demand extensive computational resources.

Figure 2: Module repetition reduces the dimensionality of the search space, leading to improved scalability and efficiency in network design.
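The parameter saving can be checked with simple arithmetic. The sketch below assumes each module is a small fully connected network (the widths are hypothetical) and compares n independent copies with n copies that share one set of weights.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out

def module_params(widths):
    """Parameters of one small MLP module with the given layer widths, e.g. [16, 32, 16]."""
    return sum(dense_params(a, b) for a, b in zip(widths, widths[1:]))

def network_params(n_modules, widths, shared):
    """Total parameters for n_modules copies: shared weights are counted once."""
    per_module = module_params(widths)
    return per_module if shared else n_modules * per_module

widths = [16, 32, 16]  # hypothetical module size
for n in (8, 100):
    print(n, network_params(n, widths, shared=False), network_params(n, widths, shared=True))
# 8 8576 1072
# 100 107200 1072
```

With sharing, the count stays fixed as modules are added. Note that the forward pass still evaluates the module once per copy, so the saving shown here is in parameters (and the optimizer's search space), not necessarily in per-input compute.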

Generalization Capabilities

Repeated modules, because of their shared parameters, have shown improved generalization across tasks and environments in the reviewed studies. This property is pivotal for AI systems that must perform well in novel situations without retraining, which is crucial for deployment in dynamic and unpredictable environments.
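One way shared parameters support generalization can be sketched with weight tying, the mechanism behind 1-D convolution (chosen here as a familiar example, not as the paper's method): the same tiny module is applied at every position, so a pattern it detects at one location is detected anywhere, and the network handles longer inputs without new weights. The detector weights below are hand-set and hypothetical.

```python
def module(window, weights, bias):
    """One copy of the repeated module: a tiny linear unit followed by ReLU."""
    return max(0.0, sum(w * x for w, x in zip(weights, window)) + bias)

def apply_repeated(signal, weights, bias):
    """Slide the SAME module over every position (weight tying, as in a 1-D convolution)."""
    k = len(weights)
    return [module(signal[i:i + k], weights, bias) for i in range(len(signal) - k + 1)]

# Hypothetical hand-set detector for the pattern [1, -1, 1].
W, B = [1.0, -1.0, 1.0], -2.0

print(apply_repeated([0, 1, -1, 1, 0], W, B))
# [0.0, 1.0, 0.0]
print(apply_repeated([0, 0, 0, 0, 0, 0, 1, -1, 1, 0], W, B))
# [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0]
```

The second input is twice as long and places the pattern elsewhere, yet the same four parameters detect it without retraining.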

Architectural Constraints

Module repetition also imposes architectural constraints that limit how modules can be integrated. Because every copy has the same fixed inputs and outputs, a network built from identical modules needs additional mechanisms to distribute a larger input across the modules and to synthesize their outputs into a single result; current implementations often rely on non-modular integration methods for this step.
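The integration problem can be sketched as follows, assuming a fixed-size module and element-wise mean pooling as the (non-modular) combining step. Mean pooling is one common, order-independent choice; it is not necessarily what the reviewed implementations use. The module body and all names are hypothetical placeholders.

```python
def split_input(x, chunk):
    """Distribute a long input across identical modules, one fixed-size chunk each.
    Simplifying assumption: len(x) is a multiple of chunk."""
    if len(x) % chunk:
        raise ValueError("input length must be a multiple of the chunk size")
    return [x[i:i + chunk] for i in range(0, len(x), chunk)]

def shared_module(chunk, scale=0.5):
    """Placeholder module, identical for every copy: maps a chunk to a 2-d output."""
    return [scale * sum(chunk), scale * max(chunk)]

def mean_pool(outputs):
    """Non-modular integration step: average the module outputs element-wise."""
    n = len(outputs)
    return [sum(col) / n for col in zip(*outputs)]

def network(x, chunk=2):
    return mean_pool([shared_module(c) for c in split_input(x, chunk)])

print(network([1.0, 3.0, 2.0, 2.0]))            # two modules   -> [2.0, 1.25]
print(network([1.0, 3.0, 2.0, 2.0, 0.0, 4.0]))  # three modules -> [2.0, 1.5]
```

The pooling layer lets the network accept any number of module outputs while returning a fixed-size result, but it is exactly the kind of extra, non-repeated component the constraint above refers to.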

Experimental Observations and Implementations

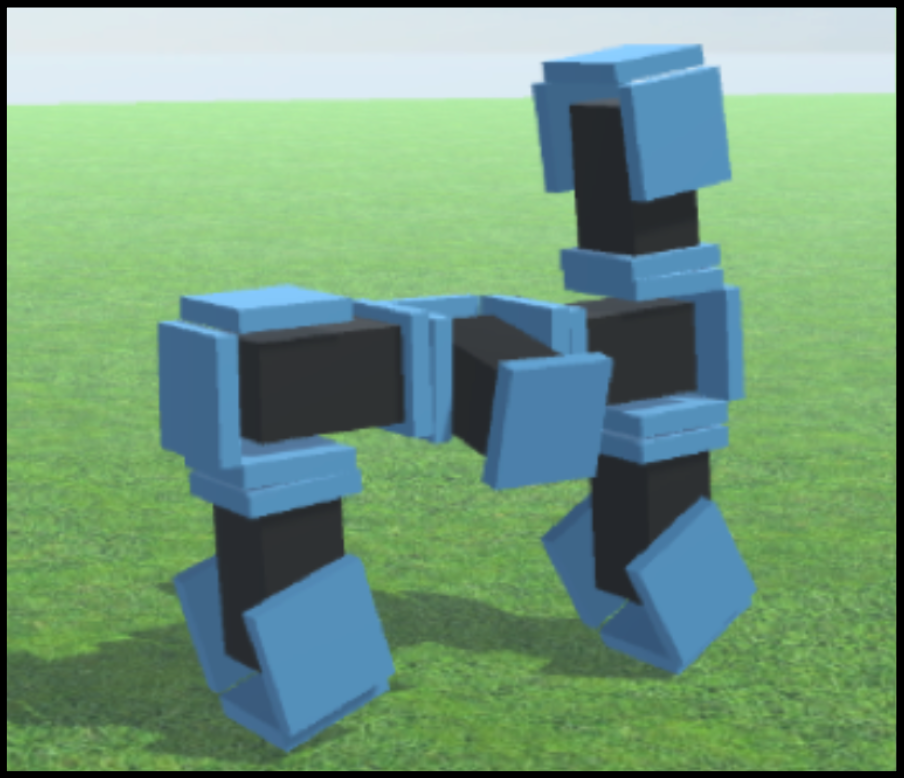

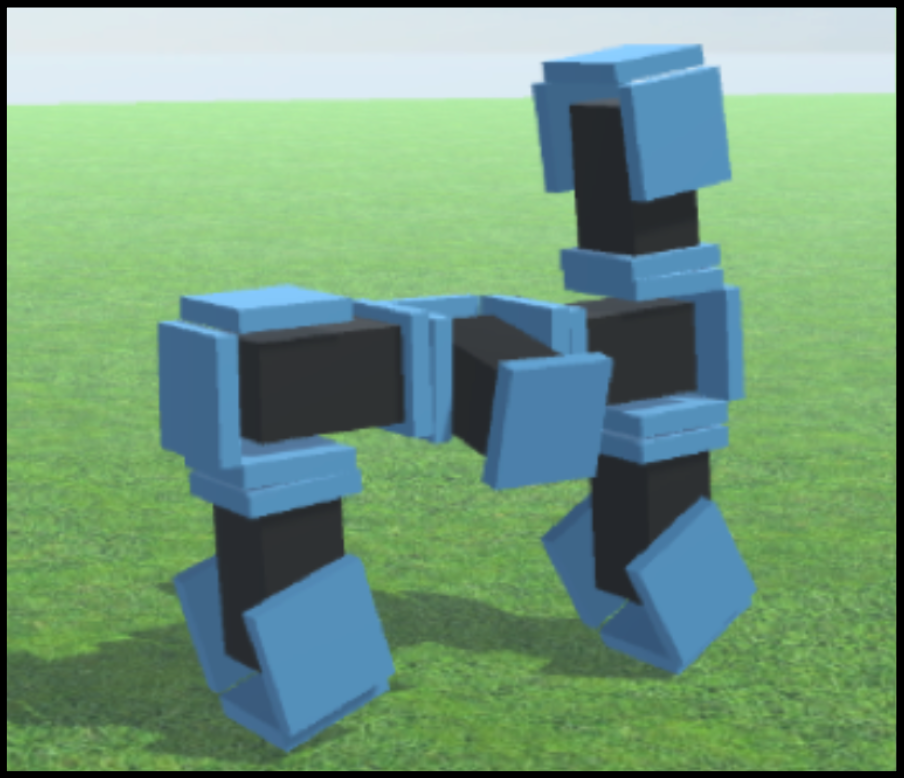

The paper reviews various implementations of neural module repetition that report improvements in energy efficiency, scalability, and adaptability. Examples include distributed control in robotics, where repeated modules show robustness and zero-shot adaptability in heterogeneous environments. These studies point to promising applications in domains that demand resilience and adaptability to changing environments.

Figure 3: Modular robot example, illustrating repeated physical modules that support robust navigation in 3D environments.
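Distributed control with repeated modules can be sketched using a chain of identical phase oscillators, a central-pattern-generator-style scheme swapped in here for illustration rather than taken from the reviewed studies. Each module runs the same controller, sees only its own phase and its upstream neighbour's, and the chain settles into a travelling wave with a fixed phase lag. The same parameters work unchanged for robots with 4 or 8 modules, a toy analogue of zero-shot transfer. All names and parameter values (`omega`, `k`, `lag`) are hypothetical.

```python
import math

def controller(own_phase, upstream_phase, omega=1.0, k=2.0, lag=0.5):
    """Identical controller run by every module. It uses only local information:
    its own phase and its upstream neighbour's phase (None for the head module)."""
    if upstream_phase is None:
        return omega
    return omega + k * math.sin(upstream_phase - own_phase - lag)

def simulate(n_modules, steps=2000, dt=0.05):
    """Euler integration; all modules update synchronously from the same snapshot."""
    phases = [0.0] * n_modules
    for _ in range(steps):
        rates = [controller(phases[i], phases[i - 1] if i > 0 else None)
                 for i in range(n_modules)]
        phases = [p + dt * r for p, r in zip(phases, rates)]
    return phases

for n in (4, 8):
    ph = simulate(n)
    gaps = [ph[i - 1] - ph[i] for i in range(1, n)]
    print(n, [round(g, 3) for g in gaps])  # every gap settles at lag = 0.5
```

In a robot, each module would map its phase to a joint command (for example, a hypothetical `amplitude * math.sin(phase)`); adding or removing modules requires no change to the shared controller.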

Implications and Future Directions

The findings highlight a compelling approach to AI system design, suggesting that neural module repetition inspired by the minicolumn hypothesis and collective intelligence principles offers notable advantages in efficiency, scalability, and adaptability. Future research should focus on further exploring these advantages, deepening theoretical understanding, and expanding empirical evaluations of these architectures.

The paper underscores the significance of examining biological and theoretical foundations to inform AI development. Such interdisciplinary research could propel advancements in AI architecture design, addressing pervasive challenges in resource demands and adaptability, while fostering collaboration across AI, neuroscience, and cognitive science domains.

Conclusion

By synthesizing insights from neuroscience, AI, and cognitive science, "The Generalist Brain Module: Module Repetition in Neural Networks in Light of the Minicolumn Hypothesis" emphasizes the potential of neural module repetition. This approach paves the way for developing more efficient, robust, and adaptable AI systems that mirror biological intelligence. As AI continues to evolve, embracing these principles may lead to transformative advancements in AI system design and functionality.