- The paper introduces PhysiX, a general-purpose foundation model that uses a universal tokenizer and an autoregressive transformer for multi-domain physics simulations.

- It achieves state-of-the-art results on The Well benchmark. It substantially lowers VRMSE relative to task-specific baselines and performs especially well in long-horizon prediction.

- Ablation studies show two sources of improved simulation fidelity and adaptability: initializing from natural-video priors and adding a refinement CNN.

PhysiX: A Foundation Model for Physics Simulations

This paper introduces PhysiX, a foundation model for physics simulation (2506.17774). It targets a key limitation of current ML-based surrogates, which are typically task-specific and struggle with long-range prediction. PhysiX is a 4.5B-parameter autoregressive generative model paired with a discrete tokenizer and a refinement module, and it achieves state-of-the-art performance on The Well benchmark. The model is pretrained jointly on a diverse collection of physics simulation datasets. It is initialized from pretrained checkpoints of high-capacity video generation models, so it inherits spatiotemporal priors learned from natural videos.

Model Architecture and Training

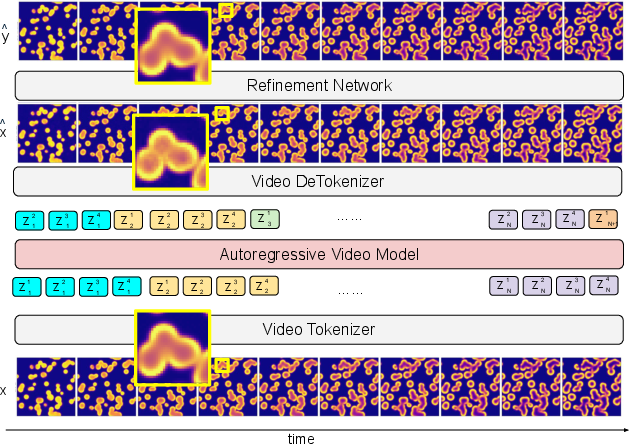

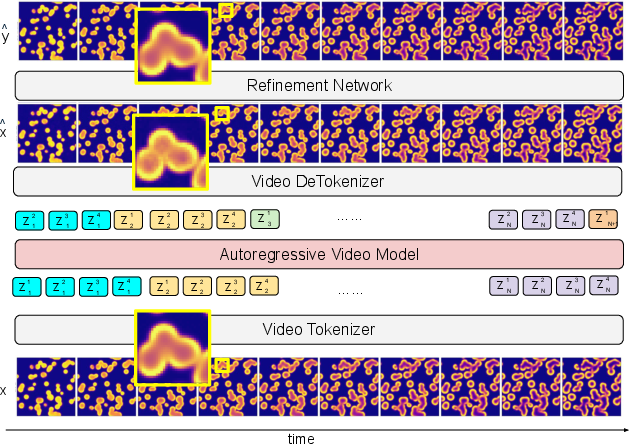

PhysiX comprises three key components: a universal discrete tokenizer, a large-scale autoregressive transformer, and a refinement module (Figure 1).

Figure 1: The overall design of PhysiX, showcasing the video tokenizer, autoregressive model, and refinement network for physics simulation.
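
The following minimal Python sketch shows how the three components might compose during an autoregressive rollout. The function `rollout` and the toy `encode`, `predict_next`, `decode`, and `refine` callables are hypothetical stand-ins, not PhysiX's API. The real transformer emits a frame's tokens one at a time; this sketch collapses that into a single `predict_next` call per frame. It also assumes, for illustration only, that decoding and refinement run after each predicted frame.

```python
from typing import Callable, List, Sequence

Frame = List[float]   # toy: a flattened physical field at one time step
Tokens = List[int]    # toy: discrete token ids for one frame


def rollout(
    context: Sequence[Frame],
    horizon: int,
    encode: Callable[[Frame], Tokens],
    predict_next: Callable[[List[Tokens]], Tokens],
    decode: Callable[[Tokens], Frame],
    refine: Callable[[Frame], Frame],
) -> List[Frame]:
    """Hypothetical tokenizer -> AR transformer -> decoder -> refinement loop."""
    history = [encode(f) for f in context]  # tokenize the observed frames
    predictions: List[Frame] = []
    for _ in range(horizon):
        next_tokens = predict_next(history)  # condition on all tokens so far
        history.append(next_tokens)          # feed the prediction back in
        predictions.append(refine(decode(next_tokens)))  # back to pixel space
    return predictions


# Toy components: 0.1-step quantization, a constant-drift "model", identity refinement.
def toy_encode(frame: Frame) -> Tokens:
    return [round(v * 10) for v in frame]

def toy_decode(tokens: Tokens) -> Frame:
    return [t / 10 for t in tokens]

def toy_predict_next(history: List[Tokens]) -> Tokens:
    return [t + 1 for t in history[-1]]

def toy_refine(frame: Frame) -> Frame:
    return frame

print(rollout([[0.0, 0.5]], 3, toy_encode, toy_predict_next, toy_decode, toy_refine))
# [[0.1, 0.6], [0.2, 0.7], [0.3, 0.8]]
```

Each prediction is appended to the token history before the next step. This feedback is what makes long-horizon forecasting autoregressive: errors and corrections both propagate forward.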

The universal discrete tokenizer, adapted from the Cosmos framework, encodes continuous spatiotemporal fields at different scales into discrete tokens. This allows the model to capture structural and dynamical patterns shared across physical domains. The tokenizer uses an encoder-decoder architecture with temporally causal convolution and attention layers, followed by Finite Scalar Quantization (FSQ) [mentzerfinite]. A single encoder is shared across all datasets to enforce a common embedding space, while each dataset gets its own decoder to improve reconstruction quality.
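
FSQ replaces a learned codebook with per-channel rounding. Each latent channel is squashed into a bounded range and rounded to one of a fixed number of levels. The tuple of level counts defines an implicit codebook whose size is the product of those counts. The sketch below is a simplified version of this idea under several assumptions:

- The level tuple `(7, 5, 5, 5)` is an arbitrary example, not the configuration used by PhysiX or Cosmos.
- Only odd level counts are handled.
- The straight-through gradient estimator used during training is omitted.

```python
import math
from typing import List, Sequence


def fsq_quantize(z: Sequence[float], levels: Sequence[int]) -> List[int]:
    """Round each latent channel to one of `levels[i]` integer values.

    Simplified: assumes every level count is odd, so the grid is symmetric
    around zero, e.g. 5 levels -> {-2, -1, 0, 1, 2}.
    """
    codes = []
    for zi, n in zip(z, levels):
        if n % 2 == 0:
            raise ValueError("this sketch only supports odd level counts")
        half = n // 2
        codes.append(round(half * math.tanh(zi)))  # bound to (-half, half), then round
    return codes


def codes_to_index(codes: Sequence[int], levels: Sequence[int]) -> int:
    """Map per-channel codes to a single token id (mixed-radix encoding)."""
    index, base = 0, 1
    for c, n in zip(codes, levels):
        index += (c + n // 2) * base  # shift code into [0, n - 1]
        base *= n
    return index


def index_to_codes(index: int, levels: Sequence[int]) -> List[int]:
    """Inverse of codes_to_index."""
    codes = []
    for n in levels:
        codes.append(index % n - n // 2)
        index //= n
    return codes


levels = (7, 5, 5, 5)  # implicit codebook of 7 * 5 * 5 * 5 = 875 tokens
codes = fsq_quantize([0.3, -2.0, 5.0, 0.0], levels)
token = codes_to_index(codes, levels)
print(codes, token)    # [1, -2, 2, 0] 494
```

Because the codebook is implicit, every code is reachable by construction. This is part of FSQ's appeal over learned vector-quantization codebooks.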

The autoregressive model is a decoder-only transformer trained with a next-token prediction objective over the discrete tokens. It uses 3D rotary position embeddings (RoPE) to encode spatiotemporal relationships between tokens. It is initialized from a 4.5B-parameter Cosmos checkpoint to leverage spatiotemporal priors learned from large-scale natural video datasets.
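
One common way to extend RoPE to video tokens is to split each attention head's channels into three groups. Ordinary 1D rotary embeddings are then applied to each group, using the token's time, height, and width index respectively. This is a plausible reading of "3D RoPE" here, not a confirmed description of Cosmos. The split `(4, 4, 4)` and frequency base `10000` below are illustrative assumptions. The key property is that the query-key dot product depends only on the relative offset between two tokens along each axis.

```python
import math
from typing import List, Sequence, Tuple


def rope_1d(x: Sequence[float], pos: float, base: float = 10000.0) -> List[float]:
    """Rotate consecutive channel pairs by angles proportional to `pos`."""
    d = len(x)
    if d % 2:
        raise ValueError("rotary dimension must be even")
    out: List[float] = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)  # earlier pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        out += [x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c]
    return out


def rope_3d(x: Sequence[float], t: int, h: int, w: int,
            dims: Tuple[int, int, int] = (4, 4, 4)) -> List[float]:
    """Apply 1D RoPE on separate channel groups for the t, h, and w axes."""
    if sum(dims) != len(x) or any(d % 2 for d in dims):
        raise ValueError("dims must be even and sum to len(x)")
    a, b = dims[0], dims[0] + dims[1]
    return rope_1d(x[:a], t) + rope_1d(x[a:b], h) + rope_1d(x[b:], w)


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))


q = [0.1 * i for i in range(1, 13)]      # toy 12-dim query
k = [1.0 - 0.05 * i for i in range(12)]  # toy 12-dim key
# Same (dt, dh, dw) = (2, 1, 1) offset at two different absolute positions:
s1 = dot(rope_3d(q, 3, 2, 1), rope_3d(k, 1, 1, 0))
s2 = dot(rope_3d(q, 5, 4, 2), rope_3d(k, 3, 3, 1))
print(abs(s1 - s2) < 1e-9)  # True
```

Since rotations preserve vector norms, RoPE changes only the angle between queries and keys. Attention scores therefore depend on relative spacetime offsets rather than absolute positions.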

A refinement module, implemented as a convolutional neural network, mitigates the quantization errors introduced by the tokenizer and improves output fidelity. It is trained as a post-processing step that refines the AR model's decoded output in pixel space under an MSE loss. A separate refinement module is trained for each dataset.
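
The training setup described above fits a per-dataset network with MSE to push tokenizer output back toward the ground truth. The hypothetical sketch below illustrates this with a deliberately tiny stand-in:

- Instead of PhysiX's 2D CNN, it fits a single 3-tap residual convolution on a 1D signal.
- It simulates tokenizer error by rounding a sine wave to a 0.25 grid.
- The residual form `refine(x) = x + conv(x)` with a zero-initialized kernel is our own design choice, so the refiner starts as the identity.

With a small enough step size, gradient descent on this convex least-squares problem cannot increase the MSE.

```python
import math
from typing import List, Sequence


def conv1d_same(x: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """1D cross-correlation with zero padding and 'same' output length."""
    k, n = len(kernel) // 2, len(x)
    out = []
    for i in range(n):
        acc = 0.0
        for j, wj in enumerate(kernel):
            idx = i + j - k
            if 0 <= idx < n:
                acc += wj * x[idx]
        out.append(acc)
    return out


def refine(x: Sequence[float], kernel: Sequence[float]) -> List[float]:
    """Residual refinement: output = input + learned correction."""
    return [xi + ri for xi, ri in zip(x, conv1d_same(x, kernel))]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)


def train_refiner(decoded: Sequence[float], target: Sequence[float],
                  taps: int = 3, lr: float = 0.05, steps: int = 200) -> List[float]:
    """Fit the kernel by plain gradient descent on MSE(refine(decoded), target)."""
    kernel = [0.0] * taps  # zero init: the refiner starts as the identity
    k, n = taps // 2, len(decoded)
    for _ in range(steps):
        err = [p - t for p, t in zip(refine(decoded, kernel), target)]
        # dMSE/dw_j = (2/n) * sum_i err_i * decoded[i + j - k]
        grad = [
            sum(2.0 * err[i] * decoded[i + j - k]
                for i in range(n) if 0 <= i + j - k < n) / n
            for j in range(taps)
        ]
        kernel = [w - lr * g for w, g in zip(kernel, grad)]
    return kernel


target = [math.sin(2 * math.pi * i / 32) for i in range(64)]  # toy "true" field
decoded = [round(4 * v) / 4 for v in target]                 # simulated quantization error
kernel = train_refiner(decoded, target)
print(mse(decoded, target), mse(refine(decoded, kernel), target))
```

Keeping the refiner separate from the tokenizer and AR model means it can be retrained cheaply per dataset without touching the shared components.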

Experimental Results

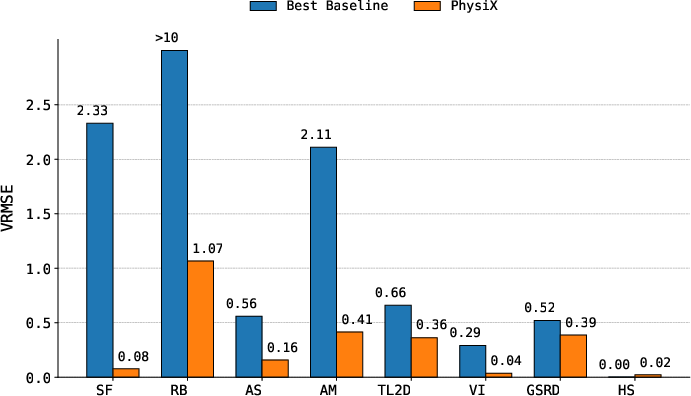

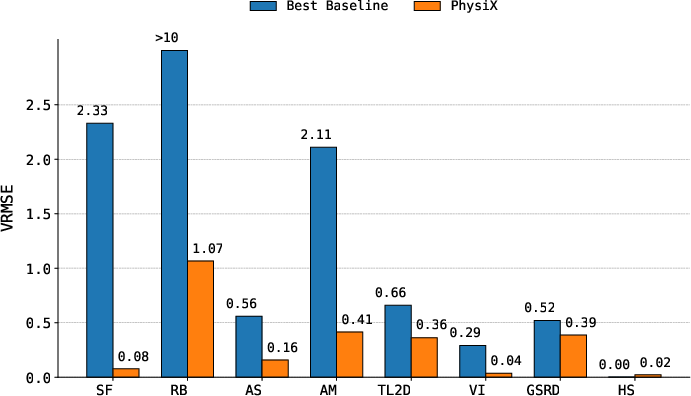

PhysiX was evaluated on eight simulation tasks from The Well benchmark, demonstrating significant improvements over task-specific baselines (Figure 2).

Figure 2: PhysiX outperforms previous single-task baselines on The Well benchmark, with lower VRMSE across a range of physics simulation tasks.

In next-frame prediction, PhysiX achieves the best average rank across the eight tasks and outperforms the baselines on five of them. It does so with a single model checkpoint shared across every task. In long-horizon prediction, PhysiX achieves state-of-the-art performance on 18 of 21 evaluation points across different forecasting windows. On shear_flow, for example, it reduces VRMSE by over 97% in the 6:12 forecasting window relative to the best-performing baseline. The authors attribute this long-term stability to the autoregressive formulation, which lets the model learn from full simulation sequences.
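
VRMSE normalizes the squared error by the spatial variance of the ground-truth field. A score of 1 therefore corresponds roughly to predicting the field's mean everywhere, and scores are comparable across fields with very different magnitudes. The sketch below follows The Well's variance-scaled RMSE, as we understand it, for a single channel of a single frame. The `eps` value is an assumption, and the benchmark additionally averages over channels and time steps within each forecasting window. The last lines show the arithmetic behind a relative reduction: cutting VRMSE by over 97% means the model's error is under 3% of the baseline's.

```python
import math
from typing import Sequence


def vrmse(pred: Sequence[float], target: Sequence[float], eps: float = 1e-7) -> float:
    """Variance-scaled RMSE: sqrt(mean((pred - target)^2) / (var(target) + eps))."""
    n = len(target)
    mean_t = sum(target) / n
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / n
    var = sum((t - mean_t) ** 2 for t in target) / n
    return math.sqrt(mse / (var + eps))


def relative_reduction(model_score: float, baseline_score: float) -> float:
    """Fraction by which model_score improves on baseline_score."""
    return 1.0 - model_score / baseline_score


target = [1.0, 2.0, 3.0, 4.0]
print(vrmse(target, target))           # 0.0 (perfect prediction)
print(vrmse([2.5] * 4, target))        # ~1.0 (predicting the mean)
# Hypothetical scores, not numbers from the paper:
print(relative_reduction(0.02, 0.80))  # ~0.975, i.e. a 97.5% reduction
```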

Ablation Studies

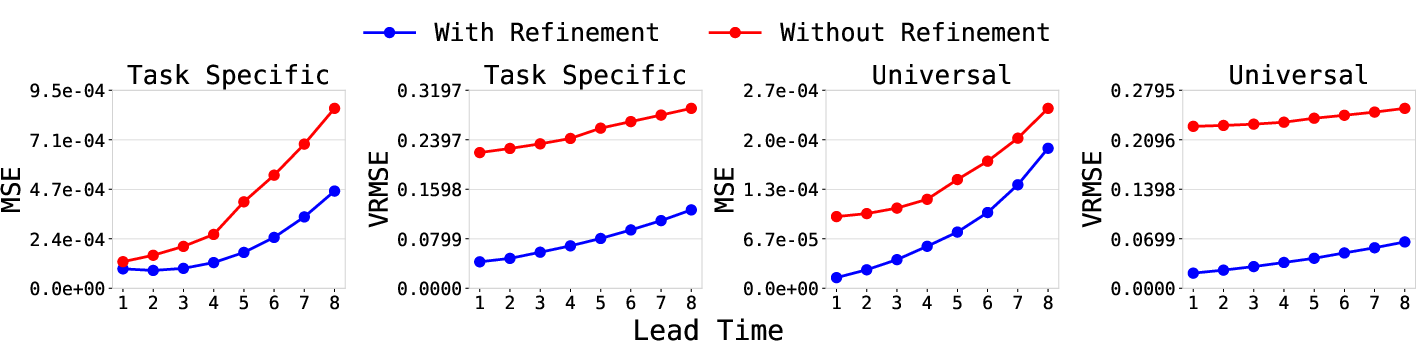

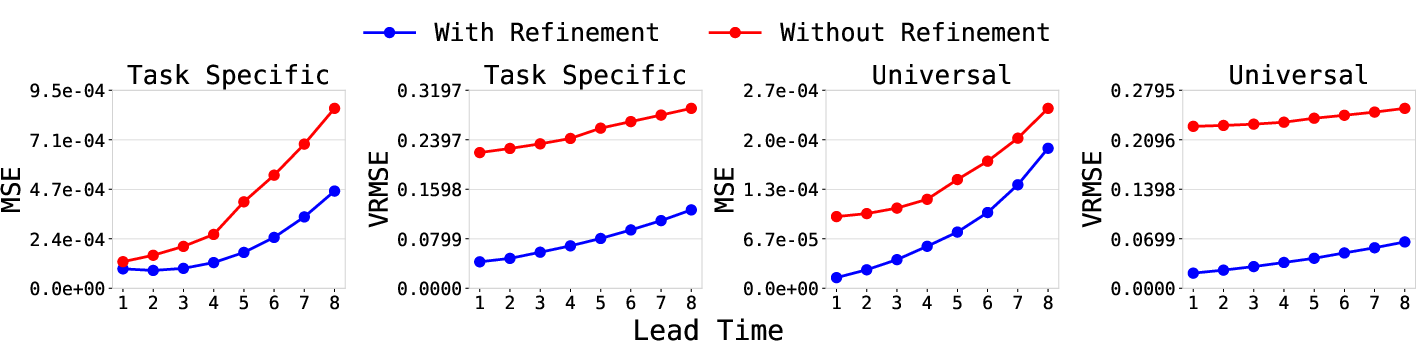

Ablation studies comparing multi-task and single-task models show that the universal model outperforms task-specific models on most datasets. Further experiments confirm that the refinement module reduces both MSE and VRMSE. They also show that initializing from weights pretrained on natural videos significantly accelerates convergence and improves final performance.

Figure 3: The refinement module reduces VRMSE and MSE across different prediction windows, highlighting its effectiveness in improving prediction accuracy.

Adaptation to Unseen Simulations

PhysiX's adaptability was evaluated on two unseen simulations, euler_multi_quadrants and acoustic_scattering, which involve new input channels and physical dynamics. The tokenizer was fully finetuned for each task, and two variants of the autoregressive model were compared. PhysiXf is finetuned from the pretrained PhysiX model. PhysiXs is trained on the new task alone, starting from the Cosmos checkpoint rather than from PhysiX's multi-task weights. PhysiXf consistently outperformed PhysiXs across all settings, indicating that knowledge from multi-task pretraining transfers effectively to previously unseen simulations.

Qualitative Analysis

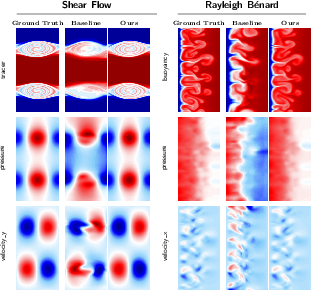

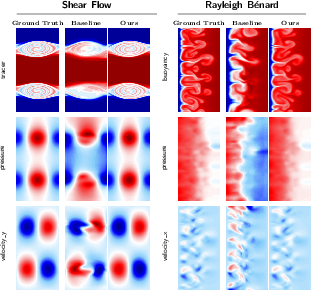

Qualitative comparisons between PhysiX and baseline models on the shear_flow and rayleigh_benard datasets (Figure 4) show that PhysiX's predictions remain visually consistent with the ground truth. The baselines, by contrast, exhibit noticeable distortions and lose fine-grained structure.

Figure 4: Qualitative comparison of PhysiX and baseline models, showing that PhysiX maintains high-fidelity predictions over longer time horizons.

Conclusion

PhysiX is a unified foundation model for general-purpose physical simulation, built from a universal tokenizer, a large-scale autoregressive transformer, and a refinement network. Joint training lets PhysiX capture shared spatiotemporal patterns and adapt to varying resolutions, channel configurations, and physical semantics. The model significantly outperforms task-specific baselines across a wide variety of physical domains. This illustrates the potential of foundation models to accelerate scientific discovery by providing generalizable tools for modeling complex physical phenomena. Future work could explore more flexible tokenization architectures that can compress data with more spatial dimensions. It could also incorporate data from outside The Well benchmark to improve generalization.