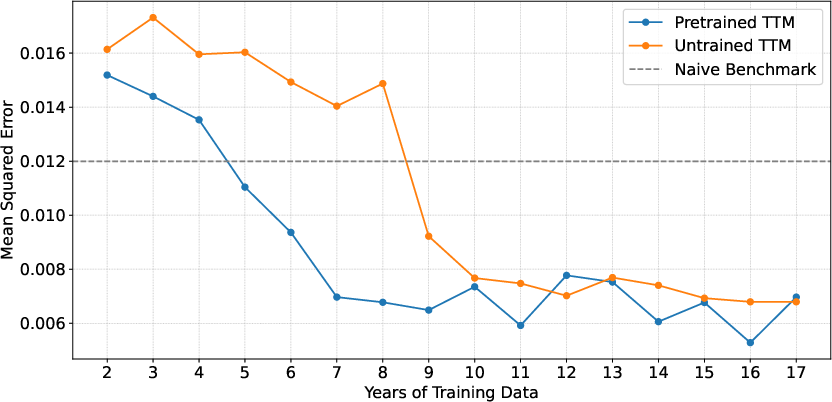

- The paper demonstrates that a pretrained Tiny Time Mixers (TTM) model achieves 25–50% better performance than an untrained model when fine-tuned on limited financial data.

- The study finds that pretrained TTM offers strong transferability and sample efficiency, requiring 3–10 fewer years of training data than an untrained model to reach comparable performance.

- The research finds that although TSFMs show promise in noisy, data-constrained financial tasks, traditional specialised models matched or exceeded TTM in two of the three tasks, pointing to a need for domain-specific pretraining and architectural refinement.

Time Series Foundation Models for Multivariate Financial Time Series Forecasting

This paper investigates the application of Time Series Foundation Models (TSFMs) to financial time series forecasting, focusing on scenarios with limited data availability. It evaluates two TSFMs—Tiny Time Mixers (TTM) and Chronos—across three financial forecasting tasks: US 10-year Treasury yield changes, EUR/USD volatility, and equity spread prediction. The paper assesses the transferability and sample efficiency of these models, highlighting their potential and limitations in financial contexts.

Background and Motivation

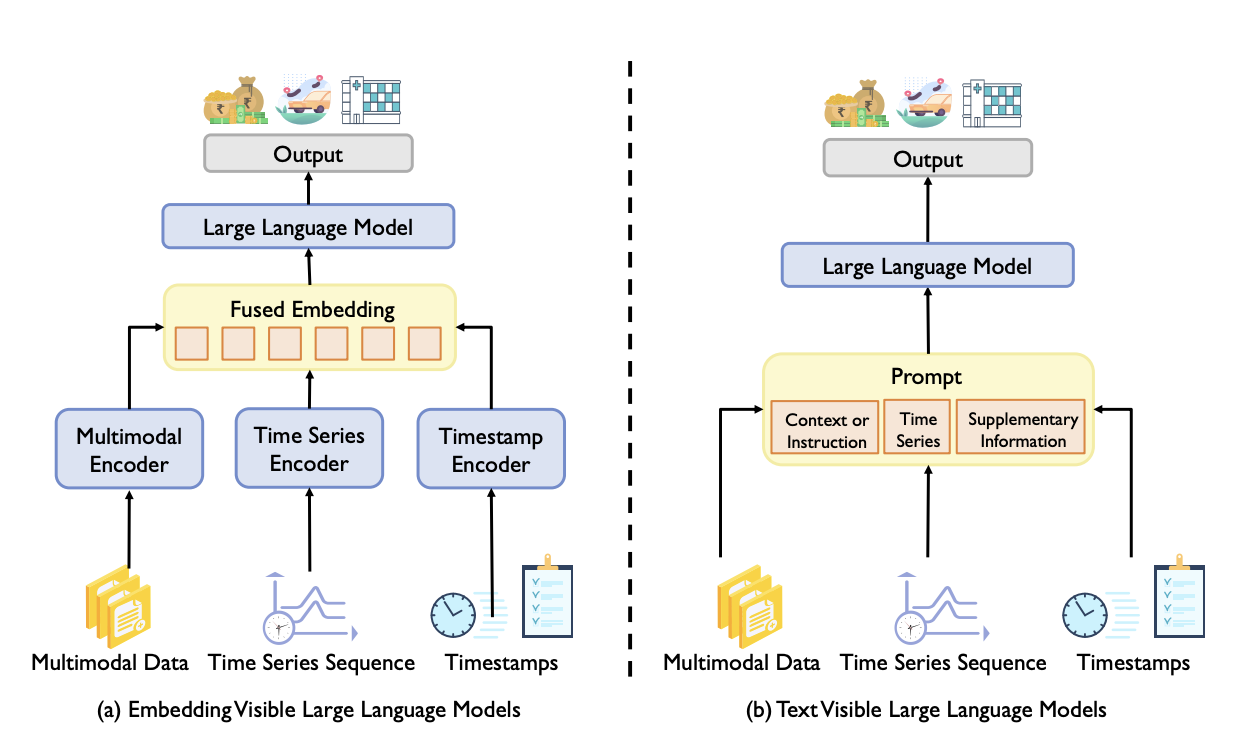

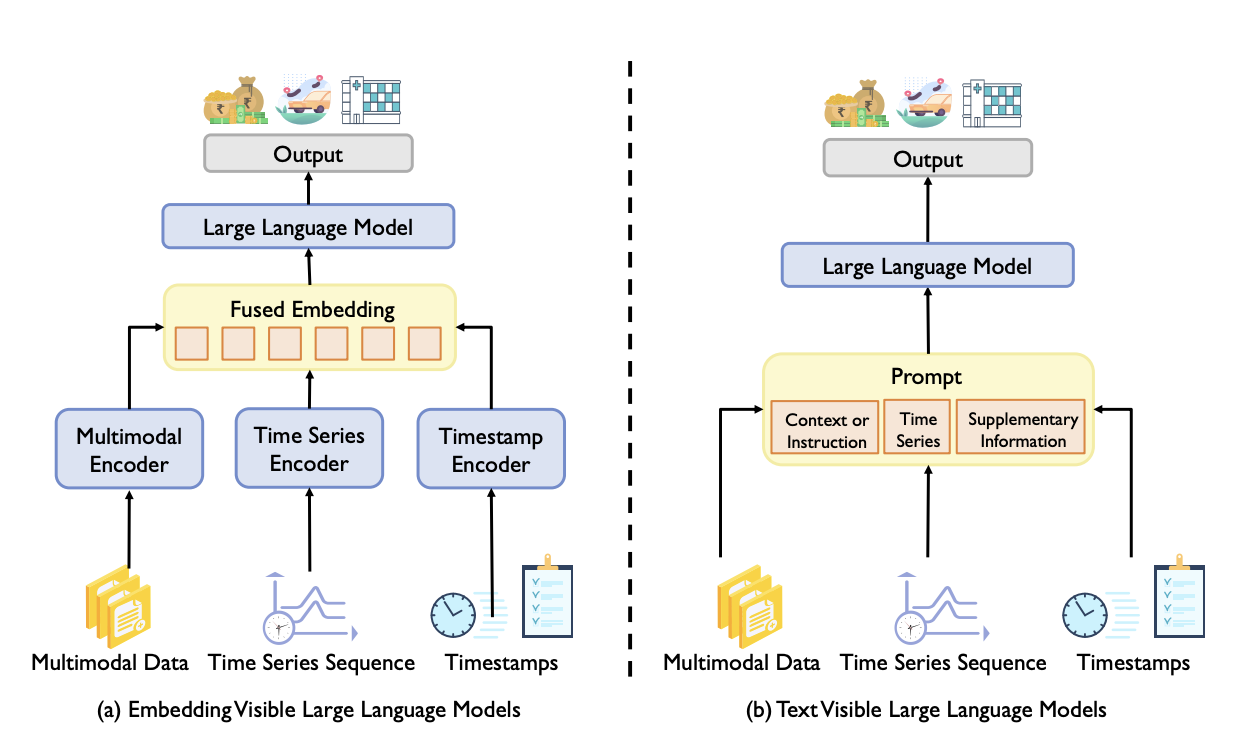

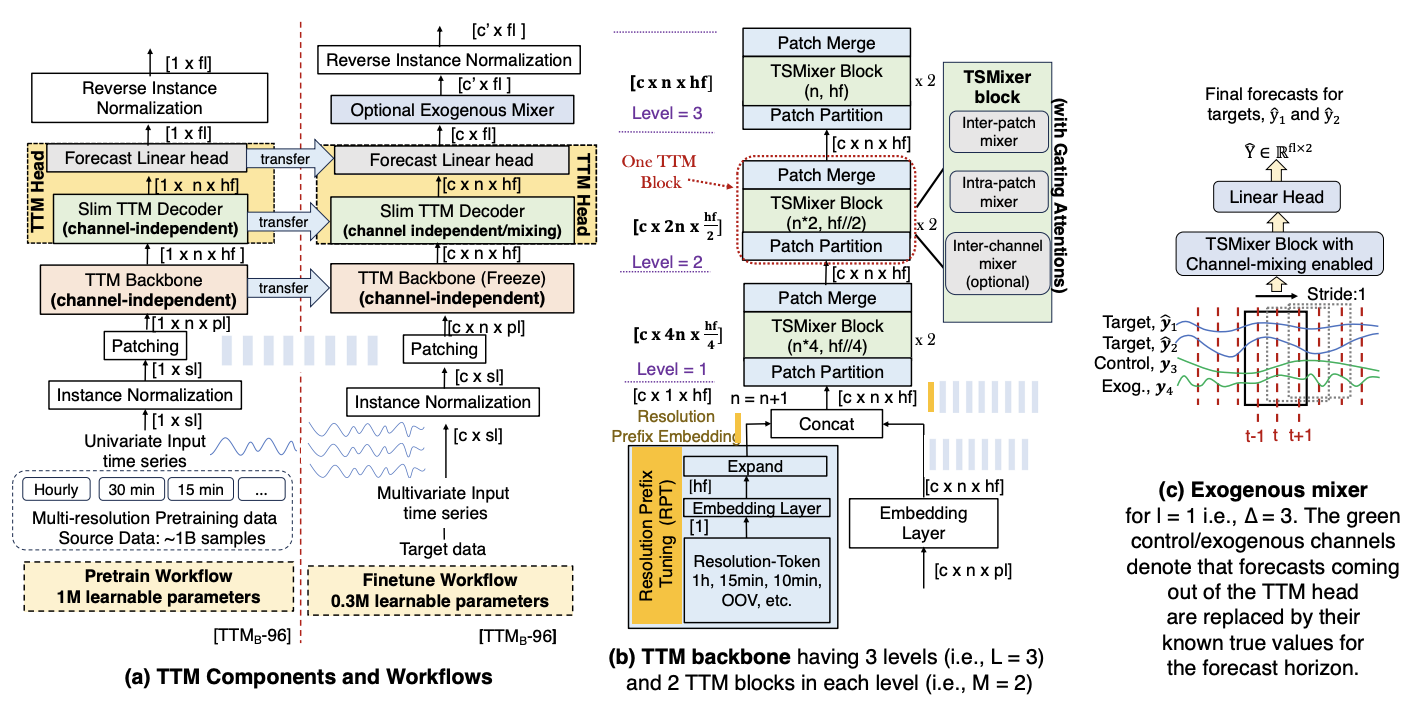

Financial time series forecasting is challenging because of nonlinear relationships, temporal dependencies, and limited data. Traditional econometric models often struggle to capture these complexities, while machine learning techniques need substantial data to train effectively. Foundation Models (FMs) offer a promising alternative: they are pretrained on diverse datasets and then adapted to specific tasks. Time Series Foundation Models (TSFMs) extend this idea to temporal data, transferring patterns learned from broad time series corpora to specific forecasting applications. The paper examines two distinct models: Tiny Time Mixers (TTM), a compact architecture designed specifically for time series, and Chronos, which tokenises time series values and then trains transformer-based LLM architectures on the resulting token sequences (Figure 1).

Figure 1: Types of LLM Adaptation to Time Series Methods, from \cite{ye2024}

Experimental Design

The paper employs three financial forecasting tasks:

- Forecasting US 10-year Treasury yield changes: This task is characterised by a low signal-to-noise ratio and aims to predict changes in US 10-year Treasury yields 21 business days ahead.

- Predicting EUR/USD realised volatility: This risk-oriented task forecasts EUR/USD realised volatility 21 business days ahead, leveraging the strong autocorrelation present in volatility series.

- Forecasting the spread between equity indices: This relative-value task uses mid-frequency co-integration signals between MSCI Australia (EWA) and MSCI Canada (EWC) equity indices to forecast mean-reverting spreads.
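The three prediction targets can be sketched as follows. This is a minimal illustration under assumptions of our own: the paper's exact target definitions (for example, whether realised volatility is annualised, or how the co-integration hedge ratio is estimated) are not given here, so the function names, the 252-day annualisation and the OLS hedge ratio are all hypothetical choices.

```python
import numpy as np

HORIZON = 21  # business days ahead, as stated for all three tasks


def forward_change(levels, horizon=HORIZON):
    """Task 1 style target: y[t + h] - y[t]. The last h entries are NaN (no future value yet)."""
    levels = np.asarray(levels, dtype=float)
    target = np.full_like(levels, np.nan)
    target[:-horizon] = levels[horizon:] - levels[:-horizon]
    return target


def forward_realised_vol(prices, horizon=HORIZON, periods_per_year=252):
    """Task 2 style target: annualised realised volatility of daily log returns over the next h days.
    The annualisation factor is an assumption."""
    prices = np.asarray(prices, dtype=float)
    log_ret = np.diff(np.log(prices))  # log_ret[i] is the return from day i to day i + 1
    target = np.full(prices.shape, np.nan)
    for t in range(len(prices) - horizon):
        window = log_ret[t:t + horizon]  # the h returns between day t and day t + h
        target[t] = np.sqrt(np.sum(window ** 2) * periods_per_year / horizon)
    return target


def cointegration_spread(a, b):
    """Task 3 style series: residual of an OLS fit of log(a) on log(b), i.e. the first step of
    an Engle-Granger style co-integration analysis. Returns (spread, hedge_ratio)."""
    la = np.log(np.asarray(a, dtype=float))
    lb = np.log(np.asarray(b, dtype=float))
    X = np.column_stack([np.ones_like(lb), lb])
    (alpha, beta), *_ = np.linalg.lstsq(X, la, rcond=None)
    return la - alpha - beta * lb, beta
```

In a real backtest the hedge ratio should be estimated on the training window only; fitting it on the full sample, as this toy function does, leaks future information into the spread.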

The models are evaluated with standard regression metrics and compared against traditional benchmarks, including linear models, tree-based methods, and sequential models such as LSTMs. Performance is assessed both zero-shot and after fine-tuning on historical data, to measure transferability and adaptation. Two evaluation frameworks structure the comparison: the transfer-gain test, which compares the pretrained TSFM with an untrained version of the same model fine-tuned on the same data, and the sample-efficiency probe, which tracks how performance changes as the fine-tuning dataset grows.
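As a rough sketch, assuming the transfer-gain test reports the relative reduction in mean squared error (MSE) of the pretrained model over an untrained model fine-tuned on the same data, the core quantities could be computed as below. The helper names and the zero-change naive forecast are illustrative assumptions, not the paper's code.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error, the metric plotted in the fine-tuning figures."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))


def transfer_gain(mse_pretrained, mse_untrained):
    """Assumed definition: relative MSE reduction from pretraining (0.25 means 25% lower error)."""
    return 1.0 - mse_pretrained / mse_untrained


def skill_vs_naive(y_true, y_model, y_naive):
    """Positive when the model's MSE is below the naive forecast's MSE; 1.0 is a perfect forecast."""
    return 1.0 - mse(y_true, y_model) / mse(y_true, y_naive)


def naive_zero_change(n):
    """Hypothetical naive forecast for a change target such as Task 1: predict no change."""
    return np.zeros(n)
```

A zero-shot evaluation would call `skill_vs_naive` with the model's forecasts before any fine-tuning; the transfer-gain test would call `transfer_gain` on the MSEs of the pretrained and untrained models after fine-tuning on the same data.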

Implementation Details

The paper focuses on two TSFMs:

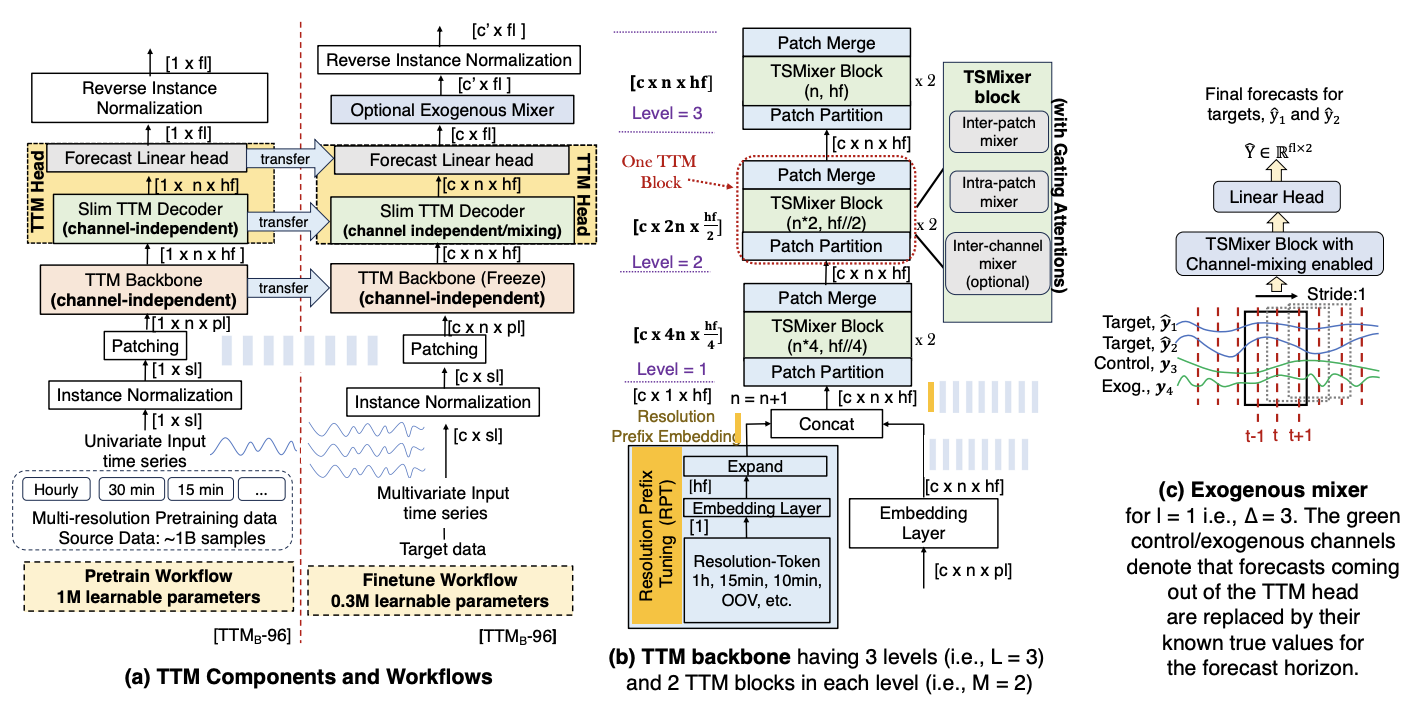

- Tiny Time Mixers (TTM): Developed by IBM, TTM is a parameter-efficient model built on TSMixer, a lightweight feedforward architecture that replaces self-attention with MLPs (Figure 2). It incorporates resolution prefix tuning, adaptive patching, and diverse resolution sampling to generalise across series with different temporal resolutions.

Figure 2: Architecture of Tiny Time Mixers (TTM), a TSFM Developed by IBM, from \cite{vijay2024}
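To make "replaces self-attention with MLPs" concrete, here is a toy, untrained NumPy sketch of patching followed by one TSMixer-style mixing block and a linear forecasting head. It is not IBM's implementation: TTM's adaptive patching, resolution prefix tuning, normalisation and multi-level backbone are all omitted, and the patch length, hidden size and random weights are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)  # random, untrained weights: this only illustrates the data flow


def patchify(x, patch_len):
    """Split a 1-D context into non-overlapping patches, dropping the oldest leftover points."""
    x = np.asarray(x, dtype=float)
    n_patches = len(x) // patch_len
    return x[len(x) - n_patches * patch_len:].reshape(n_patches, patch_len)


def mlp(z, w1, w2):
    """Two-layer MLP with a ReLU, applied along the last axis of z."""
    return np.maximum(z @ w1, 0.0) @ w2


def init_params(n_patches, patch_len, hidden=32, horizon=21):
    def pair(d):
        return (rng.normal(0.0, 0.1, (d, hidden)), rng.normal(0.0, 0.1, (hidden, d)))

    return {
        "time": pair(n_patches),     # mixes information across patches (the time axis)
        "feature": pair(patch_len),  # mixes information within each patch
        "head": rng.normal(0.0, 0.1, (n_patches * patch_len, horizon)),
    }


def mixer_block(patches, params):
    """One TSMixer-style block: a time-mixing MLP, then a feature-mixing MLP, each with a
    residual connection. No self-attention is involved."""
    z = patches + mlp(patches.T, *params["time"]).T  # MLP runs along the patch axis
    return z + mlp(z, *params["feature"])            # MLP runs along the within-patch axis


def forecast(context, patch_len=8, horizon=21):
    patches = patchify(context, patch_len)
    params = init_params(*patches.shape, horizon=horizon)
    hidden = mixer_block(patches, params)
    return hidden.reshape(-1) @ params["head"]  # linear head producing `horizon` future values
```

Because every operation is a matrix multiply along one axis, the cost grows linearly with the number of patches, which is one reason MLP-mixer designs can stay small compared with attention-based models.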

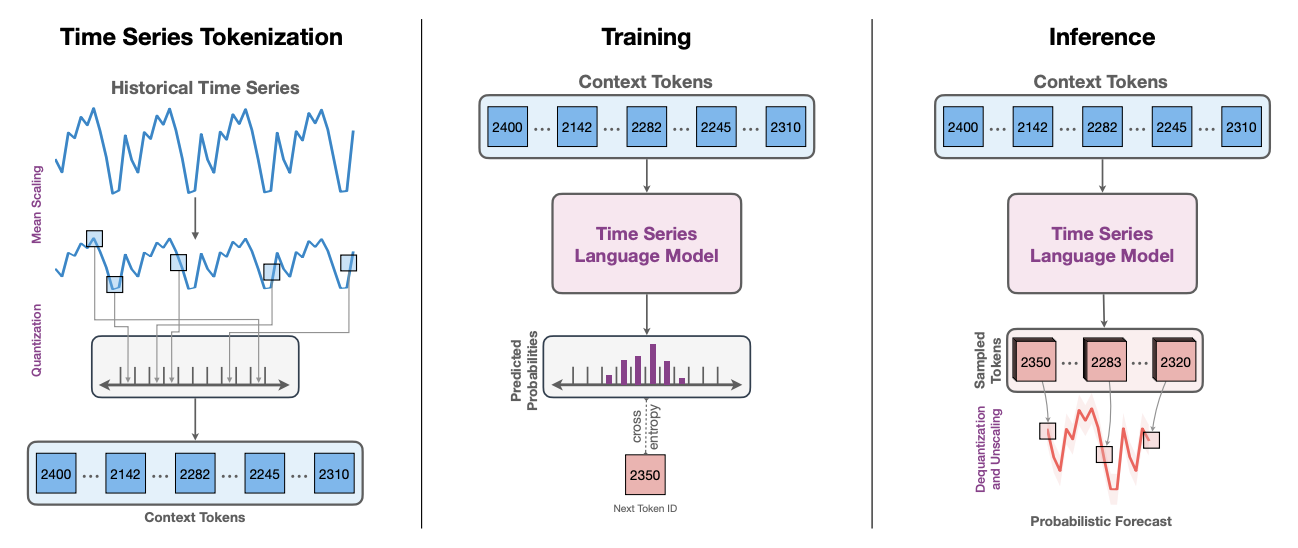

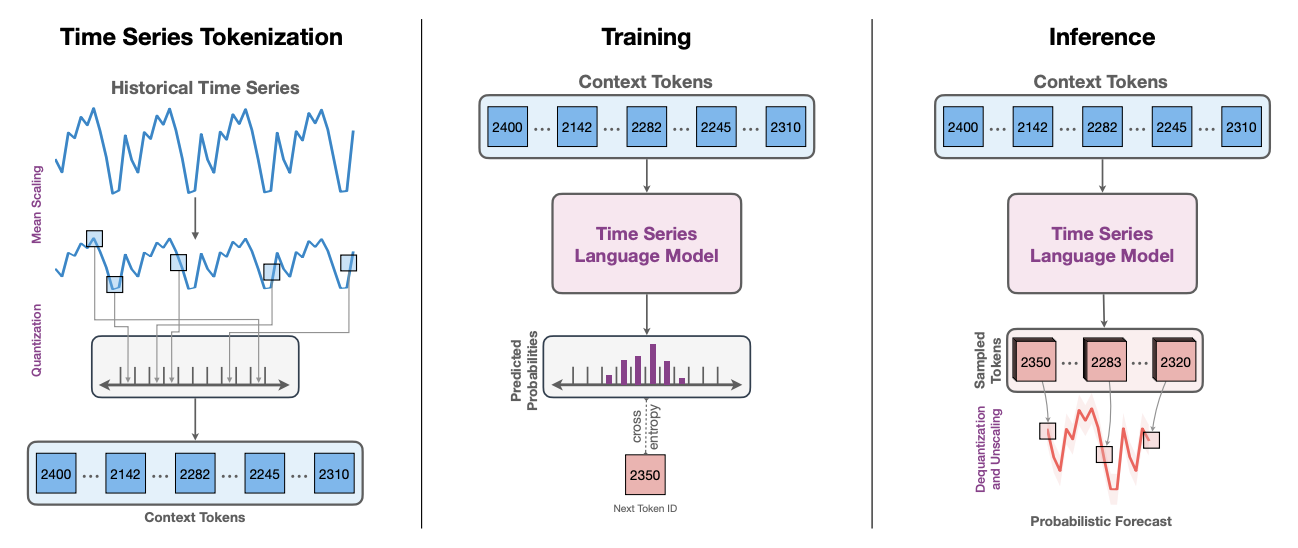

- Chronos: Introduced by Amazon as part of the Chronos framework, this model repurposes LLMs for forecasting tasks [chronos]. Chronos first discretises the input space using a fixed vocabulary of quantised bins, allowing the use of transformer-based LLMs with minimal architectural modifications (Figure 3). The model uses the encoder-decoder T5 architecture, pretrained on a large corpus of time series data and synthetic series generated using Gaussian processes.

Figure 3: Chronos Forecasting Process. From left to right: time series tokenisation via scaling and quantisation; training via language modelling on token sequences; and inference via autoregressive sampling and dequantisation. Image from \cite{chronos}.
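The scaling-and-quantisation step in Figure 3 can be sketched in a few lines. Following the description of Chronos, this assumes mean scaling (dividing by the mean absolute value of the context) and uniformly spaced bins; the bin count and the [-15, 15] range are illustrative assumptions, and Chronos's special tokens (padding, end-of-sequence) are left out. The last helper mimics the inference step by dequantising several sampled token paths and taking their median as a point forecast.

```python
import numpy as np

N_BINS = 4094            # assumed number of value bins (special tokens omitted)
LOW, HIGH = -15.0, 15.0  # assumed range of scaled values covered by the bins
CENTERS = np.linspace(LOW, HIGH, N_BINS)
EDGES = (CENTERS[1:] + CENTERS[:-1]) / 2.0  # boundaries halfway between adjacent centres


def tokenise(context):
    """Mean-scale the context, then map each scaled value to the index of its nearest bin."""
    context = np.asarray(context, dtype=float)
    scale = float(np.mean(np.abs(context)))
    if scale == 0.0:
        scale = 1.0  # avoid dividing by zero for an all-zero context
    tokens = np.digitize(context / scale, EDGES)  # ints in [0, N_BINS - 1]; out-of-range values clip
    return tokens, scale


def detokenise(tokens, scale):
    """Map token ids back to bin centres and undo the scaling."""
    return CENTERS[np.asarray(tokens)] * scale


def point_forecast(sampled_tokens, scale):
    """Dequantise sampled token paths (shape: samples x horizon) and take the per-step median."""
    return np.median(detokenise(sampled_tokens, scale), axis=0)
```

Quantisation makes the round trip lossy: for values inside the bin range, the reconstruction error is at most half a bin width times the scale, and values outside the range are clipped to the edge bins.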

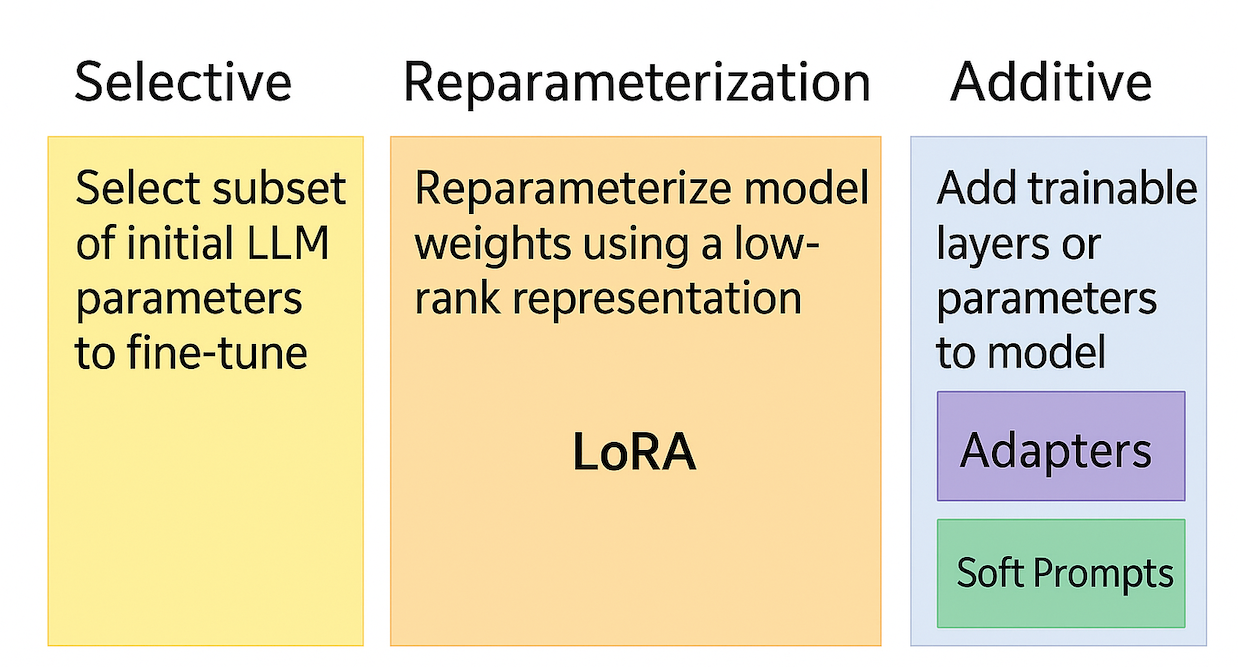

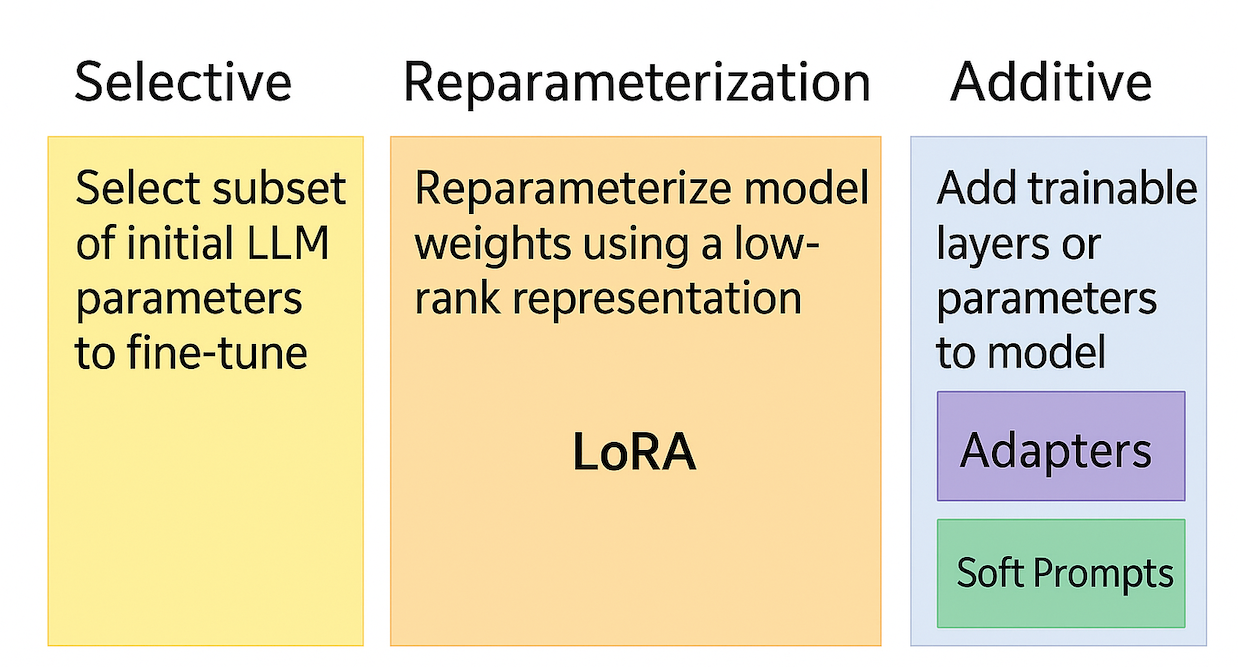

The paper also discusses fine-tuning methods, including full fine-tuning, no fine-tuning, and parameter-efficient fine-tuning (PEFT) techniques. PEFT methods adapt large pretrained models by updating only a small fraction of their parameters, reducing training costs and memory usage while preserving most of the backbone’s representations (Figure 4).

Figure 4: Types of Parameter Efficient Fine-Tuning (PEFT) Methods, from \cite{Adik2023}
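LoRA (low-rank adaptation) is one common PEFT method and serves here only as a familiar example; the text does not say which PEFT variant the paper uses. The sketch freezes a dense weight `W` and trains only a low-rank update `B @ A`, which shows why the trainable fraction stays small. The class name, layer sizes, rank and scaling are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


class LoRALinear:
    """Frozen dense layer W plus a trainable low-rank update B @ A (LoRA-style)."""

    def __init__(self, d_in, d_out, rank=4, alpha=8.0):
        self.W = rng.normal(0.0, 0.02, (d_out, d_in))  # pretrained weight: kept frozen
        self.A = rng.normal(0.0, 0.02, (rank, d_in))   # trainable
        self.B = np.zeros((d_out, rank))               # trainable; zero init so output starts as W x
        self.scaling = alpha / rank

    def __call__(self, x):
        """x has shape (batch, d_in); returns (batch, d_out)."""
        return x @ self.W.T + self.scaling * (x @ self.A.T) @ self.B.T

    def trainable_fraction(self):
        trainable = self.A.size + self.B.size
        return trainable / (trainable + self.W.size)
```

For a 512×512 layer with rank 4, the trainable parameters number 4,096 out of 266,240, roughly 1.5% of the layer, while the frozen `W` keeps the pretrained representation intact.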

Results and Analysis

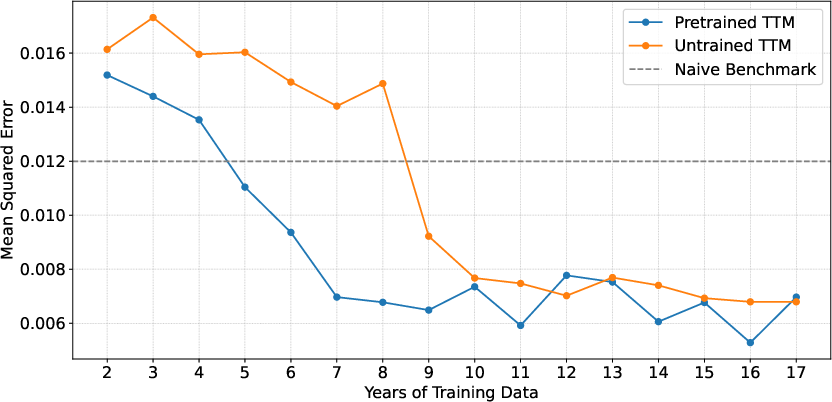

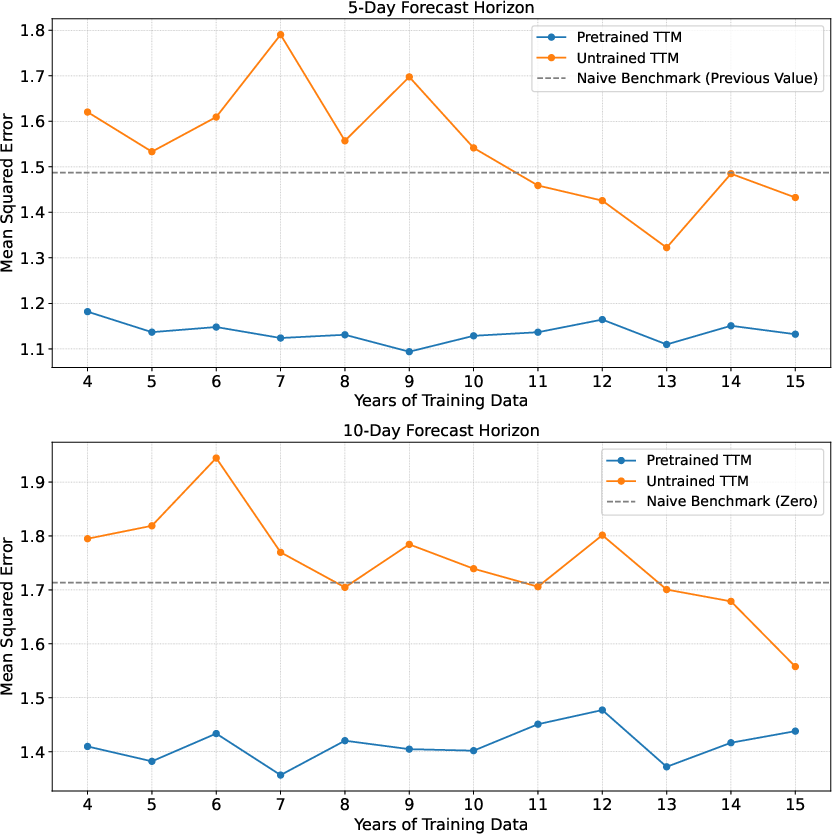

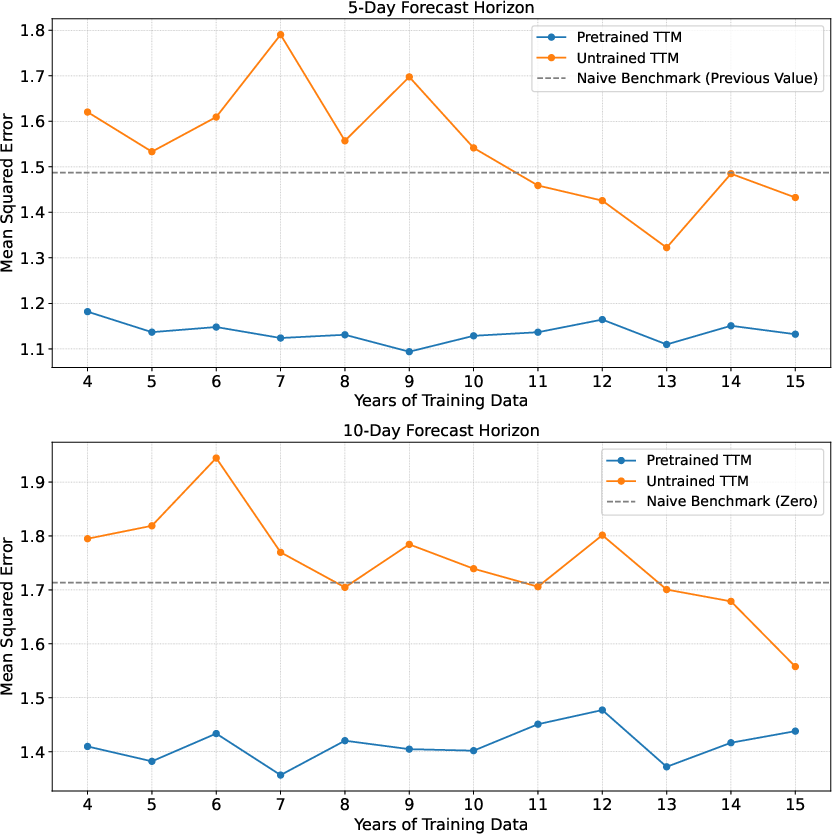

The results show that TTM exhibits strong transferability: when fine-tuned on limited data it achieves 25–50% better performance than an untrained model, and 15–30% better even with longer datasets. Zero-shot, TTM outperformed naive benchmarks in volatility forecasting and equity spread prediction, showing that a TSFM can beat simple benchmarks without any fine-tuning. The pretrained model consistently needed 3–10 fewer years of data than the untrained model to reach comparable performance, a significant gain in sample efficiency (Figure 5). In Task 3, the untrained model never reaches the pretrained model's performance, even with the full fine-tuning dataset (Figure 6).

Figure 5: TTM Mean Squared Error when Fine-tuned on Different Training Dataset Sizes when Forecasting 10Y Treasury Yields 21 Business Days Ahead

Figure 6: TTM Mean Squared Error when Fine-tuned on Different Training Dataset Sizes when Forecasting the Spread Between MSCI Australia and MSCI Canada at Different Horizons
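The "3–10 fewer years" figure comes from comparing two learning curves. A minimal sketch of such a sample-efficiency calculation follows, assuming MSE is measured at a handful of training-set lengths and that the untrained model's curve is interpolated linearly between them. The function names and the example numbers are hypothetical, not the paper's results.

```python
import numpy as np


def years_to_match(years, mse_untrained, target_mse):
    """Smallest training length (years) at which the untrained model's MSE falls to target_mse,
    interpolating linearly between measured sizes. Returns np.inf if it is never reached."""
    years = np.asarray(years, dtype=float)
    mse_u = np.asarray(mse_untrained, dtype=float)
    for i in range(len(years)):
        if mse_u[i] <= target_mse:
            if i == 0:
                return years[0]
            # interpolate between the last size above the target and the first at or below it
            frac = (mse_u[i - 1] - target_mse) / (mse_u[i - 1] - mse_u[i])
            return years[i - 1] + frac * (years[i] - years[i - 1])
    return np.inf


def years_saved(years, mse_pretrained, mse_untrained):
    """For each training length, extra years the untrained model needs to match the pretrained MSE."""
    return np.array([years_to_match(years, mse_untrained, m) - y
                     for y, m in zip(years, mse_pretrained)])


# Hypothetical learning curves, for illustration only.
years = [1, 2, 4, 8, 16]
mse_pre = [0.90, 0.85, 0.80, 0.78, 0.77]
mse_un = [1.20, 1.00, 0.90, 0.82, 0.79]
print(years_saved(years, mse_pre, mse_un))
```

An `inf` entry means the untrained curve never reaches the pretrained model's error within the measured range, which is the situation the text reports for Task 3.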

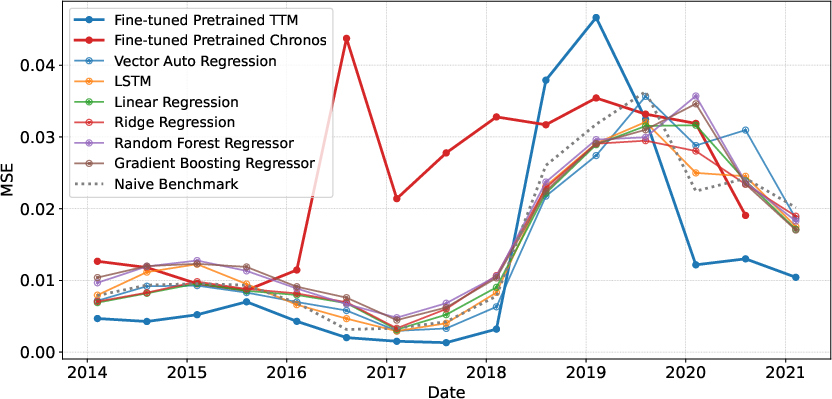

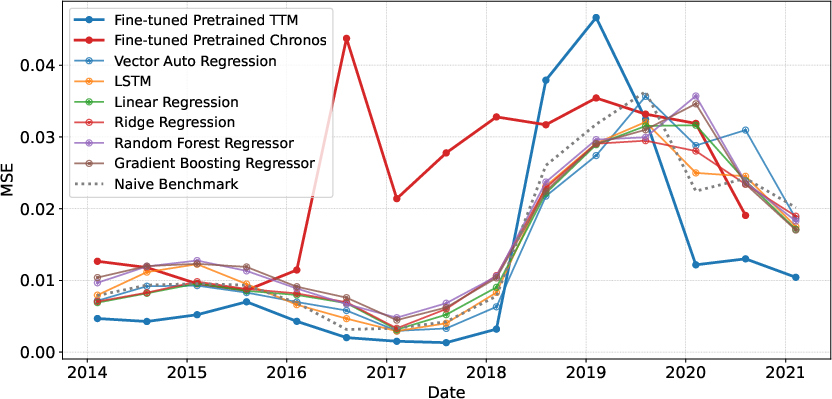

Although TTM outperformed naive baselines, traditional specialised models matched or exceeded its performance in two of the three tasks, suggesting that TSFMs prioritise breadth over task-specific optimisation (Figure 7). Chronos failed to outperform the naive benchmark consistently across the tasks, indicating ineffective knowledge transfer from its pretraining.

Figure 7: Rolling Performance of Different Models when Forecasting 10-Year Treasury Yields 21 Business Days Ahead
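Rolling comparisons like Figure 7 are typically built from a moving-window error. This sketch computes a trailing rolling MSE and its ratio to a naive forecast's rolling MSE, where values below 1 mean the model beats the naive benchmark over that window. The 252-day window (about one trading year) and the function names are assumptions, since the figure's exact metric and window are not stated.

```python
import numpy as np


def rolling_mse(y_true, y_pred, window=252):
    """Trailing mean of squared errors over `window` observations; NaN until the window fills."""
    err2 = (np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)) ** 2
    csum = np.concatenate([[0.0], np.cumsum(err2)])  # prefix sums make each window O(1)
    out = np.full(err2.shape, np.nan)
    out[window - 1:] = (csum[window:] - csum[:-window]) / window
    return out


def rolling_relative_mse(y_true, y_model, y_naive, window=252):
    """Ratio of the model's rolling MSE to the naive forecast's rolling MSE."""
    return rolling_mse(y_true, y_model, window) / rolling_mse(y_true, y_naive, window)
```

Plotting this ratio over time shows whether a model's advantage is stable or concentrated in particular market regimes, which a single full-sample MSE would hide.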

Conclusions and Implications

The paper concludes that TSFMs offer substantial promise for financial forecasting, particularly in noisy, data-constrained tasks. However, achieving competitive performance likely requires domain-specific pretraining and architectural refinements tailored to the characteristics of financial time series. The transfer-gain test and sample-efficiency probe offer practical methods for assessing how well TSFMs transfer to financial forecasting applications.

The research also highlights the importance of aligning architectural design with pretraining strategy: TTM's decoder architecture with explicit temporal modelling proved more effective than Chronos' adapted LLM approach. TTM's consistent gains over the untrained model are particularly noteworthy given that financial data made up only a small portion of its pretraining data. Pretraining from scratch would also eliminate the potential look-ahead bias from correlated future data in the financial portion of TTM's pretraining dataset. Future research directions include financial domain-specific pretraining, task-specialised universal models, and multi-modal financial foundation models.