- The paper introduces PhysXNet and PhysXGen, pioneering physical-grounded 3D asset generation by integrating physics into the 3D structural pipeline.

- The paper builds its dataset with a human-in-the-loop annotation process and uses a dual-branch, physics-aware VAE that outperforms baselines on PSNR, CD, F-Score, and MAE.

- The paper highlights the transformative potential of integrating physical properties for enhanced simulation and embodied AI while outlining future research directions.

PhysX-3D: Physical-Grounded 3D Asset Generation

This paper introduces PhysXNet, a novel physics-grounded 3D dataset, and PhysXGen, a framework for generating 3D assets with physical properties. The work addresses the gap in existing 3D generative models that often overlook crucial physical properties, hindering their application in domains like simulation and embodied AI. PhysXNet contains over 26K richly annotated 3D objects, while PhysXNet-XL, an extended version, features over 6 million procedurally generated and annotated 3D objects.

PhysXNet Dataset Creation

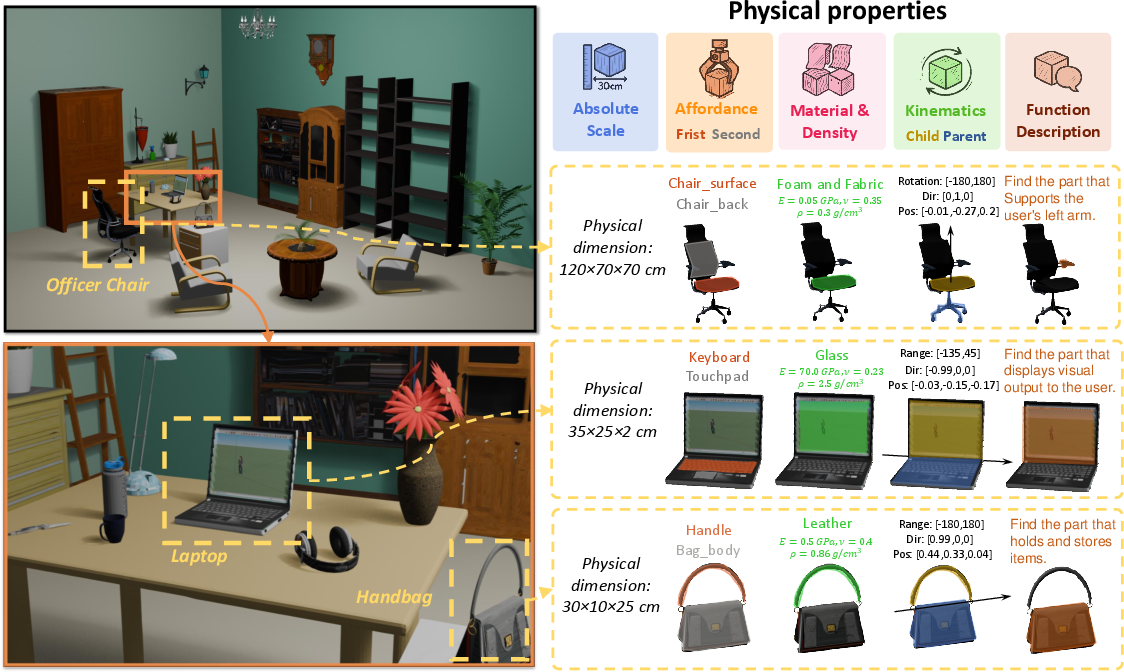

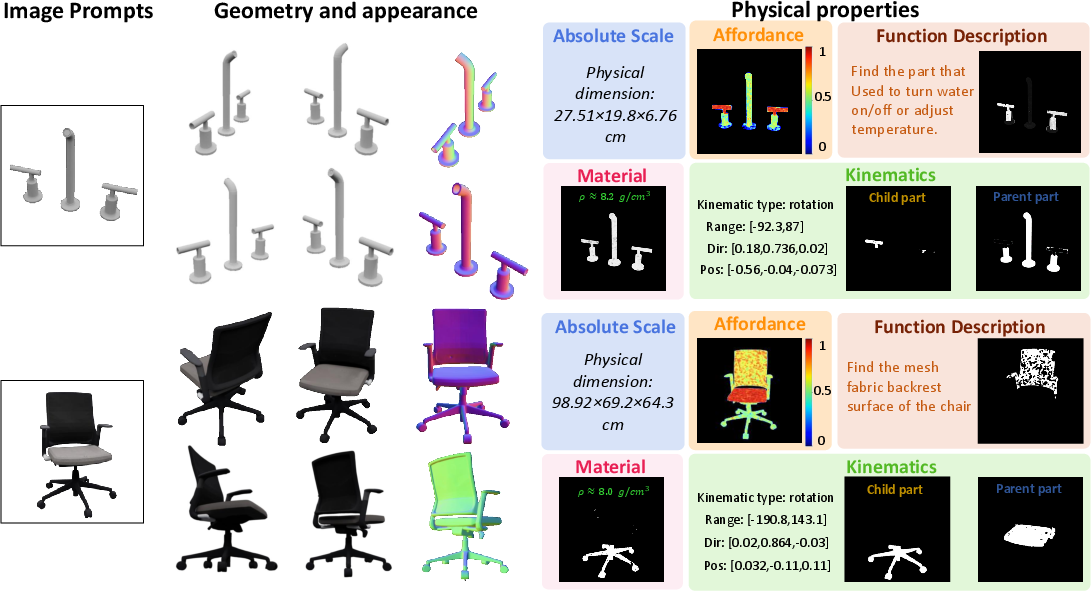

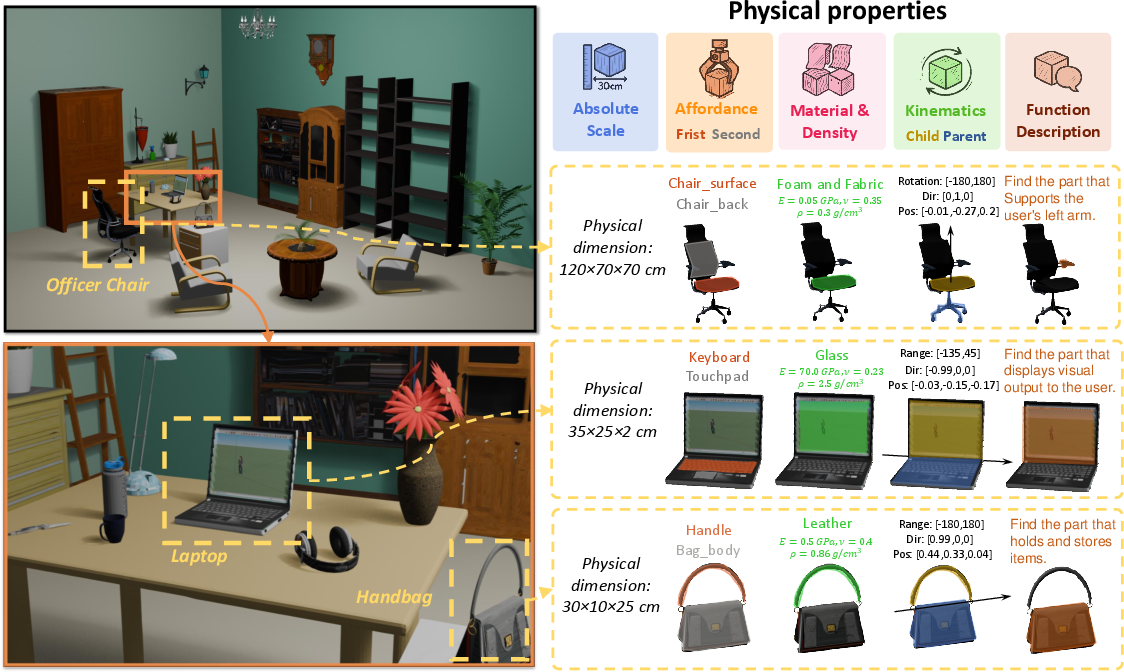

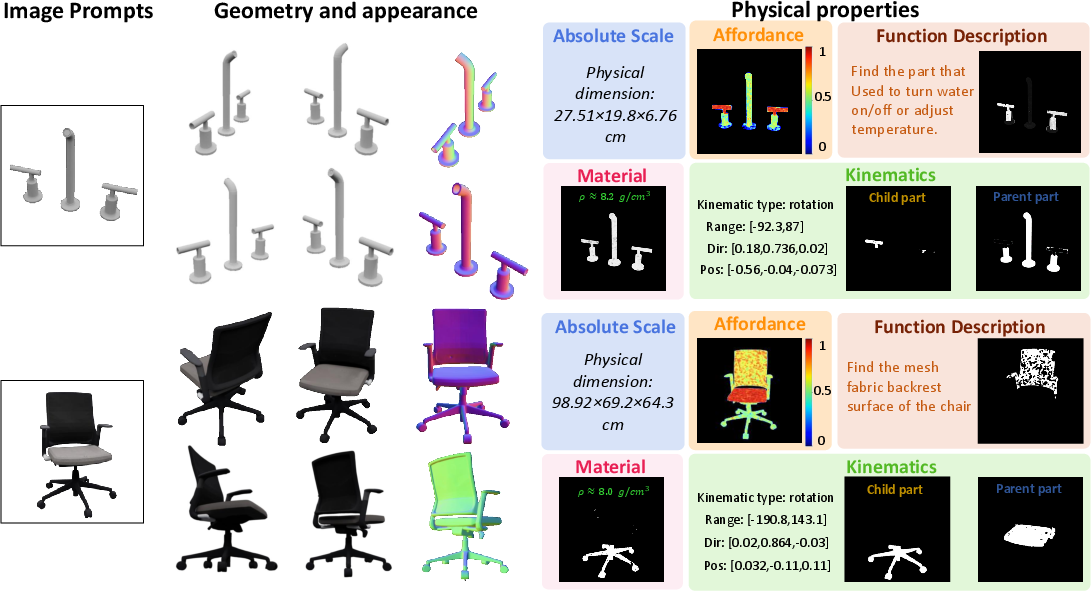

The authors address the limitations of current 3D datasets by introducing PhysXNet, which includes annotations across five dimensions: absolute scale, material, affordance, kinematics, and function description (Figure 1).

Figure 1: Visualizations of PhysXNet for physical 3D generation.
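To make the five annotation dimensions concrete, here is a minimal sketch of how one annotated object might be represented in code. It is not the PhysXNet file format: the class names, field names, units, and kinematic type strings (`"fixed"`, `"revolute"`) are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PartAnnotation:
    """Hypothetical per-part record (illustrative fields, not the real schema)."""
    name: str
    material: str        # assumed free-text label, e.g. "wood"
    affordance: str      # assumed free-text label, e.g. "graspable"
    kinematic_type: str  # assumed vocabulary, e.g. "fixed" or "revolute"


@dataclass
class ObjectAnnotation:
    """Hypothetical object-level record covering the five dimensions."""
    label: str
    absolute_scale_cm: Tuple[float, float, float]  # assumed unit: centimetres
    function_description: str
    parts: List[PartAnnotation] = field(default_factory=list)

    def movable_parts(self) -> List[str]:
        # A part counts as movable when its kinematic type is not "fixed".
        return [p.name for p in self.parts if p.kinematic_type != "fixed"]


cabinet = ObjectAnnotation(
    label="cabinet",
    absolute_scale_cm=(80.0, 40.0, 120.0),
    function_description="Stores items behind a hinged door.",
    parts=[
        PartAnnotation("body", "wood", "supportable", "fixed"),
        PartAnnotation("door", "wood", "openable", "revolute"),
    ],
)
print(cabinet.movable_parts())
```

Grouping material, affordance, and kinematics per part while keeping scale and function description per object is a design choice of this sketch; the paper may organize them differently.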

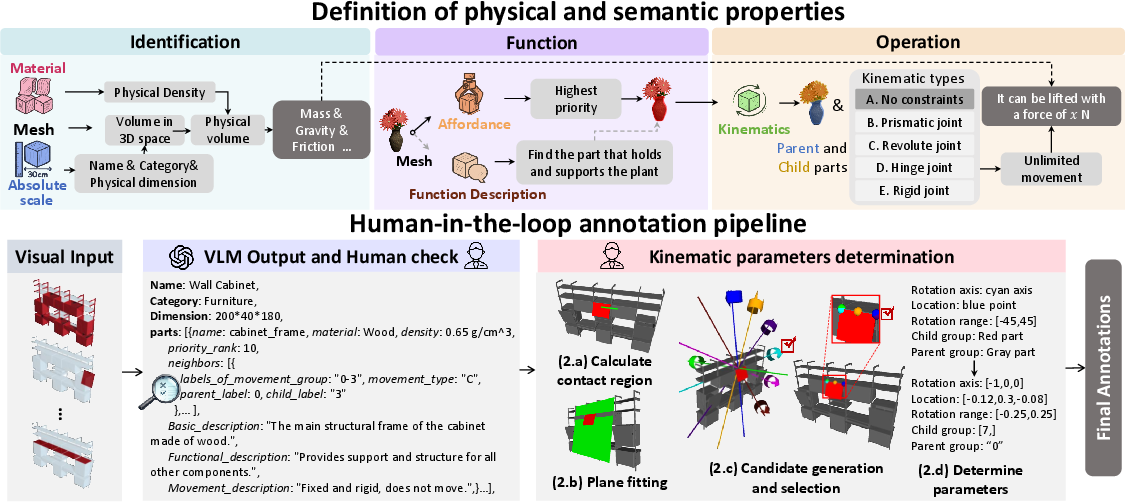

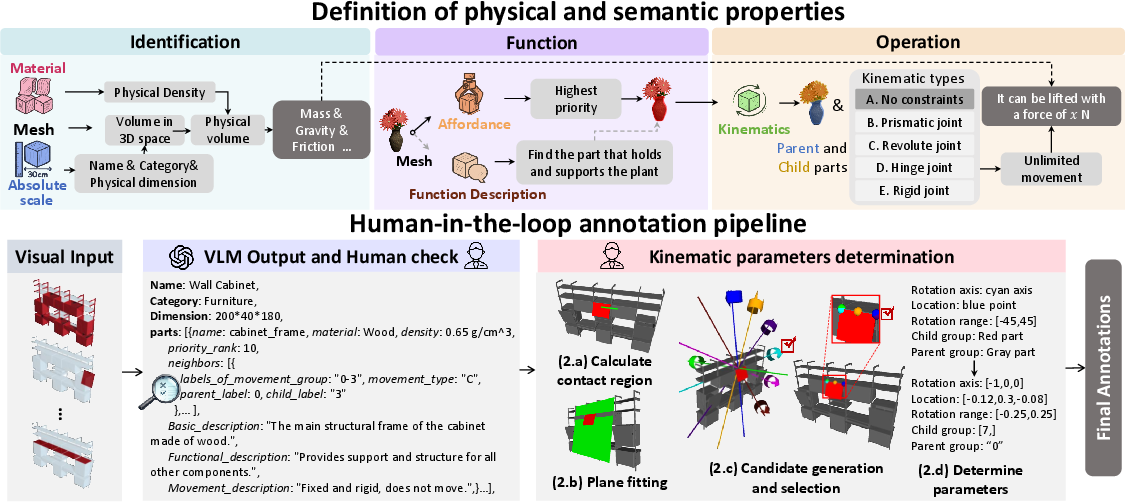

To create this dataset, they developed a human-in-the-loop annotation pipeline that leverages vision-language models (VLMs) for efficient and robust labeling (Figure 2).

Figure 2: Definition of properties in PhysXNet and overview of the human-in-the-loop annotation pipeline.

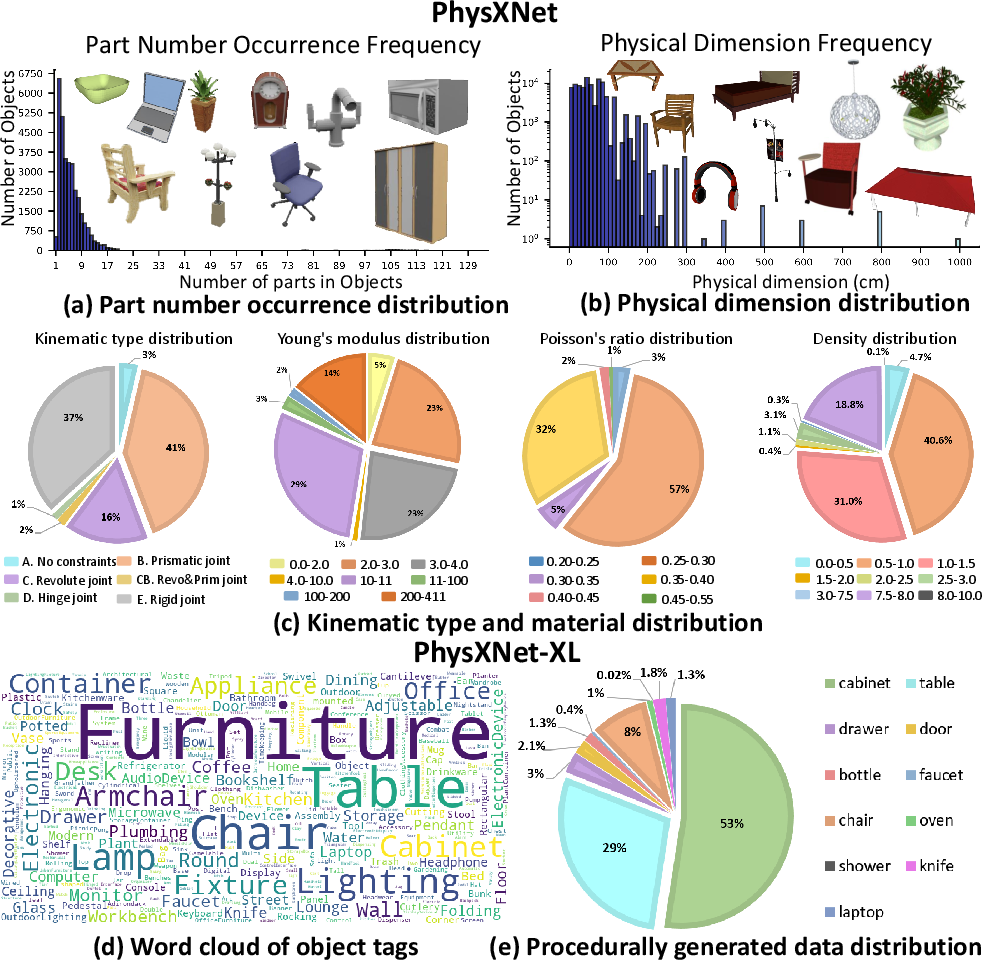

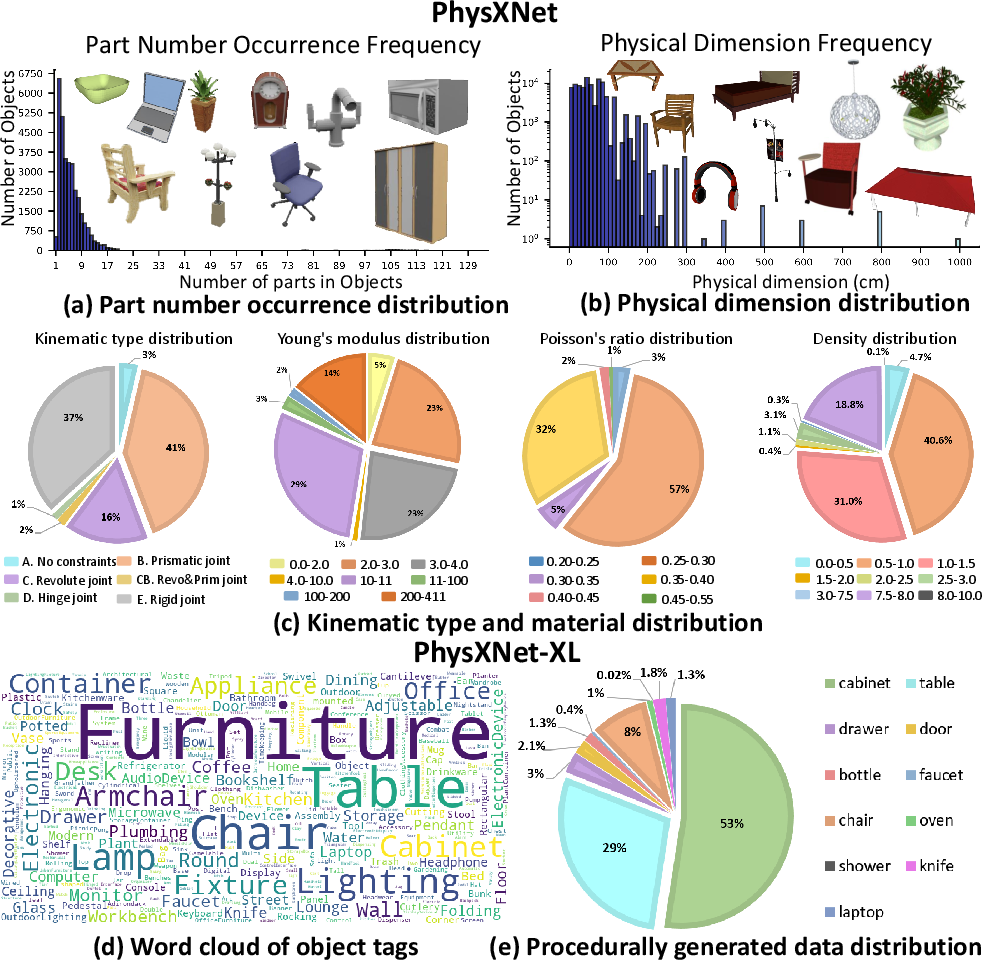

The pipeline consists of three stages: target visual isolation, automatic VLM labeling, and expert refinement. Statistical analysis of PhysXNet and the procedurally generated PhysXNet-XL (Figure 3) reveals a long-tailed distribution of parts per object and provides insights into each dataset's composition in terms of kinematic states, materials, and object labels.

Figure 3: Statistics and distribution of PhysXNet and PhysXNet-XL.
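The three annotation stages described above can be sketched as a simple function composition. This is only an illustration of the control flow: the function `annotate_part`, its parameters, and the stub stages are hypothetical, and no real VLM is called.

```python
from typing import Callable, Dict

Labels = Dict[str, str]


def annotate_part(
    part_id: str,
    isolate: Callable[[str], str],
    vlm_label: Callable[[str], Labels],
    expert_refine: Callable[[Labels], Labels],
) -> Labels:
    """Run one part through the three stages in order."""
    view = isolate(part_id)        # stage 1: target visual isolation
    draft = vlm_label(view)        # stage 2: automatic VLM labeling
    return expert_refine(draft)    # stage 3: expert refinement


# Stub stages standing in for rendering, a VLM, and a human expert.
def fake_isolate(part_id: str) -> str:
    return f"render:{part_id}"


def fake_vlm(view: str) -> Labels:
    return {"view": view, "material": "unknown"}


def fake_expert(labels: Labels) -> Labels:
    fixed = dict(labels)  # copy so the draft is left untouched
    if fixed["material"] == "unknown":
        fixed["material"] = "wood"
    return fixed


result = annotate_part("chair/leg_0", fake_isolate, fake_vlm, fake_expert)
print(result)
```

Passing the stages in as callables keeps the sketch independent of any particular renderer or model, which is an assumption of this example rather than something stated in the paper.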

PhysXGen Framework

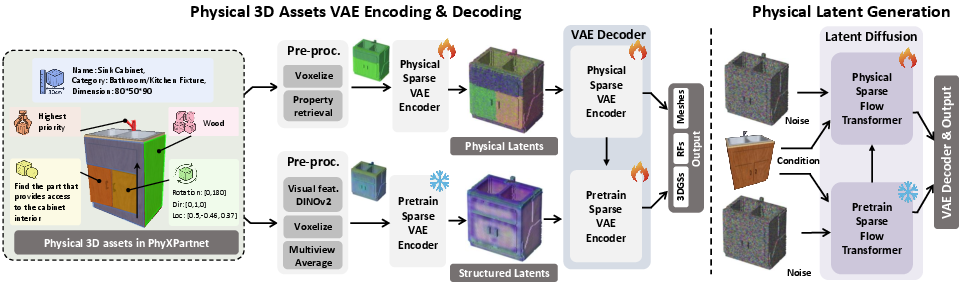

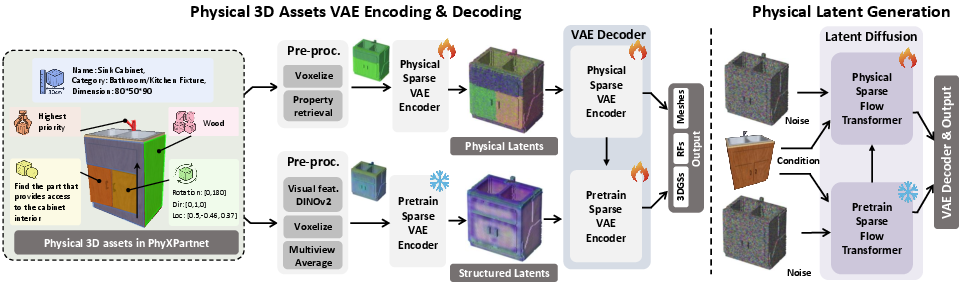

To address the modeling gap in generating physical-grounded 3D assets, the authors propose PhysXGen, a feed-forward model that injects physical knowledge into a pre-trained 3D structural space (Figure 4).

Figure 4: The architecture of the PhysXGen framework.

PhysXGen employs a dual-branch architecture to model the latent correlations between 3D structures and physical properties explicitly. This design choice allows the model to produce 3D assets with plausible physical predictions while preserving the native geometry quality of the pre-trained model. The framework leverages a physical 3D VAE for latent space learning and a physics-aware generative process for structured latent generation.

Experimental Evaluation and Results

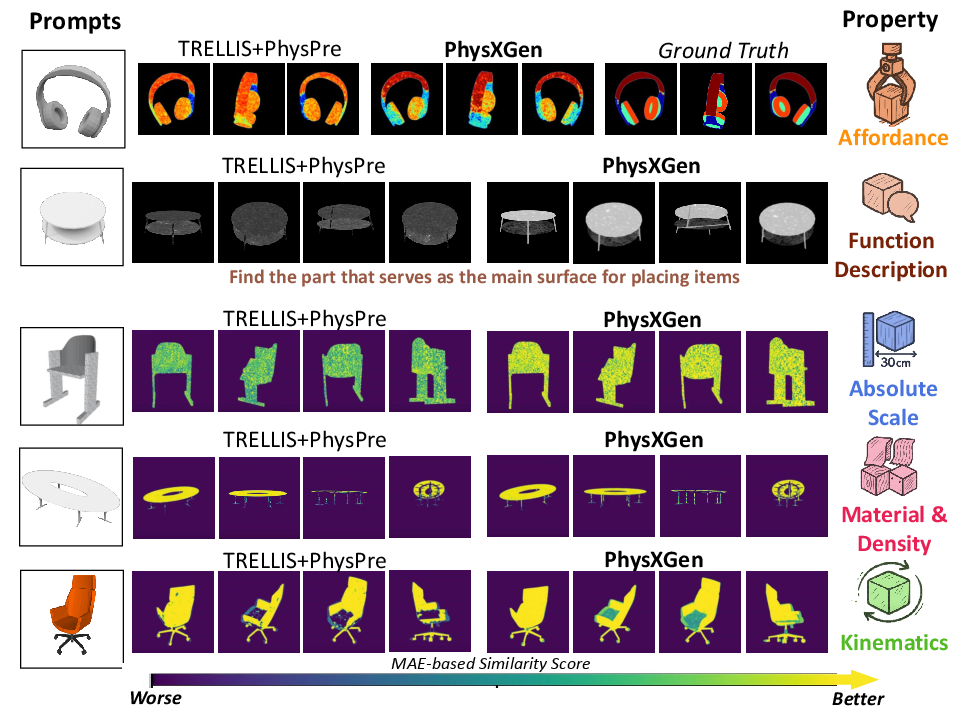

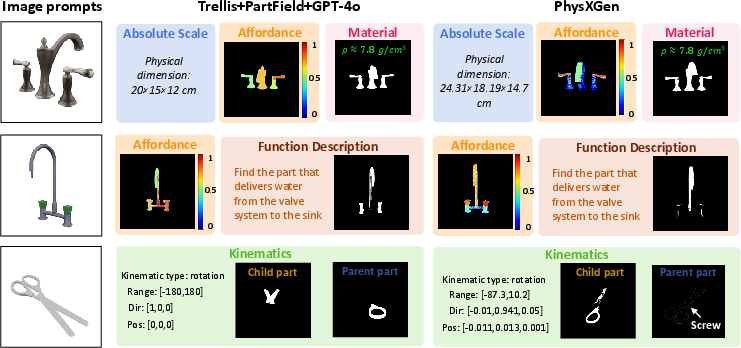

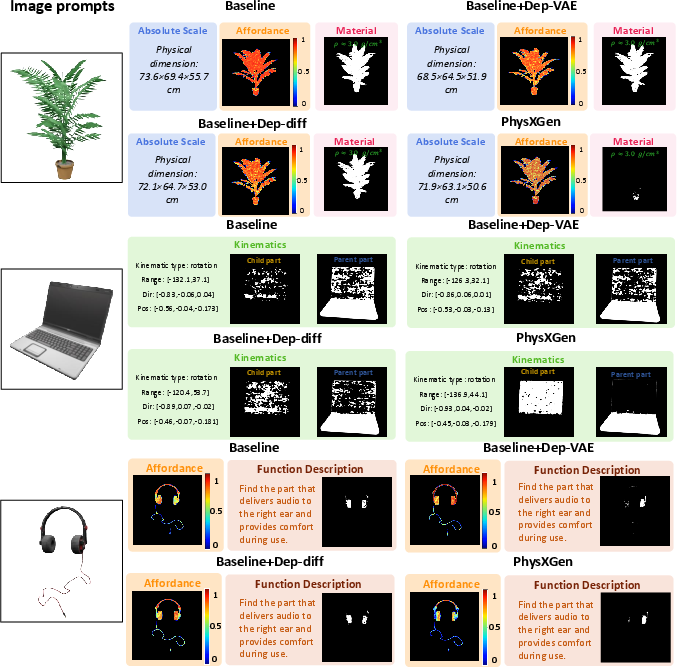

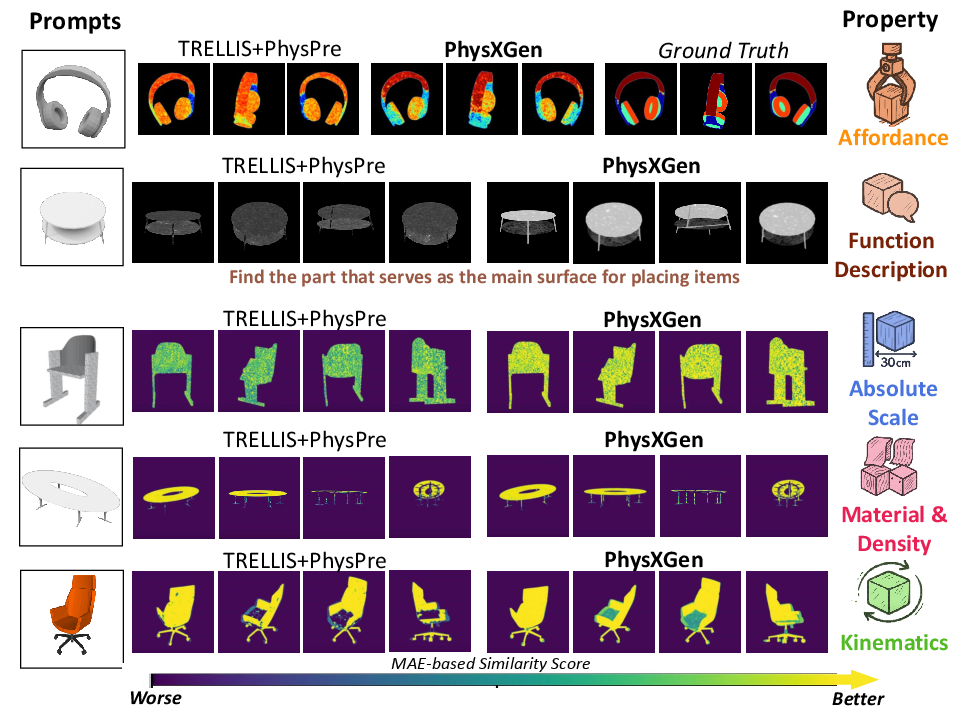

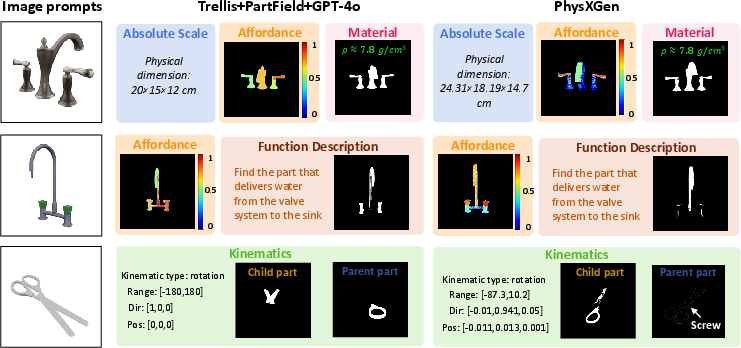

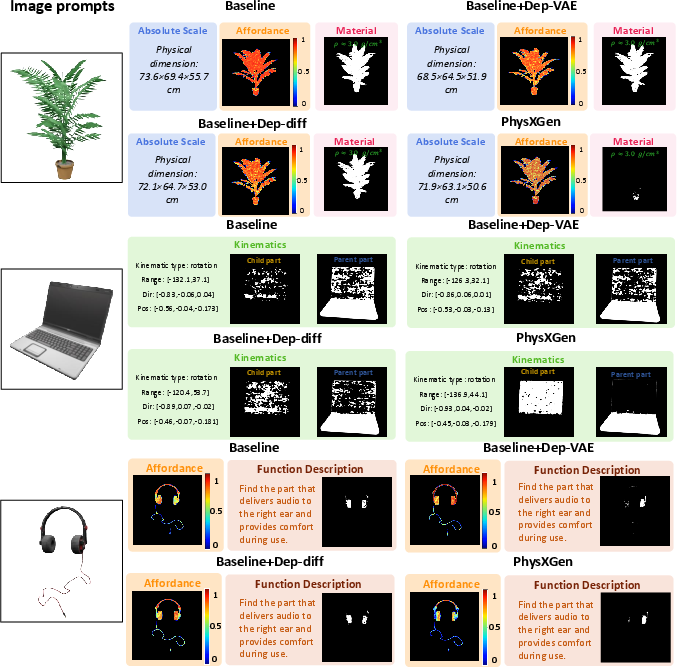

The authors conduct extensive experiments to validate the performance and generalization capability of PhysXGen. They evaluate the generated 3D assets using both structural and physical property metrics. The quantitative results, shown in Table 1 of the paper, demonstrate that PhysXGen outperforms baselines in terms of Peak Signal-to-Noise Ratio (PSNR), Chamfer Distance (CD), F-Score, and Mean Absolute Error (MAE) for physical properties. Qualitative comparisons (Figure 5, Figure 6, Figure 7) further illustrate the ability of PhysXGen to generate physically plausible 3D assets from single image prompts. Ablation studies (Table 2 in the paper, Figure 8) validate the effectiveness of the dual-branch architecture and the joint modeling of structural and physical features.

Figure 5: Visualization of the generated results.

Figure 6: Qualitative comparison of different methods using MAE-based similarity assessment.

Figure 7: Qualitative comparison of generated physical 3D assets.

Figure 8: Qualitative comparison of different architectures.
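For readers unfamiliar with the four metrics named above, the following is a minimal pure-Python sketch of their common textbook definitions. It is not the paper's evaluation code. Conventions differ between papers (squared versus unsquared distances in Chamfer Distance, the F-Score threshold, the PSNR value range), so the choices here, including the parameter names `max_val` and `tau`, are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def psnr(pred: Sequence[float], target: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for values in [0, max_val]."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_val ** 2 / mse)


def _nearest_dists(src: Sequence[Point], dst: Sequence[Point]) -> List[float]:
    # Brute-force nearest-neighbour distance from each src point to dst.
    return [min(math.dist(s, d) for d in dst) for s in src]


def chamfer_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Chamfer Distance: mean unsquared nearest distance, both ways."""
    return sum(_nearest_dists(a, b)) / len(a) + sum(_nearest_dists(b, a)) / len(b)


def f_score(pred: Sequence[Point], gt: Sequence[Point], tau: float) -> float:
    """Harmonic mean of precision and recall at distance threshold tau."""
    precision = sum(d <= tau for d in _nearest_dists(pred, gt)) / len(pred)
    recall = sum(d <= tau for d in _nearest_dists(gt, pred)) / len(gt)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def mae(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error, e.g. between predicted and annotated property values."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(target)


pred_pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
gt_pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
print(chamfer_distance(pred_pts, gt_pts))
print(f_score(pred_pts, gt_pts, tau=0.5))
print(mae([1.0, 3.0], [2.0, 5.0]))
```

Higher is better for PSNR and F-Score, while lower is better for CD and MAE. The brute-force nearest-neighbour search is quadratic in the number of points and is only suitable for small examples.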

Implications and Future Directions

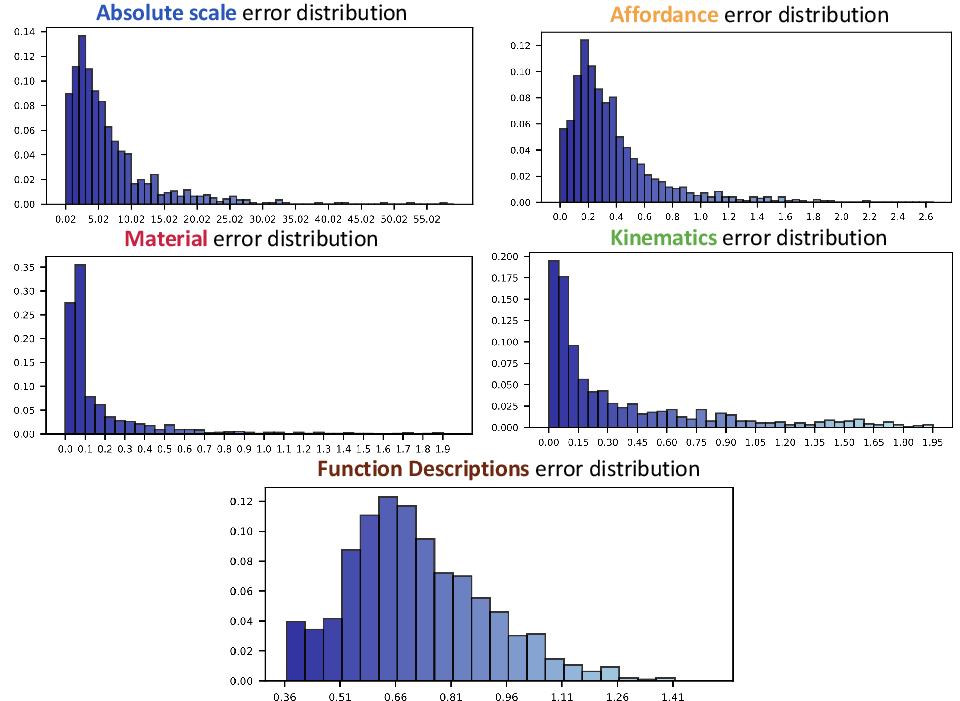

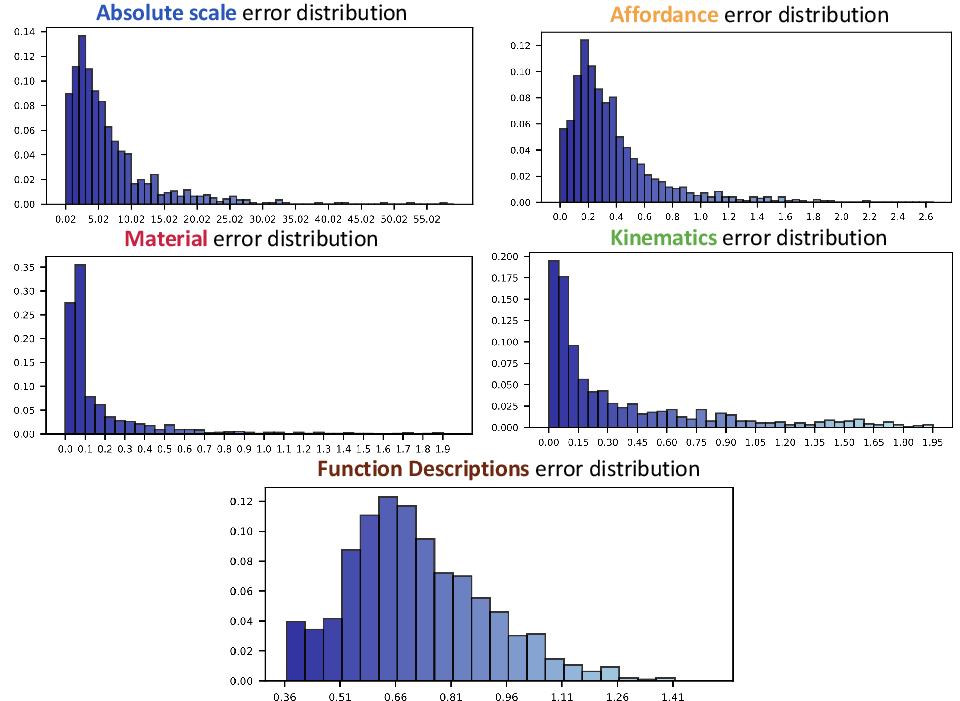

This research pioneers an end-to-end paradigm for physical-grounded 3D asset generation, advancing the state of the art in physical-grounded content creation. The PhysXNet dataset and PhysXGen framework unlock new possibilities for downstream applications in simulation, embodied AI, and robotics. The authors acknowledge limitations in learning fine-grained properties and in avoiding artifacts, and they suggest future work that incorporates more 3D data and integrates additional physical properties and kinematic types. The error distribution of different physical properties is shown in Figure 9.

Figure 9: Error distribution of different physical properties.

Conclusion

The paper makes significant contributions to physical 3D generation by introducing PhysXNet and PhysXGen. The human-in-the-loop annotation pipeline efficiently converts existing geometry-focused datasets into fine-grained, physics-annotated 3D datasets. The PhysXGen framework integrates physical priors into structural architectures, achieving robust generation performance. The authors reveal fundamental challenges and directions for future research in physical 3D generation, which will likely attract attention from the embodied AI, robotics, and 3D vision communities.