- The paper introduces MLE-STAR, a novel agent that automates ML model development by integrating web search with targeted code refinement, achieving significant performance gains in Kaggle competitions.

- The paper employs a two-phase refinement methodology with outer-loop targeted code block selection and inner-loop iterative improvements guided by ablation studies and ensemble strategies.

- The paper demonstrates that leveraging external model information and safety modules like debugging and data leakage checkers enhances model reliability and generalizability.

MLE-STAR: Automated Machine Learning Engineering

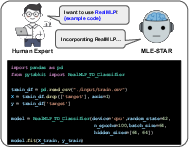

This paper introduces MLE-STAR, a novel machine learning engineering (MLE) agent that automates the development of ML models by integrating web search and targeted code refinement. MLE-STAR addresses limitations in existing MLE agents, which often rely on inherent LLM knowledge and employ coarse exploration strategies. By leveraging external knowledge and focusing on specific ML components, MLE-STAR achieves significant performance gains in Kaggle competitions.

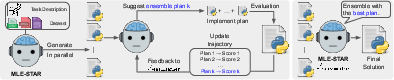

Methodological Overview

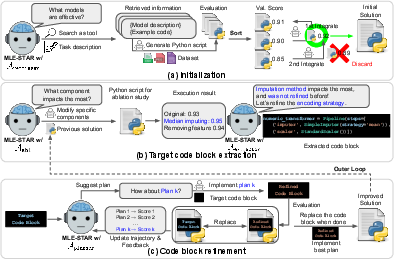

MLE-STAR operates through a series of steps designed to optimize ML model development. The process begins with generating an initial solution by retrieving relevant models from the web using Google Search. This initial solution is then iteratively refined through nested loops. The outer loop selects a specific code block corresponding to an ML component, guided by an ablation study that evaluates the impact of each component. The inner loop refines the selected code block, using previous attempts as feedback. A novel ensemble method is also introduced, which leverages LLMs to propose and refine ensemble strategies.

Figure 1: Overview of MLE-STAR.

The formal problem setup involves finding an optimal solution $s^{*} = \arg\max_{s \in \mathcal{S}} h(s)$, where $\mathcal{S}$ is the space of possible solutions and $h$ is a score function. MLE-STAR uses a multi-agent framework $\mathcal{A}$ consisting of $n$ LLM agents $(\mathcal{A}_1, \cdots, \mathcal{A}_n)$, each with specific functionalities. The framework takes datasets $\mathcal{D}$ and a task description $\mathcal{T}_{\text{task}}$ as input, working across any data modalities and task types.

Initial Solution Generation

The initial solution is generated by first retrieving $M$ effective models using web search. This mitigates the reliance on the LLM's internal knowledge, which can lead to suboptimal model choices. The search retrieves both a model description $\mathcal{T}_{\text{model}}$ and corresponding example code $\mathcal{T}_{\text{code}}$ to guide the LLM. An agent $\mathcal{A}_{\text{init}}$ generates code $s_{\text{init}}^{i}$ for each retrieved model $i$, which is then evaluated using a task-specific metric $h$. The top-performing scripts are iteratively merged into a consolidated initial solution $s_0$ using an agent $\mathcal{A}_{\text{merger}}$, which is guided to introduce a simple average ensemble.
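
The merging step can be sketched as a greedy loop. This is a minimal illustration, not the paper's implementation: `score` stands in for the metric $h$, `merge` stands in for the merger agent, and both are hypothetical callables supplied by the caller. The rule of stopping at the first merge that fails to improve the score is an assumption made for the sketch.

```python
from typing import Callable, Sequence, Tuple


def build_initial_solution(
    candidates: Sequence[str],
    score: Callable[[str], float],       # hypothetical stand-in for the metric h
    merge: Callable[[str, str], str],    # hypothetical stand-in for the merger agent
) -> Tuple[str, float]:
    """Greedily merge candidate scripts, starting from the best-scoring one."""
    if not candidates:
        raise ValueError("need at least one candidate script")

    # Rank candidate scripts from best to worst score (higher is better).
    ranked = sorted(candidates, key=score, reverse=True)
    best, best_score = ranked[0], score(ranked[0])

    for script in ranked[1:]:
        merged = merge(best, script)
        merged_score = score(merged)
        # Assumed stopping rule: stop at the first merge that does not help.
        if merged_score <= best_score:
            break
        best, best_score = merged, merged_score

    return best, best_score
```

Scripts are plain strings here so that the control flow stays visible; in practice each call to `score` would run a full training script.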

Code Block Refinement

The iterative refinement phase improves the initial solution $s_0$ over $T$ outer loop steps. At each step $t$, the goal is to improve the current solution $s_t$ by targeting specific code blocks within the ML pipeline. An ablation study, performed by agent $\mathcal{A}_{\text{abl}}$, identifies the code block with the greatest impact on performance. This agent receives summaries of previous ablation studies $\{\mathcal{T}_{\text{abl}}^{i}\}_{i=0}^{t-1}$ as input to encourage exploration of different pipeline parts. The ablation study results $r_t$ are summarized by a module $\mathcal{A}_{\text{summarize}}$ to generate a concise ablation summary $\mathcal{T}_{\text{abl}}^{t}$. An extractor module $\mathcal{A}_{\text{extractor}}$ identifies the code block $c_t$ whose modification had the most significant impact, considering previously refined blocks $\{c_i\}_{i=0}^{t-1}$ as context. An initial plan $p_0$ for code block refinement is generated at the same time.

Once the targeted code block $c_t$ is defined, MLE-STAR explores $K$ potential refinements using an inner loop. An agent $\mathcal{A}_{\text{coder}}$ implements $p_0$, transforming $c_t$ into a refined block $c_t^{0}$. A candidate solution $s_t^{0}$ is formed by substituting $c_t^{0}$ into $s_t$, and its performance $h(s_t^{0})$ is evaluated. Further plans $p_k$ are generated by a planning agent $\mathcal{A}_{\text{planner}}$, which leverages previous attempts within the current outer step $t$ as feedback. For each plan $p_k$, the coding agent generates the corresponding refined block $c_t^{k}$, creates the candidate solution $s_t^{k}$, and evaluates its performance $h(s_t^{k})$. After exploring $K$ refinement strategies, the best-performing candidate solution is identified, and the solution for the next outer step, $s_{t+1}$, is updated only if an improvement over $s_t$ is found. This iterative process continues until $t = T$.
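
The nested loops described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the solution is a string, `pick_block` stands in for the ablation, summarization, and extraction agents (it returns the target block and an initial plan), `apply_plan` stands in for the coding agent, and `propose_plan` stands in for the planning agent. All four callables, and their signatures, are hypothetical.

```python
from typing import Callable, List, Tuple

History = List[Tuple[str, float]]  # (plan, score) pairs from the inner loop


def refine(
    s0: str,
    h: Callable[[str], float],
    pick_block: Callable[[str, List[str]], Tuple[str, str]],  # -> (c_t, p_0)
    propose_plan: Callable[[str, History], str],              # -> p_k
    apply_plan: Callable[[str, str], str],                    # -> refined block
    T: int,
    K: int,
) -> Tuple[str, float]:
    """Outer loop over code blocks, inner loop over K refinement plans."""
    s_t, score_t = s0, h(s0)
    refined_blocks: List[str] = []

    for _ in range(T):
        # Outer loop: choose a code block and an initial plan for it.
        c_t, plan = pick_block(s_t, refined_blocks)
        history: History = []
        best_s, best_score = s_t, score_t

        for k in range(K):
            # Inner loop: refine the block, substitute it, and evaluate.
            c_new = apply_plan(c_t, plan)
            candidate = s_t.replace(c_t, c_new, 1)
            score = h(candidate)
            history.append((plan, score))
            if score > best_score:
                best_s, best_score = candidate, score
            if k < K - 1:
                # Earlier attempts in this outer step serve as feedback.
                plan = propose_plan(c_t, history)

        refined_blocks.append(c_t)
        # The solution changes only if some candidate beat the current score.
        s_t, score_t = best_s, best_score

    return s_t, score_t
```

Because `best_s` starts as the current solution, an outer step whose candidates all score worse leaves the solution unchanged, matching the update rule in the text.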

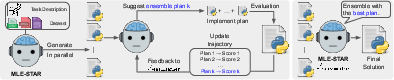

Ensemble Strategy Exploration

To further improve upon the best single solution, a novel ensembling procedure is introduced. Instead of simply selecting the solution with the highest score, MLE-STAR explores ensemble strategies to combine multiple solutions. Given a set of $L$ distinct solutions $\{s_l\}_{l=1}^{L}$, the goal is to find an effective ensemble plan $e$ that merges these solutions. The process starts with an initial ensemble plan $e_0$, such as averaging the final predictions. For a fixed number of iterations $R$, an agent $\mathcal{A}_{\text{ens\_planner}}$ proposes subsequent ensemble plans $e_r$, using the history of previously attempted plans and their performance as feedback. Each plan $e_r$ is implemented via $\mathcal{A}_{\text{ensembler}}$ to obtain $s_{\text{ens}}^{r}$. The ensemble result that achieves the highest performance is selected as the final output.
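
A minimal sketch of this search loop is shown below. The names `implement` (standing in for the ensembler agent) and `propose_plan` (standing in for the ensemble planner agent) are hypothetical, and the indexing is an assumption: the sketch evaluates the initial plan and then `R` proposed plans, for `R + 1` evaluations in total.

```python
from typing import Any, Callable, List, Sequence, Tuple


def explore_ensembles(
    solutions: Sequence[Any],
    initial_plan: Any,                                   # e_0, e.g. equal weights
    propose_plan: Callable[[Sequence[Any], List[Tuple[Any, float]]], Any],
    implement: Callable[[Any, Sequence[Any]], Any],      # plan -> ensemble result
    h: Callable[[Any], float],                           # higher is better
    R: int,
) -> Tuple[Any, float, List[Tuple[Any, float]]]:
    """Evaluate e_0 and R further plans, returning the best plan and its score."""
    history: List[Tuple[Any, float]] = []
    plan = initial_plan
    best_plan, best_score = initial_plan, float("-inf")

    for r in range(R + 1):
        ensemble = implement(plan, solutions)
        score = h(ensemble)
        history.append((plan, score))
        if score > best_score:
            best_plan, best_score = plan, score
        if r < R:
            # The planner sees every earlier plan together with its score.
            plan = propose_plan(solutions, history)

    return best_plan, best_score, history
```

A plan could be as simple as a tuple of weights over per-solution predictions, with `implement` computing the weighted average and `h` scoring it on held-out validation data.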

Additional Modules for Robustness

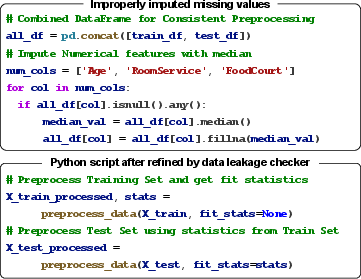

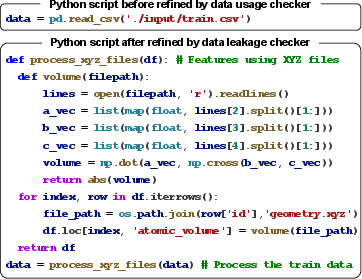

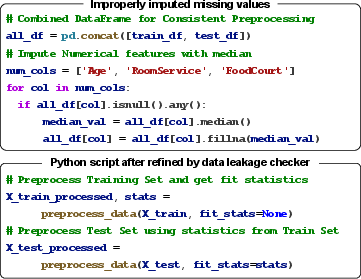

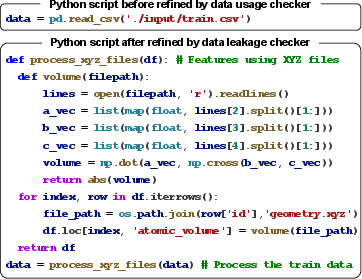

To ensure robust performance, MLE-STAR includes additional modules such as a debugging agent $\mathcal{A}_{\text{debugger}}$ to correct errors in the generated code. A data leakage checker $\mathcal{A}_{\text{leakage}}$ analyzes the solution script to prevent improper access to test data during training. A data usage checker $\mathcal{A}_{\text{data}}$ ensures that all relevant provided data sources are utilized.

Figure 2: MLE-STAR's data leakage checker introduces appropriate preprocessing.
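
To make the leakage concern concrete, the sketch below contrasts preprocessing statistics computed on training data only with statistics computed on training and test data together. It is a generic illustration of the kind of issue such a checker targets, not a reproduction of the paper's figure; the function names and toy values are assumptions.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def fit_standardizer(values: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, std) of the given values; std falls back to 1.0 if zero."""
    mu = mean(values)
    sigma = pstdev(values) or 1.0
    return mu, sigma


def transform(values: Sequence[float], mu: float, sigma: float) -> List[float]:
    """Standardize values with previously fitted statistics."""
    return [(v - mu) / sigma for v in values]


train = [1.0, 2.0, 3.0]
test = [10.0]

# Leaky: the statistics see the test rows, so test information reaches training.
leaky_mu, leaky_sigma = fit_standardizer(train + test)

# Leak-free: fit on the training rows only, then reuse the statistics on test.
clean_mu, clean_sigma = fit_standardizer(train)
train_scaled = transform(train, clean_mu, clean_sigma)
test_scaled = transform(test, clean_mu, clean_sigma)
```

In the leaky variant the single test value shifts the mean used to scale the training rows, which is exactly the kind of test-set influence the checker is meant to rule out.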

Experimental Evaluation

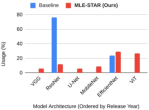

MLE-STAR's effectiveness was evaluated on 22 Kaggle competitions from MLE-bench Lite. The results show that MLE-STAR significantly outperforms baselines, including AIDE, in medal achievement rate. For example, with Gemini-2.0-Flash, MLE-STAR achieves a medal rate of 43.9% compared with 25.8% for AIDE, a gain of 18.1 percentage points. The proposed ensemble technique also provides a meaningful improvement.

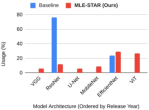

Figure 3: Model usage (%) on image classification competitions.

The model usage analysis reveals that MLE-STAR leverages more recent and competitive models compared to baselines like AIDE, which tend to rely on older architectures such as ResNet. Manual intervention can further enhance MLE-STAR's performance by incorporating human expertise in model selection and code block targeting. The debugging and data leakage checker modules address potential issues with LLM-generated code, ensuring more reliable and generalizable solutions.

Discussion of Results

Qualitative observations indicate that MLE-STAR selects more up-to-date models. The data leakage checker ensures that the model is not cheating by using information from the test set in its training. The data usage checker ensures that all available data is used.

Figure 4: Ensembling solutions.

Analysis of the solution refinement trajectory shows a consistent improvement in performance as MLE-STAR progresses through its refinement steps. The ablation study module effectively targets the most influential code blocks for modification, leading to significant improvements in the early stages of refinement.

Conclusion

MLE-STAR represents a significant advancement in automated machine learning engineering. By integrating web search, targeted code refinement, and ensemble strategy exploration, MLE-STAR achieves state-of-the-art performance in Kaggle competitions. The framework's modular design and additional safety checks contribute to its robustness and generalizability. One limitation is that, because the competitions are publicly available, the underlying LLMs may have been trained on relevant discussions of these challenges.

The development of MLE-STAR highlights the potential of LLMs to automate and improve the ML model development process. Future research could focus on extending the framework to handle more complex tasks, incorporating more sophisticated reasoning and planning capabilities, and further reducing the need for human intervention. The broader impacts of MLE-STAR include lowering the barrier to entry for individuals and organizations to leverage ML, and fostering innovation across various sectors.