The paper introduces lmr and lmr-Bench, a novel framework and benchmark designed for the development and evaluation of LLM agents in the context of AI research tasks. It addresses the gap in existing benchmarks by providing a Gym environment tailored for machine learning tasks, enabling the use of reinforcement learning algorithms for training agents. The authors evaluate frontier LLMs, finding that while these models can enhance existing baselines through hyperparameter optimization, they often fall short of generating genuinely novel hypotheses, algorithms, architectures, or substantial improvements.

The paper's contributions can be summarized as follows:

- Introduction of lmr, the first Gym environment specifically designed for evaluating and developing AI Research Agents.

- Release of lmr-Bench, a diverse suite of open-ended AI research tasks intended for assessing LLM agents.

- Proposal of a new evaluation metric that facilitates the comparison of multiple agents across a variety of tasks.

- Comprehensive evaluation of frontier LLMs using lmr-Bench.

- Open-sourcing the framework and benchmark to encourage future research in the advancement of AI research capabilities of LLM agents.

Capability Levels for AI Research Agents

The authors propose a six-level hierarchy for categorizing LLM agent capabilities:

- Level 0: Reproduction: Agents can reproduce existing research papers.

- Level 1: Baseline Improvement: Agents can improve performance on a benchmark given baseline code.

- Level 2: SOTA Achievement: Agents can achieve state-of-the-art (SOTA) performance given a task description and pre-existing literature.

- Level 3: Novel Scientific Contribution: Agents can develop a new method worthy of publication at a top ML conference.

- Level 4: Groundbreaking Scientific Contribution: Agents can identify key research questions and directions, meriting awards at prestigious ML conferences.

- Level 5: Long-Term Research Agenda: Agents can pursue long-term research, achieving paradigm-shifting breakthroughs worthy of prizes like the Nobel or Turing.

lmr-Bench focuses primarily on Level 1 (Baseline Improvement).

Related Work

The authors compare lmr and lmr-Bench with related LLM agent frameworks and benchmarks, including:

- MLE-Bench

- SWE-Bench/Agent

- MLAgentBench

- RE-Bench

- ScienceAgentBench

The comparison highlights lmr's unique features, such as its Gym interface, inclusion of algorithmic tasks, focus on open-ended research, flexible artifact evaluation, and agentic harness.

The lmr Framework

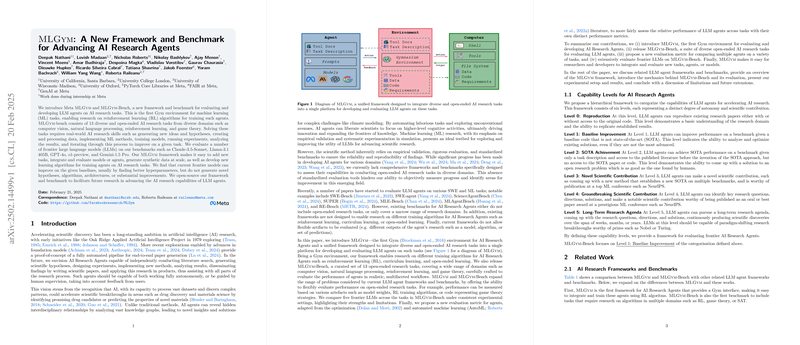

The lmr framework facilitates ML research and development via agent interaction with a shell environment, utilizing task descriptions, starter code, and action/observation history to generate shell commands.

The framework comprises Agents, Environments, Datasets, and Tasks:

- Agents: Agents are wrappers around base LLMs, integrating models, history processors, and cost management.

- Environments: Environments are implemented as Gymnasium (gym) environments that initialize a shell inside a local Docker container with the required tools and task-specific dependencies.

- Datasets: Datasets are defined via configuration files and support both local and Hugging Face datasets.

- Tasks: Tasks define ML research tasks using configuration files, incorporating datasets, evaluation scripts, and training timeouts.
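The interaction pattern described above can be illustrated with a toy stand-in. This is a minimal sketch, not the framework's actual API: `TaskConfig`, `ToyShellEnv`, `run_episode`, and `scripted_policy` are hypothetical names, the shell is simulated in memory rather than run in Docker, and only the `reset`/`step` return shapes follow the Gymnasium convention.

```python
from dataclasses import dataclass, field


@dataclass
class TaskConfig:
    """Hypothetical task description; field names are illustrative only."""
    name: str
    description: str
    starter_files: dict = field(default_factory=dict)  # filename -> contents
    max_steps: int = 10


class ToyShellEnv:
    """In-memory stand-in with Gymnasium-style reset() and step() methods."""

    def __init__(self, task):
        self.task = task
        self.files = {}
        self.steps = 0

    def reset(self):
        # Gymnasium convention: reset returns (observation, info).
        self.files = dict(self.task.starter_files)
        self.steps = 0
        return self.task.description, {"files": sorted(self.files)}

    def step(self, command):
        # Gymnasium convention: step returns
        # (observation, reward, terminated, truncated, info).
        self.steps += 1
        parts = command.split()
        terminated = False
        if parts[:1] == ["ls"]:
            observation = "\n".join(sorted(self.files))
        elif parts[:1] == ["cat"] and len(parts) == 2:
            observation = self.files.get(parts[1], f"cat: {parts[1]}: no such file")
        elif parts[:1] == ["submit"]:
            observation, terminated = "submission received", True
        else:
            observation = f"unknown command: {command}"
        truncated = not terminated and self.steps >= self.task.max_steps
        return observation, 0.0, terminated, truncated, {"steps": self.steps}


def run_episode(env, policy):
    """Pass the growing action/observation history to a policy until the episode ends."""
    observation, _ = env.reset()
    history = [("task", observation)]
    done = False
    while not done:
        command = policy(history)
        observation, _, terminated, truncated, _ = env.step(command)
        history.append((command, observation))
        done = terminated or truncated
    return history


def scripted_policy(history):
    # A fixed script standing in for an LLM call.
    script = ["ls", "cat train.py", "submit"]
    return script[len(history) - 1]


task = TaskConfig("toy", "Improve the baseline.", {"train.py": "print('baseline')"})
trajectory = run_episode(ToyShellEnv(task), scripted_policy)
```

In a real agent, `scripted_policy` would be replaced by a model call that maps the task description and history to the next shell command.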

Tools and ACI

The framework extends the Agent-Computer Interface (ACI) from SWE-Agent, incorporating literature search and a memory module.

The available tools include:

- SWE-Agent Tools: Includes tools for Search, File Viewing, and File Editing.

- Extended Tools: Encompass literature search, PDF parsing, and a memory module for storing and retrieving experimental findings.

The memory module is intended to improve performance on long-horizon AI research tasks. It has two core functions, memory_write and memory_read. memory_write lets the agent store key insights and effective configurations; memory_read retrieves the top-k most relevant stored entries, ranked by cosine similarity with a given query.
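A minimal sketch of such a memory follows. The summary does not say how entries are embedded, so a bag-of-words count vector is assumed here purely for illustration; the `Memory` class, `embed`, and `cosine_similarity` are hypothetical, and only the names `memory_write` and `memory_read` come from the text.

```python
import math
import re
from collections import Counter


def embed(text):
    """Bag-of-words count vector; an assumed stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class Memory:
    def __init__(self):
        self.entries = []  # list of (text, vector) pairs

    def memory_write(self, text):
        """Store a finding together with its vector."""
        self.entries.append((text, embed(text)))

    def memory_read(self, query, k=2):
        """Return the k stored texts most similar to the query."""
        query_vec = embed(query)
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine_similarity(query_vec, entry[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


memory = Memory()
memory.memory_write("learning rate 3e-4 with cosine schedule improved validation accuracy")
memory.memory_write("batch size 512 caused out of memory errors")
memory.memory_write("dropout 0.1 reduced overfitting on CIFAR-10")
hits = memory.memory_read("which learning rate worked best", k=1)
```

Swapping `embed` for a sentence-embedding model would leave the read and write logic unchanged.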

lmr-Bench Tasks

lmr-Bench includes a variety of tasks:

- Data Science: House Price Prediction using the Kaggle House Price dataset.

- 3-SAT: Optimizing a variable selection heuristic for a DPLL solver.

- Game Theory: Developing strategies for iterated Prisoner's Dilemma, Battle of the Sexes, and Colonel Blotto games.

- Computer Vision: Image classification tasks using CIFAR-10 and Fashion MNIST datasets, and image captioning using the MS-COCO dataset.

- Natural Language Processing: Natural Language Inference using MNLI and language modeling with the FineWeb dataset.

- Reinforcement Learning: MetaMaze Navigation, Mountain Car Continuous, and Breakout MinAtar environments.
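To make the 3-SAT task concrete, the sketch below shows a small DPLL solver whose branching variable is chosen by a pluggable heuristic, which is the component an agent would be asked to improve. It is an illustrative implementation under assumed conventions (clauses as lists of signed integers, no empty clauses in the input), not the benchmark's solver; `most_frequent_variable` is a hypothetical baseline.

```python
from collections import Counter


def simplify(clauses, literal):
    """Set `literal` to True: drop satisfied clauses and remove its negation."""
    result = []
    for clause in clauses:
        if literal in clause:
            continue
        reduced = [lit for lit in clause if lit != -literal]
        if not reduced:
            return None  # empty clause means a conflict
        result.append(reduced)
    return result


def most_frequent_variable(clauses):
    """Baseline heuristic: branch on the variable that occurs most often."""
    counts = Counter(abs(lit) for clause in clauses for lit in clause)
    return counts.most_common(1)[0][0]


def dpll(clauses, heuristic=most_frequent_variable, assignment=None):
    """Return a (possibly partial) satisfying assignment, or None if unsatisfiable."""
    assignment = dict(assignment or {})
    # Unit propagation: a single-literal clause forces that literal.
    while True:
        unit = next((c[0] for c in clauses if len(c) == 1), None)
        if unit is None:
            break
        assignment[abs(unit)] = unit > 0
        clauses = simplify(clauses, unit)
        if clauses is None:
            return None
    if not clauses:
        return assignment
    var = heuristic(clauses)
    for literal in (var, -var):
        reduced = simplify(clauses, literal)
        if reduced is not None:
            result = dpll(reduced, heuristic, {**assignment, var: literal > 0})
            if result is not None:
                return result
    return None


def satisfies(formula, assignment):
    """Check that every clause contains a literal made true by the assignment."""
    return all(
        any(assignment.get(abs(lit)) == (lit > 0) for lit in clause)
        for clause in formula
    )


formula = [[1, -2, 3], [-1, 2, 3], [-1, -2, -3], [1, 2, -3]]
solution = dpll(formula)
```

Any function that maps the remaining clauses to a variable index can be passed as `heuristic`, so alternative selection rules can be compared without touching the solver.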

Experimental Setup

Experiments used a SWE-Agent-based agent with five frontier models: OpenAI O1-preview, Gemini 1.5 Pro, Claude-3.5-Sonnet, Llama-3.1-405b-instruct, and GPT-4o. The environment was configured with a window size of 1000 lines, a context processor that maintains a rolling window of recent history, and specialized commands for file navigation, editing, and evaluation.
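The two windowing ideas in this setup can be sketched as follows. This is an assumed design rather than the framework's code: `RollingWindowHistory` and `view_window` are hypothetical names, and `max_turns` is a free parameter because the summary does not state how many turns the rolling window keeps.

```python
from collections import deque


class RollingWindowHistory:
    """Keep the task prompt plus only the most recent action/observation turns."""

    def __init__(self, system_prompt, max_turns):
        self.system_prompt = system_prompt
        self.turns = deque(maxlen=max_turns)  # older turns are dropped automatically

    def add(self, action, observation):
        self.turns.append((action, observation))

    def build_context(self):
        lines = [self.system_prompt]
        for action, observation in self.turns:
            lines.append(f"ACTION: {action}")
            lines.append(f"OBSERVATION: {observation}")
        return "\n".join(lines)


def view_window(file_lines, start, window_size=1000):
    """Return up to window_size lines of a file, beginning at 0-based index start."""
    start = max(0, min(start, max(len(file_lines) - 1, 0)))
    return file_lines[start:start + window_size]


history = RollingWindowHistory("Improve the baseline.", max_turns=2)
history.add("ls", "train.py")
history.add("cat train.py", "print('baseline')")
history.add("python train.py", "baseline")
context = history.build_context()

source_lines = [f"line {i}" for i in range(2500)]
first_page = view_window(source_lines, 0)
last_page = view_window(source_lines, 2000)
```

Bounding the history keeps prompt length roughly constant over long episodes, at the cost of the agent forgetting early observations unless it records them elsewhere.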

Evaluation Metrics

The authors use performance profile curves and Area Under the Performance profile (AUP) scores to compare agent performance across tasks. The AUP score is calculated as:

$\text{AUP}_m = \int_{1}^{\tau_\text{max}} \rho_{m}(\tau) \, d\tau$

Where:

- $\text{AUP}_m$ is the area under the performance profile for method $m$.

- $\tau$ is a threshold on the distance between the method and the best-scoring method on each task.

- $\tau_\text{max}$ is the minimum $\tau$ for which $\rho_m(\tau) = 1$ for all methods $m$.

- $\rho_m(\tau)$ is the performance profile curve for method $m$.
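A small worked example may help. The sketch below assumes the standard ratio-based performance profile: scores are positive with lower being better, the distance for a task is the ratio of a method's score to the best score on that task, and $\rho_m(\tau)$ is the fraction of tasks whose ratio is at most $\tau$. The paper's exact distance definition is not given in this summary, so treat these choices and the function names as assumptions.

```python
def performance_ratios(scores):
    """scores: {method: [score per task]}, positive values, lower is better."""
    methods = list(scores)
    num_tasks = len(scores[methods[0]])
    best = [min(scores[m][t] for m in methods) for t in range(num_tasks)]
    return {m: [scores[m][t] / best[t] for t in range(num_tasks)] for m in methods}


def performance_profile(ratios, tau):
    """rho_m(tau): fraction of tasks whose ratio to the best method is at most tau."""
    return sum(r <= tau for r in ratios) / len(ratios)


def aup_scores(scores):
    """Area under each method's performance profile between 1 and tau_max."""
    ratios = performance_ratios(scores)
    # tau_max is the smallest tau at which every profile has reached 1.
    tau_max = max(r for rs in ratios.values() for r in rs)
    # rho is a step function, so the integral is exact:
    # each task contributes (tau_max - ratio) / num_tasks.
    return {m: sum(tau_max - r for r in rs) / len(rs) for m, rs in ratios.items()}


scores = {"A": [1.0, 2.0], "B": [2.0, 2.0]}
ratios = performance_ratios(scores)
aup = aup_scores(scores)
```

Here method A matches the best score on both tasks, so its profile equals 1 over the whole interval from 1 to 2, while method B is twice as slow on the first task and accumulates only half the area.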

Additional metrics include Best Submission and Best Attempt scores across four independent runs.

Results and Analysis

The results indicate that OpenAI's O1-preview generally achieves the best aggregate performance across tasks, followed by Gemini 1.5 Pro and Claude-3.5-Sonnet. Cost analysis reveals that Gemini 1.5 Pro offers a better balance between performance and computational cost. Action analysis shows that agents spend much of their time in iterative development cycles of editing and viewing files. GPT-4o takes the fewest actions overall, suggesting that it either errors out or submits too early, before reaching an optimal solution. Among termination errors, evaluation errors were the most frequent, usually caused by missing or incorrectly formatted submission artifacts.

Discussion and Limitations

The authors highlight opportunities and challenges in using LLMs for scientific workflows. Scaling the evaluation framework to accommodate larger datasets and more complex tasks is essential. Additionally, there is a need to address scientific novelty and promote data openness to drive scientific progress.

Ethical Considerations

The authors acknowledge that AI agents proficient in tackling open research challenges could catalyze scientific advancement, while also recognizing that this progress must be carefully controlled and responsibly deployed. They also point out that agents able to improve their own training code could advance the capabilities of cutting-edge models at a pace that outstrips human researchers.

The paper concludes by emphasizing the need for improvements in long-context reasoning, better agent architectures, and richer evaluation methodologies to fully harness LLMs’ potential for scientific discovery.